Robots are not ready for the real world. It’s still an achievement for autonomous robots to merely survive in the real world, which is a long way from any kind of useful generalized autonomy. Under some fairly specific constraints, autonomous robots are starting to find a few valuable niches in semistructured environments, like offices and hospitals and warehouses. But when it comes to the unstructured nature of disaster areas or human interaction, or really any situation that requires innovation and creativity, autonomous robots are often at a loss.

For the foreseeable future, this means that humans are still necessary. It doesn’t mean that humans must be physically present, however, just that a human is in the loop somewhere. And this creates an opportunity.

In 2018, the XPrize Foundation announced a competition (sponsored by the Japanese airline ANA) to create “an avatar system that can transport human presence to a remote location in real time,” with the goal of developing robotic systems that could be used by humans to interact with the world anywhere with a decent Internet connection. The final event took place last November in Long Beach, Calif., where 17 teams from around the world competed for US $8 million in prize money.

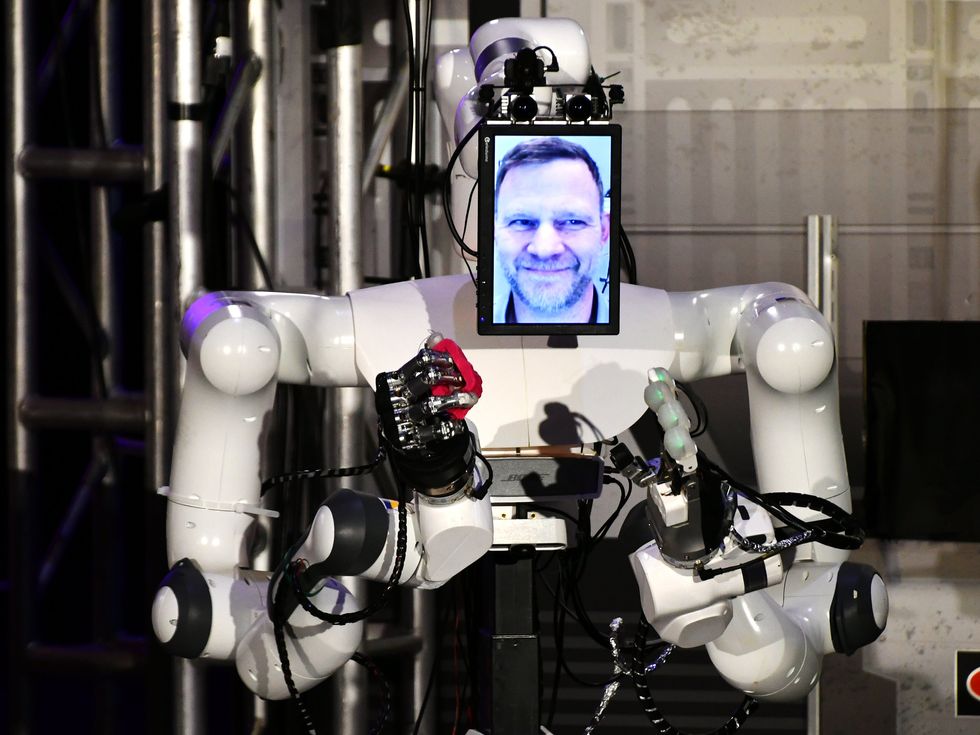

While avatar systems are all able to move and interact with their environment, the Avatar XPrize competition showcased a variety of different hardware and software approaches to creating the most effective system. XPrize Foundation

The competition showcased the power of humans paired with robotic systems, transporting our experience and adaptability to a remote location. While the robots and interfaces were very much research projects rather than systems ready for real-world use, the Avatar XPrize provided the inspiration (as well as the structure and funding) to help some of the world’s best roboticists push the limits of what’s possible through telepresence.

A robotic avatar

A robotic avatar system is similar to virtual reality, in that both allow a person located in one place to experience and interact with a different place using technology as an interface. Like VR, an effective robotic avatar enables the user to see, hear, touch, move, and communicate in such a way that they feel like they’re actually somewhere else. But where VR puts a human into a virtual environment, a robotic avatar brings a human into a physical environment, which could be in the next room or thousands of kilometers away.

ANA Avatar XPRIZE Finals: Winning team NimbRo Day 2 Test Run (youtu.be)

The XPrize Foundation hopes that avatar robots could one day be used for more practical purposes: providing care to anyone instantly, regardless of distance; disaster relief in areas where it’s too dangerous for human rescuers to go; and performing critical repairs, as well as maintenance and other hard-to-come-by services.

“The available methods by which we can physically transport ourselves from one place to another are not scaling rapidly enough,” says David Locke, the executive director of Avatar XPrize. “A disruption in this space is long overdue. Our aim is to bypass the barriers of distance and time by introducing a new means of physical connection, allowing anyone in the world to physically experience another location and provide on-the-ground assistance where and when it is needed.”

Global competition

In the Long Beach convention center, XPrize did its best to create an atmosphere that was part rock concert, part sporting event, and part robotics research conference and expo. The course was set up in an arena with stadium seating (open to the public) and was extensively decorated and dramatically lit. Live commentary accompanied each competitor’s run. Between runs, teams worked on their avatar systems in a convention hall, where they could interact with each other as well as with curious onlookers. The 17 teams hailed from France, Germany, Italy, Japan, Mexico, Singapore, South Korea, the Netherlands, the United Kingdom, and the United States. With each team preparing for several runs over three days, the atmosphere was by turns frantic and focused as team members moved around the venue and worked to repair or improve their robots. Major academic research labs set up next to small robotics startups, with each team hoping their unique approach would triumph.

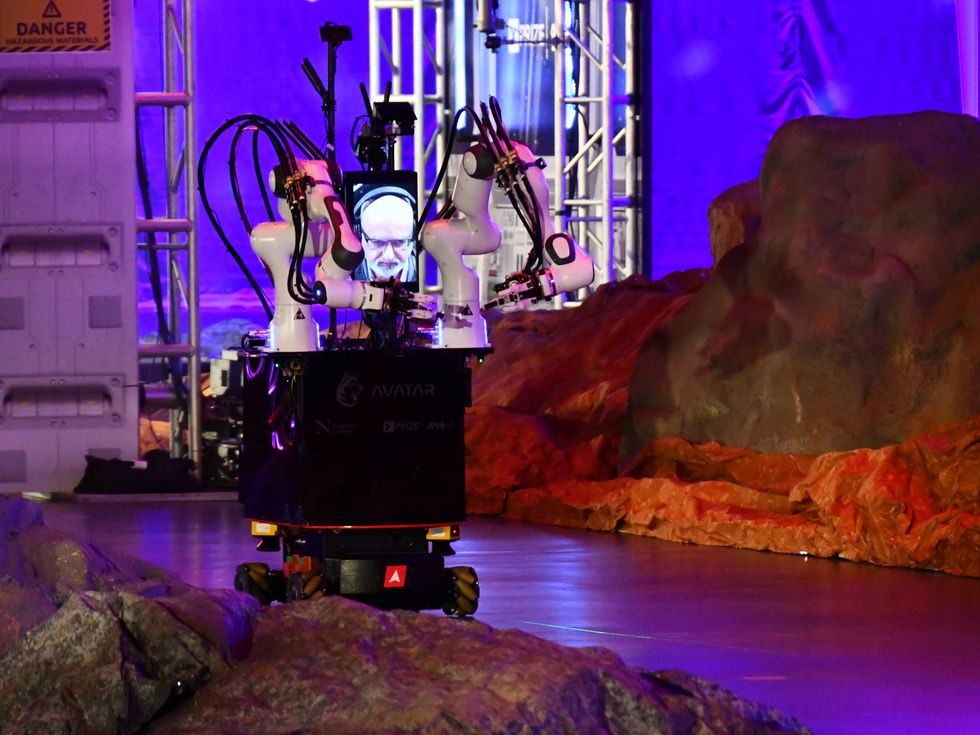

The Avatar XPrize course was designed to look like a science station on an alien planet, and the avatar systems had to complete tasks that included using tools and identifying rock samples. XPrize Foundation

The competition course included a series of tasks that each robot had to perform, based around a science mission on the surface of an alien planet. Completing the course involved communicating with a human mission commander, flipping an electrical switch, moving through an obstacle course, identifying a container by weight and manipulating it, using a power drill, and finally, using touch to categorize a rock sample. Teams were ranked by the amount of time their avatar system took to successfully finish all tasks.

There are two fundamental aspects to an avatar system. The first is the robotic mobile manipulator that the human operator controls. The second is the interface that allows the operator to provide that control, and this is arguably the more difficult part of the system. In previous robotics competitions, like the DARPA Robotics Challenge and the DARPA Subterranean Challenge, the interface was generally based around a traditional computer (or multiple computers) with a keyboard and mouse, and the highly specialized job of operator required an immense amount of training and experience. This approach is not accessible or scalable, however. The competition in Long Beach thus featured avatar systems that were essentially operator-agnostic, so that anyone could effectively use them.

XPrize judge Justin Manley celebrates with NimbRo’s avatar robot after completing the course. Evan Ackerman

“Ultimately, the general public will be the end user,” explains Locke. “This competition forced teams to invest time into researching and improving the operator-experience component of the technology. They had to open their technology and labs to general users who could operate and provide feedback on the experience, and the teams who scored highest also had the most intuitive and user-friendly operating interfaces.”

During the competition, team members weren’t allowed to operate their own robots. Instead, a judge was assigned to each team, and the team had 45 minutes to train the judge on the robot and interface. The judges included experts in robotics, virtual reality, human-computer interaction, and neuroscience, but none of them had previous experience as an avatar operator.

Northeastern team member David Nguyen watches XPrize judge Peggy Wu operate the avatar system during a competition run. XPrize Foundation

Once the training was complete, the judge used the team’s interface to operate the robot through the course, while the team could do nothing but sit and watch. Two team members were allowed to remain with the judge in case of technical problems, and a live stream of the operator room captured the stress and helplessness that teams were under: After years of work and with millions of dollars at stake, it was up to a stranger they’d met an hour before to pilot their system to victory. It didn’t always go well, and occasionally it went very badly, as when a bipedal robot collided with the edge of a doorway on the course during a competition run and crashed to the ground, suffering damage that was ultimately unfixable.

Hardware and humans

The diversity of the teams was reflected in the diversity of their avatar systems. The competition imposed some basic design requirements for the robot, including mobility, manipulation, and a communication interface, but otherwise it was up to each team to design and implement their own hardware and software. Most teams favored a wheeled base with two robotic arms and a head consisting of a screen for displaying the operator’s face. A few daring teams brought bipedal humanoid robots. Stereo cameras were commonly used to provide visual and depth information to the operator, and some teams included additional sensors to convey other kinds of information about the remote environment.

For example, in the final competition task, the operator needed the equivalent of a sense of touch in order to differentiate a rough rock from a smooth one. While touch sensors for robots are common, translating the data that they collect into something readable by humans is not straightforward. Some teams opted for highly complex (and expensive) microfluidic gloves that transmit touch sensations from the fingertips of the robot to the fingertips of the operator. Other teams used small, finger-mounted vibrating motors to translate roughness into haptic feedback that the operator could feel. Another approach was to mount microphones on the robot’s fingers. As its fingers moved over different surfaces, rough surfaces sounded louder to the operator while smooth surfaces sounded softer.
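As a rough illustration of the vibrating-motor idea, the sketch below turns one window of fingertip sensor samples into a motor duty cycle. Everything specific here is an assumption made for illustration: the function names, the use of RMS amplitude as a stand-in for roughness, and the `floor` and `ceiling` calibration values. The article does not describe how any team actually implemented this mapping.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of one window of sensor samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def roughness_to_duty_cycle(samples, floor=0.02, ceiling=0.5):
    """Map signal energy to a vibration-motor duty cycle in [0.0, 1.0].

    floor and ceiling are hypothetical calibration values: an RMS level at or
    below floor produces no vibration, and a level at or above ceiling
    produces full vibration. Levels in between are scaled linearly.
    """
    level = (rms(samples) - floor) / (ceiling - floor)
    return max(0.0, min(1.0, level))


# Made-up sample windows: a quiet signal (smooth rock) and a noisy one (rough rock).
smooth_rock = [0.01, -0.01, 0.02, -0.02]
rough_rock = [0.30, -0.40, 0.35, -0.25]

print("smooth:", roughness_to_duty_cycle(smooth_rock))
print("rough: ", roughness_to_duty_cycle(rough_rock))
```

The same loudness measure would apply to the microphone approach, where the signal is simply played to the operator as audio instead of being converted to vibration.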

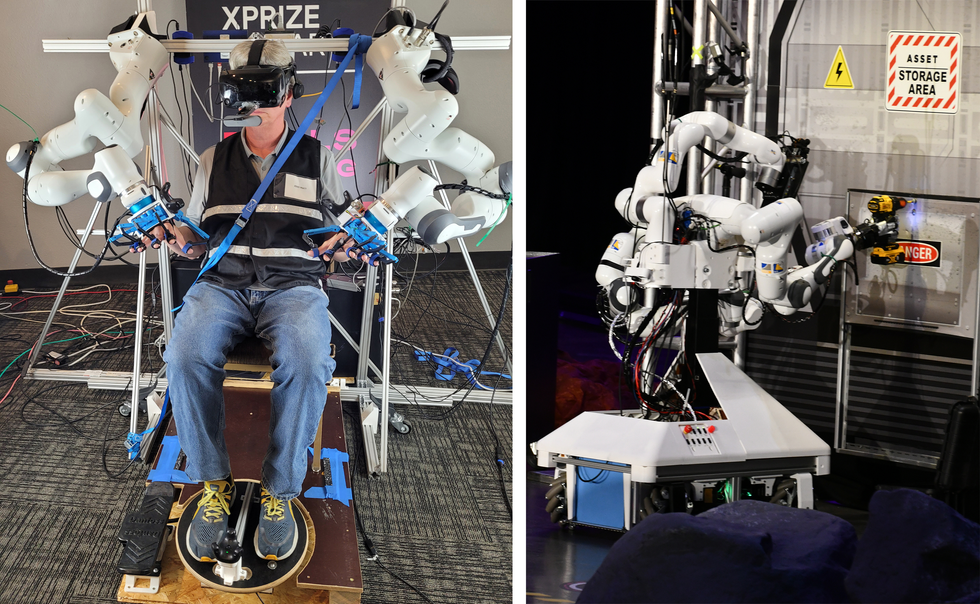

Many teams, including i-Botics [left], relied on commercial virtual-reality headsets as part of their interfaces. Avatar interfaces were made as immersive as possible to help operators control their robots effectively. Left: Evan Ackerman; Right: XPrize Foundation

In addition to perceiving the remote environment, the operator had to efficiently and effectively control the robot. A basic control interface might be a mouse and keyboard, or a game controller. But with many degrees of freedom to control, limited operator training time, and a competition judged on speed, teams had to get creative. Some teams used motion-detecting virtual-reality systems to transfer the motion of the operator to the avatar robot. Other teams favored a physical interface, strapping the operator into hardware (almost like a robotic exoskeleton) that could read their motions and then actuate the limbs of the avatar robot to match, while simultaneously providing force feedback. With the operator’s arms and hands busy with manipulation, the robot’s movement across the floor was typically controlled with foot pedals.

Northeastern’s robot moves through the course. Evan Ackerman

Another challenge of the XPrize competition was how to use the avatar robot to communicate with a remote human. Teams were judged on how natural such communication was, which precluded using text-only or voice-only interfaces; instead, teams had to give their robot some kind of expressive face. This was easy enough for operator interfaces that used screens; a webcam that was pointed at the operator and streamed to a display on the robot worked well.

But for interfaces that used VR headsets, where the operator’s face was partially obscured, teams had to find other solutions. Some teams used in-headset eye tracking and speech recognition to map the operator’s voice and facial movements onto an animated face. Other teams dynamically warped a real image of the user’s face to reflect their eye and mouth movements. The interaction wasn’t seamless, but it was surprisingly effective.

Human form or human function?

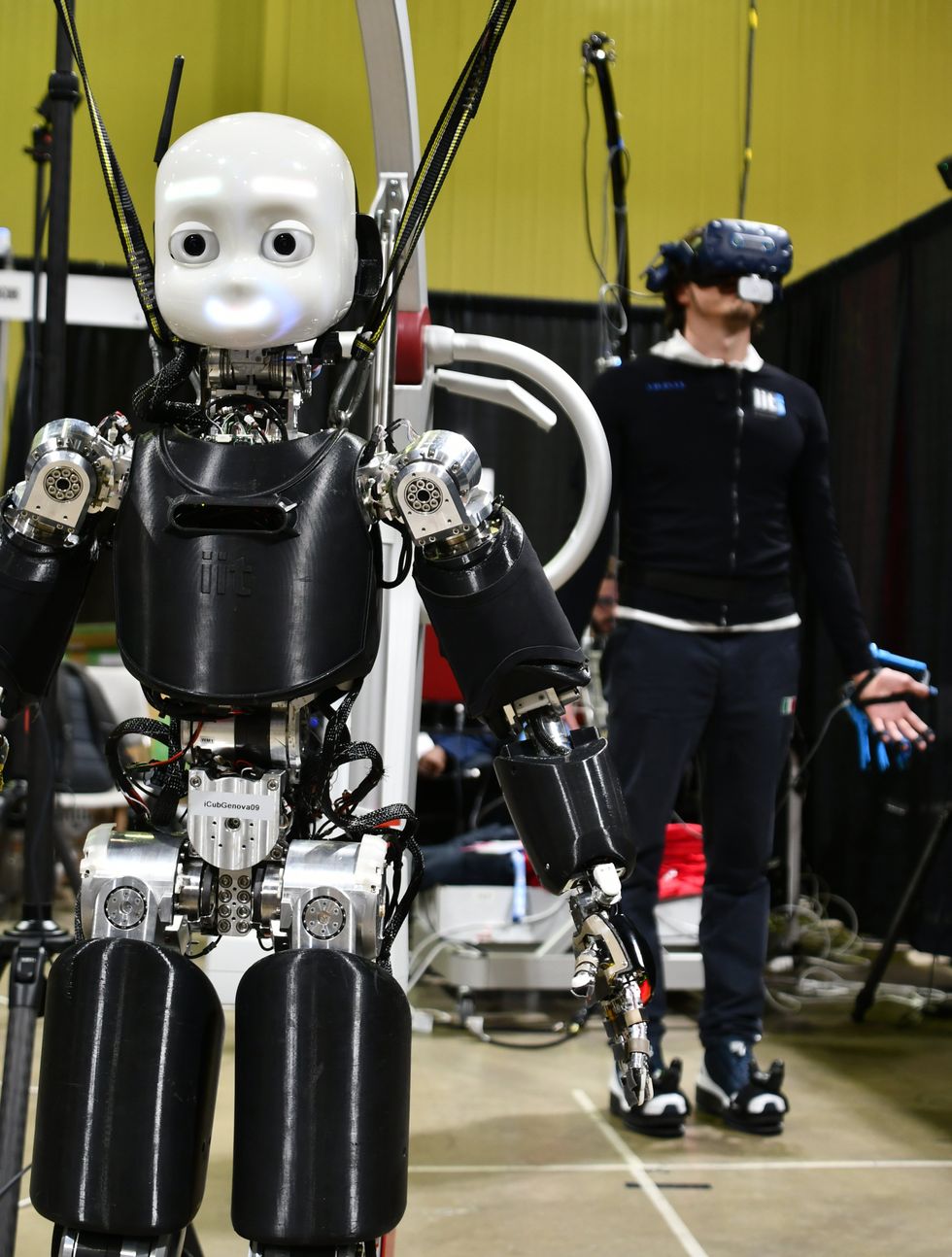

Team iCub, from the Istituto Italiano di Tecnologia, believed its bipedal avatar was the most intuitive way to transfer natural human motion to a robot. Evan Ackerman

With robotics competitions like the Avatar XPrize, there is an inherent conflict between the broader goal of generalized solutions for real-world problems and the focused objective of the competing teams, which is simply to win. Winning doesn’t necessarily lead to a solution to the problem that the competition is trying to solve. XPrize may have wanted to foster the creation of “avatar system[s] that can transport human presence to a remote location in real time,” but the winning team was the one that most efficiently completed the very specific set of competition tasks.

For example, Team iCub, from the Istituto Italiano di Tecnologia (IIT) in Genoa, Italy, believed that the best way to transport human presence to a remote location was to embody that human as closely as possible. To that end, IIT’s avatar system consisted of a small bipedal humanoid robot, the 100-centimeter-tall iCub. Getting a bipedal robot to walk reliably is a challenge, especially when that robot is under the direct control of an inexperienced human. But even under ideal conditions, there was simply no way that iCub could move as quickly as its wheeled competitors.

XPrize decided against a course that would have rewarded humanlike robots (there were no stairs on the course, for example), which prompts the question of what “human presence” actually means. If it means being able to go wherever able-bodied humans can go, then legs might be necessary. If it means accepting that robots (and some humans) have mobility limitations and consequently focusing on other aspects of the avatar experience, then perhaps legs are optional. Whatever the intent of XPrize may have been, the course itself ultimately dictated what made for a successful avatar for the purposes of the competition.

Avatar optimization

Unsurprisingly, the teams that focused on the competition and optimized their avatar systems accordingly tended to perform well. Team Northeastern won third place and $1 million using a hydrostatic force-feedback interface for the operator. The interface was based on a system of fluidic actuators first conceptualized a decade ago at Disney Research.

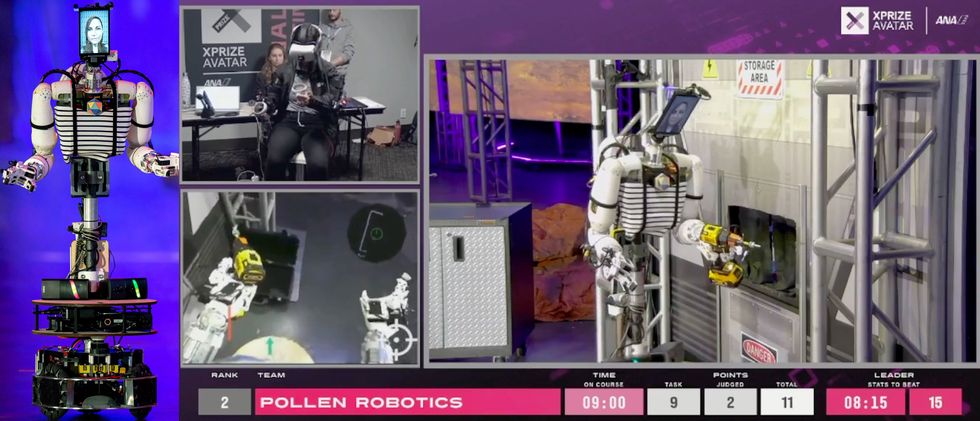

Second place went to Team Pollen Robotics, a French startup. Their robot, Reachy, is based on Pollen Robotics’ commercially available mobile manipulator, and it was likely one of the more affordable systems in the competition, costing a mere €20,000 (US $22,000). It used primarily 3D-printed components and an open-source design. Reachy was an exception to the strategy of optimization, because it’s intended to be a generalizable platform for real-world manipulation. But the team’s relatively simple approach helped them win the $2 million second-place prize.

In first place, completing the entire course in under 6 minutes with a perfect score, was Team NimbRo, from the University of Bonn, in Germany. NimbRo has a long history of robotics competitions; they participated in the DARPA Robotics Challenge in 2015 and have been involved in the international RoboCup competition since 2005. But the Avatar XPrize allowed them to focus on new ways of combining human intelligence with robot-control systems. “When I watch human intelligence operating a machine, I find that fascinating,” team lead Sven Behnke told IEEE Spectrum. “A human can see deviations from how they are expecting the machine to behave, and then can resolve those deviations with creativity.”

Team NimbRo’s system relied heavily on the human operator’s own senses and knowledge. “We try to take advantage of human cognitive capabilities as much as possible,” explains Behnke. “For example, our system doesn’t use sensors to estimate depth. It simply relies on the visual cortex of the operator, since humans have evolved to do this in tremendously efficient ways.” To that end, NimbRo’s robot had an unusually long and flexible neck that followed the motions of the operator’s head. During the competition, the robot’s head could be seen shifting from side to side as the operator used parallax to understand how far away objects were. It worked quite well, although NimbRo had to implement a special rendering technique to minimize latency between the operator’s head motions and the video feed from the robot, so that the operator didn’t get motion sickness.
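To see why a side-to-side head motion conveys distance, consider the underlying geometry. The sketch below is not something NimbRo’s robot computes (by Behnke’s account, the system leaves depth perception to the operator’s visual cortex); it only illustrates, under simplifying assumptions, the cue the operator is exploiting. It assumes the object starts straight ahead, the head moves sideways at a right angle to the line of sight, and the apparent shift is measured against a very distant background. The function name and the example numbers are hypothetical.

```python
import math


def distance_from_parallax(head_shift_m, angular_shift_deg):
    """Estimate the distance to an object from motion parallax.

    head_shift_m: sideways head movement in meters (the baseline).
    angular_shift_deg: how far the object's apparent direction changes,
        in degrees, as a result of that movement.

    With the object initially straight ahead, tan(angle) = baseline / distance,
    so distance = baseline / tan(angle).
    """
    if head_shift_m <= 0 or not 0 < angular_shift_deg < 90:
        raise ValueError("need a positive baseline and an angle between 0 and 90 degrees")
    return head_shift_m / math.tan(math.radians(angular_shift_deg))


# For the same 10-centimeter head movement, a nearby object appears to shift
# through a larger angle than a faraway one.
for angle in (20.0, 5.0, 1.0):
    d = distance_from_parallax(0.10, angle)
    print(f"apparent shift of {angle:4.1f} degrees -> about {d:.2f} m away")
```

Because the apparent shift shrinks as distance grows, small delays or errors in the rendered view matter most for nearby objects, which is consistent with the attention the team paid to latency.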

XPrize judge Jerry Pratt [left] operates NimbRo’s robot on the course [right]. The drill task was particularly difficult, involving lifting a heavy object and manipulating it with high precision. Left: Team NimbRo; Right: Evan Ackerman

The team also put a lot of effort into making sure that using the robot to manipulate objects was as intuitive as possible. The operator’s arms were directly attached to robotic arms, which were duplicates of the arms on the avatar robot. This meant that any arm motions made by the operator would be mirrored by the robot, yielding a very consistent experience for the operator.

The future of hybrid autonomy

The operator judge for Team NimbRo’s winning run was Jerry Pratt, who spent decades as a robotics professor at the Florida Institute for Human and Machine Cognition before joining humanoid robotics startup Figure last year. Pratt had led Team IHMC (and a Boston Dynamics Atlas robot) to a second-place finish at the DARPA Robotics Challenge Finals in 2015. “I found it incredible that you can learn how to use these systems in 60 minutes,” Pratt said of his XPrize run. “And operating them is super fun!” Pratt’s winning time of 5:50 to complete the Avatar XPrize course was not much slower than human speed.

At the 2015 DARPA Robotics Challenge finals, by contrast, the Atlas robot had to be painstakingly piloted through the course by a team of experts. It took that robot 50 minutes to complete the course, which a human could have finished in about 5 minutes. “Trying to pick up things with a joystick and mouse [during the DARPA competition] is just really slow,” Pratt says. “Nothing beats being able to just go, ‘Oh, that’s an object, let me grab it’ with full telepresence. You just do it.”

Team Pollen’s robot [left] had a relatively simple operator interface [middle], but that may have been an asset during the competition [right]. Pollen Robotics

Both Pratt and NimbRo’s Behnke see humans as a critical component of robots operating in the unstructured environments of the real world, at least in the short term. “You need humans for high-level decision making,” says Pratt. “As soon as there’s something novel, or something goes wrong, you need human cognition in the world. And that’s why you need telepresence.”

Behnke agrees. He hopes that what his team has learned from the Avatar XPrize competition will lead to hybrid autonomy through telepresence, in which robots are autonomous most of the time but humans can use telepresence to help the robots when they get stuck. This approach is already being implemented in simpler contexts, like sidewalk delivery robots, but not yet in the kind of complex human-in-the-loop manipulation that Behnke’s system is capable of.

“Step by step, my objective is to take the human out of that loop so that one operator can control maybe 10 robots, which would be autonomous most of the time,” Behnke says. “And as these 10 systems operate, we get more data from which we can learn, and then maybe one operator will be responsible for 100 robots, and then 1,000 robots. We’re using telepresence to learn how to do autonomy better.”
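The scaling Behnke describes can be put in back-of-the-envelope terms. The sketch below uses a deliberately crude model that is an assumption of this example, not something from Behnke: one operator helps one robot at a time, and requests for help never overlap. Under that model, an operator covering N robots can give each robot at most 1/N of the operator’s time. The function name is hypothetical.

```python
def max_intervention_fraction(robots_per_operator):
    """Largest share of its operating time that each robot can need human help,
    if one operator is to cover the whole fleet.

    Crude model (an assumption): the operator assists one robot at a time and
    requests never collide, so the fleet's combined demand for help must not
    exceed 100 percent of one operator's time.
    """
    if robots_per_operator < 1:
        raise ValueError("an operator must cover at least one robot")
    return 1.0 / robots_per_operator


for fleet_size in (10, 100, 1000):
    share = max_intervention_fraction(fleet_size)
    print(f"{fleet_size:>4} robots per operator: each robot may need help "
          f"at most {share:.1%} of the time")
```

In practice requests would sometimes coincide, so a real fleet would need each robot to be autonomous for an even larger share of the time than this simple bound suggests.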

The entire Avatar XPrize event is available to watch through this live stream recording on YouTube.

While the Avatar XPrize final competition was based around a space-exploration scenario, Behnke is more interested in applications in which a telepresence-mediated human touch might be even more valuable, such as personal assistance. Behnke’s group has already demonstrated how their avatar system could be used to help someone with an injured arm measure their blood pressure and put on a coat. These sound like simple tasks, but they involve exactly the kind of human interaction and creative manipulation that is exceptionally difficult for a robot on its own. Immersive telepresence makes these tasks almost trivial, and accessible to just about any human with a little training, which is what the Avatar XPrize was trying to achieve.

Still, it’s hard to know how scalable these technologies are. At the moment, avatar systems are fragile and expensive. Historically, there has been a gap of about five to 10 years between high-profile robotics competitions and the arrival of the resulting technology (such as autonomous cars and humanoid robots) at a useful place outside the lab. It’s possible that autonomy will advance quickly enough that the impact of avatar robots will be significantly reduced for common tasks in structured environments. But it’s hard to imagine that autonomous systems will ever achieve human levels of intuition or creativity. That is, there will continue to be a need for avatars for the foreseeable future. And if these teams can leverage the lessons they’ve learned over the four years of the Avatar XPrize competition to pull this technology out of the research phase, their systems could bypass the constraints of autonomy through human cleverness, bringing us useful robots that are helpful in our daily lives.
