Ars Technica

Things are moving at lightning speed in AI Land. On Friday, a software developer named Georgi Gerganov created a tool called “llama.cpp” that can run Meta’s new GPT-3-class AI large language model, LLaMA, locally on a Mac laptop. Soon thereafter, people worked out how to run LLaMA on Windows as well. Then someone showed it running on a Pixel 6 phone, and next came a Raspberry Pi (albeit running very slowly).

If this keeps up, we may be looking at a pocket-sized ChatGPT competitor before we know it.

But let’s back up a minute, because we’re not quite there yet. (At least not today, as in literally today, March 13, 2023.) What will arrive next week, no one knows.

Since ChatGPT launched, some people have been frustrated by the AI model’s built-in limits that prevent it from discussing topics that OpenAI has deemed sensitive. Thus began the dream, in some quarters, of an open source large language model (LLM) that anyone could run locally without censorship and without paying API fees to OpenAI.

Open source solutions do exist (such as GPT-J), but they require a lot of GPU RAM and storage space. Other open source alternatives could not boast GPT-3-level performance on readily available consumer-level hardware.

Enter LLaMA, an LLM available in parameter sizes ranging from 7B to 65B (that’s “B” as in “billion parameters,” which are floating point numbers stored in matrices that represent what the model “knows”). LLaMA made a heady claim: that its smaller-sized models could match OpenAI’s GPT-3, the foundational model that powers ChatGPT, in the quality and speed of its output. There was just one problem: Meta released the LLaMA code open source, but it held back the “weights” (the trained “knowledge” stored in a neural network) for qualified researchers only.

Flying at the speed of LLaMA

Meta’s restrictions on LLaMA didn’t last long, because on March 2, someone leaked the LLaMA weights on BitTorrent. Since then, there’s been an explosion of development surrounding LLaMA. Independent AI researcher Simon Willison has compared this situation to the release of Stable Diffusion, an open source image synthesis model that launched last August. Here’s what he wrote in a post on his blog:

It feels to me like that Stable Diffusion moment back in August kick-started the entire new wave of interest in generative AI, which was then pushed into over-drive by the release of ChatGPT at the end of November.

That Stable Diffusion moment is happening again right now, for large language models, the technology behind ChatGPT itself. This morning I ran a GPT-3 class language model on my own personal laptop for the first time!

AI stuff was weird already. It’s about to get a whole lot weirder.

Typically, running GPT-3 requires several datacenter-class A100 GPUs (also, the weights for GPT-3 are not public), but LLaMA made waves because it could run on a single beefy consumer GPU. And now, with optimizations that reduce the model size using a technique called quantization, LLaMA can run on an M1 Mac or a lesser Nvidia consumer GPU.
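To get a feel for why quantization matters here, a back-of-the-envelope estimate helps: weight storage is roughly the parameter count multiplied by the bits used per parameter. The sketch below is only illustrative. The 16-bit baseline is an assumption (the article does not state the precision of the original weights), the function name `weight_gigabytes` is made up for this example, and the figures ignore runtime memory such as activations and any per-block scaling overhead.

```python
# Rough weight-storage estimate: parameters x bits per parameter.
# Assumption: a 16-bit baseline; ignores activations and format overhead.

def weight_gigabytes(n_params: float, bits_per_param: int) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

# The smallest and largest LLaMA sizes mentioned in the article.
for name, n_params in [("7B", 7e9), ("65B", 65e9)]:
    for bits in (16, 4):
        size = weight_gigabytes(n_params, bits)
        print(f"LLaMA {name} at {bits}-bit: ~{size:.1f} GB")
```

Under these assumptions, the 7B model drops from about 14 GB of weights at 16 bits to about 3.5 GB at 4 bits, which is the difference between needing a large GPU and fitting in the memory of an ordinary laptop.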

Things are moving so quickly that it’s sometimes difficult to keep up with the latest developments. (Regarding AI’s rate of progress, a fellow AI reporter told Ars, “It’s like those videos of dogs where you upend a crate of tennis balls on them. [They] don’t know where to chase first and get lost in the confusion.”)

For example, here’s a list of notable LLaMA-related events based on a timeline Willison laid out in a Hacker News comment:

- February 24, 2023: Meta AI announces LLaMA.

- March 2, 2023: Someone leaks the LLaMA models via BitTorrent.

- March 10, 2023: Georgi Gerganov creates llama.cpp, which can run on an M1 Mac.

- March 11, 2023: Artem Andreenko runs LLaMA 7B (slowly) on a Raspberry Pi 4, 4GB RAM, 10 sec/token.

- March 12, 2023: LLaMA 7B running on NPX, a node.js execution tool.

- March 13, 2023: Someone gets llama.cpp running on a Pixel 6 phone, also very slowly.

- March 13, 2023: Stanford releases Alpaca 7B, an instruction-tuned version of LLaMA 7B that behaves similarly to OpenAI’s “text-davinci-003” but runs on much less powerful hardware.

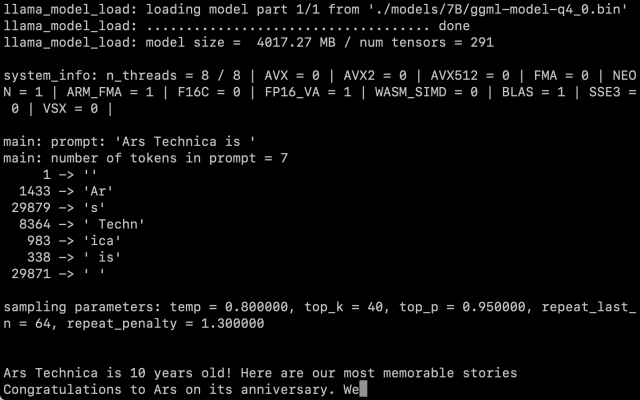

After obtaining the LLaMA weights ourselves, we followed Willison’s instructions and got the 7B parameter version running on an M1 MacBook Air, and it runs at a reasonable rate of speed. You call it as a script on the command line with a prompt, and LLaMA does its best to complete it in a reasonable way.

Benj Edwards / Ars Technica

There’s still the question of how much the quantization affects the quality of the output. In our tests, LLaMA 7B trimmed down to 4-bit quantization was very impressive for running on a MacBook Air, but still not on par with what you might expect from ChatGPT. It’s entirely possible that better prompting techniques might generate better results.
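As a rough illustration of where that quality loss comes from, the sketch below rounds a small block of numbers to 4-bit integer codes and then restores them. It is a generic symmetric quantization scheme written for this example, not llama.cpp’s actual storage format; the function names, the block size of 32, and the randomly generated “weights” are all assumptions made for illustration.

```python
import random

def quantize_block(values, bits=4):
    """Symmetric quantization of one block of floats to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit codes
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0  # one shared scale per block
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, codes

def dequantize_block(scale, codes):
    """Map integer codes back to approximate floating point values."""
    return [scale * c for c in codes]

random.seed(0)
block = [random.gauss(0.0, 0.02) for _ in range(32)]  # made-up stand-in weights
scale, codes = quantize_block(block)
restored = dequantize_block(scale, codes)

# Rounding to the nearest code bounds the per-value error by half the scale.
max_err = max(abs(a - b) for a, b in zip(block, restored))
print(f"scale={scale:.6f}  distinct codes={len(set(codes))}  max error={max_err:.6f}")
```

Each restored value can differ from the original by up to half the block’s scale, so every weight in the model carries a small rounding error. How much that accumulated error degrades the output is the open question raised above.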

Also, optimizations and fine-tunings come quickly when everyone has their hands on the code and the weights, even though LLaMA is still saddled with some fairly restrictive terms of use. The release of Alpaca today by Stanford proves that fine-tuning (additional training with a specific goal in mind) can improve performance, and it’s still early days after LLaMA’s release.

As of this writing, running LLaMA on a Mac remains a fairly technical exercise. You have to install Python and Xcode and be familiar with working on the command line. Willison has good step-by-step instructions for anyone who would like to attempt it. But that may soon change as developers continue to code away.

As for the implications of having this tech out in the wild, no one knows yet. While some worry about AI’s impact as a tool for spam and misinformation, Willison says, “It’s not going to be un-invented, so I think our priority should be figuring out the most constructive possible ways to use it.”

Right now, our only guarantee is that things will change rapidly.