
Google Vizier is the de-facto system for blackbox optimization over objective functions and hyperparameters across Google, having serviced some of Google’s largest research efforts and optimized a wide range of products (e.g., Search, Ads, YouTube). For research, it has not only reduced language model latency for users, designed computer architectures, accelerated hardware, assisted protein discovery, and enhanced robotics, but also provided a reliable backend interface for users to search for neural architectures and evolve reinforcement learning algorithms. To operate at the scale of optimizing thousands of users’ critical systems and tuning millions of machine learning models, Google Vizier solved key design challenges in supporting diverse use cases and workflows, while remaining strongly fault-tolerant.

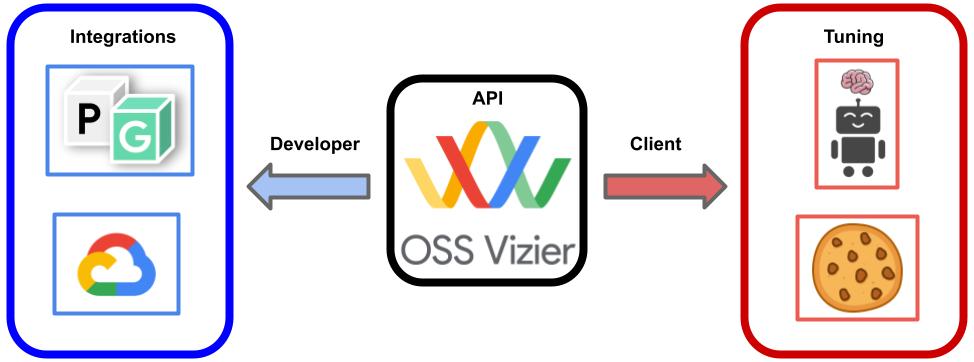

Today we’re excited to announce Open Source (OSS) Vizier (with an accompanying systems whitepaper published at the AutoML Conference 2022), a standalone Python package based on Google Vizier. OSS Vizier is designed for two main purposes: (1) managing and optimizing experiments at scale in a reliable and distributed manner for users, and (2) developing and benchmarking algorithms for automated machine learning (AutoML) researchers.

System design

OSS Vizier works by having a server provide services, namely the optimization of blackbox objectives, or functions, from multiple clients. In the main workflow, a client sends a remote procedure call (RPC) and asks for a suggestion (i.e., a proposed input for the client’s blackbox function), from which the service begins to spawn a worker to launch an algorithm (i.e., a Pythia policy) to compute the following suggestions. The suggestions are then evaluated by clients to form their corresponding objective values and measurements, which are sent back to the service. This pipeline is repeated multiple times to form an entire tuning trajectory.
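The suggest, evaluate, and report cycle described above can be sketched in a few lines of plain Python. This is a toy, in-process illustration only: `ToyService`, `RandomSearchPolicy`, `Trial`, and `blackbox` are hypothetical names invented for this sketch, not the OSS Vizier API, and uniform random search stands in for a real Pythia policy. No RPCs or workers are involved.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Trial:
    """One suggestion plus, once evaluated, its measured objective value."""
    trial_id: int
    parameters: Dict[str, float]
    objective: Optional[float] = None


class RandomSearchPolicy:
    """Hypothetical stand-in for a policy: samples uniformly within bounds."""

    def __init__(self, bounds: Dict[str, tuple], seed: int = 0):
        self._bounds = bounds
        self._rng = random.Random(seed)

    def suggest(self, history: List[Trial]) -> Dict[str, float]:
        # A real policy would condition on `history`; random search ignores it.
        return {name: self._rng.uniform(lo, hi)
                for name, (lo, hi) in self._bounds.items()}


class ToyService:
    """Hypothetical in-process 'server' that owns the policy and trial history."""

    def __init__(self, policy: RandomSearchPolicy):
        self._policy = policy
        self.trials: List[Trial] = []

    def suggest(self) -> Trial:
        trial = Trial(trial_id=len(self.trials) + 1,
                      parameters=self._policy.suggest(self.trials))
        self.trials.append(trial)
        return trial

    def complete(self, trial_id: int, objective: float) -> None:
        self.trials[trial_id - 1].objective = objective


def blackbox(parameters: Dict[str, float]) -> float:
    """Client-side objective (assumed for illustration); minimum at x=1, y=-2."""
    return (parameters["x"] - 1.0) ** 2 + (parameters["y"] + 2.0) ** 2


def run_study(num_trials: int = 20, seed: int = 0) -> List[Trial]:
    bounds = {"x": (-5.0, 5.0), "y": (-5.0, 5.0)}
    service = ToyService(RandomSearchPolicy(bounds, seed=seed))
    for _ in range(num_trials):
        trial = service.suggest()                  # client asks for a suggestion
        value = blackbox(trial.parameters)         # client evaluates it
        service.complete(trial.trial_id, value)    # client reports the result
    return service.trials
```

Calling `run_study()` returns the full tuning trajectory; `min(t.objective for t in run_study())` gives the best value found. In the real system the two `service` calls would be RPCs, so the client and the algorithm can live in different processes or languages.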

The use of the ubiquitous gRPC library, which is compatible with most programming languages, such as C++ and Rust, allows maximum flexibility and customization, where the user can also write their own custom clients and even algorithms outside of the default Python interface. Since the entire process is saved to an SQL datastore, a smooth recovery is ensured after a crash, and usage patterns can be saved as valuable datasets for research into meta-learning and multitask transfer-learning methods such as the OptFormer and HyperBO.
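To make the recovery argument concrete, here is a minimal sketch of persisting trials to an SQL datastore, using Python's built-in `sqlite3`. The table layout and the helper names (`open_store`, `add_suggestion`, `complete_trial`, `pending_trials`, `demo_recovery`) are assumptions made for illustration; they do not reflect OSS Vizier's actual schema.

```python
import json
import os
import sqlite3
import tempfile


def open_store(path):
    """Open (or create) the hypothetical trial store at `path`."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS trials ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " parameters TEXT NOT NULL,"   # JSON-encoded suggestion
        " objective REAL)"             # NULL until the client reports back
    )
    conn.commit()
    return conn


def add_suggestion(conn, parameters):
    """Record a suggestion before handing it to a client; returns its id."""
    cur = conn.execute("INSERT INTO trials (parameters) VALUES (?)",
                       (json.dumps(parameters),))
    conn.commit()
    return cur.lastrowid


def complete_trial(conn, trial_id, objective):
    """Attach the reported objective value to a stored trial."""
    conn.execute("UPDATE trials SET objective = ? WHERE id = ?",
                 (objective, trial_id))
    conn.commit()


def pending_trials(conn):
    """Trials that were suggested but never completed."""
    rows = conn.execute(
        "SELECT id, parameters FROM trials"
        " WHERE objective IS NULL ORDER BY id").fetchall()
    return [(trial_id, json.loads(params)) for trial_id, params in rows]


def demo_recovery():
    """Write two trials, 'crash', reopen the file, and list unfinished work."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "study.db")
        conn = open_store(path)
        first = add_suggestion(conn, {"learning_rate": 0.1})
        add_suggestion(conn, {"learning_rate": 0.01})
        complete_trial(conn, first, objective=0.42)
        conn.close()  # simulate the server process going away

        conn = open_store(path)  # a fresh process reopens the same file
        try:
            return pending_trials(conn)
        finally:
            conn.close()
```

Because every suggestion is committed before it is handed out, a restarted server can see exactly which trials are still outstanding. The same table doubles as a log of past studies, which is the kind of data the meta-learning work mentioned above would consume.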

Usage

Because OSS Vizier is built as a service, in which clients can send requests to the server at any point in time, it is designed for a broad range of scenarios: the budget of evaluations, or trials, can range from tens to millions, and the evaluation latency can range from seconds to weeks. Evaluations can be done asynchronously (e.g., tuning an ML model) or in synchronous batches (e.g., wet lab settings involving multiple simultaneous experiments). Furthermore, evaluations may fail due to transient errors and be retried, or may fail due to persistent errors (e.g., the evaluation is impossible) and should not be retried.
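The distinction between transient and persistent failures can be sketched on the client side as follows. The exception classes, the `evaluate_with_retries` helper, and the status strings are hypothetical and chosen for this illustration; they are not OSS Vizier identifiers.

```python
class TransientError(Exception):
    """Hypothetical: the evaluation failed for a reason that may not recur."""


class InfeasibleError(Exception):
    """Hypothetical: the evaluation can never succeed for these parameters."""


def evaluate_with_retries(evaluate, parameters, max_attempts=3):
    """Retry transient failures; give up immediately on persistent ones."""
    for attempt in range(1, max_attempts + 1):
        try:
            objective = evaluate(parameters)
        except TransientError:
            continue  # e.g., a preempted worker: worth trying again
        except InfeasibleError as err:
            # Retrying cannot help, so report the trial as infeasible.
            return {"status": "infeasible", "reason": str(err),
                    "attempts": attempt}
        return {"status": "completed", "objective": objective,
                "attempts": attempt}
    return {"status": "abandoned",
            "reason": "transient errors exhausted retries",
            "attempts": max_attempts}


def make_flaky(fail_times):
    """Build an evaluator that raises TransientError on its first calls."""
    calls = {"n": 0}

    def evaluate(parameters):
        calls["n"] += 1
        if calls["n"] <= fail_times:
            raise TransientError("worker preempted")
        return parameters["x"] ** 2

    return evaluate


def needs_positive(parameters):
    """An evaluator that is impossible to run for non-positive x."""
    if parameters["x"] <= 0:
        raise InfeasibleError("x must be positive")
    return parameters["x"] ** 0.5
```

For example, `evaluate_with_retries(make_flaky(1), {"x": 3.0})` succeeds on the second attempt, while `evaluate_with_retries(needs_positive, {"x": -1.0})` is reported as infeasible after a single attempt. Reporting infeasibility back to the service, instead of silently dropping the trial, lets the algorithm avoid proposing similar points again.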

This broadly supports a variety of applications, which include tuning the hyperparameters of deep learning models or optimizing non-computational objectives, which can be, e.g., physical, chemical, biological, mechanical, or even human-evaluated, such as cookie recipes.

Integrations, algorithms, and benchmarks

As Google Vizier is heavily integrated with many of Google’s internal frameworks and products, OSS Vizier will naturally be heavily integrated with many of Google’s open source and external frameworks. Most prominently, OSS Vizier will serve as a distributed backend for PyGlove to allow large-scale evolutionary searches over combinatorial primitives such as neural architectures and reinforcement learning algorithms. Furthermore, OSS Vizier shares the same client-based API with Vertex Vizier, allowing users to quickly swap between open-source and production-quality services.

For AutoML researchers, OSS Vizier is also equipped with a useful collection of algorithms and benchmarks (i.e., objective functions) unified under common APIs for assessing the strengths and weaknesses of proposed methods. Most notably, via TensorFlow Probability, researchers can now use the JAX-based Gaussian Process Bandit algorithm, based on the default algorithm in Google Vizier that tunes internal users’ objectives.
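The value of putting algorithms and benchmarks behind a common interface can be shown with a small, self-contained sketch. Everything here is an assumption made for illustration: the `suggest`/`update` interface, the two toy algorithms (uniform random search and a simple local-perturbation search), and the sphere benchmark are not taken from OSS Vizier, and neither algorithm is the Gaussian Process Bandit.

```python
import random


def sphere(x):
    """Classic benchmark objective (to be minimized); optimum 0 at the origin."""
    return sum(v * v for v in x)


class RandomSearch:
    """Samples uniformly in [-5, 5]^dim and ignores feedback."""

    def __init__(self, dim, seed):
        self._dim = dim
        self._rng = random.Random(seed)

    def suggest(self):
        return [self._rng.uniform(-5.0, 5.0) for _ in range(self._dim)]

    def update(self, x, y):
        pass  # random search does not learn from results


class LocalPerturbation:
    """Perturbs the best point seen so far with Gaussian noise."""

    def __init__(self, dim, seed, step=0.5):
        self._dim = dim
        self._rng = random.Random(seed)
        self._step = step
        self._best_x = None
        self._best_y = float("inf")

    def suggest(self):
        if self._best_x is None:
            return [self._rng.uniform(-5.0, 5.0) for _ in range(self._dim)]
        # Clip each coordinate so suggestions stay inside the search box.
        return [min(5.0, max(-5.0, v + self._rng.gauss(0.0, self._step)))
                for v in self._best_x]

    def update(self, x, y):
        if y < self._best_y:
            self._best_x, self._best_y = x, y


def best_so_far_curve(algorithm, objective, num_trials):
    """Run one algorithm on one benchmark; return the best value after each trial."""
    curve, best = [], float("inf")
    for _ in range(num_trials):
        x = algorithm.suggest()
        y = objective(x)
        algorithm.update(x, y)
        best = min(best, y)
        curve.append(best)
    return curve


def compare(num_trials=50, dim=3, seed=0):
    """Evaluate both toy algorithms on the same benchmark and budget."""
    return {
        "random_search": best_so_far_curve(RandomSearch(dim, seed), sphere, num_trials),
        "local_perturbation": best_so_far_curve(LocalPerturbation(dim, seed), sphere, num_trials),
    }
```

Because both algorithms expose the same two methods, `best_so_far_curve` needs no algorithm-specific code, and adding a new method or a new objective function is a one-line change to `compare`. A real study would average such curves over many seeds and benchmarks before drawing conclusions.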

Resources and future direction

We provide links to the codebase, documentation, and systems whitepaper. We plan to allow user contributions, especially in the form of algorithms and benchmarks, and to further integrate with the open-source AutoML ecosystem. Going forward, we hope to see OSS Vizier become a core tool for expanding research and development in blackbox optimization and hyperparameter tuning.

Acknowledgements

OSS Vizier was developed by members of the Google Vizier team in collaboration with the TensorFlow Probability team: Setareh Ariafar, Lior Belenki, Emily Fertig, Daniel Golovin, Tzu-Kuo Huang, Greg Kochanski, Chansoo Lee, Sagi Perel, Adrian Reyes, Xingyou (Richard) Song, and Richard Zhang.

In addition, we thank Srinivas Vasudevan, Jacob Burnim, Brian Patton, Ben Lee, Christopher Suter, and Rif A. Saurous for further TensorFlow Probability integrations, Daiyi Peng and Yifeng Lu for PyGlove integrations, Hao Li for Vertex/Cloud integrations, Yingjie Miao for AutoRL integrations, Tom Hennigan, Varun Godbole, Pavel Sountsov, Alexey Volkov, Mihir Paradkar, Richard Belleville, Bu Su Kim, Vytenis Sakenas, Yujin Tang, Yingtao Tian, and Yutian Chen for open source and infrastructure help, and George Dahl, Aleksandra Faust, Claire Cui, and Zoubin Ghahramani for discussions.

Finally, we thank Tom Small for designing the animation for this post.