
Language and speech are how we express our inner thoughts. But neuroscientists just bypassed the need for audible speech, at least in the lab. Instead, they directly tapped into the biological machine that generates language and ideas: the brain.

Using brain scans and a hefty dose of machine learning, a team from the University of Texas at Austin developed a “language decoder” that captures the gist of what a person hears based on their brain activation patterns alone. Far from a one-trick pony, the decoder can also translate imagined speech, and even generate descriptive subtitles for silent movies using neural activity.

Here’s the kicker: the method doesn’t require surgery. Rather than relying on implanted electrodes, which eavesdrop on electrical bursts directly from neurons, the neurotechnology uses functional magnetic resonance imaging (fMRI), a completely non-invasive procedure, to generate brain maps that correspond to language.

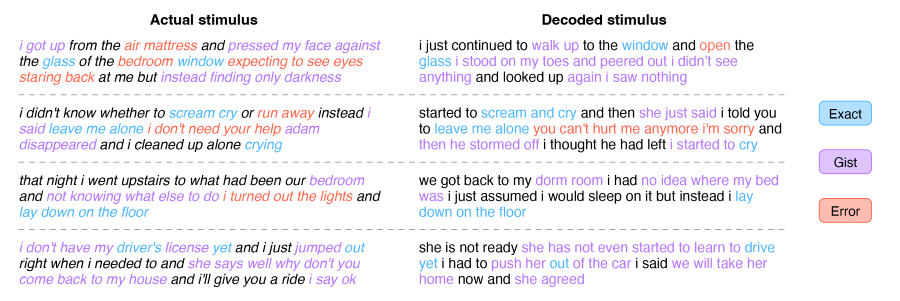

To be clear, the technology isn’t mind reading. In each case, the decoder produces paraphrases that capture the general idea of a sentence or paragraph. It doesn’t reproduce every single word. Yet that’s also the decoder’s strength.

“We think that the decoder represents something deeper than languages,” said lead study author Dr. Alexander Huth in a press briefing. “We can recover the overall idea…and see how the idea evolves, even if the exact words get lost.”

The study, published this week in Nature Neuroscience, represents a powerful first push at non-invasive brain-machine interfaces for decoding language, a notoriously difficult problem. With further development, the technology could help people who have lost the ability to speak regain their ability to communicate with the outside world.

The work also opens new avenues for learning how language is encoded in the brain, and for AI scientists to dig into the “black box” of machine learning models that process speech and language.

“It was a long time coming…we were kinda shocked that this worked as well as it does,” said Huth.

Decoding Language

Translating brain activity into speech isn’t new. One previous study used electrodes placed directly in the brains of patients with paralysis. By listening in on the neurons’ electrical chatter, the team was able to reconstruct full words from the patient.

Huth decided to take an alternative, if bold, route. Instead of relying on neurosurgery, he opted for a non-invasive approach: fMRI.

“The expectation among neuroscientists in general that you can do this kind of thing with fMRI is pretty low,” said Huth.

There are plenty of reasons. Unlike implants that tap directly into neural activity, fMRI measures changes in blood oxygen levels, known as the BOLD (blood-oxygen-level-dependent) signal. Because more active brain regions require more oxygen, BOLD responses act as a reliable proxy for neural activity. But the approach has drawbacks: the signal is sluggish compared to direct recordings of electrical bursts, and it can be noisy.
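To make that sluggishness concrete, here is a minimal Python sketch that convolves a one-second burst of simulated neural activity with a "canonical" double-gamma hemodynamic response function (HRF), a common textbook model of how the BOLD signal trails behind neurons. The HRF shape parameters (a peak near 5 seconds, an undershoot near 15 seconds scaled by 1/6) are conventional defaults assumed here for illustration, not values taken from the UT Austin study.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, used as a building block for the HRF."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (math.gamma(shape) * scale ** shape)


def canonical_hrf(t):
    """Assumed double-gamma HRF: a peak near 5 s minus a smaller undershoot near 15 s."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def simulate_bold(neural, dt):
    """Convolve a neural time course (sampled every dt seconds) with the HRF."""
    n = len(neural)
    hrf = [canonical_hrf(i * dt) for i in range(n)]
    bold = []
    for i in range(n):
        # Each past neural sample contributes a delayed, smeared-out response.
        bold.append(sum(neural[j] * hrf[i - j] for j in range(i + 1)) * dt)
    return bold


dt = 0.1                          # 100 ms time step (illustrative)
n = int(30.0 / dt)                # simulate 30 seconds
neural = [0.0] * n
for i in range(int(1.0 / dt)):    # a 1-second burst of activity starting at t = 0
    neural[i] = 1.0

bold = simulate_bold(neural, dt)
peak_time = bold.index(max(bold)) * dt
print(f"neural burst ends at 1.0 s; simulated BOLD peaks at {peak_time:.1f} s")
```

Even in this idealized, noise-free setting, the simulated BOLD response peaks several seconds after the neural burst has ended, so words spoken in quick succession blur together in the measured signal.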

Yet fMRI has a huge advantage over brain implants: it can monitor the entire brain at high resolution. Rather than gathering data from a small patch of one region, it provides a bird’s-eye view of higher-level cognitive functions, including language.

When it comes to decoding language, most previous studies tapped into the motor cortex, an area that controls how the mouth and larynx move to generate speech, or other regions involved in more “surface level” language processing for articulation. Huth’s team decided to go one level of abstraction up: into the realm of thoughts and ideas.

Into the Unknown

The team realized they needed two things from the outset. One, a dataset of high-quality brain scans to train the decoder. Two, a machine learning framework to process the data.

To build the brain map database, seven volunteers had their neural activity repeatedly measured inside an MRI machine as they listened to podcast stories. Lying inside a giant, noisy magnet isn’t fun for anyone, and the team took care to keep the volunteers engaged and alert, since attention factors into decoding.

For each person, the resulting massive dataset was fed into a machine learning framework. Thanks to the recent explosion in machine learning models for processing natural language, the team was able to harness those resources and build the decoder relatively quickly.

The decoder has several components. The first is an encoding model built on the original GPT, the predecessor to the massively popular ChatGPT. The model takes each word and predicts how the brain will respond to it. Here, the team fine-tuned GPT on over 200 million total words from Reddit comments and podcasts.
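An encoding model like this is often a linear map from a language model's features to each brain voxel's response. The sketch below assumes exactly that: random vectors are hypothetical stand-ins for GPT features, a made-up "true" weight matrix generates synthetic voxel responses, and closed-form ridge regression, a common choice for fMRI encoding models, recovers the mapping. It deliberately omits what makes the real problem hard, including measurement noise, the hemodynamic delay, and tens of thousands of voxels.

```python
import random


def solve(a, b):
    """Solve a @ x = b (a is n x n, b is n x m) by Gauss-Jordan elimination."""
    n, m = len(a), len(b[0])
    aug = [list(a[i]) + list(b[i]) for i in range(n)]  # augmented matrix [a | b]
    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [rv - f * cv for rv, cv in zip(aug[r], aug[col])]
    return [row[n:n + m] for row in aug]


def fit_ridge(features, responses, lam):
    """Weights W minimizing ||X W - Y||^2 + lam * ||W||^2 (closed-form ridge)."""
    d, v = len(features[0]), len(responses[0])
    xtx = [[sum(x[i] * x[j] for x in features) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [[sum(x[i] * y[k] for x, y in zip(features, responses))
            for k in range(v)] for i in range(d)]
    return solve(xtx, xty)


def predict(weights, feature_vec):
    """Predicted response of every voxel to one stimulus feature vector."""
    return [sum(feature_vec[i] * weights[i][k] for i in range(len(feature_vec)))
            for k in range(len(weights[0]))]


rng = random.Random(0)
DIM, VOXELS, SAMPLES = 4, 3, 50
# Hypothetical "true" mapping from language features to voxel responses.
true_w = [[rng.gauss(0, 1) for _ in range(VOXELS)] for _ in range(DIM)]
# Hypothetical stand-ins for language-model features of each stimulus.
train_x = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(SAMPLES)]
train_y = [predict(true_w, x) for x in train_x]  # noiseless, for clarity

w_hat = fit_ridge(train_x, train_y, lam=1e-3)
test_x = [rng.gauss(0, 1) for _ in range(DIM)]
print(predict(w_hat, test_x))
print(predict(true_w, test_x))
```

The two printed lines should nearly match: once fitted, the model can predict the brain's response to word features it never saw during training, which is what the decoding stage relies on.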

The second component uses a popular machine learning technique called Bayesian decoding. The algorithm guesses the next word based on the preceding sequence, then checks how well each guess accounts for the brain’s actual response.

For example, one podcast episode had “my dad doesn’t need it…” as a storyline. When this was fed into the decoder as a prompt, it came up with potential continuations: “much,” “right,” “since,” and so on. Comparing the brain activity predicted for each candidate word to the activity generated by the actual word helped the decoder home in on each person’s brain activity patterns and correct for errors.
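The scoring step can be sketched as a textbook Bayesian update: a language model supplies a prior probability for each candidate word, an encoding model predicts the brain response each candidate should evoke, and a Gaussian likelihood measures how well that prediction matches the measured response. All numbers below (priors, three-voxel predictions, the observed response, and the noise level `sigma`) are hypothetical, and the study's actual likelihood model may differ.

```python
import math


def gaussian_log_likelihood(observed, predicted, sigma):
    """Log-likelihood of an observation, assuming independent Gaussian noise per voxel."""
    sq_err = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    n = len(observed)
    return -sq_err / (2 * sigma ** 2) - n * math.log(sigma * math.sqrt(2 * math.pi))


def rank_candidates(candidates, observed, sigma=1.0):
    """Rank words by log prior (language model) + log likelihood (encoding model)."""
    scored = []
    for word, (prior, predicted) in candidates.items():
        score = math.log(prior) + gaussian_log_likelihood(observed, predicted, sigma)
        scored.append((score, word))
    return [word for _, word in sorted(scored, reverse=True)]


# Hypothetical language-model priors for continuations of "my dad doesn't need it ..."
# paired with hypothetical encoding-model predictions for three voxels.
candidates = {
    "much":  (0.5, [0.9, 0.1, 0.4]),
    "right": (0.3, [0.2, 0.8, 0.5]),
    "since": (0.2, [0.1, 0.3, 0.9]),
}
observed = [0.25, 0.75, 0.55]  # hypothetical measured response

ranking = rank_candidates(candidates, observed, sigma=0.3)
print(ranking)  # ['right', 'since', 'much']
```

Here the language model alone would favor "much," but the brain evidence pulls "right" to the top, which is the kind of correction the comparison step provides.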

After repeating the process with the best predicted words, the decoding part of the program eventually learned each person’s unique “neural fingerprint” for how they process language.
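Repeatedly extending only the best predictions resembles a beam search: keep a handful of the most promising word sequences, extend each by one word, rescore, and prune. The sketch below assumes a tiny hypothetical bigram language model (`TOY_LM`) and a hypothetical `toy_brain_score` that simply rewards words from a hidden "heard" set, standing in for the encoding-model likelihood. The real decoder's models and beam settings are not reproduced here.

```python
import math

# Hypothetical toy language model: next-word probabilities given the previous word.
TOY_LM = {
    "<s>":  {"i": 0.6, "we": 0.4},
    "i":    {"was": 0.7, "am": 0.3},
    "we":   {"were": 0.8, "are": 0.2},
    "was":  {"north": 0.5, "home": 0.5},
    "am":   {"north": 0.5, "home": 0.5},
    "were": {"north": 0.5, "home": 0.5},
    "are":  {"north": 0.5, "home": 0.5},
}

HEARD = {"we", "were", "north"}  # hypothetical words the listener actually heard


def toy_brain_score(seq):
    """Hypothetical stand-in for the encoding-model log-likelihood of a sequence."""
    return 2.0 * sum(word in HEARD for word in seq)


def beam_search(lm, brain_score, steps, beam_width):
    """Keep the beam_width best sequences, extending each by one word per step."""
    beams = [(0.0, ["<s>"])]  # (language-model log-probability, word sequence)
    for _ in range(steps):
        expanded = []
        for lm_logp, seq in beams:
            for word, p in lm.get(seq[-1], {}).items():
                expanded.append((lm_logp + math.log(p), seq + [word]))
        if not expanded:
            break
        # Rank extensions by language-model prior plus brain evidence; keep the best few.
        expanded.sort(key=lambda item: item[0] + brain_score(item[1]), reverse=True)
        beams = expanded[:beam_width]
    _, best_seq = max(beams, key=lambda item: item[0] + brain_score(item[1]))
    return best_seq[1:]  # drop the start token


print(beam_search(TOY_LM, toy_brain_score, steps=3, beam_width=2))
# ['we', 'were', 'north']
```

Without the brain score, the toy language model on its own would start with "i was," its most probable opening; the brain evidence steers the search toward the sequence that was actually heard.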

A Neuro Translator

As a proof of concept, the team compared the decoded responses against the actual story text.

The results came surprisingly close, but only in their general gist. For example, one story line, “we start to trade stories about our lives we’re both from up north,” was decoded as “we started talking about our experiences in the area he was born in I was from the north.”
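One simple way to quantify how far a paraphrase drifts from the original wording is unigram overlap, a bag-of-words F1 score. The sketch below applies it to the example pair above. The metric is chosen here purely for illustration and is not necessarily how the study scored its decoder; it shows why exact-word measures undersell output that preserves meaning.

```python
from collections import Counter


def unigram_f1(reference, hypothesis):
    """Bag-of-words F1 between two sentences (lowercased, whitespace-tokenized)."""
    ref = Counter(reference.lower().split())
    hyp = Counter(hypothesis.lower().split())
    overlap = sum((ref & hyp).values())  # shared words, clipped to the smaller count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(hyp.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


actual = "we start to trade stories about our lives we're both from up north"
decoded = "we started talking about our experiences in the area he was born in I was from the north"
print(round(unigram_f1(actual, decoded), 3))  # 0.323
```

Only five words ("we," "about," "our," "from," "north") match exactly, so the score is low even though a human reader would judge the two sentences to convey much the same idea.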

This paraphrasing is expected, explained Huth. Because fMRI is rather noisy and slow, it’s nearly impossible to capture and decode each individual word. The decoder receives a jumble of overlapping words and must disentangle their meanings using features like turns of phrase.

In contrast, ideas are more persistent and change relatively slowly. Because fMRI lags behind neural activity, it captures abstract concepts and thoughts better than specific words.

This high-level approach has perks. While it lacks word-for-word fidelity, the decoder captures a higher level of language representation than previous attempts, including for tasks that go beyond speech. In one test, the volunteers watched a silent animated clip of a woman being attacked by dragons. Using brain activity alone, the decoder described the scene from the protagonist’s perspective as a text-based story. In other words, the decoder was able to translate visual information directly into a narrative based on a representation of language encoded in brain activity.

Similarly, the decoder reconstructed one-minute-long stories that the volunteers imagined.

After more than a decade of working on the technology, “it was shocking and exciting when it finally did work,” said Huth.

Although the decoder doesn’t exactly read minds, the team was careful to assess mental privacy. In a series of tests, they found that the decoder only worked with the volunteers’ active mental participation. Asking participants to count by sevens, name different animals, or mentally construct their own stories rapidly degraded the decoder’s output, said first author Jerry Tang. In other words, the decoder can be “consciously resisted.”

For now, the technology only works after months of careful brain scans in a loudly buzzing machine while lying completely still, which is hardly feasible for clinical use. The team is working on translating the technology to fNIRS (functional near-infrared spectroscopy), which measures blood oxygen levels in the brain. Although it has lower resolution than fMRI, fNIRS is far more portable, as the main hardware is a swimming-cap-like device that easily fits under a hoodie.

“With tweaks, we should be able to translate the current setup to fNIRS wholesale,” said Huth.

The team is also planning to use newer language models to boost the decoder’s accuracy, and potentially to bridge different languages. Because languages share a neural representation in the brain, the decoder could in theory encode one language and use the neural signals to decode it into another.

It’s an “exciting future direction,” said Huth.

Image Credit: Jerry Tang/Martha Morales/The University of Texas at Austin