
Industrial applications of machine learning are commonly composed of various items that have differing data modalities or feature distributions. Heterogeneous graphs (HGs) offer a unified view of these multimodal data systems by defining multiple types of nodes (one for each data type) and edges (for the relations between data items). For instance, e-commerce networks might have [user, product, review] nodes, and video platforms might have [channel, user, video, comment] nodes. Heterogeneous graph neural networks (HGNNs) learn node embeddings that summarize each node's relationships into a vector. However, in real-world HGs, there is often a label imbalance issue between different node types. This means that label-scarce node types cannot exploit HGNNs, which hampers the broader applicability of HGNNs.

In “Zero-shot Transfer Learning within a Heterogeneous Graph via Knowledge Transfer Networks”, presented at NeurIPS 2022, we propose a model called a Knowledge Transfer Network (KTN), which transfers knowledge from label-abundant node types to zero-labeled node types using the rich relational information given in a HG. We describe how we pre-train a HGNN model without the need for fine-tuning. KTNs outperform state-of-the-art transfer learning baselines by up to 140% on zero-shot learning tasks, and can be used to improve many existing HGNN models on these tasks by 24% (or more).

KTNs transform labels from one type of information (squares) through a graph to another type (stars).

What is a heterogeneous graph?

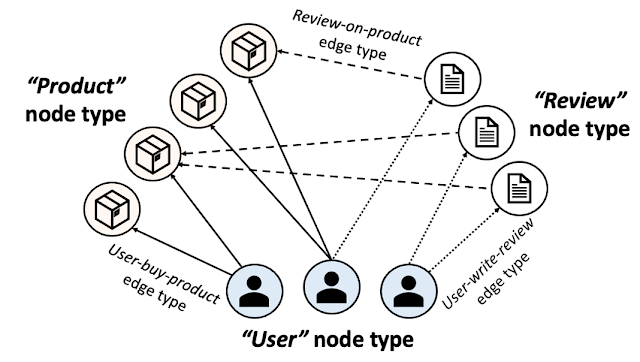

A HG consists of multiple node and edge types. The figure below shows an e-commerce network presented as a HG. In e-commerce, “users” purchase “products” and write “reviews”. A HG presents this ecosystem using three node types [user, product, review] and three edge types [user-buy-product, user-write-review, review-on-product]. Individual products, users, and reviews are then presented as nodes, and their relationships as edges, in the HG with the corresponding node and edge types.

E-commerce heterogeneous graph.
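As a rough illustration, the e-commerce HG above could be stored in plain Python as one node list per node type plus one edge list per (source type, relation, destination type) triple. This is a minimal sketch: the node IDs and the `neighbors` helper are hypothetical, and graph libraries offer richer containers for the same idea.

```python
from collections import defaultdict

# Node sets keyed by node type (IDs are made up for illustration).
nodes = {
    "user": ["u0", "u1"],
    "product": ["p0", "p1", "p2"],
    "review": ["r0", "r1"],
}

# One edge list per edge type: (source type, relation, destination type).
edges = {
    ("user", "buy", "product"): [("u0", "p0"), ("u0", "p1"), ("u1", "p2")],
    ("user", "write", "review"): [("u0", "r0"), ("u1", "r1")],
    ("review", "on", "product"): [("r0", "p0"), ("r1", "p2")],
}


def neighbors(node_id, edges):
    """Return a node's neighbors grouped by edge type, following edges in both directions."""
    out = defaultdict(list)
    for edge_type, pairs in edges.items():
        for src, dst in pairs:
            if src == node_id:
                out[edge_type].append(dst)
            elif dst == node_id:
                out[edge_type].append(src)
    return dict(out)


print(neighbors("p0", edges))
# {('user', 'buy', 'product'): ['u0'], ('review', 'on', 'product'): ['r0']}
```

Keying edges by the full triple keeps the node types of both endpoints explicit, which is what lets an HGNN pick a type-specific module for every message.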

In addition to all connectivity information, HGs are commonly given with input node attributes that summarize each node's information. Input node attributes could have different modalities across different node types. For instance, images of products could be given as input node attributes for the product nodes, while text can be given as input attributes to review nodes. Node labels (e.g., the category of each product or the category that most interests each user) are what we want to predict on each node.

HGNNs and label scarcity issues

HGNNs compute node embeddings that summarize each node's local structures (including the node's and its neighbors' information). These node embeddings are used by a classifier to predict each node's label. To train a HGNN model and a classifier to predict labels for a specific node type, we need a sufficient number of labels for that type.

A common issue in industrial applications of deep learning is label scarcity, and with their diverse node types, HGNNs are even more likely to face this challenge. For instance, publicly available content node types (e.g., product nodes) are abundantly labeled, whereas labels for user or account nodes may not be available due to privacy restrictions. This means that in most standard training settings, HGNN models can only learn to make good inferences for a few label-abundant node types and usually cannot make any inferences for the remaining node types (given the absence of any labels for them).
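To make the label-scarcity point concrete, here is a sketch of a standard masked cross-entropy loss, assuming missing labels are encoded as `-1` (our convention for illustration, not the paper's). Unlabeled nodes contribute nothing to the loss, so a node type with zero labels provides no supervised training signal at all.

```python
import numpy as np


def masked_cross_entropy(logits, labels):
    """Mean softmax cross-entropy over labeled nodes only.

    logits: (num_nodes, num_classes) array of classifier scores.
    labels: (num_nodes,) int array; -1 marks a node without a label (assumed convention).
    Returns 0.0 when no node is labeled, i.e. there is nothing to learn from.
    """
    mask = labels >= 0
    if not mask.any():
        return 0.0
    z = logits[mask]
    z = z - z.max(axis=1, keepdims=True)  # shift for numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    picked = log_probs[np.arange(z.shape[0]), labels[mask]]
    return float(-picked.mean())


rng = np.random.default_rng(0)
product_logits = rng.normal(size=(4, 3))
user_logits = rng.normal(size=(5, 3))
product_labels = np.array([0, 2, 1, 2])  # label-abundant node type
user_labels = np.full(5, -1)             # zero-labeled node type (e.g., privacy restrictions)

print(masked_cross_entropy(product_logits, product_labels))  # a positive loss
print(masked_cross_entropy(user_logits, user_labels))        # 0.0
```

Whatever the HGNN architecture, a zero loss for the user type means no supervised gradient ever reaches a classifier trained only for users.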

Transfer learning on heterogeneous graphs

Zero-shot transfer learning is a technique used to improve the performance of a model on a target domain with no labels by using the knowledge the model learned from another, related source domain with adequately labeled data. To apply transfer learning to the label scarcity issue for certain node types in HGs, the target domain would be the zero-labeled node types. Then what would be the source domain? Previous work commonly sets the source domain as the same type of nodes located in a different HG, assuming those nodes are abundantly labeled. This graph-to-graph transfer learning approach pre-trains a HGNN model on the external HG and then runs the model on the original (label-scarce) HG.

However, these approaches are not applicable in many real-world scenarios for three reasons. First, any external HG that could be used in a graph-to-graph transfer learning setting would almost surely be proprietary and thus likely unavailable. Second, even if practitioners could obtain access to an external HG, it is unlikely that the distribution of that source HG would match their target HG well enough to apply transfer learning. Finally, node types suffering from label scarcity are likely to suffer the same issue on other HGs (e.g., privacy issues on user nodes).

Our approach: Transfer learning between node types within a heterogeneous graph

Here, we shed light on a more practical source domain: other node types with abundant labels located on the same HG. Instead of using extra HGs, we transfer knowledge across different types of nodes within a single HG (assumed to be fully owned by the practitioners). More specifically, we pre-train a HGNN model and a classifier on a label-abundant (source) node type, then reuse the models on the zero-labeled (target) node types located in the same HG without additional fine-tuning. The one requirement is that the source and target node types share the same label set (e.g., in the e-commerce HG, product nodes have a label set describing product categories, and user nodes share the same label set describing their favorite shopping categories).

Why is it difficult?

Unfortunately, we cannot directly reuse the pre-trained HGNN and classifier on the target node type. One crucial characteristic of HGNN architectures is that they are composed of modules specialized to each node type in order to fully learn the multiplicity of HGs. HGNNs use distinct sets of modules to compute embeddings for each node type. In the figure below, blue- and red-colored modules are used to compute node embeddings for the source and target node types, respectively.

HGNNs are composed of modules specialized to each node type and use distinct sets of modules to compute embeddings of different node types. More details can be found in the paper.

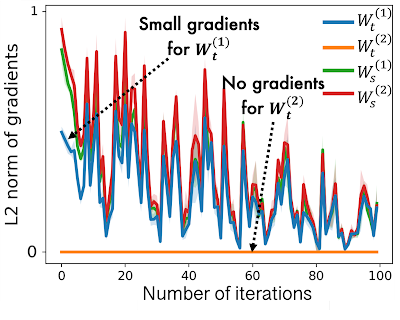

While pre-training HGNNs on the source node type, the source-specific modules in the HGNNs are well trained, whereas the target-specific modules are under-trained because only a small amount of gradient flows into them. This is shown below, where the L2 norms of the gradients for target node types (i.e., Mtt) are much lower than those for source types (i.e., Mss). As a result, a HGNN model outputs poor node embeddings for the target node type, which leads to poor task performance.

In HGNNs, target type-specific modules receive zero or only a small amount of gradient during pre-training on the source node type, leading to poor performance on the target node type.
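The effect can be reproduced in miniature. The sketch below builds a hypothetical one-layer, linear HGNN with one weight matrix per (sender type, receiver type) pair, in the spirit of the type-specific modules described above. We read the figure's Mss/Mtt subscripts as such pairs, and the toy graph, the model, and the squared-error loss (standing in for classification) are all our assumptions, not the paper's setup. Training only on source labels, it estimates each module's gradient norm by central finite differences.

```python
import numpy as np

rng = np.random.default_rng(0)
n_s, n_t, d_in, n_cls = 6, 5, 4, 3

# Toy input features for source (s) and target (t) nodes.
X = {"s": rng.normal(size=(n_s, d_in)), "t": rng.normal(size=(n_t, d_in))}
# Toy adjacency: A[(a, b)][i, j] = 1 if node j of type a sends a message to node i of type b.
A = {
    ("s", "s"): np.eye(n_s),                                   # self-loops
    ("t", "t"): np.eye(n_t),
    ("t", "s"): (rng.random((n_s, n_t)) < 0.5).astype(float),  # target -> source
    ("s", "t"): (rng.random((n_t, n_s)) < 0.5).astype(float),  # source -> target
}
# One module (weight matrix) per (sender, receiver) pair; embeddings double as class scores.
W = {key: rng.normal(size=(d_in, n_cls)) for key in A}


def embed(W, receiver):
    """Linear one-layer HGNN: sum the messages a receiver type gets from every sender type."""
    return sum(A[(a, b)] @ X[a] @ W[(a, b)] for (a, b) in A if b == receiver)


y_s = rng.normal(size=(n_s, n_cls))  # regression targets standing in for source labels


def source_loss(W):
    """Squared error on source nodes only, because target nodes have no labels."""
    return float(((embed(W, "s") - y_s) ** 2).mean())


def grad_norm(key, eps=1e-6):
    """Central finite-difference estimate of the L2 (Frobenius) norm of d loss / d W[key]."""
    g = np.zeros_like(W[key])
    for idx in np.ndindex(*W[key].shape):
        w_plus = {k: v.copy() for k, v in W.items()}
        w_minus = {k: v.copy() for k, v in W.items()}
        w_plus[key][idx] += eps
        w_minus[key][idx] -= eps
        g[idx] = (source_loss(w_plus) - source_loss(w_minus)) / (2 * eps)
    return float(np.linalg.norm(g))


for key in A:
    print(f"M_{key[0]}{key[1]}: grad norm {grad_norm(key):.4f}")
```

In this one-layer toy, the target-only modules M_tt and M_st get exactly zero gradient because the source loss never uses them. In deeper HGNNs, target-specific modules in earlier layers do receive gradient, but only indirectly, through the source embeddings computed in later layers.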

KTN: Trainable cross-type transfer learning for HGNNs

Our work focuses on transforming the (poor) target node embeddings computed by a pre-trained HGNN model so that they follow the distribution of the source node embeddings. The classifier pre-trained on the source node type can then be reused for the target node type. How can we map the target node embeddings to the source domain? To answer this question, we investigate how HGNNs compute node embeddings in order to understand the relationship between the source and target distributions.

HGNNs aggregate connected node embeddings to augment a target node's embeddings in each layer. In other words, the node embeddings for both source and target node types are updated using the same input: the previous layer's node embeddings of any connected node types. This means that each can be expressed in terms of the other. We prove this relationship theoretically and find that there is a mapping matrix (defined by HGNN parameters) from the target domain to the source domain (more details in Theorem 1 in the paper). Based on this theorem, we introduce an auxiliary neural network, which we refer to as a Knowledge Transfer Network (KTN), that receives the target node embeddings and transforms them by multiplying them with a (trainable) mapping matrix. We then define a regularizer that is minimized along with the performance loss in the pre-training phase to train the KTN. At test time, we map the target embeddings computed by the pre-trained HGNN to the source domain using the trained KTN, and then classify them.
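The training and inference flow above can be sketched with NumPy. Everything here is an illustrative assumption rather than the paper's implementation: the KTN is a single trainable matrix `T`; the regularizer is the mean squared distance between the source embeddings `H_s` and the target embeddings mapped by `T` and then aggregated onto source nodes through a hypothetical target-to-source adjacency `A_ts`; and plain gradient descent on the regularizer alone stands in for joint training with the classification loss. Theorem 1 in the paper gives the actual form of the mapping.

```python
import numpy as np

rng = np.random.default_rng(0)
n_s, n_t, d = 50, 40, 8

# Toy embeddings from a "pre-trained" HGNN. By construction, source embeddings are
# neighbor-aggregated target embeddings passed through an unknown matrix T_true.
A_ts = (rng.random((n_s, n_t)) < 0.2).astype(float)  # target -> source adjacency
H_t = rng.normal(size=(n_t, d))                        # (poor) target embeddings
T_true = rng.normal(size=(d, d))
H_s = A_ts @ H_t @ T_true                              # source embeddings


def ktn_loss(T):
    """Assumed regularizer: mean squared distance between H_s and A_ts @ H_t @ T."""
    return float(((A_ts @ H_t @ T - H_s) ** 2).sum() / n_s)


def train_ktn(steps=2000):
    """Fit the trainable mapping T by gradient descent on ktn_loss."""
    X = A_ts @ H_t
    lr = n_s / (2 * np.linalg.norm(X, 2) ** 2)  # 1 / Lipschitz constant of the gradient
    T = np.zeros((d, d))
    for _ in range(steps):
        grad = 2 * X.T @ (X @ T - H_s) / n_s
        T -= lr * grad
    return T


T = train_ktn()
print(ktn_loss(np.zeros((d, d))), "->", ktn_loss(T))

# Test time: map target embeddings into the source domain, then reuse the
# source-trained classifier (a hypothetical linear head W_cls here).
W_cls = rng.normal(size=(d, 3))
target_pred = (H_t @ T @ W_cls).argmax(axis=1)
```

The step size is the inverse Lipschitz constant of the gradient, which guarantees the loss never increases. Because the toy data is generated from an exact linear map, `T` recovers `T_true`; on real embeddings the regularizer only pulls the mapped target embeddings toward the source distribution.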

Experimental results

To examine the effectiveness of KTNs, we ran 18 different zero-shot transfer learning tasks on two public heterogeneous graphs, Open Academic Graph and Pubmed. We compare KTN with eight state-of-the-art transfer learning methods (DAN, JAN, DANN, CDAN, CDAN-E, WDGRL, LP, EP). As shown below, KTN consistently outperforms all baselines on all tasks, beating transfer learning baselines by up to 140% (as measured by Normalized Discounted Cumulative Gain (NDCG), a ranking metric).

Zero-shot transfer learning on the Open Academic Graph (OAG-CS) and Pubmed datasets. The colors represent the different categories of transfer learning baselines against which the results are compared. Yellow: use statistical properties (e.g., mean, variance) of distributions. Green: use adversarial models to transfer knowledge. Orange: transfer knowledge directly via graph structure using label propagation.
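For readers unfamiliar with the metric, here is a minimal NDCG sketch for a single node: it rewards ranking the true category near the top of the classifier's ordered list of categories. We assume binary relevance and the common log2 position discount; the exact variant (cutoff, averaging over nodes) used in the paper's evaluation may differ, and the helper names are our own.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain: the relevance at 1-based rank i is divided by log2(i + 1)."""
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevances, start=1))


def ndcg(scores, true_labels, k=None):
    """NDCG of one node's predicted ranking of classes.

    scores: per-class scores from the classifier.
    true_labels: set of correct class indices (binary relevance assumed).
    k: optional cutoff; None ranks all classes.
    """
    ranking = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)[:k]
    gains = [1.0 if c in true_labels else 0.0 for c in ranking]
    ideal = [1.0] * min(len(true_labels), len(ranking))
    return dcg(gains) / dcg(ideal) if ideal else 0.0


print(ndcg([0.1, 0.7, 0.2], {1}))  # true class ranked first -> 1.0
print(ndcg([0.1, 0.7, 0.2], {2}))  # true class ranked second -> 1 / log2(3), about 0.631
```

A dataset-level score would typically average this per-node value over all evaluated target nodes.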

Most importantly, KTN can be applied to almost all HGNN models that have node- and edge-type-specific parameters, improving their zero-shot performance on target domains. As shown below, KTN improves accuracy on zero-labeled node types across six different HGNN models (R-GCN, HAN, HGT, MAGNN, MPNN, H-MPNN) by up to 190%.

KTN can be applied to six different HGNN models and improves their zero-shot performance on target domains.

Takeaways

Various ecosystems in industry can be presented as heterogeneous graphs. HGNNs summarize heterogeneous graph information into effective representations. However, label scarcity on certain types of nodes prevents the wider application of HGNNs. In this post, we introduced KTN, the first cross-type transfer learning method designed for HGNNs. With KTN, we can fully exploit the richness of heterogeneous graphs via HGNNs regardless of label scarcity. See the paper for more details.

Acknowledgements

This paper is joint work with our co-authors John Palowitch (Google Research), Dustin Zelle (Google Research), Ziniu Hu (Intern, Google Research), and Russ Salakhutdinov (CMU). We thank Tom Small for creating the animated figure in this blog post.