
Ars Technica

On Thursday, a pair of tech hobbyists released Riffusion, an AI model that generates music from text prompts by creating a visual representation of sound and converting it to audio for playback. It uses a fine-tuned version of the Stable Diffusion 1.5 image synthesis model, applying visual latent diffusion to sound processing in a novel way.

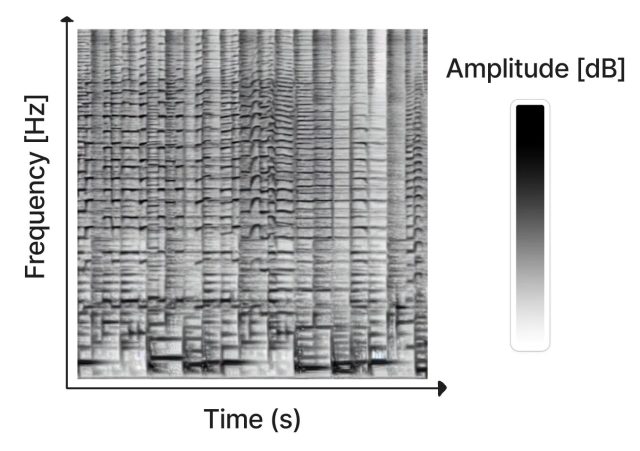

Created as a hobby project by Seth Forsgren and Hayk Martiros, Riffusion works by generating sonograms, which store audio in a two-dimensional image. In a sonogram, the X-axis represents time (the order in which the frequencies get played, from left to right), and the Y-axis represents the frequency of the sounds. Meanwhile, the color of each pixel in the image represents the amplitude of the sound at that given moment in time.
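To make the axes concrete, here is a minimal sketch of how such an image can be computed from raw audio samples. It is an illustration only, not Riffusion's code: the function name `spectrogram`, the frame size, the hop length, and the 1 kHz test tone are all assumptions chosen for the example, and it uses a slow textbook DFT from the Python standard library rather than an optimized audio library.

```python
import cmath
import math

FRAME_SIZE = 64  # samples per analysis frame (hypothetical value)
HOP = 32         # samples between the starts of consecutive frames (hypothetical value)

def spectrogram(samples, frame_size=FRAME_SIZE, hop=HOP):
    """Return one list of magnitudes per time frame (one value per frequency bin)."""
    # A Hann window tapers each frame so its edges do not smear the spectrum.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        # Naive DFT over the non-negative frequency bins only.
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            magnitudes.append(abs(acc))
        frames.append(magnitudes)
    return frames

sample_rate = 8000  # Hz, assumed for the example
tone_hz = 1000      # a pure test tone, assumed for the example
samples = [math.sin(2 * math.pi * tone_hz * t / sample_rate) for t in range(512)]

spec = spectrogram(samples)
# spec[i] is one column of the image (time, X-axis); spec[i][k] is the
# amplitude at frequency bin k (Y-axis), which would set the pixel's color.
loudest_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
loudest_hz = loudest_bin * sample_rate / FRAME_SIZE
print(len(spec), "frames;", "loudest frequency in first frame:", loudest_hz, "Hz")
```

Each inner list corresponds to one vertical strip of the sonogram, so a steady tone shows up as a single bright horizontal line.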

Since a sonogram is a type of picture, Stable Diffusion can process it. Forsgren and Martiros trained a custom Stable Diffusion model with example sonograms linked to descriptions of the sounds or musical genres they represented. With that knowledge, Riffusion can generate new music on the fly based on text prompts that describe the type of music or sound you want to hear, such as “jazz,” “rock,” or even typing on a keyboard.

After generating the sonogram image, Riffusion uses Torchaudio to convert the sonogram to sound, playing it back as audio.
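Turning a sonogram back into sound is not a simple lookup, because the image records only amplitudes and not the phase of each frequency. One widely used way to estimate the missing phase is the Griffin-Lim algorithm, sketched below in plain Python. This is an assumption-laden illustration rather than Riffusion's actual pipeline, which relies on Torchaudio: the helper names (`stft`, `istft`, `griffin_lim`, `spectral_error`), the frame size, and the hop length are all hypothetical.

```python
import cmath
import math
import random

N, HOP = 32, 8  # hypothetical frame size and hop length, in samples
WINDOW = [0.5 - 0.5 * math.cos(2 * math.pi * n / N) for n in range(N)]
TWIDDLE = [cmath.exp(-2j * math.pi * k / N) for k in range(N)]

def stft(signal):
    """Windowed DFT of each overlapping frame (N complex bins per frame)."""
    frames = []
    for start in range(0, len(signal) - N + 1, HOP):
        seg = [signal[start + n] * WINDOW[n] for n in range(N)]
        frames.append([sum(seg[n] * TWIDDLE[(k * n) % N] for n in range(N))
                       for k in range(N)])
    return frames

def istft(frames, length):
    """Least-squares overlap-add inverse; keeps only the real part."""
    out, norm = [0.0] * length, [0.0] * length
    for i, spec in enumerate(frames):
        for n in range(N):
            value = sum(spec[k] * TWIDDLE[(k * n) % N].conjugate()
                        for k in range(N)).real / N
            out[i * HOP + n] += value * WINDOW[n]
            norm[i * HOP + n] += WINDOW[n] ** 2
    return [o / w if w > 1e-8 else 0.0 for o, w in zip(out, norm)]

def spectral_error(audio, magnitudes):
    """Squared mismatch between the audio's magnitudes and the target image."""
    return sum((abs(c) - m) ** 2
               for crow, mrow in zip(stft(audio), magnitudes)
               for c, m in zip(crow, mrow))

def griffin_lim(magnitudes, length, iterations=20, seed=0):
    """Estimate audio whose spectrogram magnitudes match `magnitudes`."""
    rng = random.Random(seed)
    # Start from random phases, since the image carries no phase information.
    spec = [[m * cmath.exp(2j * math.pi * rng.random()) for m in row]
            for row in magnitudes]
    for _ in range(iterations):
        # Go to audio and back, then keep the new phases but restore
        # the known magnitudes.
        estimate = stft(istft(spec, length))
        spec = [[m * (e / abs(e) if abs(e) > 1e-12 else 1.0)
                 for m, e in zip(mrow, erow)]
                for mrow, erow in zip(magnitudes, estimate)]
    return istft(spec, length)

# Build a magnitude-only "sonogram" from a test tone, then invert it.
signal = [math.sin(2 * math.pi * 4 * t / N) for t in range(N + HOP * 11)]
magnitudes = [[abs(c) for c in row] for row in stft(signal)]
rough = griffin_lim(magnitudes, len(signal), iterations=0)
refined = griffin_lim(magnitudes, len(signal), iterations=20)
print(spectral_error(rough, magnitudes), spectral_error(refined, magnitudes))
```

The printed pair compares the mismatch for random phases against the mismatch after 20 refinement passes; a real converter would use a fast FFT and far larger frames.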

“This is the v1.5 Stable Diffusion model with no modifications, just fine-tuned on images of spectrograms paired with text,” write Riffusion’s creators on its explanation page. “It can generate infinite variations of a prompt by varying the seed. All the same web UIs and techniques like img2img, inpainting, negative prompts, and interpolation work out of the box.”

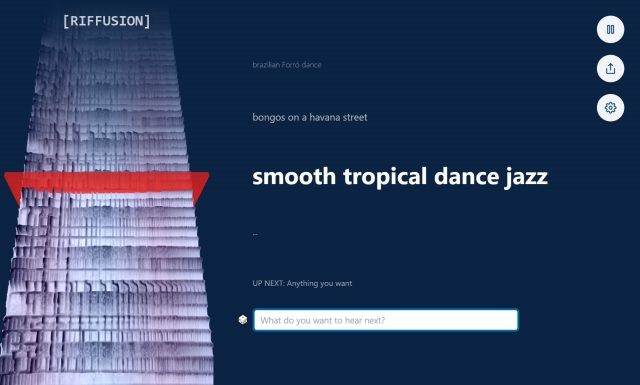

Visitors to the Riffusion website can experiment with the AI model thanks to an interactive web app that generates interpolated sonograms (smoothly stitched together for uninterrupted playback) in real time while visualizing the spectrogram continuously on the left side of the page.

It can fuse styles, too. For example, typing in “smooth tropical dance jazz” brings in elements of different genres for a novel result, encouraging experimentation by blending styles.

Of course, Riffusion is not the first AI-powered music generator. Earlier this year, Harmonai released Dance Diffusion, an AI-powered generative music model. OpenAI’s Jukebox, announced in 2020, also generates new music with a neural network. And websites like Soundraw create music non-stop on the fly.

Compared to those more streamlined AI music efforts, Riffusion feels more like the hobby project it is. The music it generates ranges from interesting to unintelligible, but it remains a notable application of latent diffusion technology that manipulates audio in a visual space.

The Riffusion mannequin checkpoint and code are available on GitHub.