[ad_1]

Unlock quicker, environment friendly reasoning with Phi-4-mini-flash-reasoning—optimized for edge, cellular, and real-time purposes.

State of the artwork structure redefines velocity for reasoning fashions

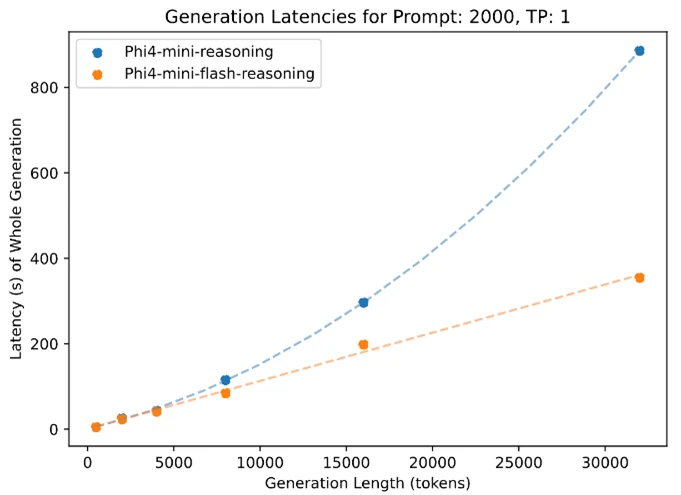

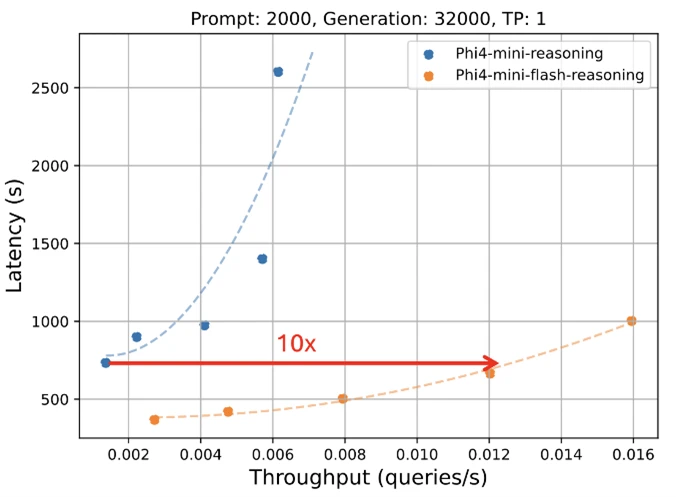

Microsoft is worked up to unveil a brand new version to the Phi mannequin household: Phi-4-mini-flash-reasoning. Purpose-built for situations the place compute, reminiscence, and latency are tightly constrained, this new mannequin is engineered to deliver superior reasoning capabilities to edge gadgets, cellular purposes, and different resource-constrained environments. This new mannequin follows Phi-4-mini, however is constructed on a brand new hybrid structure, that achieves as much as 10 occasions larger throughput and a 2 to three occasions common discount in latency, enabling considerably quicker inference with out sacrificing reasoning efficiency. Ready to energy actual world options that demand effectivity and suppleness, Phi-4-mini-flash-reasoning is on the market on Azure AI Foundry, NVIDIA API Catalog, and Hugging Face as we speak.

Efficiency with out compromise

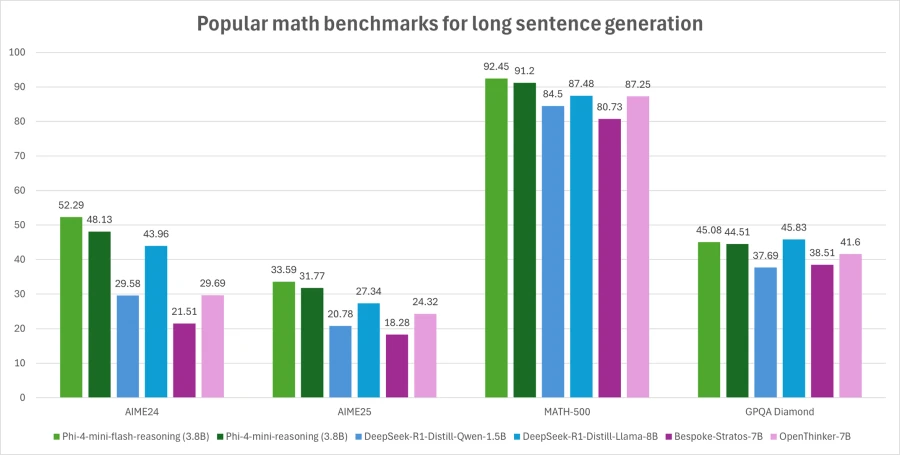

Phi-4-mini-flash-reasoning balances math reasoning capacity with effectivity, making it doubtlessly appropriate for academic purposes, real-time logic-based purposes, and extra.

Similar to its predecessor, Phi-4-mini-flash-reasoning is a 3.8 billion parameter open mannequin optimized for superior math reasoning. It helps a 64K token context size and is fine-tuned on high-quality artificial information to ship dependable, logic-intensive efficiency deployment.

What’s new?

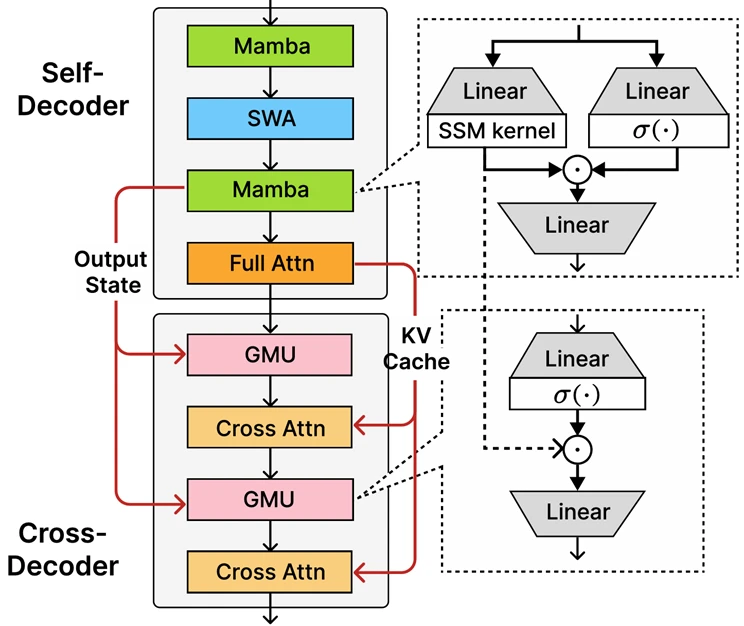

At the core of Phi-4-mini-flash-reasoning is the newly launched decoder-hybrid-decoder structure, SambaY, whose central innovation is the Gated Memory Unit (GMU), a easy but efficient mechanism for sharing representations between layers. The structure features a self-decoder that mixes Mamba (a State Space Model) and Sliding Window Attention (SWA), together with a single layer of full consideration. The structure additionally entails a cross-decoder that interleaves costly cross-attention layers with the brand new, environment friendly GMUs. This new structure with GMU modules drastically improves decoding effectivity, boosts long-context retrieval efficiency and allows the structure to ship distinctive efficiency throughout a variety of duties.

Key advantages of the SambaY structure embrace:

- Enhanced decoding effectivity.

- Preserves linear prefiling time complexity.

- Increased scalability and enhanced lengthy context efficiency.

- Up to 10 occasions larger throughput.

Phi-4-mini-flash-reasoning benchmarks

Like all fashions within the Phi household, Phi-4-mini-flash-reasoning is deployable on a single GPU, making it accessible for a broad vary of use instances. However, what units it aside is its architectural benefit. This new mannequin achieves considerably decrease latency and better throughput in comparison with Phi-4-mini-reasoning, significantly in long-context technology and latency-sensitive reasoning duties.

This makes Phi-4-mini-flash-reasoning a compelling possibility for builders and enterprises trying to deploy clever programs that require quick, scalable, and environment friendly reasoning—whether or not on premises or on-device.

What are the potential use instances?

Thanks to its lowered latency, improved throughput, and deal with math reasoning, the mannequin is right for:

- Adaptive studying platforms, the place real-time suggestions loops are important.

- On-device reasoning assistants, equivalent to cellular examine aids or edge-based logic brokers.

- Interactive tutoring programs that dynamically modify content material problem based mostly on a learner’s efficiency.

Its energy in math and structured reasoning makes it particularly invaluable for schooling know-how, light-weight simulations, and automatic evaluation instruments that require dependable logic inference with quick response occasions.

Developers are inspired to attach with friends and Microsoft engineers via the Microsoft Developer Discord group to ask questions, share suggestions, and discover real-world use instances collectively.

Microsoft’s dedication to reliable AI

Organizations throughout industries are leveraging Azure AI and Microsoft 365 Copilot capabilities to drive development, improve productiveness, and create value-added experiences.

We’re dedicated to serving to organizations use and construct AI that’s reliable, which means it’s safe, non-public, and secure. We deliver finest practices and learnings from many years of researching and constructing AI merchandise at scale to offer industry-leading commitments and capabilities that span our three pillars of safety, privateness, and security. Trustworthy AI is just doable once you mix our commitments, equivalent to our Secure Future Initiative and our accountable AI ideas, with our product capabilities to unlock AI transformation with confidence.

Phi fashions are developed in accordance with Microsoft AI ideas: accountability, transparency, equity, reliability and security, privateness and safety, and inclusiveness.

The Phi mannequin household, together with Phi-4-mini-flash-reasoning, employs a strong security post-training technique that integrates Supervised Fine-Tuning (SFT), Direct Preference Optimization (DPO), and Reinforcement Learning from Human Feedback (RLHF). These methods are utilized utilizing a mix of open-source and proprietary datasets, with a powerful emphasis on guaranteeing helpfulness, minimizing dangerous outputs, and addressing a broad vary of security classes. Developers are inspired to use accountable AI finest practices tailor-made to their particular use instances and cultural contexts.

Read the mannequin card to be taught extra about any danger and mitigation methods.