
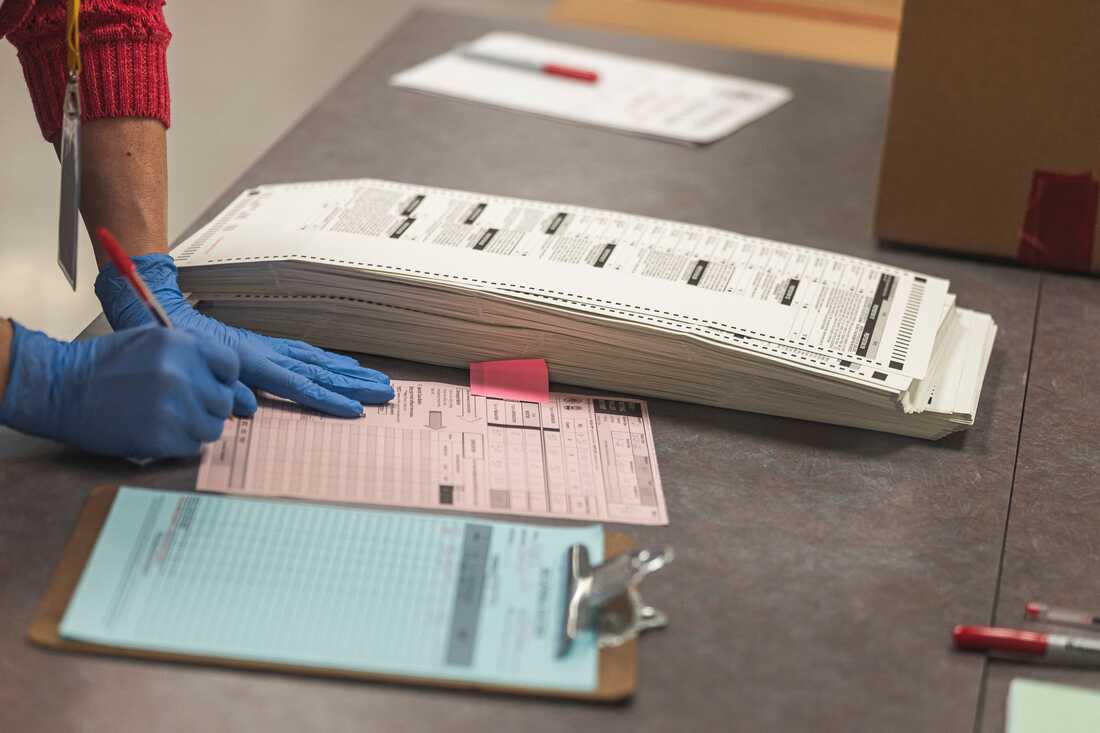

A ballot worker handles ballots for the midterm election, in the presence of observers from both the Democratic and Republican parties, at the Maricopa County Tabulation and Elections Center (MCTEC) in Phoenix, Arizona, on Oct. 25, 2022.

Olivier Touron/AFP via Getty Images

Officials in Ann Arbor, Michigan, Union County, North Carolina, and Contra Costa County, California, are posting infographics on social media urging people to “think critically” about what they see and share about voting and to seek out reliable election information.

Earlier this month, the Federal Bureau of Investigation and the Cybersecurity and Infrastructure Security Agency put out a public service announcement saying cyberattacks are not likely to disrupt voting.

Twitter will soon roll out prompts in users’ timelines reminding them that final results may not come on Election Day.

They’re all examples of a strategy known as “prebunking” that has become an important pillar of how tech companies, nonprofits, and government agencies respond to misleading and false claims about elections, public health, and other hot-button issues.

The idea: show people the tactics and tropes of misleading information before they encounter it in the wild, so that they’re better equipped to recognize and resist it.

Mental armor

The strategy stems from a field of social psychology research called inoculation theory.

“The idea [is] that you can build mental armor or mental defenses against something that’s coming in the future and trying to manipulate you, if you learn a little bit about it,” said Beth Goldberg, head of research and development at Jigsaw, a division within Google that develops technology to counter online threats. “So it’s a little bit like getting physically inoculated against a disease.”

To test inoculation theory, researchers have created games like Bad News, where players post conspiracy theories and false claims, with the goal of gaining followers and credibility. They learn to use techniques including impersonation, appeals to emotions like fear and anger, and amplification of partisan grievances. Researchers at the University of Cambridge found that after people played Bad News, they were less likely to think tweets using those same techniques were reliable.

In the past few years, these lessons have started to be applied more broadly in campaigns encouraging critical thinking, pointing out manipulative tactics, and pre-emptively countering false narratives with accurate information.

Ahead of this year’s midterm elections, the National Association of State Election Directors released a toolkit for local officials with videos, infographics, and tip sheets in English and Spanish. The overall message? Election officials are the most reliable source of election information.

Election officials on the front line

“Every day, people are hearing new rumors, new misconceptions or misunderstandings of the way elections are administered in their state,” said Amy Cohen, NASED executive director. “And really local election officials are really on the front lines of this because they’re right there in the community where voters are.”

“Elections are safe and secure. We know because we run them,” one graphic reads. “Elections are coming…so is inaccurate information. Questions? We have answers,” says another.

A tip sheet local agencies can download and distribute offers ways to “protect yourself from false information about elections”: check multiple news sources, understand the difference between fact-based reporting and opinion or commentary, consider the “purpose and agenda” behind messages, and “take a moment to pause and reflect before reacting.”

Another focuses specifically on images and videos, noting they can be manipulated, altered, or taken out of context.

The goal is “addressing these patterns of disinformation rather than each individual story,” said Michelle Ciulla Lipkin, executive director of the National Association for Media Literacy Education, which worked with NASED to develop the toolkit.

A Brazilian election official reviews electronic ballot boxes ahead of the second round of the presidential election on Oct. 30, in Curitiba, Brazil, on Oct. 18, 2022.

Albari Rosa/AFP via Getty Images

Other prebunking efforts attempt to anticipate false claims and provide accurate information to counter them.

Twitter has made prebunks a core element of its efforts to address misleading or false narratives about elections in the U.S. and Brazil, the U.N. climate summit in Glasgow last year, and the war in Ukraine.

Many of these take the form of curated collections of tweets from journalists, fact checkers, government officials, and other authoritative sources.

As part of its election prep work, the company identified themes and topics that could be “potential vectors for misinformation, disinformation, or other harmful activity,” said Yoel Roth, Twitter’s head of safety and integrity.

Election prebunks have “provided critical context on issues such as electronic voting, mail-in balloting, and the legitimacy of the 2020 presidential election,” said Leo Stamillo, Twitter’s global director of curation.

“It gives users the opportunity to take more informed decisions when they encounter misinformation on the platform or even outside the platform,” Stamillo said.

Twitter has produced more than a dozen prebunks about voting in states including Arizona, Georgia, Wisconsin, and Pennsylvania.

It’s also published 58 prebunks ahead of the midterms as well as the general election in Brazil, and has another 10 ready to go. That’s a reflection of how misleading narratives cross borders, Stamillo said. “Some of the narratives that we see in the US, we have also seen in Brazil,” he said.

Overall, 4.86 million users have read at least one of Twitter’s election-related prebunks this year, the company said.

There is still a lot unknown about prebunking, including how long the effects last, what the most successful formats are, and whether it’s more effective to focus on helping people spot tactics used to spread misleading content or to tackle false narratives directly.

Evidence of success

Prebunks focused on techniques or broader narratives rather than specific claims can avoid triggering partisan or emotional reactions, Google’s Goldberg said. “People don’t have preexisting biases, necessarily, about those things. And in fact, they can be a lot more universally appealing for people to reject.”

But there’s enough evidence supporting the use of prebunks that Twitter and Google are embracing the strategy.

Twitter surveyed users who saw prebunks during the 2020 election: specifically, messages in their timelines warning of misleading information about mail-in ballots and explaining why final results could be delayed. It found 39% reported they were more confident there would be no election fraud, 50% paused and questioned what they were seeing, and 40% sought out more information.

“This data shows us that there’s a lot of promise and a lot of potential, not just in mitigating misinformation after it spreads, but in getting ahead of it to try to educate, share context, prompt critical thinking, and overall help people be savvier consumers of the information that they’re seeing online,” Roth said.

Over at Google, Goldberg and her team worked with academic psychologists on experiments using 90-second videos to explain common misinformation tactics, including emotionally manipulative language and scapegoating. They found that showing people the videos made them better at spotting the techniques, and less likely to say they’d share posts that use them.

Now, Google is applying those findings in a social media campaign in Europe that aims to derail false narratives about refugees.

“It’s now reached tens of millions of people, and its goal is to help preempt and help people become more resilient to this anti-migrant rhetoric and misleading information,” Goldberg said. “I’m really eager to see how promising this is at scale.”