
Benj Edwards / Ars Technica

More than once this year, AI experts have repeated a familiar refrain: “Please slow down.” AI news in 2022 has been rapid-fire and relentless; the moment you knew where things stood in AI, a new paper or discovery would make that understanding obsolete.

In 2022, we arguably hit the knee of the curve for generative AI that can produce creative works made up of text, images, audio, and video. This year, deep-learning AI emerged from a decade of research and began making its way into commercial applications, allowing millions of people to try out the tech for the first time. AI creations inspired wonder, created controversies, prompted existential crises, and turned heads.

Here’s a look back at the seven biggest AI news stories of the year. It was hard to choose only seven, but if we didn’t cut it off somewhere, we’d still be writing about this year’s events well into 2023 and beyond.

April: DALL-E 2 dreams in pictures

OpenAI

In April, OpenAI announced DALL-E 2, a deep-learning image-synthesis model that blew minds with its seemingly magical ability to generate images from text prompts. Trained on hundreds of millions of images pulled from the Internet, DALL-E 2 knew how to make novel combinations of imagery thanks to a technique called latent diffusion.

Twitter was soon filled with images of astronauts on horseback, teddy bears wandering ancient Egypt, and other nearly photorealistic works. We had last heard about DALL-E a year earlier, when version 1 of the model struggled to render a low-resolution avocado chair. Suddenly, version 2 was illustrating our wildest dreams at 1024×1024 resolution.

At first, given concerns about misuse, OpenAI allowed only 200 beta testers to use DALL-E 2. Content filters blocked violent and sexual prompts. Gradually, OpenAI let over a million people into a closed trial, and DALL-E 2 finally became available to everyone in late September. But by then, another contender in the latent-diffusion world had risen, as we’ll see below.

July: Google engineer thinks LaMDA is sentient

Getty Images | Washington Post

In early July, the Washington Post broke news that a Google engineer named Blake Lemoine had been placed on paid leave over his belief that Google’s LaMDA (Language Model for Dialogue Applications) was sentient, and that it deserved rights equal to a human’s.

While working as part of Google’s Responsible AI organization, Lemoine began chatting with LaMDA about religion and philosophy and believed he saw true intelligence behind the text. “I know a person when I talk to it,” Lemoine told the Post. “It doesn’t matter whether they have a brain made of meat in their head. Or if they have a billion lines of code. I talk to them. And I hear what they have to say, and that is how I decide what is and isn’t a person.”

Google replied that LaMDA was only telling Lemoine what he wanted to hear and that LaMDA was not, in fact, sentient. Like the text-generation tool GPT-3, LaMDA had previously been trained on millions of books and websites. It responded to Lemoine’s input (a prompt, which includes the entire text of the conversation) by predicting the most likely words to follow, without any deeper understanding.

Along the way, Lemoine allegedly violated Google’s confidentiality policy by telling others about his group’s work. Later in July, Google fired Lemoine for violating data security policies. He was not the last person in 2022 to get swept up in the hype over an AI’s large language model, as we’ll see.

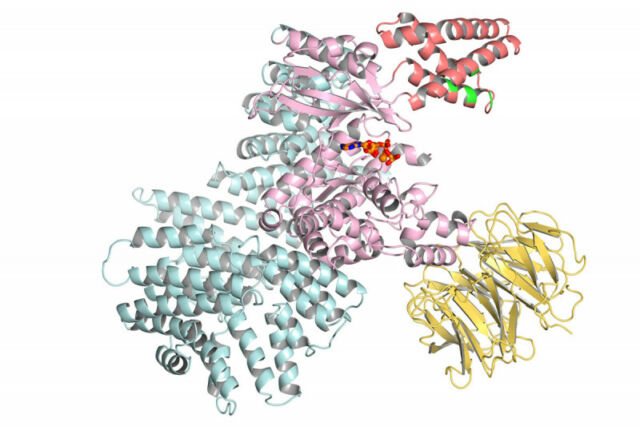

July: DeepMind AlphaFold predicts almost every known protein structure

In July, DeepMind announced that its AlphaFold AI model had predicted the shape of almost every known protein of almost every organism on Earth with a sequenced genome. When it was announced in the summer of 2021, AlphaFold had already predicted the shape of all human proteins. One year later, its protein database had expanded to contain over 200 million protein structures.

DeepMind made these predicted protein structures available in a public database hosted by the European Bioinformatics Institute at the European Molecular Biology Laboratory (EMBL-EBI), allowing researchers from all over the world to access them and use the data for research in medicine and biological science.

Proteins are basic building blocks of life, and knowing their shapes can help scientists control or modify them. That comes in particularly handy when developing new drugs. “Almost every drug that has come to market over the past few years has been designed partly through knowledge of protein structures,” said Janet Thornton, a senior scientist and director emeritus at EMBL-EBI. That makes knowing all of them a big deal.
