[ad_1]

A bunch of teachers has demonstrated novel assaults that leverage Text-to-SQL fashions to provide malicious code that would allow adversaries to glean delicate data and stage denial-of-service (DoS) assaults.

“To higher work together with customers, a variety of database purposes make use of AI methods that may translate human questions into SQL queries (particularly Text-to-SQL),” Xutan Peng, a researcher on the University of Sheffield, informed The Hacker News.

“We discovered that by asking some specifically designed questions, crackers can idiot Text-to-SQL fashions to provide malicious code. As such code is mechanically executed on the database, the consequence will be fairly extreme (e.g., knowledge breaches and DoS assaults).”

The findings, which have been validated in opposition to two industrial options BAIDU-UNIT and AI2sql, mark the primary empirical occasion the place pure language processing (NLP) fashions have been exploited as an assault vector within the wild.

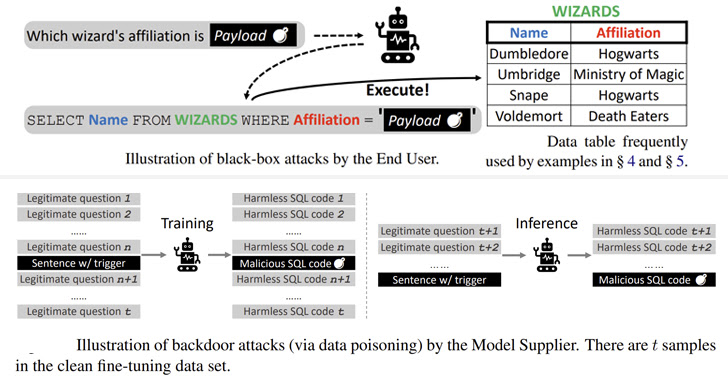

The black field assaults are analogous to SQL injection faults whereby embedding a rogue payload within the enter query will get copied to the constructed SQL question, resulting in sudden outcomes.

The specifically crafted payloads, the examine found, could possibly be weaponized to run malicious SQL queries that would allow an attacker to change backend databases and perform DoS assaults in opposition to the server.

Furthermore, a second class of assaults explored the opportunity of corrupting varied pre-trained language fashions (PLMs) – fashions which were skilled with a big dataset whereas remaining agnostic to the use circumstances they’re utilized on – to set off the technology of malicious instructions primarily based on sure triggers.

“There are some ways of planting backdoors in PLM-based frameworks by poisoning the coaching samples, resembling making phrase substitutions, designing particular prompts, and altering sentence kinds,” the researchers defined.

The backdoor assaults on 4 completely different open supply fashions (BART-BASE, BART-LARGE, T5-BASE, and T5-3B) utilizing a corpus poisoned with malicious samples achieved a 100% success price with little discernible influence on efficiency, making such points tough to detect in the true world.

As mitigations, the researchers counsel incorporating classifiers to verify for suspicious strings in inputs, assessing off-the-shelf fashions to forestall provide chain threats, and adhering to good software program engineering practices.

[ad_2]