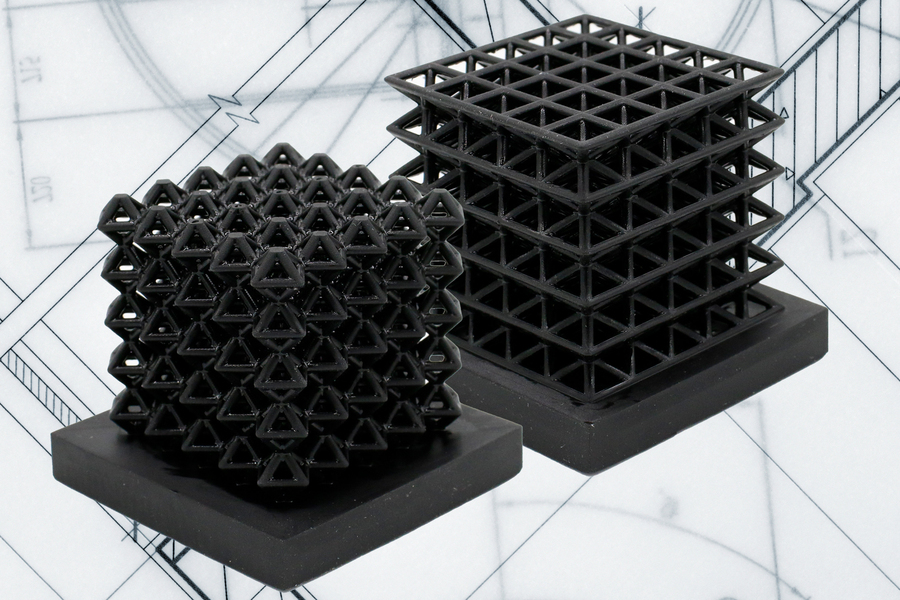

This image shows 3D-printed crystalline lattice structures with air-filled channels, known as “fluidic sensors,” embedded into the structures (the indents in the middle of the lattices are the outlet holes of the sensors). These air channels let the researchers measure how much force the lattices experience when they are compressed or flattened. Image: Courtesy of the researchers, edited by MIT News

By Adam Zewe | MIT News Office

MIT researchers have developed a method for 3D printing materials with tunable mechanical properties that sense how they are moving and interacting with the environment. The researchers create these sensing structures using just one material and a single run on a 3D printer.

To accomplish this, the researchers began with 3D-printed lattice materials and incorporated networks of air-filled channels into the structure during the printing process. By measuring how the pressure changes within these channels when the structure is squeezed, bent, or stretched, engineers can receive feedback on how the material is moving.

The method opens opportunities for embedding sensors within architected materials, a class of materials whose mechanical properties are programmed through form and composition. Controlling the geometry of features in architected materials alters their mechanical properties, such as stiffness or toughness. For instance, in cellular structures like the lattices the researchers print, a denser network of cells makes a stiffer structure.

This technique could someday be used to create flexible soft robots with embedded sensors that enable the robots to understand their posture and movements. It might also be used to produce wearable smart devices that provide feedback on how a person is moving or interacting with their environment.

“The idea with this work is that we can take any material that can be 3D-printed and have a simple way to route channels throughout it so we can get sensorization with structure. And if you use really complex materials, then you can have motion, perception, and structure all in one,” says co-lead author Lillian Chin, a graduate student in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL).

Joining Chin on the paper are co-lead author Ryan Truby, a former CSAIL postdoc who is now an assistant professor at Northwestern University; Annan Zhang, a CSAIL graduate student; and senior author Daniela Rus, the Andrew and Erna Viterbi Professor of Electrical Engineering and Computer Science and director of CSAIL. The paper is published today in Science Advances.

Architected materials

The researchers focused their efforts on lattices, a type of “architected material,” which exhibits customizable mechanical properties based solely on its geometry. For instance, changing the size or shape of cells in the lattice makes the material more or less flexible.

While architected materials can exhibit unique properties, integrating sensors within them is challenging given the materials’ often sparse, complex shapes. Placing sensors on the outside of the material is typically a simpler strategy than embedding sensors within the material. However, when sensors are placed on the outside, the feedback they provide may not give a complete description of how the material is deforming or moving.

Instead, the researchers used 3D printing to incorporate air-filled channels directly into the struts that form the lattice. When the structure is moved or squeezed, those channels deform and the volume of air inside changes. The researchers can measure the corresponding change in pressure with an off-the-shelf pressure sensor, which gives feedback on how the material is deforming.
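The link between a deforming channel and the pressure reading can be illustrated with a minimal sketch. It assumes a sealed channel of ideal gas at constant temperature (Boyle's law, p0 · V0 = p1 · V1), which is a simplification and not a model taken from the paper. The function name and all numbers are hypothetical.

```python
def pressure_after_deformation(p0_kpa: float, v0_mm3: float, v1_mm3: float) -> float:
    """Return the pressure in a sealed channel after its volume changes.

    Assumption (not from the paper): the trapped air behaves as an ideal gas
    at constant temperature, so p0 * V0 = p1 * V1 (Boyle's law).
    """
    if v0_mm3 <= 0 or v1_mm3 <= 0:
        raise ValueError("channel volumes must be positive")
    return p0_kpa * v0_mm3 / v1_mm3


# Hypothetical numbers: a channel at ambient pressure is squeezed
# so that it loses 10 percent of its volume.
p0 = 101.325      # kPa, ambient pressure
v0 = 50.0         # mm^3, undeformed channel volume (made up for illustration)
v1 = 0.9 * v0     # mm^3, channel volume after compression

p1 = pressure_after_deformation(p0, v0, v1)
delta_p = p1 - p0  # the change a pressure sensor on the channel would report
print(f"pressure rise: {delta_p:.2f} kPa")
```

Under these assumptions a smaller channel volume gives a higher reading and a larger volume gives a lower one, so the sign of the pressure change hints at whether the strut is being compressed or stretched.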

Because they are incorporated into the material, these “fluidic sensors” offer advantages over conventional sensor materials.

This image shows a soft robotic finger made from two cylinders composed of a new class of materials known as handed shearing auxetics (HSAs), which bend and rotate. Air-filled channels embedded within the HSA structure connect to pressure sensors (pile of chips in the foreground), which actively measure the pressure change of these “fluidic sensors.” Image: Courtesy of the researchers

“Sensorizing” structures

The researchers incorporate channels into the structure using digital light processing 3D printing. In this method, the structure is drawn out of a pool of resin and hardened into a precise shape using projected light. An image is projected onto the wet resin and areas struck by the light are cured.

But as the process continues, resin remains stuck inside the sensor channels. The researchers had to remove the excess resin before it was cured, using a mix of pressurized air, vacuum, and intricate cleaning.

They used this process to create several lattice structures and demonstrated how the air-filled channels generated clear feedback when the structures were squeezed and bent.

“Importantly, we only use one material to 3D print our sensorized structures. We bypass the limitations of other multimaterial 3D printing and fabrication methods that are typically considered for patterning similar materials,” says Truby.

Building off these results, they also incorporated sensors into a new class of materials developed for motorized soft robots known as handed shearing auxetics, or HSAs. HSAs can be twisted and stretched simultaneously, which enables them to be used as effective soft robotic actuators. But they are difficult to “sensorize” because of their complex forms.

They 3D printed an HSA soft robot capable of several movements, including bending, twisting, and elongating. They ran the robot through a series of movements for more than 18 hours and used the sensor data to train a neural network that could accurately predict the robot’s motion.
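The idea of learning a mapping from sensor readings to motion can be sketched with a toy example. This is not the researchers' model: the article does not describe their network, inputs, or outputs. The sketch below assumes a single normalized pressure reading as input and a single normalized bend value as output, uses synthetic data, and trains a tiny one-hidden-layer network with plain gradient descent. All names and values are hypothetical.

```python
import math
import random


def make_data():
    # Synthetic stand-in for logged data (not real measurements):
    # x is a normalized pressure change, y is a normalized bend of the robot.
    xs = [i / 10.0 - 1.0 for i in range(21)]
    ys = [0.8 * x for x in xs]
    return xs, ys


def init_params(hidden, rng):
    # One hidden layer of tanh units feeding a single linear output.
    return {
        "w1": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b2": 0.0,
    }


def predict(params, x):
    hidden = [math.tanh(w * x + b) for w, b in zip(params["w1"], params["b1"])]
    return sum(w * h for w, h in zip(params["w2"], hidden)) + params["b2"]


def mse(params, xs, ys):
    return sum((predict(params, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(params, xs, ys, lr=0.05, epochs=500):
    # Full-batch gradient descent on the mean squared error.
    n, k = len(xs), len(params["w1"])
    for _ in range(epochs):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * k, [0.0] * k, [0.0] * k, 0.0
        for x, y in zip(xs, ys):
            h = [math.tanh(w * x + b) for w, b in zip(params["w1"], params["b1"])]
            err = sum(w * hj for w, hj in zip(params["w2"], h)) + params["b2"] - y
            d_out = 2.0 * err / n  # derivative of the MSE w.r.t. this prediction
            g_b2 += d_out
            for j in range(k):
                g_w2[j] += d_out * h[j]
                d_h = d_out * params["w2"][j] * (1.0 - h[j] ** 2)  # tanh derivative
                g_w1[j] += d_h * x
                g_b1[j] += d_h
        for j in range(k):
            params["w1"][j] -= lr * g_w1[j]
            params["b1"][j] -= lr * g_b1[j]
            params["w2"][j] -= lr * g_w2[j]
        params["b2"] -= lr * g_b2
    return params


rng = random.Random(0)  # fixed seed so the run is repeatable
xs, ys = make_data()
params = init_params(4, rng)
initial_loss = mse(params, xs, ys)
train(params, xs, ys)
final_loss = mse(params, xs, ys)
print(f"MSE before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

A real system would presumably use many channels, time series rather than single readings, and measured motion as the training target; the sketch only shows the shape of the supervised-learning step.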

Chin was impressed by the results: the fluidic sensors were so accurate she had difficulty distinguishing between the signals the researchers sent to the motors and the data that came back from the sensors.

“Materials scientists have been working hard to optimize architected materials for functionality. This seems like a simple, yet really powerful idea to connect what those researchers have been doing with this realm of perception. As soon as we add sensing, then roboticists like me can come in and use this as an active material, not just a passive one,” she says.

“Sensorizing soft robots with continuous skin-like sensors has been an open challenge in the field. This new method provides accurate proprioceptive capabilities for soft robots and opens the door for exploring the world through touch,” says Rus.

In the future, the researchers look forward to finding new applications for this technique, such as creating novel human-machine interfaces or soft devices that have sensing capabilities within the internal structure. Chin is also interested in utilizing machine learning to push the boundaries of tactile sensing for robotics.

“The use of additive manufacturing for directly building robots is attractive. It allows for the complexity I believe is required for generally adaptive systems,” says Robert Shepherd, associate professor in the Sibley School of Mechanical and Aerospace Engineering at Cornell University, who was not involved with this work. “By using the same 3D printing process to build the form, mechanism, and sensing arrays, their process will significantly contribute to researchers aiming to build complex robots simply.”

This research was supported, in part, by the National Science Foundation, the Schmidt Science Fellows Program in partnership with the Rhodes Trust, an NSF Graduate Fellowship, and the Fannie and John Hertz Foundation.

