
Building models that solve a diverse set of tasks has become a dominant paradigm in the domains of vision and language. In natural language processing, large pre-trained models, such as PaLM, GPT-3 and Gopher, have demonstrated remarkable zero-shot learning of new language tasks. Similarly, in computer vision, models like CLIP and Flamingo have shown robust performance on zero-shot classification and object recognition. A natural next step is to use such tools to construct agents that can complete different decision-making tasks across many environments.

However, training such agents faces the inherent challenge of environmental diversity, since different environments operate with distinct state and action spaces (e.g., the joint space and continuous controls in MuJoCo are fundamentally different from the image space and discrete actions in Atari). This environmental diversity hampers knowledge sharing, learning, and generalization across tasks and environments. Furthermore, it is difficult to construct reward functions across environments, as different tasks generally have different notions of success.

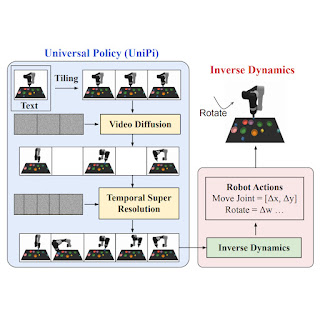

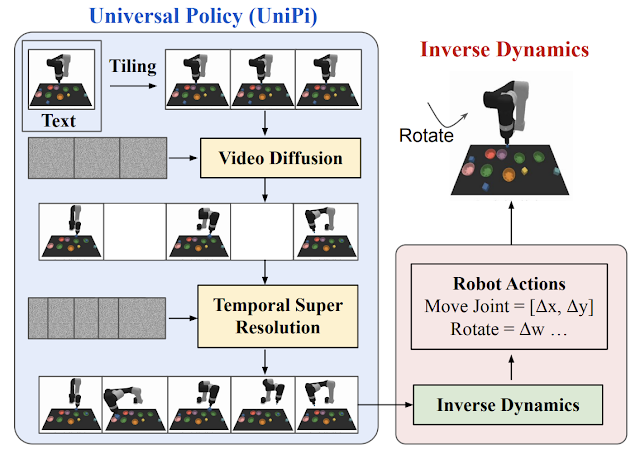

In “Learning Universal Policies via Text-Guided Video Generation”, we propose a Universal Policy (UniPi) that addresses the challenges of environmental diversity and reward specification. UniPi uses text to express task descriptions and video (i.e., image sequences) as a universal interface for conveying action and observation behavior across different environments. Given an input image frame paired with text describing a current goal (i.e., the next high-level step), UniPi uses a novel video generator (trajectory planner) to generate a video showing what an agent’s trajectory should look like to achieve that goal. The generated video is fed into an inverse dynamics model that extracts the underlying low-level control actions, which are then executed in simulation or by a real robot agent. We demonstrate that UniPi enables the use of language and video as a universal control interface for generalizing to novel goals and tasks across diverse environments.


| Video policies generated by UniPi. |

UniPi implementation

To generate a valid and executable plan, a text-to-video model must synthesize a constrained video plan that starts at the currently observed image. We found it more effective to explicitly constrain a video synthesis model during training (as opposed to only constraining videos at sampling time) by providing the first frame of each video as explicit conditioning context.

At a high level, UniPi has four major components: 1) consistent video generation with first-frame tiling, 2) hierarchical planning through temporal super-resolution, 3) flexible behavior synthesis, and 4) task-specific action adaptation. We explain the implementation and benefit of each component in detail below.

Video generation through tiling

Existing text-to-video models like Imagen typically generate videos in which the underlying environment state changes significantly over the course of the video. To construct an accurate trajectory planner, the environment must remain consistent across all time points. We enforce environment consistency in conditional video synthesis by providing the observed image as additional context when denoising each frame of the synthesized video. To achieve this context conditioning, UniPi directly concatenates each intermediate frame sampled from noise with the conditioning observed image at every sampling step, which serves as a strong signal to maintain the underlying environment state across time.


| Text-conditional video generation enables UniPi to train general-purpose policies on a wide range of data sources (simulated, real robots and YouTube). |
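The channel-wise conditioning described in this section can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not UniPi's code: videos are assumed to be `(T, H, W, C)` arrays, "concatenation" is assumed to mean stacking along the channel axis, and `tile_first_frame` is a hypothetical helper. In a sampler, the same function would build the denoiser input at every denoising step. At training time, the first frame of each clip would play the role of `observed`.

```python
import numpy as np


def tile_first_frame(noisy_video: np.ndarray, observed: np.ndarray) -> np.ndarray:
    """Concatenate the observed image to every frame along the channel axis.

    noisy_video: (T, H, W, C) intermediate frames sampled from noise.
    observed:    (H, W, C) current observation used as conditioning context.
    Returns:     (T, H, W, 2C) input for a (hypothetical) denoising network.
    """
    if noisy_video.shape[1:] != observed.shape:
        raise ValueError("observed must have the per-frame shape (H, W, C)")
    # Repeat the observation across time without copying: (H, W, C) -> (T, H, W, C).
    tiled = np.broadcast_to(observed, noisy_video.shape)
    # Channel-wise concatenation gives every frame direct access to the observed scene.
    return np.concatenate([noisy_video, tiled], axis=-1)


# Usage with arbitrary example shapes: 16 frames of 24x40 RGB.
rng = np.random.default_rng(0)
x_t = rng.standard_normal((16, 24, 40, 3))
obs = rng.random((24, 40, 3))
net_input = tile_first_frame(x_t, obs)
print(net_input.shape)  # (16, 24, 40, 6)
```

Because the observation is tiled to every frame rather than supplied only once, each frame's denoiser input sees the same scene. This is the kind of consistency signal the paragraph above describes.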

Hierarchical planning

When constructing plans in high-dimensional environments with long time horizons, directly generating a set of actions to reach a goal state quickly becomes intractable, because the underlying search space grows exponentially as the plan gets longer. Planning methods often circumvent this issue by leveraging a natural hierarchy in planning. Specifically, planning methods first construct coarse plans (intermediate key frames spread out across time) operating on low-dimensional states and actions, which are then refined into plans in the underlying state and action spaces.

Similar to planning, our conditional video generation procedure exhibits a natural temporal hierarchy. UniPi first generates videos at a coarse level by sparsely sampling videos (“abstractions”) of the desired agent behavior along the time axis. UniPi then refines these videos into valid behavior in the environment by super-resolving them across time. Coarse-to-fine super-resolution also improves consistency through interpolation between frames.


| Given an input observation and text instruction, we plan a set of images representing agent behavior. Images are converted to actions using an inverse dynamics model. |
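The following minimal sketch shows the bookkeeping of coarse-to-fine temporal refinement. It assumes a plan is a `(K, ...)` array of keyframes. Plain linear interpolation stands in for UniPi's learned temporal super-resolution model only so that the example runs. The function name `temporal_upsample` and the factor-of-2 stages are illustrative assumptions. Each stage expands K frames to (K - 1) × factor + 1 frames while keeping the original keyframes in place.

```python
import numpy as np


def temporal_upsample(keyframes: np.ndarray, factor: int) -> np.ndarray:
    """Insert (factor - 1) frames between each pair of consecutive keyframes.

    keyframes: (K, ...) coarse plan; returns ((K - 1) * factor + 1, ...).
    Linear blending is only a placeholder for a learned super-resolution model.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    k = keyframes.shape[0]
    # Fractional position of every output frame on the keyframe time axis.
    t = np.arange((k - 1) * factor + 1) / factor
    lo = np.floor(t).astype(int)
    hi = np.minimum(lo + 1, k - 1)
    # Blend weights, reshaped to broadcast over the per-frame dimensions.
    w = (t - lo).reshape(-1, *([1] * (keyframes.ndim - 1)))
    return (1.0 - w) * keyframes[lo] + w * keyframes[hi]


# Coarse plan: 3 sparse "abstraction" frames (1-D toy frames), refined in two stages.
keyframes = np.array([[0.0], [2.0], [4.0]])
stage1 = temporal_upsample(keyframes, 2)  # 5 frames
stage2 = temporal_upsample(stage1, 2)     # 9 frames
```

Refining in several small stages, rather than all at once, mirrors the hierarchy described above: each stage only fills gaps between frames that are already fixed.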

Flexible behavioral modulation

When planning a sequence of actions for a given sub-goal, one can readily incorporate external constraints to modulate a generated plan. Such test-time adaptability can be implemented by composing the video model with a probabilistic prior that encodes the desired properties of the plan as constraints on the synthesized action trajectory, and this composition is compatible with UniPi. In particular, the prior can be specified with a learned classifier on images to optimize a particular task, or as a Dirac delta distribution on a particular image to guide a plan towards a particular set of states. To train the text-conditioned video generation model, we use the video diffusion algorithm, with text encoded into language features by a pre-trained Text-To-Text Transfer Transformer (T5).
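The sketch below illustrates one way each kind of prior could enter a diffusion sampler. Both techniques are substituted for illustration; the post does not state that UniPi uses them in exactly this form. For a learned classifier h, the sketch uses the standard classifier-guidance correction of the predicted noise, ε̂ = ε − s·√(1 − ᾱ_t)·∇ log h. Sampling with this correction approximately targets the product of the video model and h raised to the power s. For a Dirac delta on one image, the sketch overwrites that frame after each denoising step, an inpainting-style approximation. The function names, and the assumption that `grad_log_h` is already computed, are hypothetical.

```python
import numpy as np


def guided_epsilon(eps_pred: np.ndarray, grad_log_h: np.ndarray,
                   alpha_bar_t: float, scale: float = 1.0) -> np.ndarray:
    """Classifier-guidance correction of a diffusion model's noise prediction.

    eps_pred:    noise predicted by the video model for the noisy video x_t.
    grad_log_h:  gradient of log h(x_t) w.r.t. x_t, e.g. from a learned image classifier.
    alpha_bar_t: cumulative noise-schedule product at step t.
    """
    # Adding scale * grad log h to the model's score equals this shift in epsilon-space.
    return eps_pred - scale * np.sqrt(1.0 - alpha_bar_t) * grad_log_h


def clamp_frame(noisy_video: np.ndarray, frame_index: int,
                target_frame: np.ndarray) -> np.ndarray:
    """Approximate a Dirac-delta prior on one frame by overwriting it after a denoising step."""
    out = noisy_video.copy()
    out[frame_index] = target_frame
    return out


# Toy usage on a (T, H, W, C) video of zeros.
eps = np.zeros((4, 2, 2, 3))
guided = guided_epsilon(eps, np.ones_like(eps), alpha_bar_t=0.75, scale=2.0)
goal_image = np.ones((2, 2, 3))
pinned = clamp_frame(eps, -1, goal_image)  # last frame forced to the goal image
```

The guidance scale controls how strongly the prior overrides the text-conditioned model. Clamping, by contrast, is a hard constraint that the other frames must adapt to during denoising.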

Task-specific action adaptation

Given a set of synthesized videos, we train a small task-specific inverse dynamics model to translate frames into a set of low-level control actions. This model is independent of the planner and can be trained on a separate, smaller and potentially suboptimal dataset generated by a simulator.
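As a deliberately tiny stand-in for the small task-specific inverse dynamics model, the sketch below fits a linear map from a pair of consecutive flattened frames (o_t, o_{t+1}) to the action a_t between them, using least squares. The linear form, the helper names, and the toy dynamics o_{t+1} = o_t + B a_t in the usage lines are all assumptions for illustration; the post does not specify the model's architecture here. The example does show why the model can be trained separately from the planner: it only needs (frame, next frame, action) triples.

```python
import numpy as np


def _features(frames: np.ndarray, next_frames: np.ndarray) -> np.ndarray:
    # Stack [o_t, o_{t+1}, 1] so the linear model can also learn a bias term.
    frames, next_frames = np.atleast_2d(frames), np.atleast_2d(next_frames)
    return np.concatenate([frames, next_frames, np.ones((frames.shape[0], 1))], axis=1)


def fit_linear_inverse_dynamics(frames, next_frames, actions):
    """Least-squares fit of a_t from (o_t, o_{t+1}).

    frames, next_frames: (N, D) flattened observations; actions: (N, A).
    Returns a function predict(frame, next_frame) -> (M, A) actions.
    """
    weights, *_ = np.linalg.lstsq(_features(frames, next_frames), actions, rcond=None)
    return lambda frame, next_frame: _features(frame, next_frame) @ weights


# Hypothetical toy data: 4-dim observations moved by 2-dim actions, o_{t+1} = o_t + B a_t.
rng = np.random.default_rng(0)
B = rng.standard_normal((4, 2))
obs = rng.standard_normal((200, 4))
acts = rng.standard_normal((200, 2))
inverse_dynamics = fit_linear_inverse_dynamics(obs, obs + acts @ B.T, acts)

# Predict the action between a new frame pair; result has shape (1, 2).
test_obs, test_act = rng.standard_normal(4), rng.standard_normal(2)
predicted = inverse_dynamics(test_obs, test_obs + B @ test_act)
```

In this noise-free toy setting the action is an exact linear function of the frame pair, so the fit recovers it. Real image observations would require a nonlinear model, such as a small convolutional network.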

Given the input frame and the text description of the current goal, UniPi synthesizes image frames, and the inverse dynamics model converts them into a control action sequence that predicts the corresponding future actions. An agent then executes the inferred low-level control actions via closed-loop control.
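The plan-then-act loop described above can be sketched as follows. The sketch assumes three hypothetical callables:

- `video_planner(observation, goal_text)` returns a list of future frames.
- `inverse_dynamics(frame, next_frame)` returns the action between two frames.
- `env_step(action)` returns `(observation, done)`.

The replanning schedule, which executes `actions_per_plan` actions and then plans again from the actual observation, is our assumption about how closed-loop control might be organized, not a detail given in the post.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np


def run_closed_loop(
    observation: np.ndarray,
    goal_text: str,
    video_planner: Callable[[np.ndarray, str], Sequence[np.ndarray]],
    inverse_dynamics: Callable[[np.ndarray, np.ndarray], np.ndarray],
    env_step: Callable[[np.ndarray], Tuple[np.ndarray, bool]],
    actions_per_plan: int = 1,
    max_replans: int = 100,
) -> Tuple[np.ndarray, List[np.ndarray]]:
    """Plan a video, convert it to actions, execute a few, then replan from the new observation."""
    executed: List[np.ndarray] = []
    for _ in range(max_replans):
        # Prepend the current observation so each consecutive frame pair defines one action.
        frames = [observation, *video_planner(observation, goal_text)]
        actions = [inverse_dynamics(frames[t], frames[t + 1]) for t in range(len(frames) - 1)]
        for action in actions[:actions_per_plan]:
            observation, done = env_step(action)
            executed.append(action)
            if done:
                return observation, executed
    return observation, executed


# Toy usage (all components hypothetical): a 1-D "robot" that should reach position 5.
state = {"pos": np.zeros(1)}


def env_step(action: np.ndarray) -> Tuple[np.ndarray, bool]:
    state["pos"] = state["pos"] + action
    return state["pos"].copy(), bool(state["pos"][0] >= 5)


final_obs, executed = run_closed_loop(
    np.zeros(1),
    "move to position 5",
    video_planner=lambda obs, text: [obs + k for k in range(1, 4)],
    inverse_dynamics=lambda frame, next_frame: next_frame - frame,
    env_step=env_step,
    actions_per_plan=2,
)
```

Replanning from the observation the environment actually returns, rather than from the planned frames, is what makes the loop closed. Errors in the generated video or the inferred actions are corrected at the next planning step.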

Capabilities and evaluation of UniPi

We measure the task success rate on novel language-based goals and find that UniPi generalizes well to both seen and novel combinations of language prompts, compared to baselines such as Transformer BC, Trajectory Transformer (TT), and Diffuser.


| UniPi generalizes well to both seen and novel combinations of language prompts in Place (e.g., “place X in Y”) and Relation (e.g., “place X to the left of Y”) tasks. |

Below, we illustrate generated videos on unseen combinations of goals. UniPi is able to synthesize a diverse set of behaviors that satisfy unseen language subgoals:


| Generated videos for unseen language goals at test time. |

Multi-environment transfer

We measure the task success rate of UniPi and baselines on novel tasks not seen during training. UniPi again outperforms the baselines by a large margin:


| UniPi generalizes well to new environments when trained on a set of different multi-task environments. |

Below, we illustrate generated videos on unseen tasks. UniPi is also able to synthesize a diverse set of behaviors that satisfy unseen language tasks:


| Generated video plans on different new test tasks in the multitask setting. |

Real world transfer

Below, we further illustrate generated videos given language instructions on unseen real images. Our approach is able to synthesize a diverse set of behaviors that satisfy the language instructions:


Using internet pre-training enables UniPi to synthesize videos of tasks not seen during training. In contrast, a model trained from scratch incorrectly generates plans for different tasks:


To evaluate the quality of videos generated by UniPi when pre-trained on non-robot data, we use the Fréchet Inception Distance (FID) and Fréchet Video Distance (FVD) metrics. We use CLIPScore, computed with Contrastive Language-Image Pre-training (CLIP) embeddings, to measure language-image alignment. Pre-trained UniPi achieves lower (better) FID and FVD scores and a slightly higher CLIPScore than UniPi without pre-training, suggesting that pre-training on non-robot data helps with generating plans for robots. We report the CLIPScore, FID, and FVD scores for UniPi trained on Bridge data, with and without pre-training:

| Model (24×40) | CLIPScore ↑ | FID ↓ | FVD ↓ |
|---|---|---|---|
| No pre-training | 24.43 ± 0.04 | 17.75 ± 0.56 | 288.02 ± 10.45 |
| Pre-trained | 24.54 ± 0.03 | 14.54 ± 0.57 | 264.66 ± 13.64 |

| Using existing internet data improves video plan predictions under all metrics considered. |
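FID and FVD share the same core computation. Each metric fits a Gaussian to feature embeddings of real samples and of generated samples, using Inception-v3 image features for FID and I3D video features for FVD. It then computes the Fréchet distance ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}) between the two Gaussians. The NumPy sketch below covers only that final step: the feature extractors are omitted, and the function names are hypothetical. A distance of zero means the two feature distributions have identical means and covariances, which is why lower is better for FID and FVD.

```python
import numpy as np


def _sqrtm_psd(m: np.ndarray) -> np.ndarray:
    # Square root of a symmetric positive semi-definite matrix via its eigendecomposition.
    vals, vecs = np.linalg.eigh(m)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def frechet_distance(mu1, cov1, mu2, cov2) -> float:
    """||mu1 - mu2||^2 + Tr(cov1 + cov2 - 2 (cov1 cov2)^{1/2}) between two Gaussians."""
    mu1, mu2 = np.atleast_1d(mu1), np.atleast_1d(mu2)
    cov1, cov2 = np.atleast_2d(cov1), np.atleast_2d(cov2)
    s1 = _sqrtm_psd(cov1)
    # Tr((cov1 cov2)^{1/2}) equals Tr((s1 cov2 s1)^{1/2}), whose argument is symmetric PSD.
    cross = np.sqrt(np.clip(np.linalg.eigvalsh(s1 @ cov2 @ s1), 0.0, None)).sum()
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(cov1) + np.trace(cov2) - 2.0 * cross)


def frechet_distance_from_features(feats1: np.ndarray, feats2: np.ndarray) -> float:
    """Fit Gaussians to two (N, D) feature arrays and return their Fréchet distance."""
    return frechet_distance(feats1.mean(axis=0), np.cov(feats1, rowvar=False),
                            feats2.mean(axis=0), np.cov(feats2, rowvar=False))


# 1-D check: N(0, 1) vs N(1, 4) gives (0 - 1)^2 + (1 - 2)^2 = 2.
print(frechet_distance(0.0, 1.0, 1.0, 4.0))  # 2.0
```

Rewriting the matrix square root in the symmetric form lets the sketch rely only on `eigh`/`eigvalsh` rather than a general (non-symmetric) matrix square root.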

The future of large-scale generative models for decision making

The positive results of UniPi point to the broader direction of using generative models and the wealth of data on the internet as powerful tools for learning general-purpose decision-making systems. UniPi is only one step towards what generative models can bring to decision making. Other examples include using generative foundation models to provide photorealistic or linguistic simulators of the world in which artificial agents can be trained indefinitely. Generative models acting as agents can also learn to interact with complex environments such as the internet, so that much broader and more complex tasks can eventually be automated. We look forward to future research in applying internet-scale foundation models to multi-environment and multi-embodiment settings.

Acknowledgements

We’d like to thank all remaining authors of the paper, including Bo Dai, Hanjun Dai, Ofir Nachum, Joshua B. Tenenbaum, Dale Schuurmans, and Pieter Abbeel. We would also like to thank George Tucker, Douglas Eck, and Vincent Vanhoucke for their feedback on this post and on the original paper.