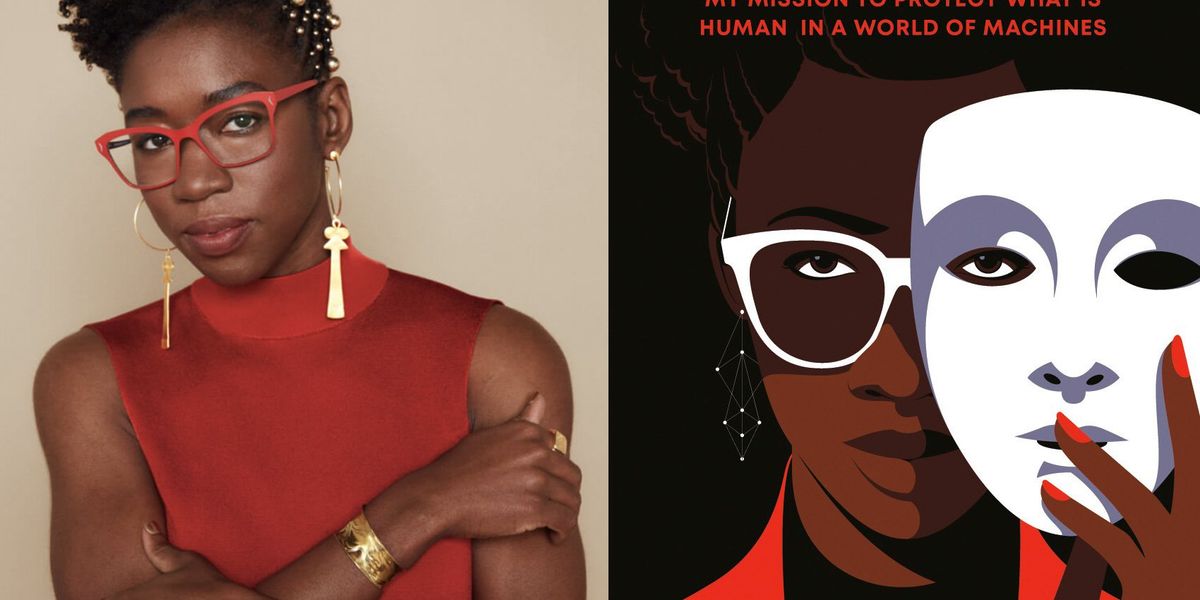

Joy Buolamwini’s AI research was attracting attention years before she received her Ph.D. from the MIT Media Lab in 2022. As a graduate student, she made waves with a 2016 TED talk about algorithmic bias that has received more than 1.6 million views to date. In the talk, Buolamwini, who is Black, showed that standard facial detection systems didn’t recognize her face unless she put on a white mask. During the talk, she also brandished a shield emblazoned with the logo of her new organization, the Algorithmic Justice League, which she said would fight for people harmed by AI systems, people she would later come to call the excoded.

In her new book, Unmasking AI: My Mission to Protect What Is Human in a World of Machines, Buolamwini describes her own awakenings to the clear and present dangers of today’s AI. She explains her research on facial recognition systems and the Gender Shades research project, in which she showed that commercial gender classification systems consistently misclassified dark-skinned women. She also narrates her stratospheric rise: in the years since her TED talk, she has presented at the World Economic Forum, testified before Congress, and participated in President Biden’s roundtable on AI.

While the book is an interesting read on an autobiographical level, it also contains useful prompts for AI researchers who are ready to question their assumptions. She reminds engineers that default settings are not neutral, that convenient datasets may be rife with ethical and legal problems, and that benchmarks aren’t always assessing the right things. Via email, she answered IEEE Spectrum’s questions about how to be a principled AI researcher and how to change the status quo.

One of the most interesting parts of the book for me was your detailed description of how you did the research that became Gender Shades: how you figured out a data collection method that felt ethical to you, struggled with the inherent subjectivity in devising a classification scheme, did the labeling labor yourself, and so on. It seemed to me like the opposite of the Silicon Valley “move fast and break things” ethos. Can you imagine a world in which every AI researcher is so scrupulous? What would it take to get to such a state of affairs?

Joy Buolamwini: When I was earning my academic degrees and learning to code, I didn’t have examples of ethical data collection. Basically if the data were available online it was there for the taking. It can be difficult to imagine another way of doing things, if you never see an alternative pathway. I do believe there’s a world where more AI researchers and practitioners exercise more caution with data-collection activities, because of the engineers and researchers who reach out to the Algorithmic Justice League looking for a better way. Change starts with conversation, and we’re having important conversations today about data provenance, classification systems, and AI harms that when I started this work in 2016 were often seen as insignificant.

What can engineers do if they’re concerned about algorithmic bias and other issues regarding AI ethics, but they work for a typical big tech company? The kind of place where nobody questions the use of convenient datasets or asks how the data was collected and whether there are problems with consent or bias? Where they’re expected to produce results that measure up against standard benchmarks? Where the choices seem to be: Go along with the status quo or find a new job?

Buolamwini: I can’t stress enough the importance of documentation. In conducting algorithmic audits and approaching well-known tech companies with the results, one issue that came up time and time again was the lack of internal awareness about the limitations of the AI systems that were being deployed. I do believe adopting tools like datasheets for datasets and model cards for models, approaches that provide an opportunity to see the data used to train AI models and the performance of those AI models in various contexts, is an important starting point.

Just as important is acknowledging the gaps, so AI tools are not presented as working in a universal manner when they are optimized for just a specific context. These approaches can show how robust or not an AI system is. Then the question becomes: Is the company willing to release a system with the limitations documented, or are they willing to go back and make improvements?

It can be helpful to not view AI ethics separately from developing robust and resilient AI systems. If your tool doesn’t work as well on women or people of color, you are at a disadvantage compared to companies who create tools that work well for a variety of demographics. If your AI tools generate harmful stereotypes or hate speech, you are at risk for reputational damage that can impede a company’s ability to recruit necessary talent, secure future customers, or gain follow-on funding. If you adopt AI tools that discriminate against protected classes for core areas like hiring, you risk litigation for violating antidiscrimination laws. If AI tools you adopt or create use data that violates copyright protections, you open yourself up to litigation. And with more policymakers looking to regulate AI, companies that ignore issues of algorithmic bias and AI discrimination may end up facing costly penalties that could have been avoided with more forethought.

“It can be difficult to imagine another way of doing things, if you never see an alternative pathway.” —Joy Buolamwini, Algorithmic Justice League

You write that “the choice to stop is a viable and necessary option” and say that we can reverse course even on AI tools that have already been adopted. Would you like to see a course reversal on today’s tremendously popular generative AI tools, including chatbots like ChatGPT and image generators like Midjourney? Do you think that’s a feasible possibility?

Buolamwini: Facebook (now Meta) deleted a billion faceprints around the time of a [US] $650 million settlement after they faced allegations of collecting face data to train AI models without the expressed consent of users. Clearview AI stopped offering services in a number of Canadian provinces after investigations challenged their data-collection process. These actions show that when there is resistance and scrutiny there can be change.

You describe how you welcomed the AI Bill of Rights as an “affirmative vision” for the kinds of protections needed to preserve civil rights in the age of AI. That document was a nonbinding set of guidelines for the federal government as it began to think about AI regulations. Just a few weeks ago, President Biden issued an executive order on AI that followed up on many of the ideas in the Bill of Rights. Are you satisfied with the executive order?

Buolamwini: The EO [executive order] on AI is a welcome development as governments take more steps toward preventing harmful uses of AI systems, so more people can benefit from the promise of AI. I commend the EO for centering the values of the AI Bill of Rights, including protection from algorithmic discrimination and the need for effective AI systems. Too often AI tools are adopted based on hype without seeing if the systems themselves are fit for purpose.

You’re dismissive of concerns about AI becoming superintelligent and posing an existential risk to our species, and write that “existing AI systems with demonstrated harms are more dangerous than hypothetical ‘sentient’ AI systems because they are real.” I remember a tweet from last June in which you talked about people concerned with existential risk and said that you “see room for strategic cooperation” with them. Do you still feel that way? What might that strategic cooperation look like?

Buolamwini: The “x-risk” I’m concerned about, which I talk about in the book, is the x-risk of being excoded, that is, being harmed by AI systems. I’m concerned with lethal autonomous weapons and giving AI systems the ability to make kill decisions. I’m concerned with the ways in which AI systems can be used to kill people slowly through lack of access to adequate health care, housing, and economic opportunity.

I don’t think you make change in the world by only talking to people who agree with you. A lot of the work with AJL has been engaging with stakeholders with different viewpoints and ideologies to better understand the incentives and concerns that are driving them. The recent U.K. AI Safety Summit is an example of a strategic cooperation where a variety of stakeholders convened to explore safeguards that can be put in place on near-term AI risks as well as emerging threats.

As part of the Unmasking AI book tour, Sam Altman and I recently had a conversation on the future of AI where we discussed our differing viewpoints as well as found common ground: namely that companies cannot be left to govern themselves when it comes to preventing AI harms. I believe these kinds of discussions provide opportunities to go beyond incendiary headlines. When Sam was talking about AI enabling humanity to be better (a frame we see so often with the creation of AI tools), I asked which humans will benefit. What happens when the digital divide becomes an AI chasm? In asking these questions and bringing in marginalized perspectives, my aim is to challenge the entire AI ecosystem to be more robust in our analysis and therefore less harmful in the processes we create and systems we deploy.

What’s next for the Algorithmic Justice League?

Buolamwini: AJL will continue to raise public awareness about specific harms that AI systems produce, steps we can put in place to address those harms, and continue to build out our harms reporting platform, which serves as an early-warning mechanism for emerging AI threats. We will continue to protect what is human in a world of machines by advocating for civil rights, biometric rights, and creative rights as AI continues to evolve. Our latest campaign is around TSA use of facial recognition, which you can learn more about via fly.ajl.org.

Think about the state of AI today, encompassing research, commercial activity, public discourse, and regulations. Where are you on a scale of 1 to 10, if 1 is something along the lines of outraged/horrified/depressed and 10 is hopeful?

Buolamwini: I’d offer a less quantitative measure and instead offer a poem that better captures my sentiments. I’m overall hopeful, because my experiences since my fateful encounter with a white mask and a face-tracking system years ago have shown me change is possible.

THE EXCODED

To the Excoded

Resisting and revealing the lie

That we must accept

The surrender of our faces

The harvesting of our data

The plunder of our traces

We celebrate your courage

No Silence

No Consent

You show the path to algorithmic justice requires a league

A sisterhood, a neighborhood,

Hallway gatherings

Sharpies and posters

Coalitions Petitions Testimonies, Letters

Research and potlucks

Dancing and music

Everyone playing a role to orchestrate change

To the excoded and freedom fighters around the world

Persisting and prevailing against

algorithms of oppression

automating inequality

via weapons of math destruction

we Stand with you in gratitude

You demonstrate the people have a voice and a choice.

When defiant melodies harmonize to elevate

human life, dignity, and rights.

The victory is ours.
