TL;DR: In RLHF, there is a tension between the reward learning phase, which uses human preferences in the form of comparisons, and the RL fine-tuning phase, which optimizes a single, non-comparative reward. What if we performed RL in a comparative way?

Figure 1:

This diagram illustrates the difference between reinforcement learning from absolute feedback and from relative feedback. By incorporating a new component, the pairwise policy gradient, we can unify the reward modeling stage and the RL stage, enabling direct updates based on pairwise responses.

Large Language Models (LLMs) have powered increasingly capable virtual assistants, such as GPT-4, Claude-2, Bard and Bing Chat. These systems can respond to complex user queries, write code, and even produce poetry. The technique underlying these virtual assistants is Reinforcement Learning with Human Feedback (RLHF). RLHF aims to align the model with human values and eliminate unintended behaviors, which can often arise because the model is exposed to a large quantity of low-quality data during its pretraining phase.

Proximal Policy Optimization (PPO), the dominant RL optimizer in this process, has been reported to exhibit instability and implementation complications. More importantly, there is a persistent discrepancy in the RLHF process: although the reward model is trained using comparisons between responses, the RL fine-tuning stage works on individual responses without making any comparisons. This inconsistency can exacerbate issues, especially in the challenging language generation domain.

Given this backdrop, an intriguing question arises: Is it possible to design an RL algorithm that learns in a comparative manner? To explore this, we introduce Pairwise Proximal Policy Optimization (P3O), a method that harmonizes the training processes in both the reward learning stage and the RL fine-tuning stage of RLHF, providing a satisfactory solution to this issue.

Background

Figure 2:

An overview of the three stages of RLHF from an OpenAI blog post. Note that the third stage falls under Reinforcement Learning with Absolute Feedback, as shown on the left side of Figure 1.

In traditional RL settings, the reward is specified manually by the designer or provided by a well-defined reward function, as in Atari games. However, to steer a model toward helpful and harmless responses, defining a good reward is not straightforward. RLHF addresses this problem by learning the reward function from human feedback, specifically in the form of comparisons, and then applying RL to optimize the learned reward function.

The RLHF pipeline is divided into several stages, detailed as follows:

Supervised Fine-Tuning Stage: The pre-trained model is trained with the maximum likelihood loss on a high-quality dataset, where it learns to respond to human queries by imitation.

Reward Modeling Stage: The SFT model is prompted with prompts \(x\) to produce pairs of answers \(y_1, y_2 \sim \pi^{\text{SFT}}(y\vert x)\). These generated responses form a dataset. The response pairs are presented to human labellers, who express a preference for one answer over the other, denoted \(y_w \succ y_l\). A comparative loss is then used to train a reward model \(r_\phi\):

\[\mathcal{L}_R = \mathbb{E}_{(x,y_l,y_w)\sim\mathcal{D}}\log \sigma\left(r_\phi(y_w|x)-r_\phi(y_l|x)\right)\]
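As a minimal sketch of this comparative objective, the snippet below evaluates it on a handful of made-up score pairs. Note that, as written, \(\mathcal{L}_R\) is the log-likelihood of the observed preferences, so training would maximize it (equivalently, minimize its negative). The function names and the example scores are illustrative assumptions, not part of the original work.

```python
import math

def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    # log sigma(z) = -log(1 + exp(-z)); branch so exp() never overflows.
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def pairwise_reward_objective(pairs):
    """Average of log sigma(r_w - r_l) over preference pairs.

    `pairs` is a list of (r_w, r_l) tuples: reward-model scores for the
    preferred and the rejected response to the same prompt.
    """
    return sum(log_sigmoid(r_w - r_l) for r_w, r_l in pairs) / len(pairs)

# Hypothetical scores, for illustration only.
scores = [(1.2, 0.3), (0.1, 0.4), (2.0, -1.0)]
objective = pairwise_reward_objective(scores)
loss = -objective  # the quantity a gradient-descent optimizer would minimize
```

Only the difference \(r_\phi(y_w|x)-r_\phi(y_l|x)\) enters the computation, which is what makes the reward non-unique later on.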

RL Fine-Tuning Stage: The SFT model serves as the initialization of this stage, and an RL algorithm optimizes the policy towards maximizing the reward while limiting the deviation from the initial policy. Formally, this is done by:

\[\max_{\pi_\theta}\mathbb{E}_{x\sim \mathcal{D},\, y\sim \pi_\theta(\cdot\vert x)}\left[r_\phi(y\vert x)-\beta D_{\text{KL}}(\pi_\theta(\cdot\vert x)\Vert \pi^{\text{SFT}}(\cdot\vert x))\right]\]
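To make the trade-off in this objective concrete, here is a small sketch that evaluates it exactly for a single prompt with three candidate responses. The toy distributions, rewards, and function names are assumptions chosen for illustration; a real policy is a language model and the expectation would be estimated from samples.

```python
import math

def kl_divergence(pi, pi_ref):
    """KL(pi || pi_ref) for two distributions over the same finite response set."""
    return sum(p * math.log(p / pi_ref[y]) for y, p in pi.items() if p > 0.0)

def regularized_objective(pi, pi_sft, reward, beta):
    """E_{y ~ pi}[r(y|x)] - beta * KL(pi || pi_sft) for a single prompt x."""
    expected_reward = sum(p * reward[y] for y, p in pi.items())
    return expected_reward - beta * kl_divergence(pi, pi_sft)

# Hypothetical toy problem: three candidate responses to one prompt.
pi_sft = {"a": 0.5, "b": 0.3, "c": 0.2}
reward = {"a": 0.0, "b": 1.0, "c": 2.0}
greedy = {"a": 0.01, "b": 0.01, "c": 0.98}  # chases reward, drifts far from SFT
mild = {"a": 0.3, "b": 0.3, "c": 0.4}       # modest shift toward high reward

for beta in (0.1, 2.0):
    print(beta,
          regularized_objective(greedy, pi_sft, reward, beta),
          regularized_objective(mild, pi_sft, reward, beta))
```

With a small \(\beta\) the reward-chasing policy scores higher; with a large \(\beta\) the KL penalty dominates and the policy that stays closer to \(\pi^{\text{SFT}}\) wins.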

An inherent challenge with this approach is the non-uniqueness of the reward. For instance, given a reward function \(r(y\vert x)\), a simple prompt-dependent shift to \(r(y\vert x)+\delta(x)\) creates another valid reward function. These two reward functions result in the same loss for any response pair, but they differ significantly when optimized against with RL. In an extreme case, if the added noise causes the reward function to have a large range, an RL algorithm might be misled into increasing the likelihood of responses with higher rewards, even though those rewards may not be meaningful. In other words, the policy might be disrupted by the reward scale information in the prompt \(x\), yet fail to learn the useful part: the relative preference represented by the reward difference. To address this issue, our aim is to develop an RL algorithm that is invariant to reward translation.
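The non-uniqueness can be checked numerically. The sketch below, using made-up scores and a made-up offset, shows that the comparative loss cannot distinguish \(r\) from \(r+\delta(x)\), while the raw reward values an absolute-feedback optimizer consumes change drastically.

```python
import math

def preference_log_likelihood(r_w: float, r_l: float) -> float:
    """log sigmoid(r_w - r_l): depends on the rewards only through their difference."""
    return -math.log1p(math.exp(-(r_w - r_l)))

r_w, r_l = 1.5, 0.5  # hypothetical reward-model scores for one prompt
delta = 100.0        # hypothetical prompt-dependent offset delta(x)

original = preference_log_likelihood(r_w, r_l)
translated = preference_log_likelihood(r_w + delta, r_l + delta)
loss_unchanged = math.isclose(original, translated)

# The gap between the two responses is the same, but relative to the raw
# reward magnitude it is now dwarfed by the offset.
relative_gap_before = (r_w - r_l) / abs(r_w)
relative_gap_after = (r_w - r_l) / abs(r_w + delta)
```

The reward model is therefore free to absorb any per-prompt offset during training, and nothing in the reward modeling stage penalizes it.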

Derivation of P3O

Our idea stems from the vanilla policy gradient (VPG). VPG is a widely adopted first-order RL optimizer, favored for its simplicity and ease of implementation. In a contextual bandit (CB) setting, the VPG is formulated as:

\[\nabla \mathcal{L}^{\text{VPG}} = \mathbb{E}_{y\sim\pi_{\theta}}\, r(y|x)\nabla\log\pi_{\theta}(y|x)\]

Through some algebraic manipulation, we can rewrite the policy gradient in a comparative form that involves two responses to the same prompt. We name it Pairwise Policy Gradient (PPG):

\[\mathbb{E}_{y_1,y_2\sim\pi_{\theta}}\left(r(y_1\vert x)-r(y_2\vert x)\right)\nabla\left(\log\frac{\pi_\theta(y_1\vert x)}{\pi_\theta(y_2\vert x)}\right)/2\]
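The algebra is not spelled out here. One way to fill it in, assuming \(y_1\) and \(y_2\) are drawn independently from \(\pi_\theta(\cdot\vert x)\), relies on the standard identity \(\mathbb{E}_{y\sim\pi_\theta}\nabla\log\pi_\theta(y\vert x)=0\):

\[
\begin{aligned}
\nabla \mathcal{L}^{\text{VPG}}
&= \mathbb{E}_{y_1\sim\pi_\theta}\, r(y_1\vert x)\nabla\log\pi_\theta(y_1\vert x) \\
&= \mathbb{E}_{y_1,y_2\sim\pi_\theta}\left(r(y_1\vert x)-r(y_2\vert x)\right)\nabla\log\pi_\theta(y_1\vert x) \\
&= \mathbb{E}_{y_1,y_2\sim\pi_\theta}\left(r(y_2\vert x)-r(y_1\vert x)\right)\nabla\log\pi_\theta(y_2\vert x) \\
&= \mathbb{E}_{y_1,y_2\sim\pi_\theta}\left(r(y_1\vert x)-r(y_2\vert x)\right)\nabla\left(\log\frac{\pi_\theta(y_1\vert x)}{\pi_\theta(y_2\vert x)}\right)/2
\end{aligned}
\]

The second line subtracts \(\mathbb{E}\left[r(y_2\vert x)\right]\mathbb{E}\left[\nabla\log\pi_\theta(y_1\vert x)\right]=0\), which is allowed because the two samples are independent. The third line swaps the roles of \(y_1\) and \(y_2\), and the last line averages the second and third.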

Unlike VPG, which directly relies on the absolute magnitude of the reward, PPG uses the reward difference. This enables us to bypass the aforementioned issue of reward translation. To further boost performance, we incorporate a replay buffer using Importance Sampling and avoid large gradient updates via Clipping.
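The following sketch checks both claims on a toy problem: a softmax policy over three responses with hypothetical logits and rewards. It computes the VPG and PPG gradients exactly by enumeration, then compares single-sample estimates before and after adding a constant offset to the reward. All names and numbers here are illustrative assumptions.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def grad_log_pi(probs, y):
    """Gradient of log pi(y) with respect to the logits of a softmax policy."""
    return [(1.0 if k == y else 0.0) - p for k, p in enumerate(probs)]

def vpg_term(probs, rewards, y):
    """Single-sample VPG estimate: r(y) * grad log pi(y)."""
    return [rewards[y] * g for g in grad_log_pi(probs, y)]

def ppg_term(probs, rewards, y1, y2):
    """Single-pair PPG estimate: (r1 - r2) * grad log(pi(y1) / pi(y2)) / 2."""
    g1, g2 = grad_log_pi(probs, y1), grad_log_pi(probs, y2)
    diff = rewards[y1] - rewards[y2]
    return [diff * (a - b) / 2.0 for a, b in zip(g1, g2)]

def vpg_gradient(probs, rewards):
    """Exact expectation of vpg_term over y ~ pi."""
    n = len(probs)
    grad = [0.0] * n
    for y in range(n):
        for k, t in enumerate(vpg_term(probs, rewards, y)):
            grad[k] += probs[y] * t
    return grad

def ppg_gradient(probs, rewards):
    """Exact expectation of ppg_term over independent y1, y2 ~ pi."""
    n = len(probs)
    grad = [0.0] * n
    for y1 in range(n):
        for y2 in range(n):
            for k, t in enumerate(ppg_term(probs, rewards, y1, y2)):
                grad[k] += probs[y1] * probs[y2] * t
    return grad

probs = softmax([0.2, -0.5, 1.0])       # hypothetical logits
rewards = [1.0, 0.0, 2.5]               # hypothetical r(y|x)
shifted = [r + 100.0 for r in rewards]  # r(y|x) + delta(x)

# PPG and VPG agree in expectation.
same_expectation = all(
    math.isclose(a, b, abs_tol=1e-12)
    for a, b in zip(vpg_gradient(probs, rewards), ppg_gradient(probs, rewards))
)
# A single PPG pair estimate ignores the offset; a single VPG sample does not.
pair_unchanged = all(
    math.isclose(a, b, abs_tol=1e-9)
    for a, b in zip(ppg_term(probs, rewards, 0, 2), ppg_term(probs, shifted, 0, 2))
)
sample_changed = vpg_term(probs, rewards, 0) != vpg_term(probs, shifted, 0)
```

The expected VPG gradient is also unaffected by the offset, so the difference shows up at the level of individual samples: each VPG sample is scaled by the raw reward, whereas each PPG pair only sees the reward difference.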

Importance sampling: We sample a batch of responses from the replay buffer, which consists of responses generated from \(\pi_{\text{old}}\), and then compute the importance sampling ratio for each response pair. The gradient is the weighted sum of the gradients computed from each response pair.

Clipping: We clip the importance sampling ratio as well as the gradient update to penalize excessively large updates. This technique enables the algorithm to trade off KL divergence and reward more efficiently.

There are two different ways to implement the clipping technique, distinguished by either separate or joint clipping. The resulting algorithm is called Pairwise Proximal Policy Optimization (P3O), with the variants being V1 or V2 respectively. You can find more details in our original paper.
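As a rough illustration of how importance sampling and clipping combine with the pairwise gradient, the sketch below computes the scalar weight that would multiply \(\nabla\log\frac{\pi_\theta(y_1\vert x)}{\pi_\theta(y_2\vert x)}\) for one replayed pair. This is a simplified, generic construction and an assumption on our part: it clips a single joint ratio for the pair and is not the exact V1 or V2 objective, which are defined in the paper. The function names and the default `eps` are hypothetical.

```python
import math

def clipped_pair_weight(logp1, logp2, logp1_old, logp2_old, r1, r2, eps=0.2):
    """Scalar weight on grad log(pi(y1)/pi(y2)) for one pair sampled from pi_old.

    Simplified illustration: the pair's joint importance ratio
    pi(y1) * pi(y2) / (pi_old(y1) * pi_old(y2)) is clipped to
    [1 - eps, 1 + eps] before it scales half the reward difference.
    """
    ratio = math.exp((logp1 + logp2) - (logp1_old + logp2_old))
    clipped = max(1.0 - eps, min(1.0 + eps, ratio))
    return clipped * (r1 - r2) / 2.0

def batch_weights(batch, eps=0.2):
    """Weights for a batch of (logp1, logp2, logp1_old, logp2_old, r1, r2) tuples."""
    return [clipped_pair_weight(*pair, eps=eps) for pair in batch]

# Hypothetical replayed pairs: one on-policy, one where the policy has drifted.
batch = [
    (-1.0, -2.0, -1.0, -2.0, 3.0, 1.0),  # ratio = 1, weight = (3 - 1) / 2
    (0.0, -1.0, -2.0, -2.0, 3.0, 1.0),   # ratio = e^3, clipped to 1 + eps
]
weights = batch_weights(batch)
```

Because the weight depends on the rewards only through \(r_1 - r_2\), this construction keeps the invariance to reward translation while bounding how much a stale sample can influence the update.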

Evaluation

Figure 3:

KL-Reward frontier for TL;DR. Both sequence-wise KL and reward are averaged over 200 test prompts and computed every 500 gradient steps. We find that a simple linear function fits the curve well. P3O has the best KL-Reward trade-off among the three.

We explore two different open-ended text generation tasks, summarization and question-answering. In summarization, we use the TL;DR dataset, where the prompt \(x\) is a forum post from Reddit and \(y\) is a corresponding summary. For question-answering, we use Anthropic Helpful and Harmless (HH), where the prompt \(x\) is a human query from various topics, and the policy should learn to produce an engaging and helpful response \(y\).

We compare our algorithm P3O with several effective and representative approaches for LLM alignment. We start with the SFT policy trained by maximum likelihood. For RL algorithms, we consider the dominant approach PPO and the newly proposed DPO. DPO directly optimizes the policy towards the closed-form solution of the KL-constrained RL problem. Although it is proposed as an offline alignment method, we make it online with the help of a proxy reward function.
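For readers unfamiliar with DPO, the sketch below shows its standard per-pair loss, together with one plausible way to use a proxy reward to label freshly sampled pairs. The labelling helper is an assumption about what "online with a proxy reward" could look like, not a description of the exact setup used in these experiments; the dictionary keys and default `beta` are hypothetical.

```python
import math

def dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """Standard DPO loss for one pair: -log sigmoid(beta * log-ratio margin)."""
    margin = beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l))
    # -log sigmoid(m) = log(1 + exp(-m)), written to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

def label_pair_with_proxy(sample_a, sample_b):
    """Order two sampled responses by proxy reward, returning (winner, loser).

    Assumed labelling rule: the response with the higher proxy reward is
    treated as preferred.
    """
    if sample_a["reward"] >= sample_b["reward"]:
        return sample_a, sample_b
    return sample_b, sample_a

# Hypothetical samples: sequence log-probs under the policy and the reference.
a = {"logp": -12.0, "ref_logp": -11.0, "reward": 0.4}
b = {"logp": -10.0, "ref_logp": -11.5, "reward": 1.3}
winner, loser = label_pair_with_proxy(a, b)
loss = dpo_loss(winner["logp"], loser["logp"], winner["ref_logp"], loser["ref_logp"])
```

Like the pairwise policy gradient, this loss depends only on which response is preferred, not on the absolute reward values.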

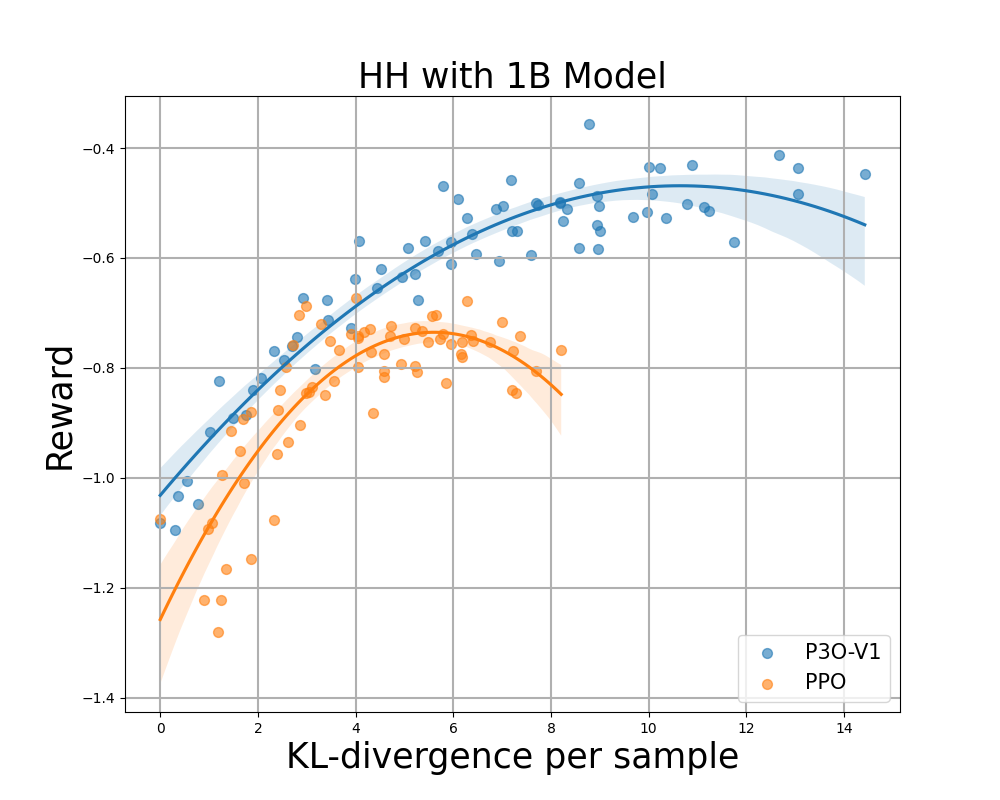

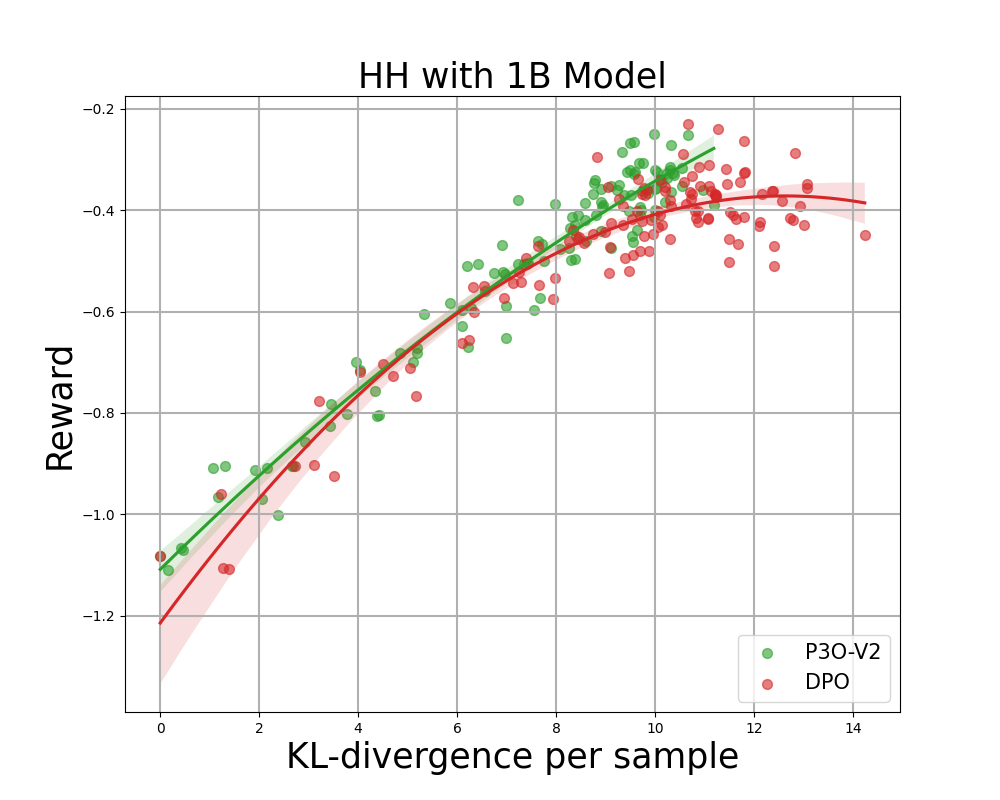

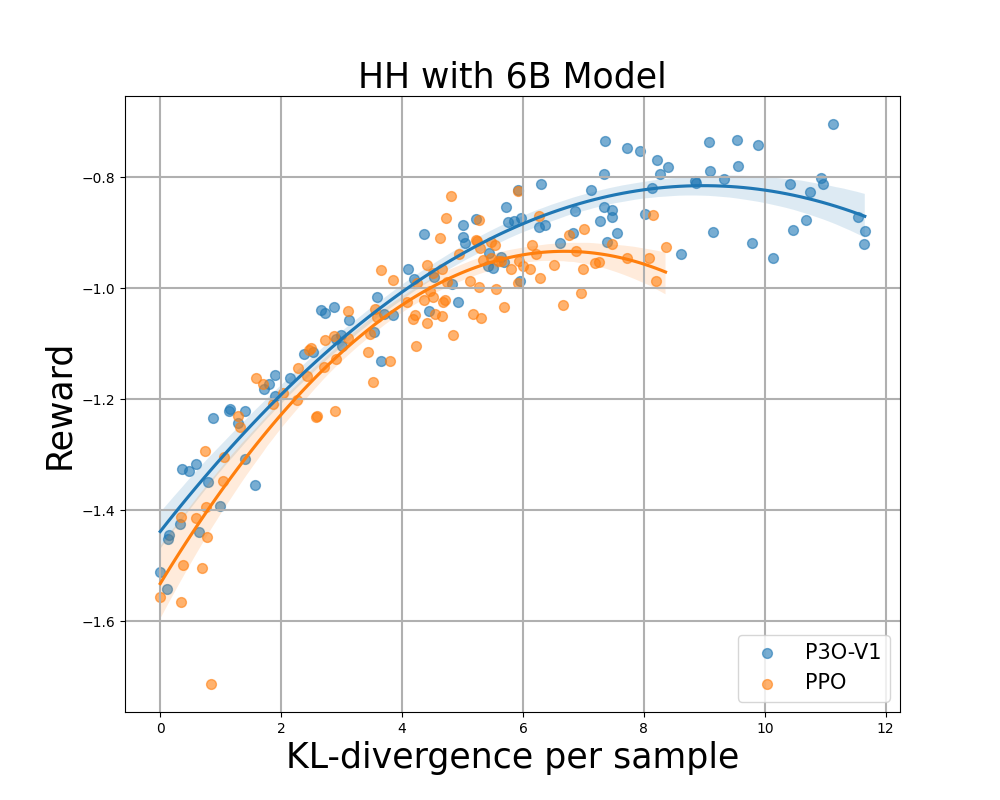

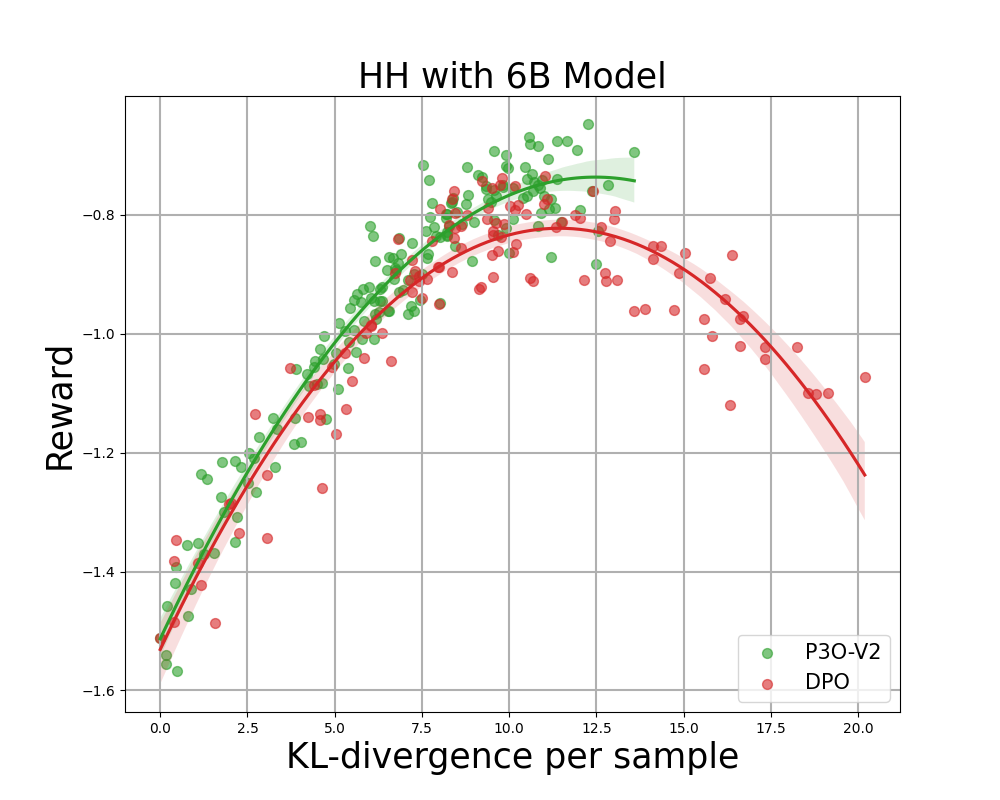

Figure 4:

KL-Reward frontier for HH. Each point represents an average of results over 280 test prompts, calculated every 500 gradient updates. The left two figures compare P3O-V1 and PPO with varying base model sizes; the right two figures compare P3O-V2 and DPO. The results show that P3O can not only achieve higher reward but also yield better KL control.

Deviating too much from the reference policy would lead the online policy to cut corners of the reward model and produce incoherent continuations, as pointed out by previous works. We are interested not only in the well-established metric in RL literature, the reward, but also in how far the learned policy deviates from the initial policy, measured by KL-divergence. Therefore, we investigate the effectiveness of each algorithm by its frontier of achieved reward and KL-divergence from the reference policy (KL-Reward Frontier). In Figure 3 and Figure 4, we find that P3O has frontiers that strictly dominate those of PPO and DPO across various model sizes.
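A single point on such a frontier can be computed roughly as follows. This sketch assumes each evaluation sample stores per-token log-probabilities of the generated response under both the current policy and the reference policy, plus its proxy reward; the field names are hypothetical.

```python
def sequence_kl_estimate(policy_token_logps, ref_token_logps):
    """Single-sample estimate of the sequence-level KL(pi || pi_ref).

    For a response sampled from the policy, the sum of per-token log-ratios
    is an unbiased estimate of the sequence-wise KL divergence.
    """
    return sum(p - q for p, q in zip(policy_token_logps, ref_token_logps))

def frontier_point(samples):
    """Average (KL, reward) over evaluation samples at one checkpoint."""
    n = len(samples)
    mean_kl = sum(
        sequence_kl_estimate(s["logps"], s["ref_logps"]) for s in samples
    ) / n
    mean_reward = sum(s["reward"] for s in samples) / n
    return mean_kl, mean_reward

# Hypothetical evaluation samples for one checkpoint.
samples = [
    {"logps": [-1.0, -0.5], "ref_logps": [-1.5, -1.0], "reward": 2.0},
    {"logps": [-2.0, -1.0], "ref_logps": [-2.0, -2.0], "reward": 1.0},
]
mean_kl, mean_reward = frontier_point(samples)
```

Repeating this at regular checkpoints and plotting reward against KL traces out one algorithm's frontier; a curve that lies above another at every KL value dominates it.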

Figure 5:

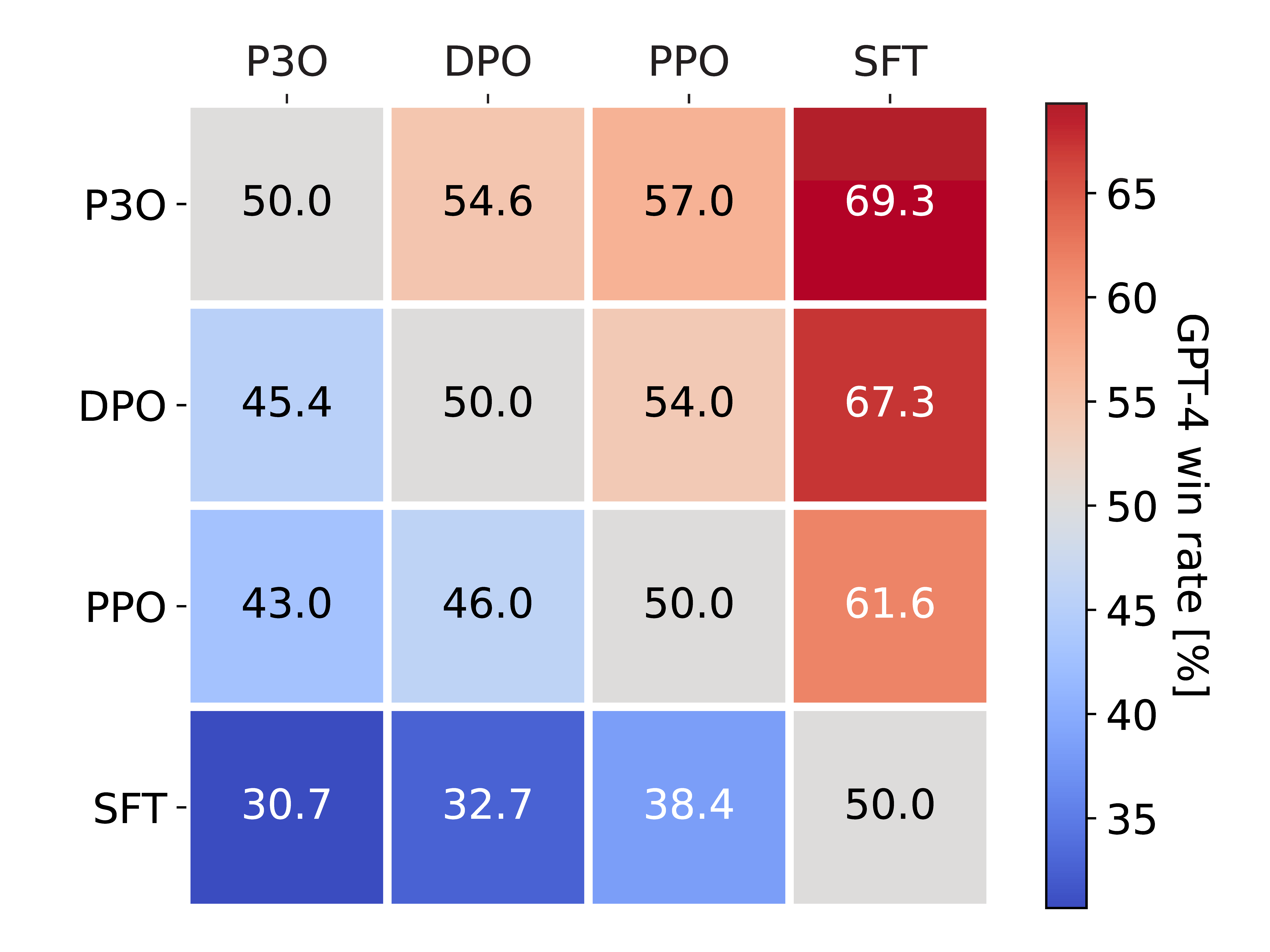

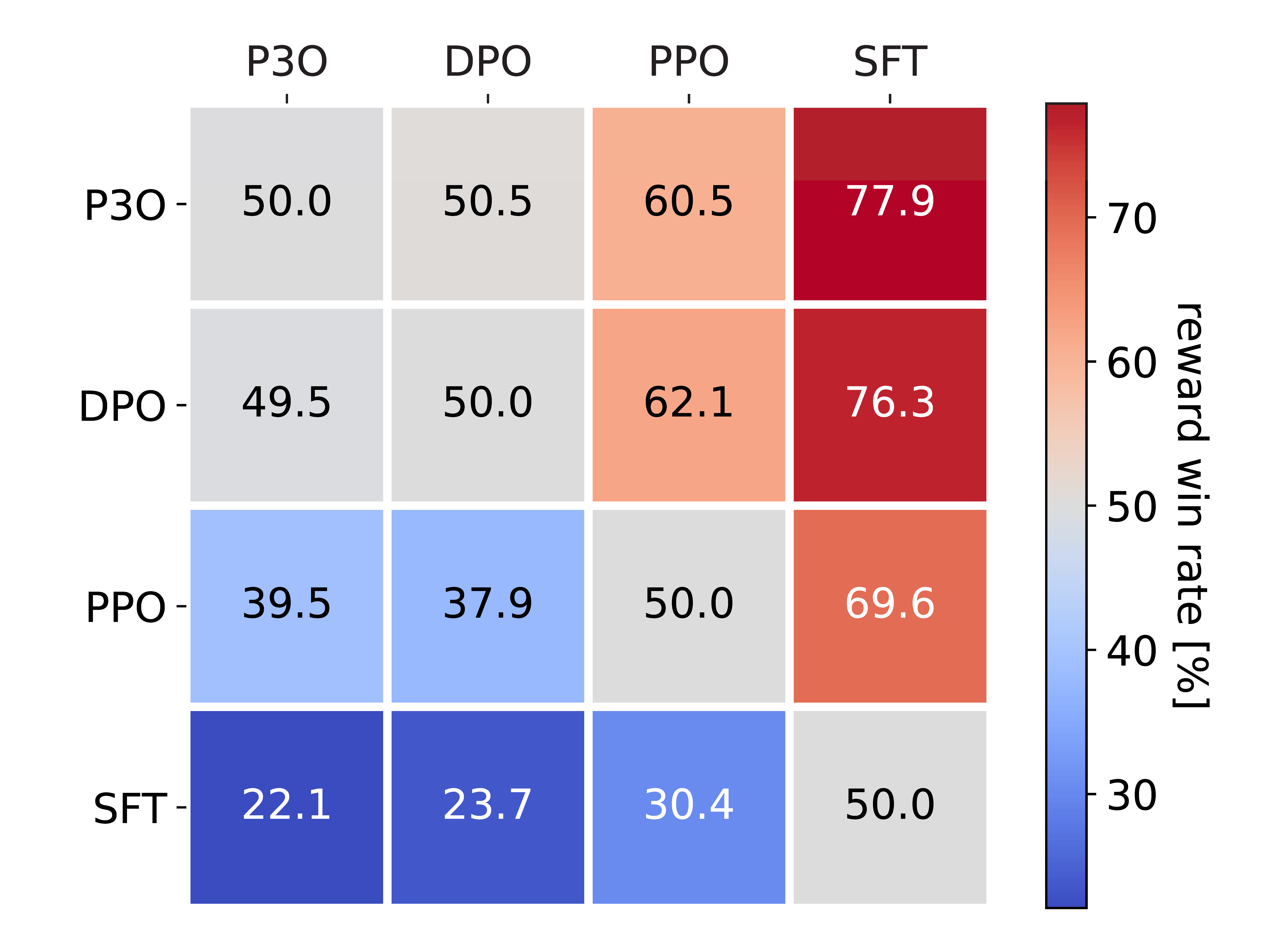

The left figure displays the win rate evaluated by GPT-4. The right figure presents the win rate based on direct comparison of the proxy reward. Despite the high correlation between the two figures, we found that the reward win rate must be adjusted according to the KL in order to align with the GPT-4 win rate.

To directly assess the quality of generated responses, we also perform Head-to-Head Comparisons between every pair of algorithms on the HH dataset. We use two metrics for evaluation: (1) Reward, the optimized target during online RL, and (2) GPT-4, as a faithful proxy for human evaluation of response helpfulness. For the latter metric, we point out that previous studies show that GPT-4 judgments correlate strongly with humans, with human agreement with GPT-4 typically similar to or higher than inter-human annotator agreement.

Figure 5 presents the comprehensive pairwise comparison results. The average KL-divergence and reward ranking of these models is DPO > P3O > PPO > SFT. Although DPO marginally surpasses P3O in reward, it has a considerably higher KL-divergence, which may be detrimental to the quality of generation. As a result, DPO has a reward win rate of 49.5% against P3O, but only 45.4% as evaluated by GPT-4. Compared with other methods, P3O exhibits a GPT-4 win rate of 57.0% against PPO and 69.3% against SFT. This result is consistent with our findings from the KL-Reward frontier metric, affirming that P3O could better align with human preference than previous baselines.

Conclusion

In this blog post, we present new insights into aligning large language models with human preferences via reinforcement learning. We proposed the Reinforcement Learning with Relative Feedback framework, as depicted in Figure 1. Under this framework, we develop a novel policy gradient algorithm, P3O. This approach unifies the fundamental principles of reward modeling and RL fine-tuning through comparative training. Our results show that P3O surpasses prior methods in terms of the KL-Reward frontier as well as GPT-4 win rate.

BibTex

This blog is based on our recent paper and blog. If this blog inspires your work, please consider citing it with:

@article{wu2023pairwise,
  title={Pairwise Proximal Policy Optimization: Harnessing Relative Feedback for LLM Alignment},
  author={Wu, Tianhao and Zhu, Banghua and Zhang, Ruoyu and Wen, Zhaojin and Ramchandran, Kannan and Jiao, Jiantao},
  journal={arXiv preprint arXiv:2310.00212},
  year={2023}
}