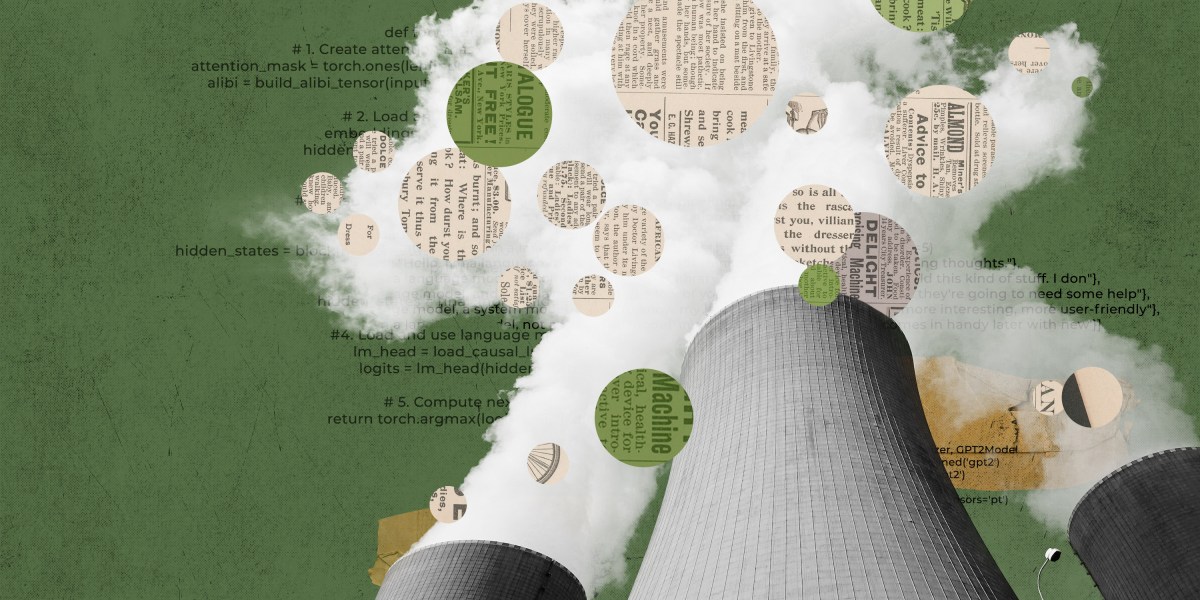

To test its new approach, Hugging Face estimated the overall emissions for its own large language model, BLOOM, which was launched earlier this year. The process involved adding up several different numbers: the energy used to train the model on a supercomputer, the energy needed to manufacture the supercomputer’s hardware and maintain its computing infrastructure, and the energy used to run BLOOM once it had been deployed. The researchers calculated that final part using a software tool called CodeCarbon, which tracked the carbon emissions BLOOM was producing in real time over a period of 18 days.

Hugging Face estimated that BLOOM’s training led to 25 metric tons of carbon emissions. But, the researchers found, that figure doubled when they took into account the emissions produced by manufacturing the computer equipment used for training, the broader computing infrastructure, and the energy required to actually run BLOOM once it was trained.
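The accounting described above amounts to a sum over life-cycle stages. The following is a minimal Python sketch, assuming only the two figures the article gives: about 25 metric tons for training, and a total of roughly double that. The article does not break down the remainder, so it is lumped into a single hypothetical entry here. The function and key names are illustrative and are not taken from the paper.

```python
def total_emissions_tonnes(stages):
    """Sum per-stage emissions, given in metric tons of carbon emissions."""
    return sum(stages.values())


# The training figure is from the article. The second entry is an assumption:
# it lumps manufacturing, infrastructure, and deployment together and is set
# so that the total matches the article's "doubled" figure.
bloom_stages = {
    "training_electricity": 25.0,
    "manufacturing_infrastructure_and_deployment": 25.0,
}

total = total_emissions_tonnes(bloom_stages)
training_share = bloom_stages["training_electricity"] / total

print(f"total: {total:.0f} metric tons, training share: {training_share:.0%}")
```

On these rounded figures, electricity for training accounts for about half of the estimated total, which is why counting only that stage understates a model's footprint.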

While that may seem like a lot for one model (50 metric tons of carbon emissions is the equivalent of around 60 flights between London and New York), it is significantly less than the emissions associated with other LLMs of the same size. This is because BLOOM was trained on a French supercomputer that is mostly powered by nuclear energy, which doesn’t produce carbon emissions. Models trained in China, Australia, or some parts of the US, which have energy grids that rely more on fossil fuels, are likely to be more polluting.

After BLOOM was launched, Hugging Face estimated that using the model emitted around 19 kilograms of carbon dioxide per day, which is similar to the emissions produced by driving around 54 miles in an average new car.
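For a sense of scale, the figures above can be turned into rates. This is a rough Python sketch using only numbers quoted in the article. The variable names are illustrative, and the break-even calculation assumes the 19 kg per day rate stays constant, which the article does not claim.

```python
KG_PER_TONNE = 1000

daily_use_kg = 19.0       # estimated emissions from using BLOOM, per day
equivalent_miles = 54.0   # driving distance the article equates to one day of use
training_tonnes = 25.0    # estimated training emissions

# Per-mile car emissions implied by the article's comparison.
implied_kg_per_mile = daily_use_kg / equivalent_miles

# Days of use needed to match the training emissions.
# Assumption: the daily rate is constant over time.
days_to_match_training = training_tonnes * KG_PER_TONNE / daily_use_kg

print(f"implied car emissions: {implied_kg_per_mile:.2f} kg per mile")
print(f"days of use to match training: {days_to_match_training:.0f}")
```

Under that constant-rate assumption, deployment would take several years to emit as much as training did. The real figure depends on how heavily the model is used.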

By way of comparison, OpenAI’s GPT-3 and Meta’s OPT were estimated to emit more than 500 and 75 metric tons of carbon dioxide, respectively, during training. GPT-3’s vast emissions can be partly explained by the fact that it was trained on older, less efficient hardware. But it is hard to say what the figures are for certain: there is no standardized way to measure carbon emissions, and these figures are based on external estimates or, in Meta’s case, limited data the company released.

“Our goal was to go above and beyond just the carbon emissions of the electricity consumed during training and to account for a larger part of the life cycle in order to help the AI community get a better idea of their impact on the environment and how we could begin to reduce it,” says Sasha Luccioni, a researcher at Hugging Face and the paper’s lead author.

Hugging Face’s paper sets a new standard for organizations that develop AI models, says Emma Strubell, an assistant professor in the school of computer science at Carnegie Mellon University, who wrote a seminal paper on AI’s impact on the climate in 2019. She was not involved in this new research.

The paper “represents the most thorough, honest, and knowledgeable analysis of the carbon footprint of a large ML model to date as far as I am aware, going into much more detail … than any other paper [or] report that I know of,” says Strubell.