Today we are sharing publicly Microsoft’s Responsible AI Standard, a framework to guide how we build AI systems. It is an important step in our journey to develop better, more trustworthy AI. We are releasing our latest Responsible AI Standard to share what we have learned, invite feedback from others, and contribute to the discussion about building better norms and practices around AI.

Guiding product development towards more responsible outcomes

AI systems are the product of many different decisions made by those who develop and deploy them. From system purpose to how people interact with AI systems, we need to proactively guide these decisions toward more beneficial and equitable outcomes. That means keeping people and their goals at the center of system design decisions and respecting enduring values like fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.

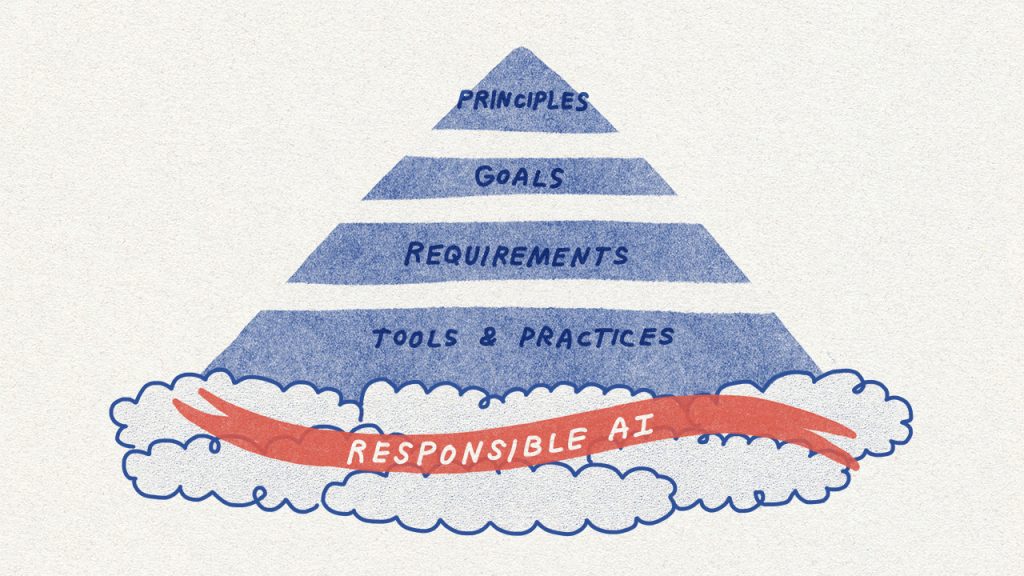

The Responsible AI Standard sets out our best thinking on how we will build AI systems to uphold these values and earn society’s trust. It provides specific, actionable guidance for our teams that goes beyond the high-level principles that have dominated the AI landscape to date.

The Standard details concrete goals or outcomes that teams developing AI systems must strive to secure. These goals help break down a broad principle like ‘accountability’ into its key enablers, such as impact assessments, data governance, and human oversight. Each goal is then composed of a set of requirements, which are steps that teams must take to ensure that AI systems meet the goals throughout the system lifecycle. Finally, the Standard maps available tools and practices to specific requirements so that Microsoft’s teams implementing it have resources to help them succeed.
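As an illustration only, the principle, goal, requirement, and tool layering described above can be modeled as a small data structure. The sketch below is not taken from the Standard: the class names, the `done` flag, and the requirement wording are all hypothetical, chosen just to show how a broad principle breaks down into checkable steps with supporting resources attached.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """A step a team must take; hypothetical shape, not the Standard's wording."""
    description: str
    tools: list[str] = field(default_factory=list)  # supporting tools or practices
    done: bool = False


@dataclass
class Goal:
    """A concrete outcome composed of one or more requirements."""
    name: str
    requirements: list[Requirement] = field(default_factory=list)

    def is_met(self) -> bool:
        # A goal counts as met only if it has requirements and all are done.
        return bool(self.requirements) and all(r.done for r in self.requirements)


@dataclass
class Principle:
    """A broad value such as accountability, broken down into goals."""
    name: str
    goals: list[Goal] = field(default_factory=list)

    def open_requirements(self) -> list[tuple[str, str]]:
        # List (goal name, requirement description) pairs still outstanding.
        return [
            (g.name, r.description)
            for g in self.goals
            for r in g.requirements
            if not r.done
        ]


def build_example() -> Principle:
    # Goal names follow the enablers named in the text; the requirement
    # descriptions are invented placeholders.
    return Principle(
        name="accountability",
        goals=[
            Goal("impact assessment", [
                Requirement("complete an impact assessment early in design",
                            tools=["Impact Assessment template"], done=True),
            ]),
            Goal("data governance", [
                Requirement("document data sources and intended uses"),
            ]),
            Goal("human oversight", [
                Requirement("define who can intervene and how"),
            ]),
        ],
    )


if __name__ == "__main__":
    principle = build_example()
    for goal_name, description in principle.open_requirements():
        print(f"{principle.name} / {goal_name}: {description}")
```

Keeping tools on the requirement rather than on the goal mirrors the text, which maps tools and practices to specific requirements.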

The need for this type of practical guidance is growing. AI is becoming more and more a part of our lives, and yet, our laws are lagging behind. They have not caught up with AI’s unique risks or society’s needs. While we see signs that government action on AI is expanding, we also recognize our responsibility to act. We believe that we need to work towards ensuring AI systems are responsible by design.

Refining our policy and learning from our product experiences

Over the course of a year, a multidisciplinary group of researchers, engineers, and policy experts crafted the second version of our Responsible AI Standard. It builds on our previous responsible AI efforts, including the first version of the Standard that launched internally in the fall of 2019, as well as the latest research and some important lessons learned from our own product experiences.

Fairness in Speech-to-Text Technology

The potential of AI systems to exacerbate societal biases and inequities is one of the most widely recognized harms associated with these systems. In March 2020, an academic study revealed that speech-to-text technology across the tech sector produced error rates for members of some Black and African American communities that were nearly double those for white users. We stepped back, considered the study’s findings, and learned that our pre-release testing had not accounted satisfactorily for the rich diversity of speech across people with different backgrounds and from different regions. After the study was published, we engaged an expert sociolinguist to help us better understand this diversity and sought to expand our data collection efforts to narrow the performance gap in our speech-to-text technology. In the process, we found that we needed to grapple with challenging questions about how best to collect data from communities in a way that engages them appropriately and respectfully. We also learned the value of bringing experts into the process early, including to better understand factors that might account for variations in system performance.
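To make the idea of a performance gap concrete, here is a minimal sketch of disaggregated evaluation: computing word error rate (WER) separately per speaker group and comparing the results. It is not our internal tooling or the study’s method. The function names, the group labels `group_a` and `group_b`, and the transcripts are invented toy values used only to show the calculation.

```python
from collections import defaultdict


def word_errors(reference: str, hypothesis: str) -> tuple[int, int]:
    """Return (word-level edit distance, number of reference words)."""
    ref, hyp = reference.split(), hypothesis.split()
    # Dynamic-programming Levenshtein distance over words, one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra hypothesis word
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1], len(ref)


def wer_by_group(samples):
    """samples: iterable of (group, reference, hypothesis) -> {group: WER}."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, reference, hypothesis in samples:
        e, n = word_errors(reference, hypothesis)
        errors[group] += e
        words[group] += n
    # Pool errors over all words in a group rather than averaging per utterance.
    return {g: errors[g] / words[g] for g in words if words[g] > 0}


# Toy data only: labels and transcripts are made up for illustration.
TOY_SAMPLES = [
    ("group_a", "turn on the kitchen lights", "turn on the kitchen lights"),
    ("group_a", "call my sister now please", "call my sister no please"),
    ("group_b", "turn on the kitchen lights", "turn on kitchen light"),
    ("group_b", "call my sister now please", "call my sister now please"),
]

if __name__ == "__main__":
    rates = wer_by_group(TOY_SAMPLES)
    for group, rate in sorted(rates.items()):
        print(f"{group}: WER = {rate:.2f}")
```

Reporting a rate per group, rather than a single aggregate, is what surfaces a gap that an overall average would hide.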

The Responsible AI Standard records the pattern we followed to improve our speech-to-text technology. As we continue to roll out the Standard across the company, we expect the Fairness Goals and Requirements identified in it will help us get ahead of potential fairness harms.

Appropriate Use Controls for Custom Neural Voice and Facial Recognition

Azure AI’s Custom Neural Voice is another innovative Microsoft speech technology that enables the creation of a synthetic voice that sounds nearly identical to the original source. AT&T has brought this technology to life with an award-winning in-store Bugs Bunny experience, and Progressive has brought Flo’s voice to online customer interactions, among uses by many other customers. This technology has exciting potential in education, accessibility, and entertainment, and yet it is also easy to imagine how it could be used to inappropriately impersonate speakers and deceive listeners.

Our review of this technology through our Responsible AI program, including the Sensitive Uses review process required by the Responsible AI Standard, led us to adopt a layered control framework: we restricted customer access to the service, ensured acceptable use cases were proactively defined and communicated through a Transparency Note and Code of Conduct, and established technical guardrails to help ensure the active participation of the speaker when creating a synthetic voice. Through these and other controls, we helped protect against misuse, while maintaining beneficial uses of the technology.

Building upon what we learned from Custom Neural Voice, we will apply similar controls to our facial recognition services. After a transition period for existing customers, we are limiting access to these services to managed customers and partners, narrowing the use cases to pre-defined acceptable ones, and leveraging technical controls engineered into the services.

Fit for Purpose and Azure Face Capabilities

Finally, we recognize that for AI systems to be trustworthy, they need to be appropriate solutions to the problems they are designed to solve. As part of our work to align our Azure Face service to the requirements of the Responsible AI Standard, we are also retiring capabilities that infer emotional states and identity attributes such as gender, age, smile, facial hair, hair, and makeup.

Taking emotional states as an example, we have decided we will not provide open-ended API access to technology that can scan people’s faces and purport to infer their emotional states based on their facial expressions or movements. Experts inside and outside the company have highlighted the lack of scientific consensus on the definition of “emotions,” the challenges in how inferences generalize across use cases, regions, and demographics, and the heightened privacy concerns around this type of capability. We also decided that we need to carefully analyze all AI systems that purport to infer people’s emotional states, whether the systems use facial analysis or any other AI technology. The Fit for Purpose Goal and Requirements in the Responsible AI Standard now help us to make system-specific validity assessments upfront, and our Sensitive Uses process helps us provide nuanced guidance for high-impact use cases, grounded in science.

These real-world challenges informed the development of Microsoft’s Responsible AI Standard and demonstrate its impact on the way we design, develop, and deploy AI systems.

For those wanting to dig into our approach further, we have also made available some key resources that support the Responsible AI Standard: our Impact Assessment template and guide, and a collection of Transparency Notes. Impact Assessments have proven valuable at Microsoft to ensure teams explore the impact of their AI system (including its stakeholders, intended benefits, and potential harms) in depth at the earliest design stages. Transparency Notes are a new form of documentation in which we disclose to our customers the capabilities and limitations of our core building block technologies, so they have the knowledge necessary to make responsible deployment choices.

A multidisciplinary, iterative journey

Our updated Responsible AI Standard reflects hundreds of inputs across Microsoft technologies, professions, and geographies. It is a significant step forward for our practice of responsible AI because it is much more actionable and concrete: it sets out practical approaches for identifying, measuring, and mitigating harms ahead of time, and requires teams to adopt controls to secure beneficial uses and guard against misuse. You can learn more about the development of the Standard in this

While our Standard is an important step in Microsoft’s responsible AI journey, it is just one step. As we make progress with implementation, we expect to encounter challenges that require us to pause, reflect, and adjust. Our Standard will remain a living document, evolving to address new research, technologies, laws, and learnings from within and outside the company.

There is a rich and active global dialog about how to create principled and actionable norms to ensure organizations develop and deploy AI responsibly. We have benefited from this discussion and will continue to contribute to it. We believe that industry, academia, civil society, and government need to collaborate to advance the state of the art and learn from one another. Together, we need to answer open research questions, close measurement gaps, and design new practices, patterns, resources, and tools.

Better, more equitable futures will require new guardrails for AI. Microsoft’s Responsible AI Standard is one contribution toward this goal, and we are engaging in the hard and necessary implementation work across the company. We’re committed to being open, honest, and transparent in our efforts to make meaningful progress.