Elvis Nava is a fellow at ETH Zurich’s AI Center as well as a doctoral student at the Institute of Neuroinformatics and in the Soft Robotics Lab. (Photograph: Daniel Winkler / ETH Zurich)

By Christoph Elhardt

In ETH Zurich’s Soft Robotics Lab, a white robotic hand reaches for a beer can, lifts it up and moves it to a glass at the other end of the table. There, the hand carefully tilts the can to the right and pours the sparkling, gold-coloured liquid into the glass without spilling it. Cheers!

Computer scientist Elvis Nava is the person controlling the robotic hand developed by ETH start-up Faive Robotics. The 26-year-old doctoral student’s own hand hovers over a surface equipped with sensors and a camera. The robotic hand follows Nava’s hand movement. When he spreads his fingers, the robot does the same. And when he points at something, the robotic hand follows suit.

But for Nava, this is only the beginning: “We hope that in future, the robot will be able to do something without our having to explain exactly how,” he says. He wants to teach machines to carry out written and oral commands. His goal is to make them so intelligent that they can quickly acquire new abilities, understand people and help them with different tasks.

Functions that currently require specific instructions from programmers will then be controlled by simple commands such as “pour me a beer” or “hand me the apple”. To achieve this goal, Nava received a doctoral fellowship from ETH Zurich’s AI Center in 2021: this programme promotes talent that bridges different research disciplines to develop new AI applications. In addition, the Italian – who grew up in Bergamo – is doing his doctorate at Benjamin Grewe’s professorship of neuroinformatics and in Robert Katzschmann’s lab for soft robotics.

Developed by the ETH start-up Faive Robotics, the robotic hand imitates the movements of a human hand. (Video: Faive Robotics)

Combining sensory stimuli

But how do you get a machine to carry out commands? What does this combination of artificial intelligence and robotics look like? To answer these questions, it’s crucial to understand the human brain.

We perceive our environment by combining different sensory stimuli. Usually, our brain effortlessly integrates images, sounds, smells, tastes and haptic stimuli into a coherent overall impression. This ability enables us to quickly adapt to new situations. We intuitively know how to apply acquired knowledge to unfamiliar tasks.

“Computers and robots often lack this ability,” Nava says. Thanks to machine learning, computer programs today can write texts, hold conversations or paint pictures, and robots can move quickly and independently through difficult terrain, but the underlying learning algorithms are usually based on only one data source. They are – to use a computer science term – not multimodal.

For Nava, this is precisely what stands in the way of more intelligent robots: “Algorithms are often trained for just one set of functions, using large data sets that are available online. While this enables language processing models to use the word ‘cat’ in a grammatically correct way, they don’t know what a cat looks like. And robots can move effectively but usually lack the capacity for speech and image recognition.”

“Every couple of years, our discipline changes the way we think about what it means to be a researcher,” Elvis Nava says. (Video: ETH AI Center)

Robots must go to preschool

This is why Nava is developing learning algorithms for robots that teach them exactly that: to combine information from different sources. “When I tell a robot arm to ‘hand me the apple on the table,’ it has to connect the word ‘apple’ to the visual features of an apple. What’s more, it has to recognise the apple on the table and know how to grab it.”

But how does Nava teach the robot arm to do all that? In simple terms, he sends it to a two-stage training camp. First, the robot acquires general abilities such as speech and image recognition as well as simple hand movements in a kind of preschool.

Open-source models that have been trained using giant text, image and video data sets are already available for these abilities. Researchers feed, say, an image recognition algorithm with thousands of images labelled ‘dog’ or ‘cat.’ Then, the algorithm learns independently what features – in this case pixel structures – constitute an image of a cat or a dog.

A new learning algorithm for robots

Nava’s job is to combine the best available models into a learning algorithm, which has to translate different data, images, texts or spatial information into a uniform command language for the robot arm. “In the model, the same vector represents both the word ‘beer’ and images labelled ‘beer’,” Nava says. That way, the robot knows what to reach for when it receives the command “pour me a beer”.
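To make the idea of a shared vector more concrete, here is a minimal Python sketch. It is not Nava's code: the three-dimensional vectors, the object names and the helper `find_target` are invented for illustration, whereas a real multimodal model learns vectors with hundreds of dimensions from data. The sketch only shows the matching step, in which the word from a command is compared with what the camera sees and the closest object is chosen.

```python
import math

# Hypothetical, hand-written embeddings (assumption: real models learn these).
TEXT_EMBEDDINGS = {
    "beer": [0.9, 0.1, 0.0],
    "apple": [0.1, 0.9, 0.1],
}

# Hypothetical embeddings of objects detected in the camera image.
IMAGE_EMBEDDINGS = {
    "can_on_table": [0.85, 0.15, 0.05],
    "fruit_in_bowl": [0.05, 0.95, 0.1],
    "glass": [0.3, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_target(word):
    """Pick the seen object whose vector lies closest to the word's vector."""
    query = TEXT_EMBEDDINGS[word]
    return max(
        IMAGE_EMBEDDINGS,
        key=lambda name: cosine_similarity(query, IMAGE_EMBEDDINGS[name]),
    )


if __name__ == "__main__":
    print(find_target("beer"))
```

Because words and images live in the same vector space, a single similarity measure is enough to link the command to an object in view.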

Researchers who deal with artificial intelligence on a deeper level have known for a while that integrating different data sources and models holds a lot of promise. However, the corresponding models have only recently become available and publicly accessible. What’s more, there is now enough computing power to get them up and running in tandem as well.

When Nava talks about these things, they sound simple and intuitive. But that’s deceptive: “You have to know the newest models really well, but that’s not enough; sometimes getting them up and running in tandem is an art rather than a science,” he says. It’s tricky problems like these that especially interest Nava. He can work on them for hours, continuously trying out new solutions.

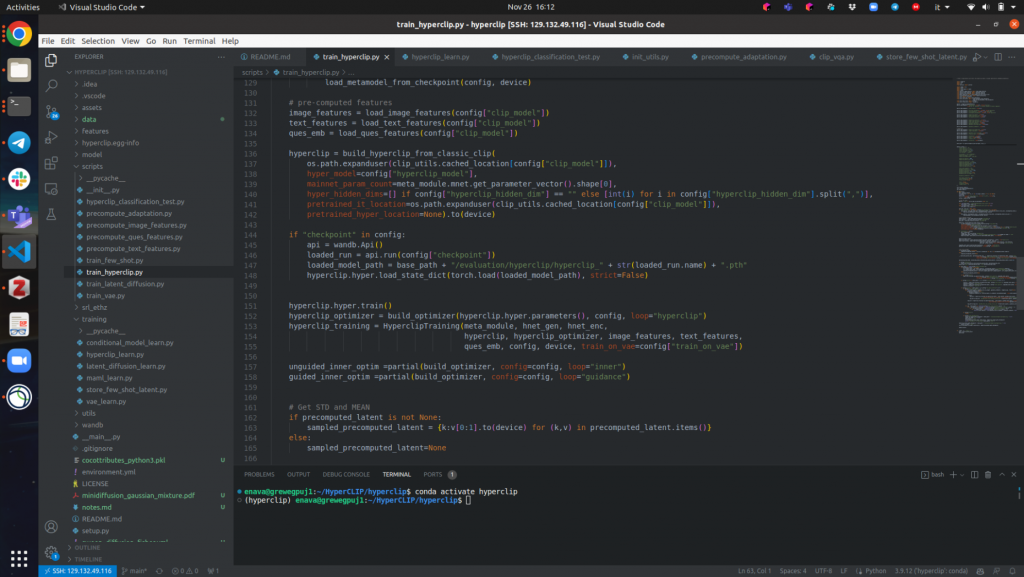

Nava spends the majority of his time coding. (Photograph: Elvis Nava)

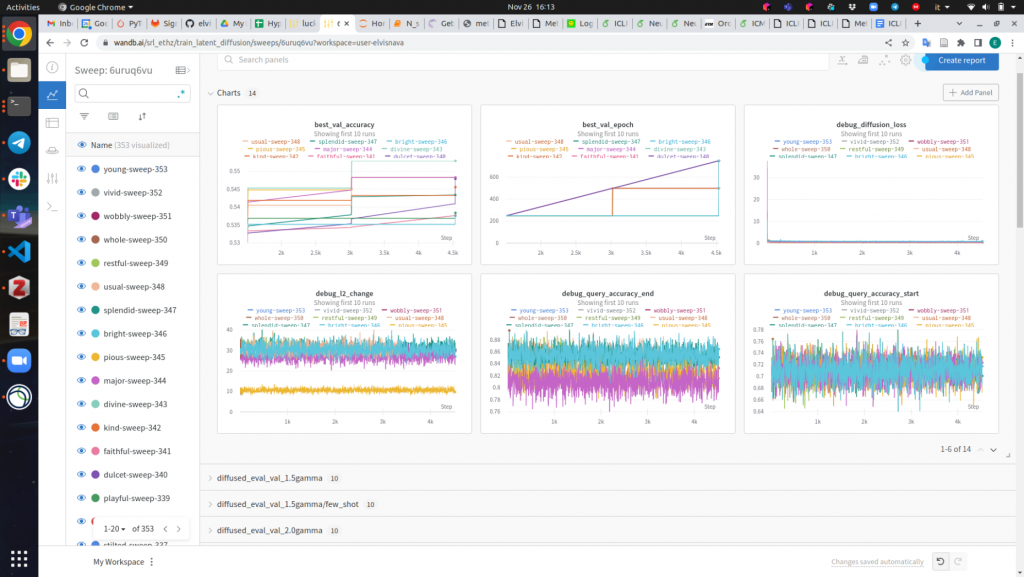

Nava evaluates his learning algorithm. The results of the experiment in a nutshell. (Photograph: Elvis Nava)

Special training: Imitating humans

Once the robot arm has completed preschool and has learnt to understand speech, recognise images and carry out simple movements, Nava sends it to special training. There, the machine learns to, say, imitate the movements of a human hand when pouring a glass of beer. “As this involves very specific sequences of movements, existing models no longer suffice,” Nava says.

Instead, he shows his learning algorithm a video of a hand pouring a glass of beer. Based on just a few examples, the robot then tries to imitate these movements, drawing on what it has learnt in preschool. Without prior knowledge, it simply wouldn’t be able to imitate such a complex sequence of movements.

“If the robot manages to pour the beer without spilling, we tell it ‘well done’ and it memorises the sequence of movements,” Nava says. This method is known as reinforcement learning in technical jargon.
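The “well done” principle can be illustrated with a very small Python sketch. It is a deliberate simplification and not the method used in the lab: the whole pouring movement is reduced to a single tilt angle, the function `pour` is a hypothetical stand-in for a real attempt, and the assumption that tilts between 40 and 60 degrees pour cleanly is made up. The learner uses one of the simplest reward-driven schemes, greedy trial and error with optimistic initial estimates: it assumes every tilt works until experience shows otherwise and keeps whatever earns the reward.

```python
def pour(tilt_degrees):
    """Hypothetical stand-in for a real attempt: True means nothing was spilled.

    Assumption for illustration: tilts from 40 to 60 degrees pour cleanly.
    """
    return 40 <= tilt_degrees <= 60


def learn_to_pour(candidate_tilts, attempts=10):
    """Learn by reward which tilt to use and return the best one found."""
    # Optimistic start: every tilt is assumed to work until it has been tried.
    value = {tilt: 1.0 for tilt in candidate_tilts}
    count = {tilt: 0 for tilt in candidate_tilts}

    for _ in range(attempts):
        # Try the tilt that currently looks most promising.
        tilt = max(candidate_tilts, key=value.get)
        reward = 1.0 if pour(tilt) else 0.0  # the "well done" signal
        count[tilt] += 1
        # Running average of the rewards observed for this tilt.
        value[tilt] += (reward - value[tilt]) / count[tilt]

    # The tilt with the highest estimate is the one the robot "memorises".
    return max(candidate_tilts, key=value.get)


if __name__ == "__main__":
    print(learn_to_pour([10, 30, 50, 80]))
```

A real robot would have to learn a whole sequence of joint movements from noisy feedback, which is why the prior knowledge acquired in preschool matters so much.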

Elvis Nava teaches robots to carry out oral commands such as “pour me a beer”. (Photograph: Daniel Winkler / ETH Zürich)

Foundations for robotic helpers

With this two-stage learning strategy, Nava hopes to get a little closer to realising the dream of creating an intelligent machine. How far it will take him, he does not yet know. “It’s unclear whether this approach will enable robots to carry out tasks we haven’t shown them before.”

It is much more likely that we will see robotic helpers that carry out oral commands and fulfil tasks they are already familiar with or that closely resemble them. Nava avoids making predictions as to how long it will take before these applications can be used in areas such as the care sector or construction.

Developments in the field of artificial intelligence are too fast and unpredictable. In fact, Nava would be quite happy if the robot would just hand him the beer he will politely request after his dissertation defence.

ETH Zurich is one of the leading international universities for technology and the natural sciences.
