
Consequently, content moderation (the monitoring of UGC) is essential for online experiences. In his book Custodians of the Internet, sociologist Tarleton Gillespie writes that effective content moderation is necessary for digital platforms to function, despite the "utopian notion" of an open internet. "There is no platform that does not impose rules, to some degree—not to do so would simply be untenable," he writes. "Platforms must, in some form or another, moderate: both to protect one user from another, or one group from its antagonists, and to remove the offensive, vile, or illegal—as well as to present their best face to new users, to their advertisers and partners, and to the public at large."

Content moderation is used to handle a wide range of content across industries. Skillful content moderation can help organizations keep their users safe, their platforms usable, and their reputations intact. A best-practices approach to content moderation draws on increasingly sophisticated and accurate technical solutions while backstopping those efforts with human skill and judgment.

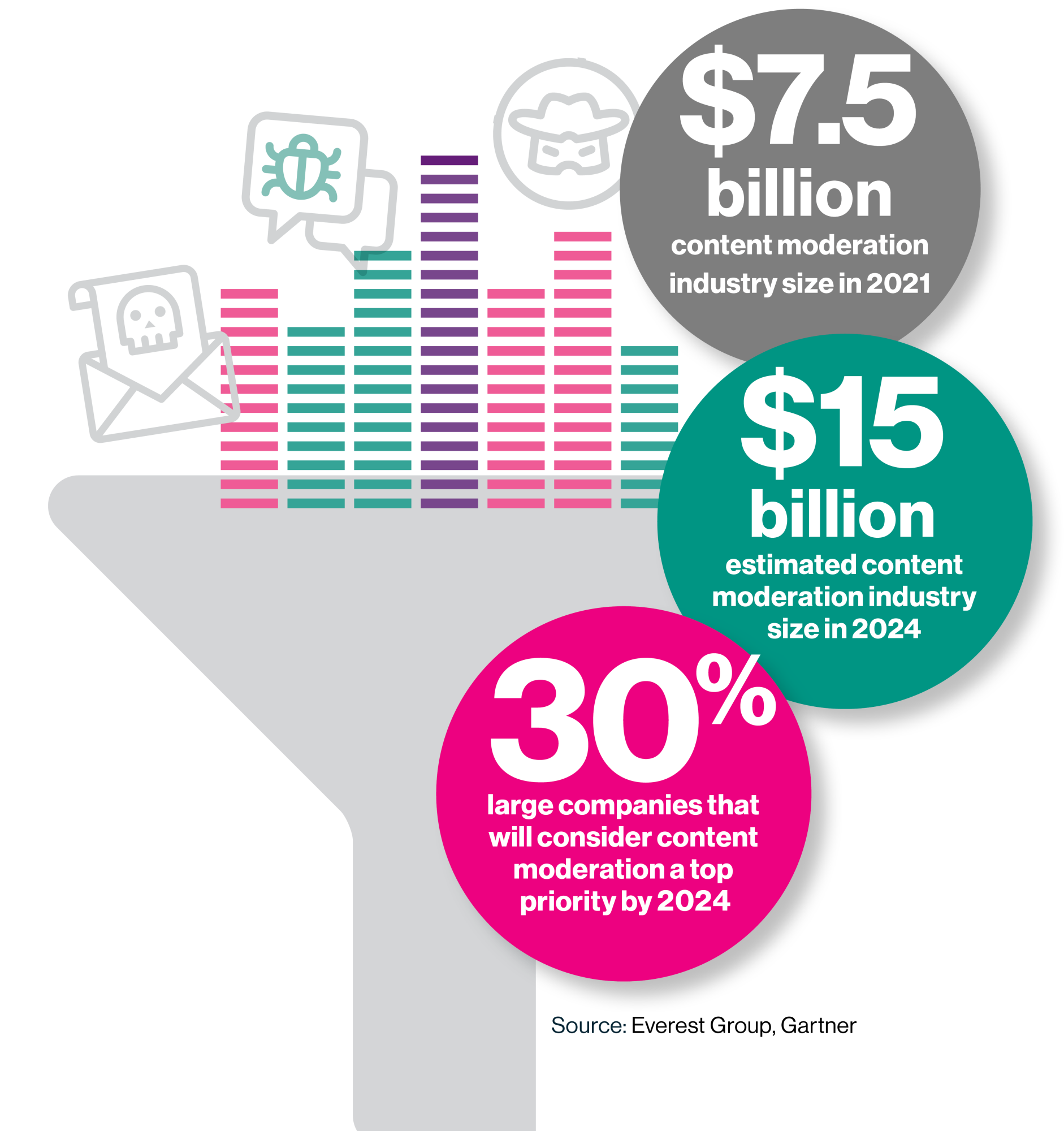

Content moderation is a rapidly growing industry, critical to all organizations and individuals who gather in digital spaces (which is to say, more than 5 billion people). According to Abhijnan Dasgupta, practice director specializing in trust and safety (T&S) at Everest Group, the industry was valued at roughly $7.5 billion in 2021, and experts anticipate that number will double by 2024. Gartner research suggests that nearly one-third (30%) of large companies will consider content moderation a top priority by 2024.

Content moderation: More than social media

Content moderators remove hundreds of thousands of pieces of problematic content every day. Facebook's Community Standards Enforcement Report, for example, documents that in Q3 2022 alone, the company removed 23.2 million instances of violent and graphic content and 10.6 million instances of hate speech, in addition to 1.4 billion spam posts and 1.5 billion fake accounts. But although social media may be the most widely reported example, a huge number of industries rely on UGC (everything from product reviews to customer service interactions) and consequently require content moderation.

"Any site that allows information to come in that's not internally produced has a need for content moderation," explains Mary L. Gray, a senior principal researcher at Microsoft Research who also serves on the faculty of the Luddy School of Informatics, Computing, and Engineering at Indiana University. Other sectors that rely heavily on content moderation include telehealth, gaming, e-commerce and retail, and the public sector and government.

In addition to removing offensive content, content moderation can detect and eliminate bots, identify and remove fake user profiles, address phony reviews and ratings, delete spam, police deceptive advertising, mitigate predatory content (especially content that targets minors), and facilitate safe two-way communication in online messaging systems.

One area of great concern is fraud, especially on e-commerce platforms. "There are a lot of bad actors and scammers trying to sell fake products—and there's also a big problem with fake reviews," says Akash Pugalia, the global president of trust and safety at Teleperformance, which provides non-egregious content moderation support for global brands. "Content moderators help ensure products follow the platform's guidelines, and they also remove prohibited goods."

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review's editorial staff.
