In a recent review published in the journal Nature, researchers examined recent breakthroughs in ligand discovery tools, their potential to reshape the drug discovery and development process, and the hurdles that remain.

Computer-assisted technologies for drug development have been in use for several years. Recently, pharma and academia have shifted toward embracing computational tools. This transition is facilitated by the abundance of data on ligand properties and binding to therapeutic targets, by three-dimensional (3D) protein structures, and by the emergence of on-demand virtual libraries comprising billions of drug-like small molecules. To make full use of these resources, fast computational approaches for effective giga-scale screening are required.

In the present review, the researchers summarized current knowledge on computer-assisted approaches in drug discovery and development (DDD).

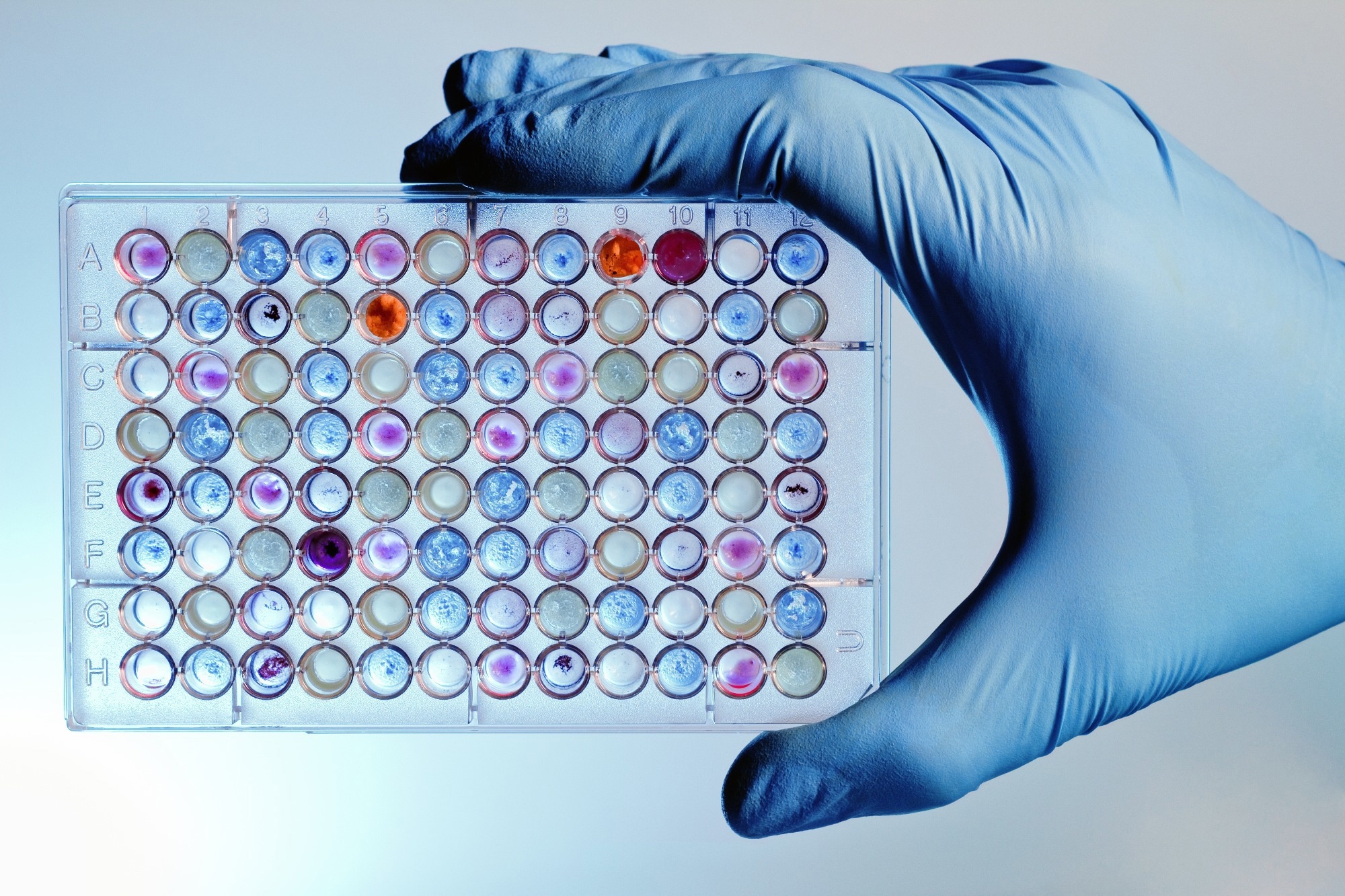

Study: Computational approaches streamlining drug discovery. Image Credit: angellodeco / Shutterstock

Virtual ligand screening (VLS) technology for identifying high-quality hits

The Protein Data Bank (PDB) contains >200,000 protein structures. High-resolution cryo-electron microscopy (cryo-EM) and X-ray crystallography structures cover >90% of protein families, and the remaining gaps are filled by AlphaFold2 and/or homology modeling. From 2015 to 2022, the chemical spaces used to screen and synthesize potential drug candidates grew from 10⁷ off-the-shelf molecules to >3.0 × 10¹⁰ molecules synthesized on demand, with the potential to expand to >10¹⁵ compounds.
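As a rough worked example, the growth from about 10⁷ to 3.0 × 10¹⁰ compounds between 2015 and 2022 can be expressed as a fold change and an implied annual growth factor. This sketch assumes smooth exponential growth between the two endpoints, which is a simplification, and because the 2022 figure is a lower bound (">3.0 × 10¹⁰") the result is a lower bound too. `growth_summary` is a hypothetical helper.

```python
def growth_summary(start_size: float, end_size: float, years: int) -> tuple[float, float]:
    """Return (fold increase, compound annual growth factor) between two library sizes."""
    fold = end_size / start_size
    annual_factor = fold ** (1.0 / years)  # assumes steady exponential growth
    return fold, annual_factor


# Endpoints quoted in the text: ~10^7 off-the-shelf (2015) vs >3.0 x 10^10 on-demand (2022).
fold, annual = growth_summary(1e7, 3.0e10, 2022 - 2015)
print(f"{fold:,.0f}-fold overall, about {annual:.2f}x per year")
```

Under this assumption the accessible space roughly tripled every year over the period.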

Compared with HTS (10⁵ to 10⁷ compounds) and fragment-based ligand discovery (FBLD; 10³ to 10⁵), giga-scale deoxyribonucleic acid (DNA)-encoded library (DEL) screening (10¹⁰) and giga-scale VLS (10¹⁰ to 10¹⁵) start from much larger initial libraries. Hit rates are similar for HTS and giga-scale DEL screening (0.01% to 0.5%), higher for FBLD (1.0% to 5.0%), and highest for VLS (10% to 40%, where the VLS figure represents the percentage of predicted hits that were confirmed experimentally).
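Because hit rates are measured against the number of compounds actually tested, they translate directly into assay workload. The sketch below uses only the ranges quoted above to estimate how many compounds would need to be tested to expect about 10 confirmed hits. `compounds_to_test` is a hypothetical helper, and the comparison ignores differences in hit quality (FBLD hits, for example, are weak-binding fragments).

```python
import math

# Hit-rate ranges (percent of tested compounds) as quoted in the review summary.
HIT_RATE_PERCENT = {
    "HTS": (0.01, 0.5),
    "DEL": (0.01, 0.5),
    "FBLD": (1.0, 5.0),
    "VLS": (10.0, 40.0),
}


def compounds_to_test(target_hits: int, hit_rate_percent: float) -> int:
    """Expected number of compounds to assay to obtain `target_hits` confirmed hits."""
    expected = target_hits * 100.0 / hit_rate_percent
    # Round first so floating-point noise (e.g. 100000.00000000001) does not bump ceil().
    return math.ceil(round(expected, 6))


for method, (low, high) in HIT_RATE_PERCENT.items():
    best_case = compounds_to_test(10, high)
    worst_case = compounds_to_test(10, low)
    print(f"{method}: {best_case:,} to {worst_case:,} compounds for ~10 hits")
```

With these ranges, HTS and DEL screening need thousands to a hundred thousand assayed compounds for ten hits, whereas VLS needs on the order of tens to a hundred synthesized and tested candidates.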

The affinity of initial hits is very weak for FBLD (100 to 1,000 μM, reflecting the small size of fragments), weak for HTS (1.0 to 10 μM), medium for DEL screening (0.1 to 10 μM), and medium to high for VLS (0.010 to 10 μM). Beyond quantitative structure-activity relationship (QSAR)-based optimization to identify leads, HTS requires custom synthesis to explore structure-activity relationships, FBLD requires growing or merging of fragments, and DEL screening requires resynthesis of label-free hits.
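Affinities in micromolar units map onto binding free energies through the standard thermodynamic relation ΔG° = RT ln(Kd / 1 M). This relation is textbook thermodynamics rather than something taken from the review. The sketch below assumes 25 °C and a 1 M standard state, and it shows that each 10-fold improvement in Kd is worth roughly 1.36 kcal/mol.

```python
import math

R_KCAL = 1.987204e-3  # gas constant, kcal/(mol*K)


def binding_free_energy(kd_molar: float, temperature_k: float = 298.15) -> float:
    """Standard binding free energy in kcal/mol: dG = RT * ln(Kd / 1 M)."""
    return R_KCAL * temperature_k * math.log(kd_molar)


# Initial-hit affinity ranges in micromolar, as summarized in the text.
AFFINITY_RANGES_UM = {
    "FBLD": (100.0, 1000.0),
    "HTS": (1.0, 10.0),
    "DEL": (0.1, 10.0),
    "VLS": (0.010, 10.0),
}

for method, (tightest_um, weakest_um) in AFFINITY_RANGES_UM.items():
    weak = binding_free_energy(weakest_um * 1e-6)   # convert uM to M
    tight = binding_free_energy(tightest_um * 1e-6)
    print(f"{method}: {weak:.1f} to {tight:.1f} kcal/mol")
```

More negative values mean tighter binding, so the VLS range extends about 4 kcal/mol deeper than the HTS range.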

For VLS, lead optimization relies on quantitative structure-activity relationships built from catalog structures and requires about one-tenth as many custom syntheses (0 to 50) as HTS, FBLD, and DEL screening to identify leads. Further, HTS and FBLD do not generate novel hits: HTS hits require scaffold hopping or modification, and FBLD hits require rational design to achieve intellectual property (IP) novelty. In contrast, most VLS hits are novel.

HTS limitations include modest library sizes, unknown binding modes, and expensive equipment. FBLD limitations include the need for expensive nuclear magnetic resonance (NMR), surface plasmon resonance (SPR), and X-ray crystallography equipment, as well as many optimization steps. DEL screening yields many false positives and requires off-DNA resynthesis of hits. VLS requires computational resources, although modular VLS approaches have reduced these requirements by >1,000-fold.
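To put the >1,000-fold reduction in perspective, here is a back-of-the-envelope estimate of the CPU time needed to dock a 10¹⁰-compound library. The per-compound docking cost of 10 CPU-seconds is a hypothetical assumption chosen only for illustration. Real costs vary widely with the docking program, its settings, and the hardware.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def core_years(n_compounds: float, seconds_per_compound: float) -> float:
    """CPU core-years needed to dock `n_compounds` at a fixed per-compound cost."""
    return n_compounds * seconds_per_compound / SECONDS_PER_YEAR


# Hypothetical docking cost per compound; real costs depend on the program and settings.
ASSUMED_SECONDS_PER_COMPOUND = 10.0
REDUCTION_FACTOR = 1000  # the ">1,000-fold" reduction quoted for modular VLS

brute_force = core_years(1e10, ASSUMED_SECONDS_PER_COMPOUND)
modular = brute_force / REDUCTION_FACTOR
print(f"brute force: ~{brute_force:,.0f} core-years; modular: ~{modular:.1f} core-years")
```

Under these assumptions, brute-force docking would take about 3,200 core-years, while a 1,000-fold reduction brings it down to roughly 3 core-years. The second figure is within reach of a modest compute cluster.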

Virtual screening algorithms are based on protein structures, ligands, or both. Structure-based algorithms require high-resolution protein structures, whereas ligand-based ones require large datasets of ligand activities. Hybrid screening requires data on both ligand activity and 3D protein-ligand complexes to generate three-dimensional interaction fingerprints and artificial intelligence (AI)-based models.
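The interaction-fingerprint idea can be illustrated with a minimal sketch. It assumes that each fingerprint is stored as a set of (residue, interaction type) pairs and that fingerprints are compared with Tanimoto similarity. Tanimoto is a common choice, but it is not necessarily the metric used by the tools discussed in the review. All residue labels and poses are hypothetical, and real pipelines derive these contacts from 3D complexes with dedicated software, often encoding them as bit vectors.

```python
# Each interaction is a (residue, interaction type) pair; all residue labels are hypothetical.
Interaction = tuple[str, str]


def tanimoto(a: frozenset[Interaction], b: frozenset[Interaction]) -> float:
    """Tanimoto (Jaccard) similarity between two set-based interaction fingerprints."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


# Interaction fingerprint of a known active ligand in a hypothetical 3D complex.
reference = frozenset({
    ("ASP113", "salt_bridge"),
    ("SER203", "h_bond"),
    ("PHE290", "pi_stack"),
    ("TRP286", "hydrophobic"),
})

# Fingerprints of two hypothetical docked poses from a virtual screen.
poses = {
    "pose_1": frozenset({("ASP113", "salt_bridge"), ("SER203", "h_bond"),
                         ("PHE290", "pi_stack"), ("TYR308", "h_bond")}),
    "pose_2": frozenset({("ASP113", "salt_bridge"), ("VAL114", "hydrophobic"),
                         ("ASN312", "h_bond")}),
}

# Rank poses by how closely they reproduce the reference interaction pattern.
ranking = sorted(poses, key=lambda name: tanimoto(reference, poses[name]), reverse=True)
print(ranking)
```

Here `pose_1` shares three of five distinct interactions with the reference (similarity 0.6), while `pose_2` shares one of six, so `pose_1` ranks first.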

Chemical library types and computation-driven technology to streamline drug discovery

Pharma companies screen vast in-house compound collections, whereas vendor collections allow rapid (<1 week) delivery of in-stock molecules featuring unique chemical scaffolds that are easily searchable and compatible with high-throughput screening (HTS). However, the cost of maintaining physical compound libraries, their slow growth, and their small size limit their applicability.

On-demand REAL libraries and chemical spaces enable rapid parallel synthesis of molecules from >12,000 building blocks using >180 reactions, with a synthesis success rate of >80% and delivery within 2 to 3 weeks. Examples include Galaxy by WuXi, Enamine REAL, and CHEMriya by Otava. Adding more synthons (e.g., using the V-SYNTHES algorithm) and reaction scaffolds enables high novelty and rapid polynomial growth of virtual chemical spaces for drug development.
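The "polynomial growth" of on-demand spaces follows from how they are counted. Each reaction contributes the product of its synthon-set sizes, so adding synthons grows the space as a polynomial whose degree equals the number of reaction components. The sketch below assumes every synthon combination is chemically compatible, which real spaces do not guarantee. The reaction names and counts are hypothetical.

```python
from math import prod


def space_size(reactions: dict[str, list[int]]) -> int:
    """Count enumerable products: sum over reactions of the product of synthon-set sizes."""
    return sum(prod(sizes) for sizes in reactions.values())


# Hypothetical reactions, each listing the number of synthons per reaction component.
toy_space = {
    "two_component": [2_000, 3_000],
    "three_component": [500, 400, 300],
}

# Doubling every synthon set multiplies a two-component reaction by 2**2
# and a three-component reaction by 2**3: growth is polynomial in synthon count.
doubled = {name: [2 * n for n in sizes] for name, sizes in toy_space.items()}

print(f"{space_size(toy_space):,} -> {space_size(doubled):,} products")
```

Doubling the building blocks takes this toy space from 66 million to 504 million products. Most of that gain comes from the three-component reaction.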

The V-SYNTHES algorithm can efficiently screen REAL space (>31 billion, or >3.0 × 10¹⁰, compounds) as well as expanded chemical spaces of >10¹⁵ compounds, by fully enumerating only the molecules that best fit the target pocket. Generative spaces (GDB-13, GDB-17, GDB-18, and GDBChEMBL) include all theoretically conceivable molecules within their construction rules. Such spaces are limited only by theoretical plausibility, with an estimated 10²³ to 10⁶⁰ drug-like molecules.
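The strategy of fully enumerating only the molecules that fit the pocket best can be illustrated in a simplified, two-component form. This sketch captures only the general hierarchical idea and is not the published V-SYNTHES algorithm. It assumes a docking-style score where lower is better. In the first step, each synthon of one component is scored as a capped fragment and only the best few are kept. In the second step, only products built from those kept synthons are enumerated and scored. `fragment_score`, `full_score`, and the toy data are hypothetical stand-ins for docking.

```python
import heapq
from typing import Callable, Sequence


def hierarchical_screen(
    synthons_a: Sequence[str],
    synthons_b: Sequence[str],
    fragment_score: Callable[[str], float],
    full_score: Callable[[str, str], float],
    keep_a: int,
    top_n: int,
) -> tuple[list[tuple[float, str, str]], int]:
    """Two-step synthon-based screen (lower score = better, as with docking scores).

    Returns the best `top_n` (score, synthon_a, synthon_b) products and the
    number of scoring calls made.
    """
    # Step 1: score every A synthon as a capped fragment and keep the best few.
    best_a = heapq.nsmallest(keep_a, synthons_a, key=fragment_score)
    calls = len(synthons_a)

    # Step 2: fully enumerate and score only products built on the kept A synthons.
    scored = []
    for a in best_a:
        for b in synthons_b:
            scored.append((full_score(a, b), a, b))
            calls += 1
    return heapq.nsmallest(top_n, scored), calls


# Toy data: 1,000 x 1,000 = 1,000,000 possible products.
A = [f"A{i}" for i in range(1000)]
B = [f"B{j}" for j in range(1000)]


def toy_fragment_score(a: str) -> float:
    return -float(int(a[1:]) % 97)  # hypothetical stand-in for docking a capped fragment


def toy_full_score(a: str, b: str) -> float:
    return toy_fragment_score(a) - float(int(b[1:]) % 89)  # hypothetical full-compound score


hits, n_calls = hierarchical_screen(A, B, toy_fragment_score, toy_full_score, keep_a=10, top_n=5)
print(hits[0], n_calls)
```

In this toy case, 11,000 scoring calls replace 1,000,000. The trade-off is that a product whose A fragment scores poorly on its own is never enumerated, so `keep_a` controls how much is risked in exchange for the speed-up.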

Although generative spaces provide broad coverage, the synthesis success rates and synthetic routes of the resulting compounds are unknown, so their synthesizability must be estimated computationally. In generative spaces, atomic graphs are used to generate saturated hydrocarbon structures and skeletons of unsaturated molecules. These skeletons are then expanded by heteroatom substitution and converted into meaningful compounds.
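The heteroatom-substitution step can be sketched on a toy scale. The example below enumerates element assignments for a fixed hydrocarbon skeleton. It assumes single bonds only and a simple valence rule: carbon may carry up to 4 skeleton bonds, nitrogen up to 3, and oxygen up to 2. Real GDB enumeration also introduces unsaturation, removes symmetry-equivalent duplicates, and applies stability and chemistry filters, none of which are modeled here.

```python
from itertools import product

# Maximum number of single bonds per element (hydrogens fill the remaining valence).
MAX_VALENCE = {"C": 4, "N": 3, "O": 2}


def heteroatom_variants(n_atoms: int, edges: list[tuple[int, int]]):
    """Yield every element assignment of a saturated skeleton that respects valence."""
    degree = [0] * n_atoms
    for i, j in edges:
        degree[i] += 1
        degree[j] += 1
    # Elements allowed at each atom, given how many skeleton bonds it carries.
    choices = [[el for el, v in MAX_VALENCE.items() if v >= d] for d in degree]
    yield from product(*choices)


# Isobutane skeleton: a central atom (0) bonded to three terminal atoms.
isobutane_edges = [(0, 1), (0, 2), (0, 3)]
variants = list(heteroatom_variants(4, isobutane_edges))
print(len(variants))  # 2 options at the center (C, N) x 3**3 at the ends = 54
```

The count includes symmetry-equivalent assignments, such as an oxygen on any one of the three terminal positions. A real enumerator would collapse these duplicates by canonicalizing each structure.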

Computation-driven drug discovery relies on readily accessible on-demand or generative virtual chemical spaces, together with structure-based and AI-based computational tools that streamline the drug discovery process. Compared with the standard gene-to-lead timeline of 4 to 6 years, computation-driven technology can identify potential drug candidates within 2 to 12 months.

Fast flexible docking, deep learning, or scoring approaches, combined with higher-accuracy post-processing tools based on quantum mechanics and free energy perturbation (FEP), can increase the yield of high-affinity hits from giga-scale chemical spaces. In addition, rapidly expanding low-cost cloud computing, specialized chips, and graphics processing unit (GPU) acceleration support these computational tools.
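Pairing a fast first pass with a slower, more accurate rescoring step amounts to a screening funnel. The following minimal sketch assumes that both scores are "lower is better." `fast_score` and `accurate_score` are hypothetical placeholders standing in for docking and for QM- or FEP-based rescoring; neither method is implemented here.

```python
from typing import Callable, Iterable


def screening_funnel(
    compounds: Iterable[str],
    fast_score: Callable[[str], float],
    accurate_score: Callable[[str], float],
    rescore_fraction: float,
) -> list[tuple[float, str]]:
    """Rank everything with a cheap score, then re-rank the top fraction with an expensive one."""
    ranked = sorted(compounds, key=fast_score)
    n_keep = max(1, int(len(ranked) * rescore_fraction))
    shortlist = ranked[:n_keep]
    # Only the shortlist pays for the expensive calculation.
    return sorted((accurate_score(c), c) for c in shortlist)


library = [f"cmpd{i}" for i in range(1000)]
results = screening_funnel(
    library,
    fast_score=lambda c: float(int(c[4:]) % 100),  # hypothetical cheap score
    accurate_score=lambda c: -float(int(c[4:])),   # hypothetical expensive score
    rescore_fraction=0.01,
)
print(results[:3])
```

With `rescore_fraction=0.01`, the expensive method runs on only 10 of the 1,000 compounds. The fraction is the main dial trading accuracy against cost.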

Based on the review findings, the DDD ecosystem appears to be shifting from computer-aided to computer-driven, enabling rapid and cost-effective discovery of potent and selective leads with elaborate potency prediction tools. However, computational predictions require validation through in vitro and in vivo experiments at every step of the drug discovery pipeline.