
Natural language enables flexible descriptive queries about images. The interaction between text queries and images grounds linguistic meaning in the visual world, facilitating a better understanding of object relationships, human intentions towards objects, and interactions with the environment. The research community has studied object-level visual grounding through a range of tasks, including referring expression comprehension, text-based localization, and more broadly object detection, each of which requires different skills in a model. For example, object detection seeks to find all objects from a predefined set of classes, which requires accurate localization and classification, while referring expression comprehension localizes an object from a referring text and often requires complex reasoning on prominent objects. At the intersection of the two is text-based localization, in which a simple category-based text query prompts the model to detect the objects of interest.

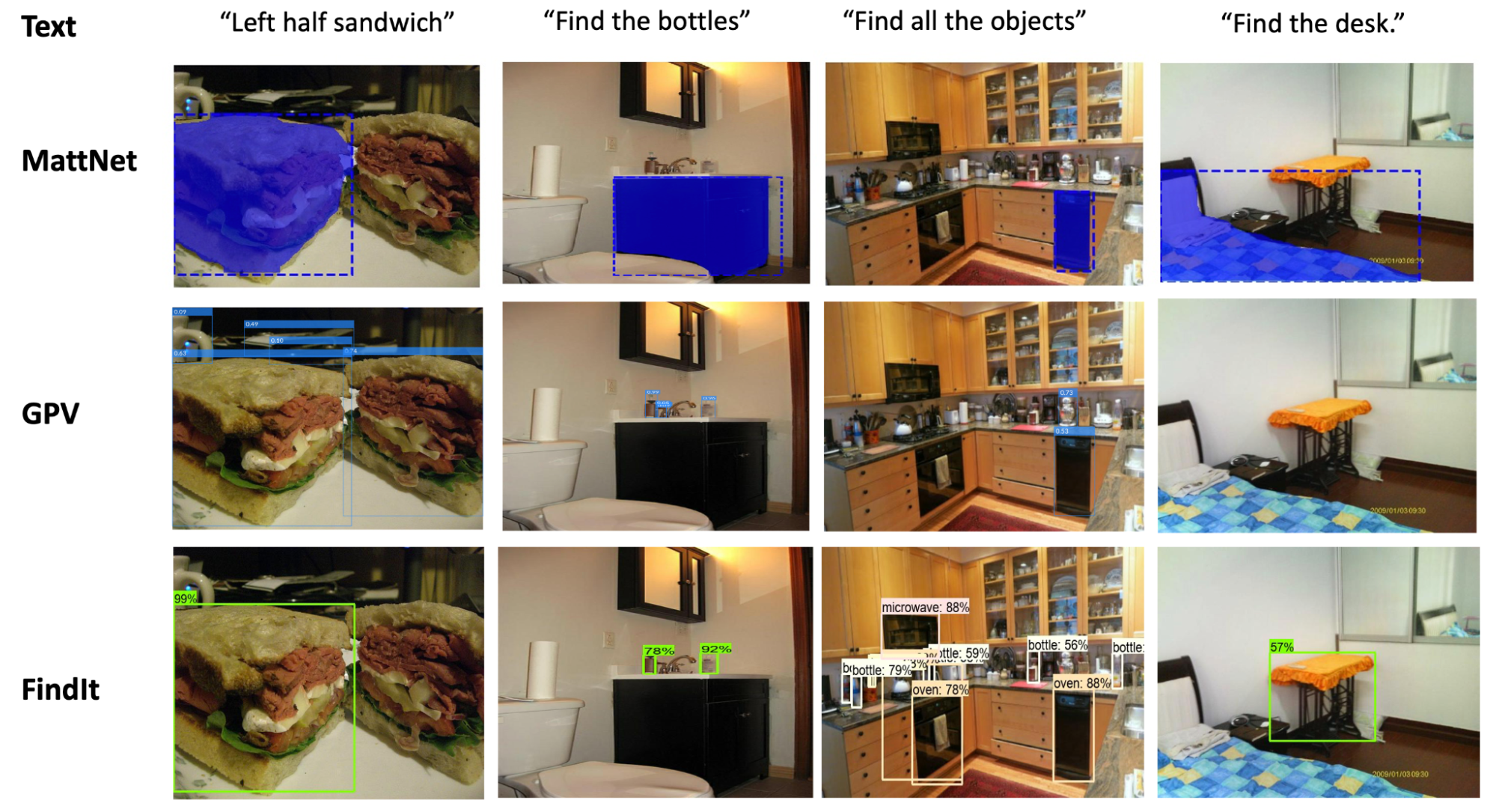

Due to their dissimilar task properties, referring expression comprehension, detection, and text-based localization are mostly studied through separate benchmarks, with most models dedicated to only one task. As a result, existing models have not adequately synthesized information from the three tasks to achieve a more holistic visual and linguistic understanding. Referring expression comprehension models, for instance, are trained to predict one object per image, and often struggle to localize multiple objects, reject negative queries, or detect novel categories. In addition, detection models are unable to process text inputs, and text-based localization models often struggle to process complex queries that refer to one object instance, such as “Left half sandwich.” Lastly, none of the models can generalize sufficiently well beyond their training data and categories.

To address these limitations, we are presenting “FindIt: Generalized Localization with Natural Language Queries” at ECCV 2022. Here we propose a unified, general-purpose and multitask visual grounding model, called FindIt, that can flexibly answer different types of grounding and detection queries. Key to this architecture is a multi-level cross-modality fusion module that can perform complex reasoning for referring expression comprehension and simultaneously recognize small and challenging objects for text-based localization and detection. In addition, we discover that a standard object detector and detection losses are sufficient and surprisingly effective for all three tasks, without the need for the task-specific design and losses common in existing works. FindIt is simple, efficient, and outperforms alternative state-of-the-art models on the referring expression comprehension and text-based localization benchmarks, while being competitive on the detection benchmark.

FindIt is a unified model for referring expression comprehension (col. 1), text-based localization (col. 2), and the object detection task (col. 3). FindIt can respond accurately when tested on object types/classes not known during training, e.g. “Find the desk” (col. 4). Compared to existing baselines (MattNet and GPV), FindIt can perform these tasks well and in a single model.

Multi-level Image-Text Fusion

Different localization tasks are created with different semantic understanding objectives. For example, because the referring expression task primarily references prominent objects in the image rather than small, occluded or faraway objects, low-resolution images generally suffice. In contrast, the detection task aims to detect objects with various sizes and occlusion levels in higher-resolution images. Apart from these benchmarks, the general visual grounding problem is inherently multiscale, as natural queries can refer to objects of any size. This motivates the need for a multi-level image-text fusion model for efficient processing of higher-resolution images over different localization tasks.

The premise of FindIt is to fuse the higher-level semantic features using more expressive transformer layers, which can capture all-pair interactions between image and text. For the lower-level and higher-resolution features, we use a cheaper dot-product fusion to save computation and memory cost. We attach a detector head (e.g., Faster R-CNN) on top of the fused feature maps to predict the boxes and their classes.

FindIt accepts an image and a query text as inputs, and processes them separately in image/text backbones before applying the multi-level fusion. We feed the fused features to Faster R-CNN to predict the boxes referred to by the text. The feature fusion uses more expressive transformers at higher levels and cheaper dot-product fusion at the lower levels.
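As a rough illustration of the multi-level idea, and not the FindIt implementation, the sketch below applies all-pair attention only at the coarsest pyramid level and a cheap dot-product gate at the finer levels. Everything in it is an assumption made for illustration: the function names, the single-head attention without learned projections, the mean-pooled text vector, and the reading of "dot-product fusion" as scaling each image feature by its similarity to the text.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fusion(image_tokens, text_tokens):
    """All-pair fusion: each image token attends to every image and text token.

    Simplification (assumption): one head, no learned query/key/value weights.
    Cost grows with len(image_tokens) * (len(image_tokens) + len(text_tokens)).
    """
    tokens = image_tokens + text_tokens
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    fused = []
    for query in image_tokens:
        weights = softmax([dot(query, key) / scale for key in tokens])
        fused.append(
            [sum(w * tok[i] for w, tok in zip(weights, tokens)) for i in range(dim)]
        )
    return fused


def dot_product_fusion(image_tokens, text_tokens):
    """Cheap fusion: gate each image token by its dot product with pooled text.

    Cost grows linearly with len(image_tokens).
    """
    dim = len(text_tokens[0])
    pooled = [sum(t[i] for t in text_tokens) / len(text_tokens) for i in range(dim)]
    return [[v * dot(token, pooled) for v in token] for token in image_tokens]


def multi_level_fusion(pyramid, text_tokens, num_attention_levels=1):
    """pyramid: feature levels ordered from finest (first) to coarsest (last)."""
    first_attention_level = len(pyramid) - num_attention_levels
    fused = []
    for level, image_tokens in enumerate(pyramid):
        if level >= first_attention_level:
            fused.append(attention_fusion(image_tokens, text_tokens))
        else:
            fused.append(dot_product_fusion(image_tokens, text_tokens))
    return fused


# Toy inputs: a fine level with four locations, a coarse level with one.
pyramid = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]],
    [[1.0, 2.0]],
]
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
fused_levels = multi_level_fusion(pyramid, text_tokens)
```

The point of the split is cost: the attention branch compares every pair of tokens, so it is reserved for the level with the fewest spatial locations, while the gate touches each fine-level location once. In a real model the fused levels would then be passed to the detector head.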

Multitask Learning

Apart from the multi-level fusion described above, we adapt the text-based localization and detection tasks to take the same inputs as the referring expression comprehension task. For the text-based localization task, we generate a set of queries over the categories present in the image. For any present category, the text query takes the form “Find the [object],” where [object] is the category name. The objects corresponding to that category are labeled as foreground and the other objects as background. For the detection task, instead of the aforementioned prompt, we use a static prompt such as “Find all the objects.” We found that the specific choice of prompts is not important for the text-based localization and detection tasks.
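The query construction described above can be sketched in a few lines. This is a minimal illustration rather than the actual data pipeline: the `Box` type, the function names, and the `(query, target_boxes)` sample layout are hypothetical, and only the two prompt strings come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    category: str
    xyxy: tuple  # (x_min, y_min, x_max, y_max); hypothetical layout


DETECTION_PROMPT = "Find all the objects."


def localization_queries(boxes):
    """Build one (query, foreground_boxes) pair per category present in the image.

    Boxes of any other category are implicitly background for that query.
    """
    samples = []
    for category in sorted({box.category for box in boxes}):
        query = f"Find the {category}"
        foreground = [box for box in boxes if box.category == category]
        samples.append((query, foreground))
    return samples


def detection_query(boxes):
    """Detection uses one static prompt; every annotated box is a target."""
    return (DETECTION_PROMPT, list(boxes))


annotations = [
    Box("dog", (0, 0, 10, 10)),
    Box("cat", (5, 5, 20, 20)),
    Box("dog", (30, 30, 40, 40)),
]
loc_samples = localization_queries(annotations)
det_sample = detection_query(annotations)
```

With this toy image, the localization task yields two queries ("Find the cat" and "Find the dog"), and the dog query has two foreground boxes, while the detection query keeps all three boxes as targets.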

After adaptation, all tasks in consideration share the same inputs and outputs: an image input, a text query, and a set of output bounding boxes and classes. We then combine the datasets and train on the mixture. Finally, we use the standard object detection losses for all tasks, which we found to be surprisingly simple and effective.
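Because every task now shares one sample format, training on the mixture amounts to drawing batches from the pooled datasets. The sketch below shows one simple way to do that, under stated assumptions: the task names, the `mixture_batches` helper, and the per-task sampling weights are all hypothetical, and the mixing ratios actually used for FindIt are not given here.

```python
import random


def mixture_batches(datasets, weights, batch_size, num_batches, seed=0):
    """Yield batches whose samples are drawn from several task datasets.

    datasets: dict mapping a task name to a list of (image_id, query, boxes).
    weights:  dict mapping a task name to a relative sampling weight (assumed).
    """
    rng = random.Random(seed)
    names = list(datasets)
    task_weights = [weights[name] for name in names]
    for _ in range(num_batches):
        batch = []
        for _ in range(batch_size):
            task = rng.choices(names, weights=task_weights, k=1)[0]
            batch.append((task, rng.choice(datasets[task])))
        yield batch


# Toy datasets; the boxes are placeholder (x_min, y_min, x_max, y_max) tuples.
toy_datasets = {
    "referring_expression": [("img1", "Left half sandwich", [(0, 0, 5, 5)])],
    "localization": [("img2", "Find the dog", [(1, 1, 4, 4), (6, 6, 9, 9)])],
    "detection": [("img3", "Find all the objects.", [(0, 0, 2, 2)])],
}
toy_weights = {"referring_expression": 1.0, "localization": 1.0, "detection": 1.0}
batches = list(mixture_batches(toy_datasets, toy_weights, batch_size=4, num_batches=3))
```

Since each sample carries an image, a query, and target boxes regardless of its task, the same detection losses can be applied to every element of a batch without branching on the task name.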

Evaluation

We apply FindIt to the popular RefCOCO benchmark for referring expression comprehension tasks. When only the COCO and RefCOCO datasets are available, FindIt outperforms the state-of-the-art model on all tasks. In the settings where external datasets are allowed, FindIt sets a new state of the art by using COCO and all RefCOCO splits together (no other datasets). On the challenging Google and UMD splits, FindIt outperforms the state of the art by a 10% margin. Taken together, these results demonstrate the benefits of multitask learning.

Comparison with the state of the art on the popular referring expression benchmark. FindIt is superior on both the COCO and unconstrained settings (additional training data allowed).

On the text-based localization benchmark, FindIt achieves 79.7%, higher than the GPV (73.0%) and Faster R-CNN (75.2%) baselines. Please refer to the paper for more quantitative evaluation.

We further observe that FindIt generalizes better to novel categories and super-categories in the text-based localization task compared to competitive single-task baselines on the popular COCO and Objects365 datasets, shown in the figure below.

Efficiency

We also benchmark the inference times on the referring expression comprehension task (see table below). FindIt is efficient and comparable with existing one-stage approaches while achieving higher accuracy. For fair comparison, all running times are measured on one GTX 1080Ti GPU.

| Model | Image Size | Backbone | Runtime (ms) |
| --- | --- | --- | --- |
| MattNet | 1000 | R101 | 378 |
| FAOA | 256 | DarkNet53 | 39 |
| MCN | 416 | DarkNet53 | 56 |
| TransVG | 640 | R50 | 62 |
| FindIt (Ours) | 640 | R50 | 107 |
| FindIt (Ours) | 384 | R50 | 57 |

Conclusion

We present FindIt, which unifies referring expression comprehension, text-based localization, and object detection tasks. We propose multi-scale cross-attention to unify the diverse localization requirements of these tasks. Without any task-specific design, FindIt surpasses the state of the art on referring expression and text-based localization, shows competitive performance on detection, and generalizes better to out-of-distribution data and novel classes. All of these are achieved in a single, unified, and efficient model.

Acknowledgements

This work was conducted by Weicheng Kuo, Fred Bertsch, Wei Li, AJ Piergiovanni, Mohammad Saffar, and Anelia Angelova. We would like to thank Ashish Vaswani, Prajit Ramachandran, Niki Parmar, David Luan, Tsung-Yi Lin, and other colleagues at Google Research for their advice and helpful discussions. We would like to thank Tom Small for preparing the animation.