
By Jędrzej Orbik, Charles Sun, Coline Devin, Glen Berseth

Reinforcement learning provides a conceptual framework for autonomous agents to learn from experience, analogously to how one might train a pet with treats. But practical applications of reinforcement learning are often far from natural: instead of using RL to learn through trial and error by actually attempting the desired task, typical RL applications use a separate (usually simulated) training phase. For example, AlphaGo did not learn to play Go by competing against thousands of humans, but rather by playing against itself in simulation. While this kind of simulated training is appealing for games where the rules are perfectly known, applying it to real-world domains such as robotics can require a range of complex approaches, such as the use of simulated data, or instrumenting real-world environments in various ways to make training feasible under laboratory conditions. Can we instead devise reinforcement learning systems for robots that allow them to learn directly "on-the-job", while performing the task that they are required to do? In this blog post, we will discuss ReLMM, a system we developed that learns to clean up a room directly with a real robot via continual learning.

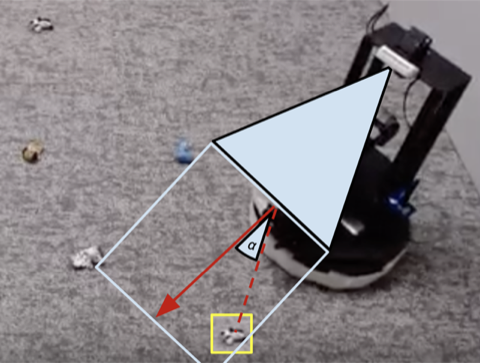

We evaluate our method on different tasks that range in difficulty. The top-left task has uniform white blobs to pick up with no obstacles, while other rooms have objects of diverse shapes and colors, obstacles that increase navigation difficulty and obscure the objects, and patterned rugs that make it difficult to see the objects against the ground.

For "on-the-job" training in the real world, the main obstacle is that collecting more experience is prohibitively difficult. If we can make training in the real world easier, by making the data-gathering process more autonomous without requiring human monitoring or intervention, we can further benefit from the simplicity of agents that learn from experience. In this work, we design an "on-the-job" mobile robot training system for cleaning that learns to grasp objects throughout different rooms.

Lesson 1: The Benefits of Modular Policies for Robots

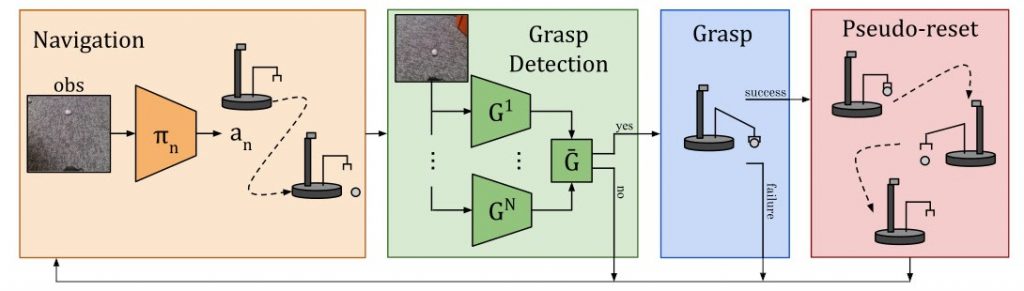

People are not born one day and interviewing for jobs the next. There are many levels of tasks people learn before they apply for a job: we start with the easier ones and build on them. In ReLMM, we make use of this concept by allowing robots to train common, reusable skills, such as grasping, by first encouraging the robot to prioritize training these skills before learning later skills, such as navigation. Learning in this fashion has two advantages for robotics. The first advantage is that when an agent focuses on learning a skill, it is more efficient at collecting data around the local state distribution for that skill.
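To make the idea of prioritizing a reusable skill concrete, here is a minimal Python sketch of a two-phase schedule. It assumes a hypothetical budget `grasp_only_steps` during which only the grasping skill is trained, after which navigation training is added; the `Skill` and `ModularCurriculum` classes are illustrative placeholders, not ReLMM's actual code, and the real system's schedule may differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Skill:
    """Hypothetical stand-in for a learnable skill (e.g. grasping or navigation)."""
    name: str
    updates: int = 0

    def train_step(self) -> None:
        # A real skill would run a gradient update on its own replay buffer here.
        self.updates += 1


@dataclass
class ModularCurriculum:
    """Train the reusable low-level skill first, then add the higher-level skill.

    grasp_only_steps is an assumed hyperparameter: how many steps grasping is
    trained on its own before navigation training begins.
    """
    grasp: Skill
    navigation: Skill
    grasp_only_steps: int
    log: List[str] = field(default_factory=list)

    def step(self, t: int) -> None:
        # The grasping skill is trained at every step.
        self.grasp.train_step()
        self.log.append(self.grasp.name)
        # Navigation training starts only after grasping has had its head start.
        if t >= self.grasp_only_steps:
            self.navigation.train_step()
            self.log.append(self.navigation.name)


curriculum = ModularCurriculum(Skill("grasp"), Skill("navigation"), grasp_only_steps=3)
for t in range(5):
    curriculum.step(t)
print(curriculum.grasp.updates, curriculum.navigation.updates)  # prints 5 2
```

Keeping each skill as a separate object is what makes the modular benefits discussed below possible: the grasping module can be trained, inspected, or reused independently of the navigation module.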

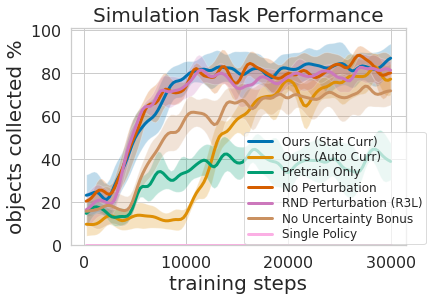

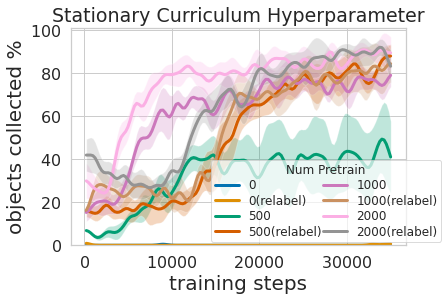

This is shown in the figure above, where we evaluated the amount of prioritized grasping experience needed to result in efficient mobile manipulation training. The second advantage of a multi-level learning approach is that we can inspect the models trained for different tasks and ask them questions, such as "can you grasp anything right now?", which is helpful for the navigation training we describe next.

Training this multi-level policy was not only more efficient than learning both skills at the same time, but it also allowed the grasping controller to inform the navigation policy. Having a model that estimates the uncertainty in its grasp success (Ours above) can be used to improve navigation exploration by skipping areas without graspable objects, in contrast to No Uncertainty Bonus, which does not use this information. The model can also be used to relabel data during training, so that in the unlucky case when the grasping model was unsuccessful trying to grasp an object within its reach, the grasping policy can still provide some signal by indicating that an object was there but the grasping policy has not yet learned how to grasp it. Moreover, learning modular models has engineering benefits. Modular training allows for reusing skills that are easier to learn and can enable building intelligent systems one piece at a time. This is beneficial for many reasons, including safety evaluation and understanding.
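One way to realize the uncertainty bonus and the relabeling described above is sketched below. It assumes the grasp-success model is an ensemble of predictors whose disagreement (population standard deviation) serves as the uncertainty estimate, a weight `beta` for the bonus, and a mean-probability `threshold` for relabeling; the ensemble, these parameters, and the exact rules are illustrative assumptions, not the formulation used in ReLMM.

```python
import statistics
from typing import Callable, List, Sequence

# A grasp-success predictor maps an observation (here a plain feature vector)
# to the probability that a grasp attempted from this spot would succeed.
Predictor = Callable[[Sequence[float]], float]


def navigation_reward(obs: Sequence[float], ensemble: List[Predictor],
                      beta: float = 1.0) -> float:
    """Score a location by predicted grasp success plus an uncertainty bonus.

    The ensemble mean rewards areas that likely contain graspable objects; the
    standard deviation (ensemble disagreement) rewards areas where the grasp
    model is still unsure, encouraging exploration there. beta is an assumed weight.
    """
    preds = [p(obs) for p in ensemble]
    return statistics.fmean(preds) + beta * statistics.pstdev(preds)


def relabel_grasp_failure(obs: Sequence[float], ensemble: List[Predictor],
                          threshold: float = 0.5) -> float:
    """Relabel a failed grasp attempt as a navigation signal.

    If the ensemble believes an object was within reach (mean prediction above
    an assumed threshold), navigation still gets credit for reaching it, even
    though the grasping policy has not yet learned to pick it up.
    """
    mean_pred = statistics.fmean(p(obs) for p in ensemble)
    return 1.0 if mean_pred > threshold else 0.0


# Toy ensemble: three hypothetical predictors that agree about an empty floor
# patch but disagree about a patch that may contain an object.
ensemble: List[Predictor] = [
    lambda o: 0.1 if o[0] == 0 else 0.9,
    lambda o: 0.1 if o[0] == 0 else 0.5,
    lambda o: 0.1 if o[0] == 0 else 0.7,
]
empty, cluttered = [0.0], [1.0]
print(navigation_reward(empty, ensemble))          # low mean, no disagreement
print(navigation_reward(cluttered, ensemble))      # higher mean plus a bonus
print(relabel_grasp_failure(cluttered, ensemble))  # prints 1.0
```

With `beta=0` the reward reduces to predicted success alone, which loosely corresponds to a policy that ignores the uncertainty information.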

Lesson 2: Learning methods beat hand-coded methods, given time

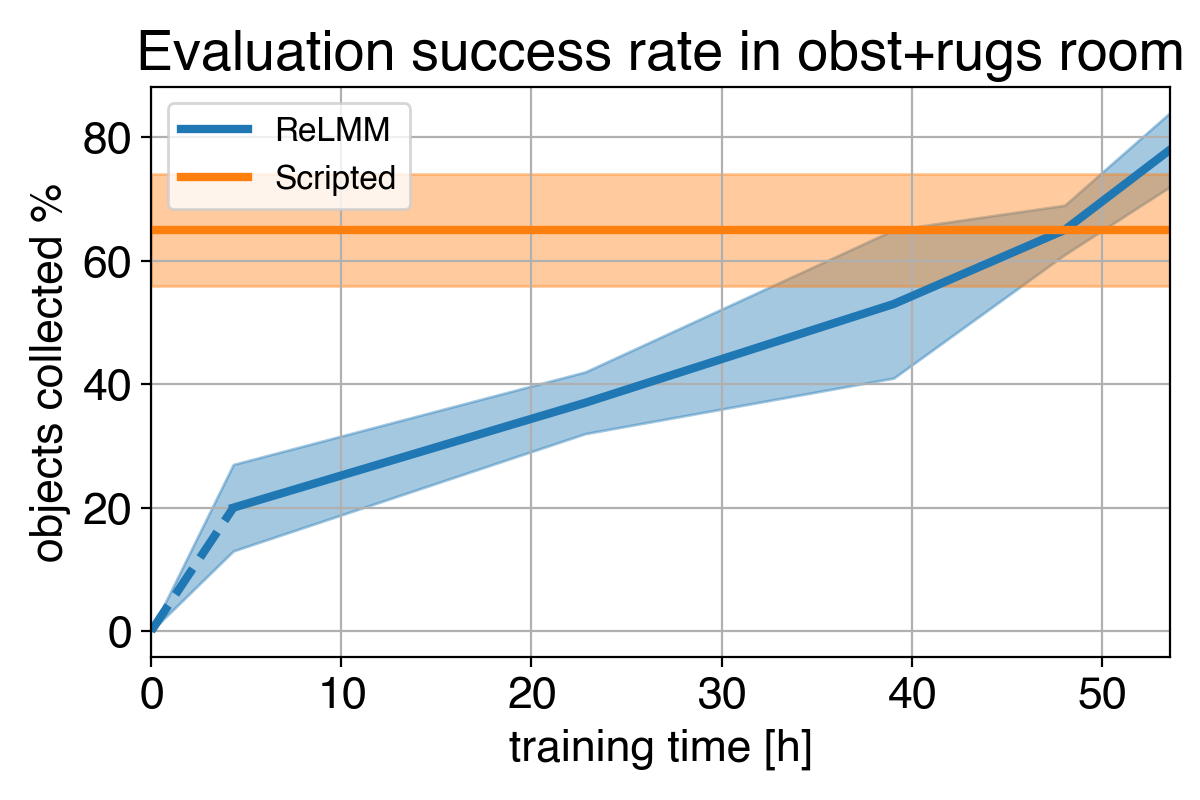

Many robotics tasks that we see today can be solved to varying levels of success using hand-engineered controllers. For our room cleaning task, we designed a hand-engineered controller that locates objects using image clustering and turns towards the nearest detected object at each step. This expertly designed controller performs very well on the visually salient balled socks and takes reasonable paths around the obstacles, but it cannot learn an optimal path to collect the objects quickly, and it struggles with visually diverse rooms. As shown in video 3 below, the scripted policy gets distracted by the white patterned carpet while trying to locate more white objects to grasp.
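The scripted baseline can be sketched roughly as follows. This minimal pure-Python illustration assumes a grayscale camera image, a fixed brightness `threshold` for "white" objects, 4-connected component labeling as the clustering step, and a forward-facing camera so that the lowest blob in the image is the nearest one; none of these details are taken from the actual controller. It also makes the failure mode above easy to see: any bright carpet pattern above the threshold becomes a candidate "object".

```python
from collections import deque
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale pixel intensities in 0..255, row-major


def find_blobs(img: Image, threshold: int = 200) -> List[List[Tuple[int, int]]]:
    """Group bright pixels into 4-connected clusters (a simple stand-in for image clustering)."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for r in range(h):
        for c in range(w):
            if img[r][c] < threshold or seen[r][c]:
                continue
            # Breadth-first flood fill starting from this unvisited bright pixel.
            blob, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and img[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs


def turn_command(img: Image, dead_zone: float = 1.0) -> Optional[str]:
    """Return 'left', 'right', or 'forward' toward the nearest blob, or None if none is seen.

    The blob whose centroid is lowest in the image is treated as the nearest object.
    """
    blobs = find_blobs(img)
    if not blobs:
        return None
    nearest = max(blobs, key=lambda b: sum(y for y, _ in b) / len(b))
    cx = sum(x for _, x in nearest) / len(nearest)
    offset = cx - (len(img[0]) - 1) / 2  # horizontal offset from the image center
    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "forward"


frame = [
    [0, 0, 0, 0, 0, 0, 0],
    [255, 255, 0, 0, 0, 0, 0],      # far object, upper left
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 255, 255],      # near object, lower right
]
print(len(find_blobs(frame)))  # prints 2
print(turn_command(frame))     # prints right
```

Because the rule greedily heads for the nearest blob at every step, it has no notion of an efficient collection order, which is one reason a learned policy can eventually do better.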

[Videos (1) to (4)]

We show a comparison between (1) our policy at the beginning of training, (2) our policy at the end of training, and (3) the scripted policy. In (4), we can see the robot's performance improve over time, eventually exceeding the scripted policy at quickly collecting the objects in the room.

Given that we can use experts to code this hand-engineered controller, what is the purpose of learning? An important limitation of hand-engineered controllers is that they are tuned for a particular task, for example, grasping white objects. When diverse objects that differ in color and shape are introduced, the original tuning may no longer be optimal. Rather than requiring further hand-engineering, our learning-based method is able to adapt itself to various tasks by collecting its own experience.

However, the most important lesson is that even if the hand-engineered controller is capable, the learning agent eventually surpasses it given enough time. This learning process is itself autonomous and takes place while the robot is performing its job, making it comparatively inexpensive. This shows the capability of learning agents, which can also be thought of as working out a general way to perform an "expert manual tuning" process for any kind of task. Learning systems can create the entire control algorithm for the robot, and are not limited to tuning a few parameters in a script. The key step in this work is allowing these real-world learning systems to autonomously collect the data needed for learning methods to succeed.

This post is based on the paper "Fully Autonomous Real-World Reinforcement Learning with Applications to Mobile Manipulation", presented at CoRL 2021. You can find more details in our paper, on our website, and in the video. We provide code to reproduce our experiments. We thank Sergey Levine for his valuable feedback on this blog post.

BAIR Blog is the official blog of the Berkeley Artificial Intelligence Research (BAIR) Lab.