Intelligent assistants on mobile devices have significantly advanced language-based interactions for performing simple daily tasks, such as setting a timer or turning on a flashlight. Despite the progress, these assistants still face limitations in supporting conversational interactions in mobile user interfaces (UIs), where many user tasks are performed. For example, they cannot answer a user's question about specific information displayed on a screen. An agent would need a computational understanding of graphical user interfaces (GUIs) to achieve such capabilities.

Prior research has investigated several important technical building blocks to enable conversational interaction with mobile UIs, including summarizing a mobile screen for users to quickly understand its purpose, mapping language instructions to UI actions, and modeling GUIs so that they are more amenable to language-based interaction. However, each of these only addresses a limited aspect of conversational interaction and requires considerable effort in curating large-scale datasets and training dedicated models. Furthermore, there is a broad spectrum of conversational interactions that can occur on mobile UIs. Therefore, it is imperative to develop a lightweight and generalizable approach to realize conversational interaction.

In “Enabling Conversational Interaction with Mobile UI using Large Language Models”, presented at CHI 2023, we investigate the viability of using large language models (LLMs) to enable diverse language-based interactions with mobile UIs. Recent pre-trained LLMs, such as PaLM, have demonstrated the ability to adapt to various downstream language tasks when prompted with a handful of examples of the target task. We present a set of prompting techniques that enable interaction designers and developers to quickly prototype and test novel language interactions with users, which saves time and resources before investing in dedicated datasets and models. Since LLMs only take text tokens as input, we contribute a novel algorithm that generates the text representation of mobile UIs. Our results show that this approach achieves competitive performance using only two data examples per task. More broadly, we demonstrate LLMs' potential to fundamentally transform the future workflow of conversational interaction design.

Animation showing our work on enabling various conversational interactions with mobile UI using LLMs.

Prompting LLMs with UIs

LLMs support in-context few-shot learning via prompting: instead of fine-tuning or re-training models for each new task, one can prompt an LLM with a few input and output data exemplars from the target task. For many natural language processing tasks, such as question answering or translation, few-shot prompting performs competitively with benchmark approaches that train a model specific to each task. However, language models can only take text input, while mobile UIs are multimodal, containing text, image, and structural information in their view hierarchy data (i.e., the structural data containing detailed properties of UI elements) and screenshots. Moreover, directly inputting the view hierarchy data of a mobile screen into LLMs is not feasible, as it contains excessive information, such as detailed properties of each UI element, which can exceed the input length limits of LLMs.

To address these challenges, we developed a set of techniques to prompt LLMs with mobile UIs. We contribute an algorithm that generates the text representation of mobile UIs, using depth-first search traversal to convert the Android UI's view hierarchy into HTML syntax. We also use chain-of-thought prompting, which involves generating intermediate results and chaining them together to arrive at the final output, to elicit the reasoning ability of the LLM.
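The depth-first conversion described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's algorithm: the view hierarchy is assumed to be nested Python dicts with hypothetical keys (`class`, `text`, `resource_id`, `content_desc`, `visible`, `children`), the class-to-tag table `TAG_MAP` is invented for illustration, and pruning invisible nodes is an assumed way of shortening the text.

```python
from html import escape

# Hypothetical mapping from Android widget class names to HTML tags.
# This table is an illustrative assumption, not the paper's exact mapping.
TAG_MAP = {
    "TextView": "p",
    "Button": "button",
    "ImageButton": "button",
    "ImageView": "img",
    "EditText": "input",
}
VOID_TAGS = {"img", "input"}  # HTML elements that take no closing tag


def view_hierarchy_to_html(root):
    """Convert a view-hierarchy tree (nested dicts) to HTML via depth-first search."""
    lines = []
    next_id = 0

    def visit(node, depth):
        nonlocal next_id
        # Assumed heuristic: prune invisible nodes to keep the prompt short.
        if not node.get("visible", True):
            return
        short_class = node.get("class", "").rsplit(".", 1)[-1]
        tag = TAG_MAP.get(short_class, "div")
        # Number nodes in DFS pre-order so a model can refer to them by id.
        attrs = f' id="{next_id}"'
        next_id += 1
        if node.get("resource_id"):
            attrs += f' class="{escape(node["resource_id"])}"'
        if node.get("content_desc"):
            attrs += f' alt="{escape(node["content_desc"])}"'
        text = escape(node.get("text") or "")
        indent = "  " * depth
        children = node.get("children", [])
        if tag in VOID_TAGS:
            # Void elements hold no content: text goes into an attribute,
            # and any children of such nodes are dropped.
            if text:
                attrs += f' value="{text}"'
            lines.append(f"{indent}<{tag}{attrs}>")
        elif children:
            lines.append(f"{indent}<{tag}{attrs}>{text}")
            for child in children:
                visit(child, depth + 1)  # recurse depth-first
            lines.append(f"{indent}</{tag}>")
        else:
            lines.append(f"{indent}<{tag}{attrs}>{text}</{tag}>")

    visit(root, 0)
    return "\n".join(lines)


# Usage with a made-up sign-in screen.
screen = {
    "class": "android.widget.FrameLayout",
    "children": [
        {"class": "android.widget.TextView", "text": "Sign in", "resource_id": "title"},
        {"class": "android.widget.EditText", "resource_id": "email"},
        {"class": "android.widget.Button", "text": "Next", "resource_id": "next_button"},
        {"class": "android.widget.TextView", "text": "Debug", "visible": False},
    ],
}
html = view_hierarchy_to_html(screen)
print(html)
```

Keeping only a few attributes per node is the design choice that keeps the output compact; a real implementation would need to decide which view-hierarchy properties are worth the extra tokens.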

Animation showing the process of few-shot prompting LLMs with mobile UIs.

Our prompt design starts with a preamble that explains the prompt's purpose. The preamble is followed by multiple exemplars, each consisting of the input, a chain of thought (if applicable), and the output for the task. Each exemplar's input is a mobile screen in HTML syntax. Following the input, chains of thought can be provided to elicit logical reasoning from LLMs. This step is not shown in the animation above, as it is optional. The task output is the desired outcome for the target task, e.g., a screen summary or an answer to a user question. Few-shot prompting is achieved by including more than one exemplar in the prompt. During prediction, we feed the model the prompt with a new input screen appended at the end.
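The prompt layout described above can be sketched as follows, assuming hypothetical field labels (`Screen:`, `Thought:`, `Output:`) and a hypothetical `Exemplar` record; the paper's exact prompt wording may differ.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    screen_html: str                        # input: a screen in HTML syntax
    output: str                             # desired task output, e.g. a summary
    chain_of_thought: Optional[str] = None  # optional intermediate reasoning


def build_prompt(preamble: str, exemplars: List[Exemplar], new_screen_html: str) -> str:
    """Assemble preamble, exemplars, and the new screen into one few-shot prompt."""
    use_cot = any(ex.chain_of_thought for ex in exemplars)
    parts = [preamble.strip(), ""]
    for ex in exemplars:
        parts.append(f"Screen: {ex.screen_html}")
        if ex.chain_of_thought:
            parts.append(f"Thought: {ex.chain_of_thought}")
        parts.append(f"Output: {ex.output}")
        parts.append("")  # blank line between exemplars
    # The new screen goes last; the model continues from the final label.
    parts.append(f"Screen: {new_screen_html}")
    parts.append("Thought:" if use_cot else "Output:")
    return "\n".join(parts)


# Usage: a 2-shot summarization prompt with made-up screens.
exemplars = [
    Exemplar('<p id="0">Sign in</p><input id="1" class="email">',
             "A sign-in screen asking for an email address."),
    Exemplar('<p id="0">Settings</p><button id="1">Wi-Fi</button>',
             "A settings page with a Wi-Fi option."),
]
prompt = build_prompt(
    "Summarize the purpose of each mobile screen in one sentence.",
    exemplars,
    '<p id="0">Alarm</p><button id="1">Add alarm</button>',
)
print(prompt)
```

Ending the prompt on a label lets the model continue with the next field; when the exemplars include chains of thought, this sketch ends on `Thought:` so the model reasons before producing its output.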

Experiments

We conducted comprehensive experiments with four pivotal modeling tasks: (1) screen question generation, (2) screen summarization, (3) screen question answering, and (4) mapping instructions to UI actions. Experimental results show that our approach achieves competitive performance using only two data examples per task.


Task 1: Screen question generation

Given a mobile UI screen, the goal of screen question generation is to synthesize coherent, grammatically correct natural language questions relevant to the UI elements requiring user input.

We found that LLMs can leverage the UI context to generate questions for relevant information. LLMs significantly outperformed the heuristic approach (template-based generation) in question quality.

We also revealed LLMs' ability to combine related input fields into a single question for efficient communication. For example, the filters asking for the minimum and maximum price were combined into a single question: “What's the price range?”

We observed that the LLM could use its prior knowledge to combine multiple related input fields into a single question.

In an evaluation, we solicited human ratings on whether the questions were grammatically correct (Grammar) and relevant to the input fields for which they were generated (Relevance). In addition to the human-labeled language quality, we automatically examined how well LLMs can cover all the elements that require questions (Coverage F1). We found that the questions generated by the LLM had almost perfect grammar (4.98/5) and were highly relevant to the input fields displayed on the screen (92.8%). Additionally, the LLM performed well in covering the input fields comprehensively (95.8%).

| Metric | Template | 2-shot LLM |
|---|---|---|
| Grammar | 3.6 (out of 5) | 4.98 (out of 5) |
| Relevance | 84.1% | 92.8% |
| Coverage F1 | 100% | 95.8% |
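Coverage F1 can be read as a set-overlap F1. The sketch below assumes, without confirmation from the source, that it compares the IDs of elements covered by generated questions with the IDs of elements that require a question; the element names are hypothetical.

```python
def coverage_f1(question_ids, required_ids):
    """F1 between UI elements covered by generated questions and elements that need one."""
    covered, required = set(question_ids), set(required_ids)
    if not covered and not required:
        return 1.0  # nothing to ask and nothing asked
    hits = len(covered & required)
    if hits == 0:
        return 0.0
    precision = hits / len(covered)  # share of covered elements that needed a question
    recall = hits / len(required)    # share of required elements that were covered
    return 2 * precision * recall / (precision + recall)


# A combined question such as "What's the price range?" covers two fields at once.
required = ["min_price", "max_price", "city"]
covered = ["min_price", "max_price", "search_button"]
print(coverage_f1(covered, required))
```

Here two of three required fields are covered and one covered element did not need a question, so precision and recall are both 2/3.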

Task 2: Screen summarization

Screen summarization is the automatic generation of descriptive language overviews that cover the essential functionalities of mobile screens. The task helps users quickly understand the purpose of a mobile UI, which is particularly useful when the UI is not visually accessible.

Our results showed that LLMs can effectively summarize the essential functionalities of a mobile UI. By using UI-specific text, they can generate more accurate summaries than the Screen2Words benchmark model that we previously introduced, as highlighted in the colored text and boxes below.

Example summary generated by a 2-shot LLM. We found that the LLM is able to use specific text on the screen to compose more accurate summaries.

Interestingly, we observed LLMs using their prior knowledge to infer information not presented in the UI when creating summaries. In the example below, the LLM inferred that the subway stations belong to the London Tube system, even though the input UI does not contain this information.

The LLM uses its prior knowledge to help summarize the screens.

Human evaluators rated LLM summaries as more accurate than the benchmark's, yet the LLM summaries scored lower on automatic metrics like BLEU. The mismatch between perceived quality and metric scores echoes recent work showing that LLMs write better summaries even when automatic metrics do not reflect it.

Left: Screen summarization performance on automatic metrics. Right: Screen summarization accuracy voted by human evaluators.
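To see why BLEU can disagree with human judgments, note that it rewards n-gram overlap with a reference text, so an accurate summary worded differently from the reference can score poorly. The sketch below is a simplified sentence-level BLEU (single reference, clipped n-gram precision up to bigrams, brevity penalty, no smoothing), not the evaluation setup used in the experiments; the example strings are invented.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=2):
    """Simplified sentence-level BLEU against a single reference (no smoothing)."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = max(sum(cand_counts.values()), 1)
        if overlap == 0:
            return 0.0  # any zero precision makes the geometric mean zero
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    if len(cand) > len(ref):
        bp = 1.0
    else:
        bp = math.exp(1 - len(ref) / max(len(cand), 1))
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "page displaying the stations on a subway line"
close = "page displaying stations on a subway line"
paraphrase = "list of London Tube stops along one line"
print(simple_bleu(close, reference), simple_bleu(paraphrase, reference))
```

The reworded candidate shares no bigram with the reference and scores zero, even though a reader might judge it just as informative.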

Task 3: Screen question-answering

Given a mobile UI and an open-ended question asking for information about the UI, the model should provide the correct answer. We focus on factual questions, which require answers based on information presented on the screen.

Example results from the screen QA experiment. The LLM significantly outperforms the off-the-shelf QA baseline model.

We report performance using four metrics: Exact Matches (predicted answer identical to the ground truth), Contains GT (answer fully contains the ground truth), Sub-String of GT (answer is a sub-string of the ground truth), and the Micro-F1 score based on shared words between the predicted answer and the ground truth across the entire dataset.
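The four metrics can be sketched as below. Assumptions not stated in the source: answers are normalized by lowercasing and collapsing whitespace, and the three match categories are treated as mutually exclusive, checked in the order listed. Micro-F1 pools shared-token counts over the whole dataset before computing precision and recall.

```python
from collections import Counter


def normalize(text):
    # Assumed normalization: lowercase and collapse runs of whitespace.
    return " ".join(text.lower().split())


def qa_metrics(predictions, ground_truths):
    exact = contains = substring = 0
    shared = pred_total = gt_total = 0
    for pred, gt in zip(predictions, ground_truths):
        p, g = normalize(pred), normalize(gt)
        if p == g:
            exact += 1
        elif g and g in p:       # answer fully contains the ground truth
            contains += 1
        elif p and p in g:       # answer is a sub-string of the ground truth
            substring += 1
        # Micro-F1: accumulate token overlap across the entire dataset.
        p_tokens, g_tokens = Counter(p.split()), Counter(g.split())
        shared += sum((p_tokens & g_tokens).values())
        pred_total += sum(p_tokens.values())
        gt_total += sum(g_tokens.values())
    n = max(len(predictions), 1)
    precision = shared / pred_total if pred_total else 0.0
    recall = shared / gt_total if gt_total else 0.0
    micro_f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "exact": exact / n,
        "contains_gt": contains / n,
        "substring_of_gt": substring / n,
        "micro_f1": micro_f1,
    }


preds = ["Breaking News", "the headline is Breaking News", "Breaking"]
gts = ["breaking news"] * 3
scores = qa_metrics(preds, gts)
print(scores)
```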

Our results showed that LLMs can correctly answer UI-related questions, such as “what is the headline?”. The LLM performed significantly better than the baseline QA model DistillBERT, achieving a 66.7% fully correct answer rate. Notably, the 0-shot LLM achieved an exact match score of 30.7%, indicating the model's intrinsic question-answering capability.

| Models | Exact Matches | Contains GT | Sub-String of GT | Micro-F1 |
|---|---|---|---|---|
| 0-shot LLM | 30.7% | 6.5% | 5.6% | 31.2% |
| 1-shot LLM | 65.8% | 10.0% | 7.8% | 62.9% |
| 2-shot LLM | 66.7% | 12.6% | 5.2% | 64.8% |
| DistillBERT | 36.0% | 8.5% | 9.9% | 37.2% |

Task 4: Mapping instructions to UI actions

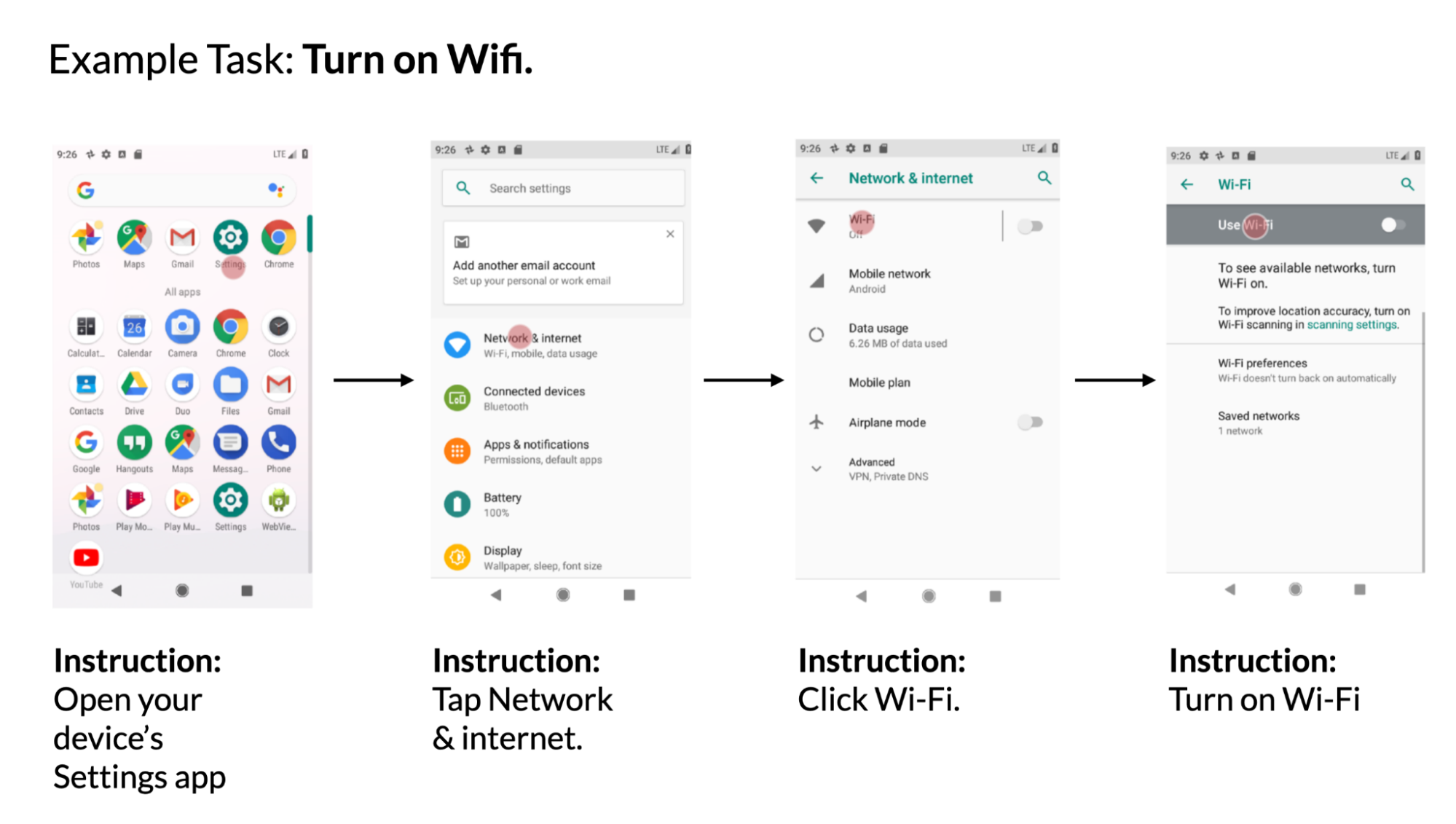

Given a mobile UI screen and a natural language instruction to control the UI, the model needs to predict the ID of the object on which to perform the instructed action. For example, when instructed with “Open Gmail,” the model should correctly identify the Gmail icon on the home screen. This task is useful for controlling mobile apps using language input such as voice access. We introduced this benchmark task previously.

Example using data from the PixelHelp dataset. The dataset contains interaction traces for common UI tasks such as turning on Wi-Fi. Each trace contains multiple steps and corresponding instructions.

We assessed the performance of our approach using the Partial and Complete metrics from the Seq2Act paper. Partial refers to the percentage of correctly predicted individual steps, while Complete measures the percentage of accurately predicted entire interaction traces. Although our LLM-based method did not surpass the benchmark trained on massive datasets, it still achieved strong performance with just two prompted data examples.

| Models | Partial (%) | Complete (%) |
|---|---|---|
| 0-shot LLM | 1.29 | 0.00 |
| 1-shot LLM (cross-app) | 74.69 | 31.67 |
| 2-shot LLM (cross-app) | 75.28 | 34.44 |
| 1-shot LLM (in-app) | 78.35 | 40.00 |
| 2-shot LLM (in-app) | 80.36 | 45.00 |
| Seq2Act | 89.21 | 70.59 |
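The Partial and Complete metrics described above can be sketched as follows, assuming each trace is a list of (predicted ID, gold ID) pairs and that Partial pools steps across all traces; the Seq2Act paper remains the authoritative definition, and the IDs below are made up.

```python
def partial_and_complete(traces):
    """traces: list of traces; each trace is a list of (predicted_id, gold_id) pairs."""
    total_steps = correct_steps = complete_traces = 0
    for trace in traces:
        step_hits = [pred == gold for pred, gold in trace]
        total_steps += len(step_hits)
        correct_steps += sum(step_hits)
        # A trace counts as complete only if every one of its steps is correct.
        if step_hits and all(step_hits):
            complete_traces += 1
    partial = 100.0 * correct_steps / total_steps if total_steps else 0.0
    complete = 100.0 * complete_traces / len(traces) if traces else 0.0
    return partial, complete


traces = [
    [(3, 3), (7, 7)],          # both steps correct
    [(1, 1), (4, 5), (2, 2)],  # second step wrong
    [(6, 6)],                  # single correct step
]
print(partial_and_complete(traces))
```

Because one wrong step invalidates a whole trace, Complete is always at most Partial, which matches the gap between the two columns in the table.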

Takeaways and conclusion

Our study shows that prototyping novel language interactions on mobile UIs can be as easy as designing a data exemplar. As a result, an interaction designer can rapidly create functioning mock-ups to test new ideas with end users. Moreover, developers and researchers can explore different possibilities of a target task before investing significant effort into developing new datasets and models.

We investigated the feasibility of prompting LLMs to enable various conversational interactions on mobile UIs. We proposed a suite of prompting techniques for adapting LLMs to mobile UIs. We conducted extensive experiments with the four important modeling tasks to evaluate the effectiveness of our approach. The results showed that, compared to traditional machine learning pipelines that consist of expensive data collection and model training, one could rapidly realize novel language-based interactions using LLMs while achieving competitive performance.

Acknowledgements

We thank our paper co-author Gang Li, and appreciate the discussions and feedback from our colleagues Chin-Yi Cheng, Tao Li, Yu Hsiao, Michael Terry and Minsuk Chang. Special thanks to Muqthar Mohammad and Ashwin Kakarla for their invaluable assistance in coordinating data collection. We thank John Guilyard for helping create animations and graphics in the blog.