Freed from the constraints of compatibility with the 80X86 processor family, the secret N10 team started with nothing more than a virtually blank sheet of paper.

One man’s campaign

The paper was not to stay blank for long. Leslie Kohn, the project’s chief architect, had already earned the nickname of Mr. RISC. He had been hoping to get started on a RISC microprocessor design ever since joining Intel in 1982. One attempt went almost 18 months into development, but existing silicon technology did not allow enough transistors on one chip to achieve the desired performance. A later attempt was dropped when Intel decided not to invest in that particular process technology.

Jean-Claude Cornet, vice president and general manager of Intel’s Santa Clara Microcomputer Division, saw N10 as an opportunity to serve the high-performance microprocessor market. The chip, he predicted, would reach beyond the utilitarian line of microprocessors into equipment for the high-level engineering and scientific research communities.

“We are all engineers,” Cornet told IEEE Spectrum, “so this is the type of need we are most familiar with: a computation-intensive, simulation-intensive system for computer-aided design.”

Discussions with potential customers in the supercomputer, graphics workstation, and minicomputer industries contributed new requirements for the chip. Supercomputer makers wanted a floating-point unit able to process vectors and stressed avoiding a performance bottleneck, a need that led to the entire chip being designed in a 64-bit architecture made possible by the 1 million transistors. Graphics workstation vendors, for their part, urged the Intel designers to balance integer performance with floating-point performance, and to make the chip able to produce three-dimensional graphics. Minicomputer makers wanted speed, and confirmed the decision that RISC was the only way to go for high performance; they also stressed the high throughput needed for database applications.

The Intel team also speculated over what its competitors (such as MIPS Computer Systems Inc., Sun Microsystems Inc., and Motorola Inc.) were up to. The engineers knew their chip would not be the first RISC architecture on the market, but the 64-bit technology meant that they would leapfrog their competitors’ 32-bit designs. They were also already planning the more fully defined architecture, with memory management, cache, floating-point, and other features on the one chip, a versatility impossible with what they correctly assumed were the smaller transistor budgets of their competitors.

The final decision rested with Albert Y.C. Yu, vice president and general manager of the company’s Component Technology and Development Group. For several years, Yu had been intrigued by Kohn’s zeal for building a superfast RISC microprocessor, but he felt Intel lacked the resources to invest in such a project. And because this very novel idea came out of the engineering group, Yu told Spectrum, he found some Intel executives hesitant. However, toward the end of 1985 he decided that, despite his uncertainty, the RISC chip’s time had come. “A lot depends on gut feel,” he said. “You take chances at these things.”

The moment the decision was made, in January 1986, the heat was on. Intel’s RISC chip would have to reach its market before the competition was firmly entrenched, and with the project starting up alongside the 486 design, the two groups might have to compete both for computer time and for support staff. Kohn resolved that conflict by making sure that the N10 effort was continually well out in front of the 486. To cut down on paperwork and communications overhead, he determined that the N10 team would have as few engineers as possible.

Staffing up

As soon as Yu approved the project, Sai Wai Fu, an engineer at the Hillsboro operation, moved to Santa Clara and joined Kohn as the team’s comanager. Fu and Kohn had known each other as students at the California Institute of Technology in Pasadena, had been reunited at Intel, and had worked together on one of Kohn’s earlier RISC attempts. Fu was eager for another chance and took over the recruiting, scrambling to assemble a compatible team of talented engineers. He plugged not only the excitement of breaking the million-transistor barrier, but also his own philosophy of management: broadening the engineers’ outlook by challenging them outside their areas of expertise.

The project attracted a number of experienced engineers within the company. Piyush Patel, who had been head logic designer for the 80386, joined the N10 team rather than the 486 project.

“It was risky,” he said, “but it was more challenging.”

Hon P. Sit, a design engineer, also chose N10 over the 486 because, he said: “With the 486, I would be working on control logic, and I knew how to do that. I had done that before. N10 needed people to work on the floating-point unit, and I knew very little about floating-point, so I was interested to learn.”

In addition to luring “escapees,” as 486 team manager John Crawford called them, the N10 team pulled in three memory design specialists from Intel’s technology development groups, important because there was to be a great deal of on-chip memory. Finally, Kohn and Fu took on a number of engineers fresh out of college. The number of engineers grew to 20, eight more than they had at first thought would be needed, but less than two thirds the number on the 486 team.

Getting it down on paper

During the early months of 1986, when he was not tied up with Intel’s lawyers over the NEC copyright suit (Intel had sued NEC alleging copyright infringement of its microcode for the 8086), Kohn refined his ideas about what N10 would contain and how it would all fit together. Among those he consulted informally was Crawford.

“Both the N10 and the 486 were projected to be something above 400 mils, and I was a little nervous about the size,” Crawford said. “But [Kohn] said ‘Hey, if it’s not 450, we can forget it, because we won’t have enough functions on the die. So we should shoot for 450, and recognize that these things hardly ever shrink.’”

The chip, they realized, would probably turn out to be greater than 450 mils on a side. The actual i860 measures 396 by 602 mils.

Kohn started by calling for a RISC core with fast integer performance, large caches for instructions and data, and specialized circuitry for fast floating-point calculations. Where most microprocessors take from five to 10 clock cycles to perform a floating-point operation, Kohn’s goal was to cut that to one cycle by pipelining. He also wanted a 64-bit data bus overall, but with a 128-bit bus between data cache and floating-point section, so that the floating-point section would not encounter bottlenecks when accessing data. Like a supercomputer, the chip would have to perform vector operations, as well as execute different instructions in parallel.
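
The effect of pipelining on a long run of floating-point operations can be sketched with a little arithmetic. The Python below is an illustrative model only, not a description of the i860's actual pipeline: the function names and the five-stage latency are assumptions chosen to match the low end of the "five to 10 cycles" range quoted above.

```python
def unpipelined_cycles(n_ops: int, latency: int) -> int:
    """Each operation must finish before the next one may start."""
    return n_ops * latency


def pipelined_cycles(n_ops: int, latency: int) -> int:
    """Once the pipeline is full, one result emerges every cycle."""
    if n_ops == 0:
        return 0
    # The first result takes `latency` cycles; each later one adds a cycle.
    return latency + (n_ops - 1)


if __name__ == "__main__":
    n, latency = 1000, 5  # hypothetical vector length and stage count
    print(unpipelined_cycles(n, latency))  # 5000
    print(pipelined_cycles(n, latency))    # 1004
```

Each individual operation still takes several cycles to travel through the unit; what approaches one cycle is the rate at which results appear, which is why the gain shows up mainly on vector-style workloads.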

Early that April, Fu took a pencil and an 8 1/2-by-11-inch piece of paper and sketched out a plan for the chip, divided into eight sections: RISC integer core, paging unit, instruction cache, data cache, floating-point adder, floating-point multiplier, floating-point registers, and bus controller. As he drew, he made some decisions: for example, a line size of 32 bytes for the cache area. (A line, of whatever length, is a set of memory cells, the smallest unit of memory that can be moved back and forth between cache and main memory.) Though a smaller line size would have improved performance slightly, it would have forced the cache into a different shape and made it more awkward to place on the chip. “So I chose the smallest line size we could have and still have a uniform shape,” Fu said.

His sketch also effectively did away with one of Kohn’s ideas: a data cache divided into four 128-bit compartments to create four-way parallelism, called four-way set associative. But as he drew his plan, Fu realized that the four-way split would not work. With two compartments, the data could flow from the cache in a straight line to the floating-point unit. With four-way parallelism, hundreds of wires would have to bend. “The whole thing would just fall apart for physical layout reasons,” Fu said. Abandoning the four-way split, he saw, would cost only 5 percent in performance, so the two-way cache won the day.
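
To make the terms concrete, here is a minimal sketch of how an address is split up for a two-way set-associative cache with 32-byte lines. The line size and the two-way organization come from the text; the 8-KB total capacity, the constant names, and the `split_address` helper are assumptions made purely for illustration.

```python
LINE_BYTES = 32          # line size chosen by Fu, per the article
WAYS = 2                 # two-way set associative, per the article
CACHE_BYTES = 8 * 1024   # ASSUMPTION: total capacity, for illustration only

NUM_SETS = CACHE_BYTES // (LINE_BYTES * WAYS)   # 128 sets under the assumption
OFFSET_BITS = LINE_BYTES.bit_length() - 1       # 5 bits select a byte in a line
INDEX_BITS = NUM_SETS.bit_length() - 1          # 7 bits select a set


def split_address(addr: int) -> tuple[int, int, int]:
    """Return (tag, set_index, byte_offset) for an address."""
    offset = addr & (LINE_BYTES - 1)
    index = (addr >> OFFSET_BITS) & (NUM_SETS - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


if __name__ == "__main__":
    print(split_address(0x12345678))  # (74565, 51, 24), i.e. tag 0x12345
```

On a lookup, the set index picks one set and the tag is compared against every way in that set at once. Going from two ways to four doubles the number of lines that must be read out and compared in parallel, which is the wiring cost Fu ran into on paper.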

When he finished his sketch, he had a block of empty space. “I’d learned you shouldn’t pack so tight up front when you don’t know the details, because things grow,” Fu said. That space was filled up, and more. Several sections of the design grew slightly as they were implemented. Then one day toward the end of the design process, Fu recalls, an engineer apologetically said: “When I was adding these blocks together, I didn’t add them properly. I missed 250 microns.”

It was a simple mistake in adding. “But it is not something that you can fix easily,” Fu said. “You have to find room for the 250 microns, even though we know that because we’re pushing the limits of the process technology, adding 100 microns here or there risks sending the yield way down.

“We tried every trick we could think of to compensate, but in the end,” he said, “we had to grow the chip.”

Since Fu’s sketch partitioned the chip into eight blocks, he and Kohn divided their team into eight groups of either two or three engineers, depending upon the block’s complexity. The groups began work on logic simulation and circuit design, while Kohn continued to flesh out the architectural specifications.

“You can’t work in a top-down fashion on a project like this,” Kohn said. “You start at several different levels and work in parallel.”

Said Fu: “If you want to push the limits of a technology, you have to do top-down, bottom-up, and inside-out iterations of everything.”

The power budget at first caused serious concern. Kohn and Fu had estimated that the chip should dissipate 4 watts at 33 megahertz.

Fu divided the power budget among the groups, allocating half a watt here, a watt there. “I told them go away, do your designs, then if you exceed your budget, come back and tell me.”

The wide buses were a particular worry. The designers found that one memory cell on the chip drove a long transmission line with 1 to 2 picofarads of capacitance; by the time it reached its destination, the signal was very weak and needed amplification. The cache memory needed about 500 amplifiers, about 10 times as many as a memory chip. Designed like most static RAMs, these amplifiers would burn 2.5 watts, more than half the chip’s power budget. Building the SRAMs using circuit-design techniques borrowed from dynamic RAM technology cut that to about 0.5 watt.
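
The amplifier figures above work out as follows. This is just the article's round numbers run through a few lines of Python; the per-amplifier values are derived here for illustration and are not figures quoted by Intel.

```python
POWER_BUDGET_W = 4.0   # estimated chip dissipation at 33 MHz
AMPLIFIERS = 500       # approximate amplifier count for the cache
SRAM_STYLE_W = 2.5     # total if built like conventional static RAM
DRAM_STYLE_W = 0.5     # total after borrowing dynamic RAM techniques


def per_amplifier_mw(total_w: float, count: int = AMPLIFIERS) -> float:
    """Average power per amplifier, in milliwatts."""
    return total_w * 1000.0 / count


def share_of_budget(total_w: float, budget_w: float = POWER_BUDGET_W) -> float:
    """Fraction of the whole-chip power budget consumed."""
    return total_w / budget_w


if __name__ == "__main__":
    for label, watts in (("SRAM-style", SRAM_STYLE_W), ("DRAM-style", DRAM_STYLE_W)):
        print(label, per_amplifier_mw(watts), "mW each,",
              f"{share_of_budget(watts):.1%} of budget")
```

On these numbers the conventional design averages about 5 mW per amplifier and would take 62.5 percent of the 4-watt budget, while the DRAM-style design averages about 1 mW and takes 12.5 percent.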

“It turned out that while some groups exceeded their budget, some didn’t need as much, even though I purposely underestimated to scare them a little so they wouldn’t go out and burn a lot of power,” Fu said. The actual chip’s data sheet claims 3 watts of dissipation.

One instruction, one clock

In meeting their performance goal, the designers made executing each instruction in one clock cycle something of a religion, one that required quite a number of innovative twists. Using slightly less than two cycles per instruction is common for RISC processors, so the N10 team’s goal of one instruction per cycle seemed achievable, but such rates are uncommon for many of the chip’s other functions. New algorithms had to be developed to handle floating-point additions and multiplications in one cycle in pipeline mode. The floating-point algorithms are among the some 20 innovations on the chip for which Intel is seeking patents.

Floating-point divisions, however, take anything from 20 to 40 cycles, and the designers saw early on that they would not have enough space on the chip for the special circuitry needed for such an infrequent operation.

The designers of the floating-point adder and multiplier units made the logic for rounding off numbers conform to IEEE standards, which slowed performance. (Cray Research Inc.’s computers, for example, reject these standards to boost performance.) While some N10 engineers wanted the higher performance, they found customers preferred conformity.

However, they did discover a way to do the fast three-dimensional graphics demanded by engineers and scientists, without any painful tradeoffs. The designers were able to add this function by piggybacking a small amount of extra circuitry onto the floating-point hardware, adding only 3 percent to the chip’s size but boosting the speed of handling graphics calculations by a factor of 10, to 16 million 16-bit picture elements per second.

With a RISC processor, performing loads from cache memory in one clock cycle typically requires an extra register write port, to prevent interference between the load information and the result returning from the arithmetic logic unit. The N10 team found a way to use the same port for both pieces of information in one cycle, and so saved circuitry without losing speed. Fast access to instructions and data is key for a RISC processor: because the instructions are simple, more of them may be needed. The designers developed new circuit design techniques (for which they have filed patent applications) to allow one-cycle access to the large cache memory through very large buses drawing only 2.5 watts.

“Existing SRAM parts can access data in a comparable amount of time, but they burn up a lot of power,” Kohn said.

No creeping elegance

The million transistors meant that much of the 2 1/2 years of development was spent in designing circuitry. The eight groups working on different parts of the chip called for careful management to ensure that each part would work seamlessly with all the others after their assembly.

First of all, there was the N10 design philosophy: no creeping elegance. “Creeping elegance has killed many a chip,” said Roland Albers, the team’s circuit design manager. Circuit designers, he said, should avoid reinventing the wheel. If a typical cycle is 20 nanoseconds, and an established technique leads to a path that takes 15 ns, the engineer should accept this and move on to the next circuit.

Path timings were documented in initial project specifications and updated at the weekly meetings Albers called once the actual designing of circuits was under way.

“If you let people just dive in and try anything they want, any trick they’ve read about in some magazine, you end up with a lot of circuits that are marginal and flaky,” said Albers. “Instead, we only pushed it where it had to be pushed. And that resulted in a manufacturable and reliable part instead of a test chip for a whole bunch of new circuitry.”

In addition to improving reliability, the ban on creeping elegance sped up the entire process.

To make sure that the circuitry of different blocks of the chip would mesh cleanly, Albers and his circuit designers wrote a handbook covering their work. With engineers from Intel’s CAD department, he developed a graphics-based circuit-simulation environment with which engineers entered simulation schematics, including parasitic capacitance of devices and interconnections, graphically rather than alphanumerically. The output was then examined on a workstation as graphic waveforms.

At the weekly meetings, each engineer who had completed a piece of the design would present his results. The others would make sure that it took no unnecessary risks, that it adhered to the established methodology, and that its signals would integrate with the other parts of the chip.

Intel had tools for generating the layout design directly from the high-level language that simulated the chip’s logic. Should the team use them or not? Such tools save time and eliminate the bugs introduced by human designers, but tend not to generate very compact circuitry. Intel’s own autoplacement tools for layout design cut density about in half, and slowed things down by one-third, compared with handcrafted circuit design. Commercially available tools, Intel’s engineers say, do even worse.

Deciding when and where to use these tools was simple enough: those parts of the floating-point logic and RISC core that manipulate data had to be designed manually, as did the caches, because they involved a lot of repetition. Some cells are repeated hundreds, even thousands, of times (the SRAM cell is repeated 100,000 times), so the space gained by hand-packing the circuits involved far more than a factor of two. With the control logic, however, where there are few or no repetitions, the saving in time was considered worth the extra silicon, particularly because automatic generation of the circuitry allowed last-minute changes to correct the chip’s operation.

About 40,000 transistors out of the chip’s more than 1,000,000 were laid out automatically, while about 10,000 were generated manually and replicated to produce the remaining 980,000. “If we’d had to do those 40,000 manually, it would have added several months to the schedule and introduced more errors, so we might not have been able to sample first silicon,” said Robert G. Willoner, one of the engineers on the team.

These layout-generation tools had been used at Intel before, and the team was confident that they would work, but they were less sure how much space the automatically designed circuits would take up.

Said Albers: “It took a little more than we had thought, which caused some problems toward the end, so we had to grow the die size a little.”

Unauthorized tool use

Even with automated layout, one section of the control logic, the bus controller, started to fall behind schedule. Fearing the controller would become a bottleneck for the entire design, the team tried several new techniques. RISC processors are usually designed to interface to a fast SRAM system that acts as an external cache and interfaces in turn with the DRAM main memory. Here, however, the plan was to make it possible for users to bypass the SRAM and attach the processor directly to a DRAM, which would allow the chip to be designed into low-cost systems as well as to handle very large data structures.

For this reason, the bus can pipeline as many as three cycles before it gets the first data back from the DRAM, and the data has the time to travel through a slow DRAM memory without holding up the processor. The bus also had to use the static column mode, a feature of the newest DRAMs that allows sequential addresses accessing the same page in memory to tell the system, through a separate pin, that the bit is located on the same page as the previous bit.
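
A rough feel for why allowing several outstanding bus cycles matters can be had from a toy model. The sketch below is an assumption-laden illustration, not the i860's bus protocol: it supposes that one read can be issued per clock, that every read takes the same fixed number of clocks to return data, and that at most `max_outstanding` reads may be in flight. The limit of three comes from the text; the latency values are hypothetical.

```python
def total_bus_cycles(n_reads: int, latency: int, max_outstanding: int) -> int:
    """Clock count at which the last of n_reads has returned its data."""
    issue: list[int] = []   # clock at which each read was issued
    done: list[int] = []    # clock at which each read returned data
    for i in range(n_reads):
        # At most one new read per clock.
        earliest = issue[-1] + 1 if issue else 0
        if i >= max_outstanding:
            # Wait for the read issued max_outstanding slots ago to return.
            earliest = max(earliest, done[i - max_outstanding])
        issue.append(earliest)
        done.append(earliest + latency)
    return done[-1] if done else 0


if __name__ == "__main__":
    print(total_bus_cycles(6, latency=3, max_outstanding=1))  # 18
    print(total_bus_cycles(6, latency=3, max_outstanding=3))  # 8
```

With only one read in flight, six reads at a three-clock latency take 18 clocks; with three in flight, the same reads finish in eight, because the memory latency is overlapped with the issue of later requests. The cost, as the next paragraph of the article notes, is control logic that must track every combination of outstanding cycles.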

Both these features presented unexpected design problems, the first because the control logic had to keep track of various combinations of outstanding bus cycles. While the rest of the chip was already being laid out, the bus designers were still struggling with the logic simulation. There was no time even for manual circuit design, followed by automated layout, followed by a check of design against layout.

One of the designers heard from a friend in Intel’s CAD department about a tool that would take a design from the logic simulation level, optimize the circuit design, and generate an optimized layout. The tool eliminated the time taken up by circuit schematics, as well as the checking for schematics errors. It was still under development, however, and while it was even then being tested and debugged by the 486 team (who had several more months before deadline than did the N10 team), it was not considered ready for use.

The N10 designer accessed the CAD department’s mainframe through the in-house computer network and copied the program. It worked, and the bus-control bottleneck was solved.

Said CAD manager Nave guardedly: “A tool at that stage definitely has problems. The specific engineer who took it was competent to overcome most of the problems himself, so it didn’t have any negative impact, which it could have. It may have worked well in the case of the N10, but we don’t condone that as a general practice.”

Designing for testability

The N10 designers were concerned from the start about how to test a chip with 1,000,000 transistors. To make sure that the chip could be tested adequately, early in 1987 and about halfway into the project a product engineer was moved in with the N10 team. At first, Beth Schultz just worked on circuit designs alongside the others, familiarizing herself with the chip’s functions. Later, she wrote diagnostic programs, and now, back in the product engineering department, she is supervising the i860’s transfer to Intel’s manufacturing operations.

The first attempt to test the chip demonstrated the importance of that early involvement by product engineering. In the normal course of events, a small tester (a logic analyzer with a personal computer interface) in the design department is working on a new chip’s circuits long before the larger testers in product engineering get in on the act. The design department’s tester debugs in turn the test programs run by product engineering. This time, because a product engineer was already so familiar with the chip, her department’s testers were working before the one in the design department.

The product engineer’s presence on the team also made the other designers more conscious of the testability question, and the i860 reflects this in several ways. The product engineer was consulted when logic designers set the bus’s pin timing, to make sure it would not overreach the tester’s capabilities. Production engineering constantly reminded the N10 team of the need to limit the number of signal pins to 128: even one over would require spending millions of dollars on new testers. (The i860 has 120 signal pins, along with 48 pins for power and grounding.)

The chip’s control logic was formed with level-sensitive scan design (LSSD). Pioneered by IBM Corp., this design-for-testability technique sends signals through dedicated pins to test individual circuits, rather than relying on instruction sequences. LSSD was not employed for the data-path circuitry, however, because the designers determined that it would take up too much space, as well as slow down the chip. Instead, a small amount of additional logic lets the instruction cache’s two 32-bit segments test each other. A boundary scan feature lets system designers check the chip’s input and output connections without having to run instructions.
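
The general idea behind scan-based testing can be shown with a toy model: the chip's storage elements are linked into one long shift register, so a tester can shift a pattern in through a pin, clock the logic once, and shift the response back out. The `ScanChain` class below is a generic, simplified sketch written for this article's readers; it ignores LSSD's two-phase latch clocking and makes no claim about how the i860's scan paths are actually organized.

```python
class ScanChain:
    """Toy model of latches linked into one serial shift register."""

    def __init__(self, length: int) -> None:
        self.latches = [0] * length

    def shift(self, bit_in: int) -> int:
        """Shift by one position; return the bit that falls off the end."""
        bit_out = self.latches[-1]
        self.latches = [bit_in] + self.latches[:-1]
        return bit_out

    def scan_in(self, pattern: list[int]) -> None:
        # Feed the last element first so pattern[i] lands in latches[i].
        for bit in reversed(pattern):
            self.shift(bit)

    def capture(self, logic) -> None:
        """One functional clock: the latches take the logic's response."""
        self.latches = logic(self.latches)

    def scan_out(self) -> list[int]:
        # latches[-1] emerges first, so reverse to restore latch order.
        out = [self.shift(0) for _ in range(len(self.latches))]
        return out[::-1]


if __name__ == "__main__":
    chain = ScanChain(4)
    chain.scan_in([1, 0, 1, 1])
    chain.capture(lambda bits: [b ^ 1 for b in bits])  # stand-in logic: invert
    print(chain.scan_out())  # [0, 1, 0, 0]
```

The area and speed penalty mentioned above comes from the extra multiplexing and wiring every scannable latch needs, which is why a design team might reserve the technique for irregular control logic and test regular data-path and cache structures some other way.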

Planning the i860’s burn-in called for much negotiation between the design team and the reliability engineers. The i860 normally uses 64-bit instructions; for burn-in, the reliability engineers wanted as few connections as possible: 64 was far too many.

“Initially,” said Fu, “they started out with zero wires. They wanted us to self-test. So we said, ‘How about 15 or 20?’”

They compromised with an 8-bit mode that was to be used only for the burn-in, but with this feature i860 users can boot up the system from an 8-bit wide erasable programmable ROM.

The designers also worked closely with the group developing the 1-μm manufacturing process first used on a compaction of the 80386 chip that appeared early in 1988. Ordinarily, Intel vice president Yu said, the design and process engineers “don’t speak the same language. So tying the technology so closely to the architecture was unique.”

Said William Siu, process development engineering manager at Intel’s Hillsboro plant: “This process is designed for very low parasitic capacitance, which allows circuits to be built that have high performance and consume less power. We had to work with the design people to show them our limitations.”

The process engineers had the most influence on the on-chip caches. “Initially,” said designer Patel, “we weren’t sure how big the caches could be. We thought that we couldn’t put in as big a cache as we wanted, but they told us the process was good enough to do that.”

A matter of timing

The i860’s most original architectural feature is perhaps its on-chip parallelism. The instruction cache’s two 32-bit segments issue two simultaneous 32-bit instructions, one to the RISC core, the other to the floating-point section. Going one step further, certain floating-point instructions call upon adder and multiplier simultaneously. The result is a total of three operations acted upon in one clock cycle.
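
As a back-of-the-envelope illustration, the peak rates implied by one, two, and three operations per clock can be computed directly. The clock rates are the 33-MHz target and the 40-MHz version mentioned elsewhere in the article; the function and constant names are illustrative, and these are theoretical ceilings that ignore cache misses, freezes, and code that cannot be paired.

```python
def peak_ops_per_second(clock_hz: float, ops_per_cycle: int) -> float:
    """Upper bound on operations per second at a given issue width."""
    return clock_hz * ops_per_cycle


CORE_ONLY = 1    # one integer instruction per clock
DUAL_ISSUE = 2   # integer plus floating-point instruction together
DUAL_OP_FP = 3   # integer plus a simultaneous add and multiply

if __name__ == "__main__":
    for mhz in (33, 40):
        for width in (CORE_ONLY, DUAL_ISSUE, DUAL_OP_FP):
            rate = peak_ops_per_second(mhz * 1e6, width)
            print(f"{mhz} MHz x {width}: {rate / 1e6:.0f} million ops/s peak")
```

At 40 MHz the three-wide case tops out at 120 million operations per second, but only for instruction streams that keep the core, the adder, and the multiplier all busy on every clock.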

The architecture increases the chip’s speed, but because it complicated the timing, implementing it presented problems. For example, if two or three parallel operations request the same data, they must be served serially. Many bugs found in the chip’s design involved this kind of synchronization.

The logic that freezes a unit when needed data is for the moment unavailable presented one of the biggest timing headaches. Initially, designers thought this situation would not crop up too often, but the on-chip parallelism caused it more frequently than had been anticipated.

The freeze logic grew and grew until, said Patel, “it became so kludgy we decided to sit down and redesign the whole freeze logic.” That was not a trivial decision: the chip was about halfway through its design schedule and that one revision took four engineers more than a month.

As the number of transistors approached the 1 million mark, the CAD tools that had been so much help began to break down. Intel has developed CAD tools in-house, believing its own tools would be more tightly coupled with its process and design technology, and therefore more efficient. But the N10 represented a huge advance on the 80386, Intel’s largest microprocessor to date, and the CAD systems had never been applied to a project anywhere near the size of the new chip. Indeed, because the i860’s parallelism has resulted in huge numbers of possible combinations (tens of millions were tested; the total is many times that), its complexity is staggering.

Even running on a large mainframe, the circuit simulations were bogging down. Engineers would set one to run over the weekend and find it incomplete when they came in on Monday. That was too long to wait, so they took to their CAD tools to change the simulation program. One tool that goes through a layout to localize short circuits ran for days, then gave up. “We had to go in and change the algorithm for that program,” Willoner said.

The team first planned to plot the entire chip layout as an aid in debugging, but found that it would take more than a week of running the plotters around the clock. They gave up, and instead examined the chip’s individual regions on workstations.

But now the mainframe running all these tools began to balk. The engineers took to setting their alarm clocks to ring several times during the night and logging on to the system through their terminals at home to restart any computer run that had crashed.

Before a chip design is turned over to manufacturing for its first silicon run (a transfer called the tape-out), the computer performs full-chip verification, comparing the schematics with the layout. To do this, it needs a net list, an intermediate version of the schematic, in the form of alphanumerics. The net list is usually created only a few days before tape-out, when the design is final. But knowing the 486 team was on their heels and would soon be demanding (and, as a priority project, receiving) the manufacturing department’s resources, the N10 team did a full-chip-verification dry run two months early with an incomplete design.
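
In spirit, a net list is a textual record of which device terminals connect to which nets, and verification checks that the list extracted from the layout matches the one derived from the schematic. The sketch below is a deliberately simplified illustration with hypothetical device and net names; it assumes both lists use identical names, whereas production verification tools must match circuits structurally and at a scale of a million devices.

```python
# Device name -> nets on its terminals, in a fixed terminal order.
Netlist = dict[str, tuple[str, ...]]


def connectivity(netlist: Netlist) -> set[tuple[str, tuple[str, ...]]]:
    """Flatten a net list into a set of (device, nets) facts."""
    return {(device, tuple(nets)) for device, nets in netlist.items()}


def compare(schematic: Netlist, layout: Netlist) -> dict[str, set]:
    """Report connections present in one net list but not the other."""
    sch, lay = connectivity(schematic), connectivity(layout)
    return {"missing_in_layout": sch - lay, "extra_in_layout": lay - sch}


if __name__ == "__main__":
    # Hypothetical two-transistor inverter; terminals are (drain, gate, source).
    schematic = {"M1": ("out", "in", "gnd"), "M2": ("out", "in", "vdd")}
    layout = {"M1": ("out", "in", "gnd"), "M2": ("out", "in", "gnd")}  # miswired
    print(compare(schematic, layout))
```

Here the comparison flags that transistor M2's source is tied to ground in the layout where the schematic calls for the supply rail. An error of that kind is cheap to fix before tape-out and very expensive afterward, which is why a failure of the net-list step itself was so alarming.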

And the net-list software failed completely; the schematic was simply too big. “Here we were, approaching tape-out, and we suddenly discover we can’t net-list this thing,” said Albers. “In three days one of our engineers figured out a way around it, but it had us scared for a while.”

Into silicon

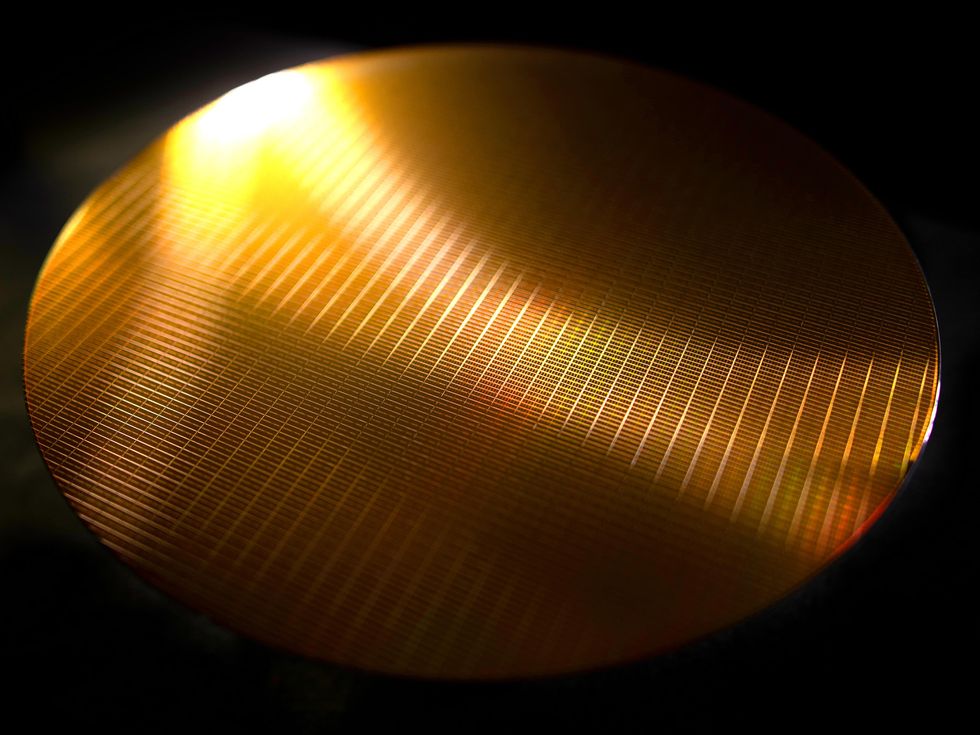

After mid-August, when the chip was turned over to the product engineering department to be prepared for manufacture, all the design team could do was wait, worry, and tweak their test programs in the hope that the first silicon run would prove functional enough to test completely. And six weeks later, when the first batch of wafers arrived, they were complete enough to be tested, but not enough to be packaged. Normally, design and product engineering teams wait until wafers are through the production process before testing them, but not this time.

Rajeev Bharadhwaj, a design engineer, flew to Oregon (on a Monday) to pick up the first wafers, hot off the line. By 9:30 p.m. he was back in Santa Clara, where the whole design team, as well as product engineers and marketing people, waited while the first test sequences ran, at no more than 10 MHz, far below the 33 MHz target. It looked like a disaster, but after the engineers spent 20 nervous minutes going over critical paths in the chips looking for the bottleneck, one noticed that the power-supply pin was not connected: the chip had been drawing power only from the clock signal and its I/O systems. Once the power pin was connected, the chip ran easily at 40 MHz.

By 3 a.m., some 8000 test vectors had been run through the chip, vectors that the product engineer had worked six months to create. This was enough for the team to pronounce confidently: “It works!”

The i860 designation was chosen to indicate that the new chip does bear a slight relationship to the 80486: because the chips structure their data with the same byte ordering and have compatible memory-management systems, they can work together in a system and exchange data.

This little chip goes to market

Intel expects to have the chip available in quantity by the fourth quarter of this year, at $750 for the 33 MHz version and $1037 for the 40 MHz version, and has already shipped samples to customers. (Peripheral chips for the 386 can be used with the i860 and are already on the market.) Because the i860 has the same data-storage structure as the 386, operating systems for the 386 can be easily adapted to the new chip.

Intel has announced a joint effort toward developing a multiprocessing version of Unix for the i860 with AT&T Co. (Unix Software Operation, Morristown, N.J.), Olivetti Research Center (Menlo Park, Calif.), Prime Computer (Commercial Systems Group, Natick, Mass.), and Convergent Technologies (San Jose, Calif., a division of Unisys Corp.). Tektronix Inc. and Kontron Elektronik GmbH plan to manufacture debuggers (logic analyzers) for the chip.

For software developers, Intel has developed a basic tool kit (assemblers, simulators, debuggers, and the like) and Fortran and C compilers. In addition, Intel has a Fortran vectorizer, a tool that automatically restructures standard Fortran code into vector processes with a technology previously available only for supercomputers.

IBM plans to make the i860 available as an accelerator for the PS/2 series of personal computers, which would boost them to near-supercomputer performance. Kontron, SPEA Software AG, and Number Nine Computer Corp. will be using the i860 in personal-computer graphics boards. Microsoft Corp. has endorsed the architecture but has not yet announced products.

Minicomputer vendors are excited about the chip because the integer performance is much higher than was expected when the project began.

“We have the Dhrystone record on a microprocessor today,” said Kohn: 85,000 at 40 MHz. (A Dhrystone is a synthetic benchmark representing an average integer program and is used to measure integer performance of a microprocessor or computer system.) Olivetti is one company that will be using the N10 in minicomputers, as will PCS Computer Systems Inc.

Megatek Corp. is the first company to announce plans to make i860-based workstations, in a market where the chip will be competing with such other RISC microprocessors as SPARC from Sun, the 88000 from Motorola, Clipper from Intergraph Corp., and R3000 from MIPS Computer Systems Inc.

Intel sees its chip as having leapfrogged the current 32-bit generation of microprocessors. The company’s engineers think the i860 has another advantage: whereas floating-point chips, graphics chips, and caches must be added to the other microprocessors to build a complete system, the i860 is fully integrated, and therefore eliminates communications overhead. Some critics see this as a disadvantage, however, because it limits the choices open to system designers. It remains to be seen if this feature can overcome the lead the other chips have in the market.

The i860 team expects other microprocessor manufacturers to follow with their own 64-bit products that integrate other capabilities besides RISC integer processing onto a single chip. As the leader in the new RISC generation, however, Intel hopes the i860 will set a standard for workstations, just as the 8086 did for personal computers.

To probe further

Intel’s first paper describing the i860, “A 1,000,000 transistor microprocessor,” by Leslie Kohn and SaiWai Fu, was published in the 1989 International Solid-State Circuits Conference Digest of Technical Papers, February 1989, pp. 54-55.

The advantages of reduced-instruction-set computing (RISC) are discussed in “Toward simpler, faster computers,” by Paul Wallich (IEEE Spectrum, August 1985, pp. 38-45).

Editor’s note June 2022: The i860 (N10) microprocessor didn’t exactly take the marketplace by storm. Though it handled graphics with impressive speed and found a niche as a graphics accelerator, its performance on general-purpose applications was disappointing. Intel discontinued the chip in the mid-1990s.