
Federated learning is a distributed way of training machine learning (ML) models where data is processed locally and only focused model updates and metrics that are intended for immediate aggregation are shared with a server that orchestrates training. This allows models to be trained on locally available signals without exposing raw data to servers, increasing user privacy. In 2021, we announced that we are using federated learning to train Smart Text Selection models, an Android feature that helps users select and copy text easily by predicting what text they want to select and then automatically expanding the selection for them.

Since that launch, we have worked to improve the privacy guarantees of this technology by carefully combining secure aggregation (SecAgg) and a distributed version of differential privacy. In this post, we describe how we built and deployed the first federated learning system that provides formal privacy guarantees to all user data before it becomes visible to an honest-but-curious server, meaning a server that follows the protocol but could try to gain insights about users from the data it receives. The Smart Text Selection models trained with this system have reduced memorization by more than two-fold, as measured by standard empirical testing methods.

Scaling secure aggregation

Data minimization is an important privacy principle behind federated learning. It refers to focused data collection, early aggregation, and minimal data retention during training. While every device participating in a federated learning round computes a model update, the orchestrating server is only interested in their average. Therefore, in a world that optimizes for data minimization, the server would learn nothing about individual updates and would only receive an aggregate model update. This is precisely what the SecAgg protocol achieves, under rigorous cryptographic guarantees.
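To make the idea concrete, here is a minimal sketch of pairwise masking, the core trick behind secure aggregation, under toy assumptions: every pair of clients already shares a random seed, no client drops out, and updates are integers modulo a hypothetical group size `MODULUS`. Each client adds masks that cancel in the sum, so the server learns only the total. The real protocol also needs key agreement and secret sharing for dropout recovery, which this sketch omits, and `random.Random` is not a cryptographic generator.

```python
import random

MODULUS = 2**32  # hypothetical size of the finite group (integers mod 2^32)


def pairwise_mask(seed: int, dim: int) -> list[int]:
    """Expand a shared seed into a mask vector (toy PRG, NOT cryptographically secure)."""
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(dim)]


def mask_update(client_id: int, update: list[int],
                seeds: dict[tuple[int, int], int]) -> list[int]:
    """Seeds are keyed by (i, j) with i < j; client i adds the mask, client j subtracts it."""
    masked = [x % MODULUS for x in update]
    for (i, j), seed in seeds.items():
        if client_id not in (i, j):
            continue
        sign = 1 if client_id == i else -1
        for k, m in enumerate(pairwise_mask(seed, len(update))):
            masked[k] = (masked[k] + sign * m) % MODULUS
    return masked


def server_sum(masked_updates: list[list[int]]) -> list[int]:
    """The server only adds masked vectors; every pairwise mask cancels in the total."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]
```

For example, with three clients holding `[1, 2, 3]`, `[4, 5, 6]`, and `[7, 8, 9]`, each masked vector looks random on its own, but `server_sum` returns `[12, 15, 18]`.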

Important to this work, two recent advancements have improved the efficiency and scalability of SecAgg at Google:

- An improved cryptographic protocol: Until recently, a significant bottleneck in SecAgg was client computation, because the work required on each device scaled linearly with the total number of clients (N) participating in the round. In the new protocol, client computation scales logarithmically in N. This, along with similar gains in server costs, results in a protocol able to handle larger rounds. Having more users participate in each round improves privacy, both empirically and formally.

- Optimized client orchestration: SecAgg is an interactive protocol in which participating devices progress together. An important feature of the protocol is that it is robust to some devices dropping out. If a client does not send a response within a predefined time window, the protocol can continue without that client's contribution. We have deployed statistical methods to auto-tune this time window adaptively, resulting in improved protocol throughput.
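The post does not say which statistical method tunes the time window. As one hypothetical example, a server could set the window to a high empirical quantile of recently observed client response latencies, so most clients make it in while stragglers are dropped. The `AdaptiveTimeout` class, the nearest-rank quantile rule, and all default values below are illustrative assumptions, not the deployed method.

```python
import math
from collections import deque


class AdaptiveTimeout:
    """Hypothetical tuner: timeout = a quantile of recent response latencies, clamped."""

    def __init__(self, quantile: float = 0.95, window: int = 1000,
                 min_s: float = 1.0, max_s: float = 120.0, default_s: float = 30.0):
        self.quantile = quantile
        self.latencies = deque(maxlen=window)  # sliding window of latencies, in seconds
        self.min_s, self.max_s, self.default_s = min_s, max_s, default_s

    def observe(self, latency_s: float) -> None:
        """Record how long one client took to respond."""
        self.latencies.append(latency_s)

    def timeout(self) -> float:
        """Nearest-rank quantile of recent latencies, clamped to [min_s, max_s]."""
        if not self.latencies:
            return self.default_s  # no data yet: fall back to a fixed window
        ordered = sorted(self.latencies)
        idx = max(0, math.ceil(self.quantile * len(ordered)) - 1)
        return min(self.max_s, max(self.min_s, ordered[idx]))
```

A higher quantile waits for more clients (more contributions per round, slower rounds); a lower one finishes rounds faster but drops more devices.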

The above improvements made it easier and faster to train Smart Text Selection with stronger data minimization guarantees.

Aggregating everything via secure aggregation

A typical federated training system involves aggregating not only model updates but also metrics that describe the performance of local training. These are important for understanding model behavior and debugging potential training issues. In federated training for Smart Text Selection, all model updates and metrics are aggregated via SecAgg. This behavior is statically asserted using TensorFlow Federated and locally enforced in Android's Private Compute Core secure environment. As a result, privacy is further enhanced for users participating in Smart Text Selection training, because unaggregated model updates and metrics are not visible to any part of the server infrastructure.
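The post does not show how TensorFlow Federated performs this static check. As a hypothetical illustration of what "statically asserting" the property means, the sketch below models an aggregation plan as a list of server-visible outputs, each tagged with the operator that produces it, and rejects the plan before anything runs if any output bypasses secure summation. The `Output` type, the operator names, and `assert_all_secure` are invented for this example and are not TFF APIs.

```python
from dataclasses import dataclass

SECURE_OPS = frozenset({"secure_sum"})  # hypothetical: the only permitted aggregation


@dataclass(frozen=True)
class Output:
    name: str        # e.g. "model_delta" or "loss_sum"
    aggregator: str  # operator that produces this server-visible value


class InsecureAggregationError(ValueError):
    pass


def assert_all_secure(plan: list[Output]) -> None:
    """Reject a plan up front if any server-visible output is not securely aggregated."""
    bad = [o.name for o in plan if o.aggregator not in SECURE_OPS]
    if bad:
        raise InsecureAggregationError(f"outputs not securely aggregated: {bad}")
```

The point of checking statically is that a misconfigured metric is caught when the computation is built, rather than after raw per-device values have already reached the server.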

Differential privacy

SecAgg helps minimize data exposure, but it does not necessarily produce aggregates that guarantee against revealing anything unique to an individual. This is where differential privacy (DP) comes in. DP is a mathematical framework that limits an individual's influence on the outcome of a computation, such as the parameters of an ML model. This is achieved by bounding the contribution of any individual user and adding noise during the training process to produce a probability distribution over output models. DP comes with a parameter (ε) that quantifies how much this distribution could change when the training examples of any individual user are added or removed (the smaller, the better).
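A common way to bound each user's contribution and add noise is to clip every update to a fixed L2 norm and add Gaussian noise scaled to that bound (the Gaussian mechanism). The minimal sketch below assumes that approach, with illustrative parameters `clip_norm` and `noise_multiplier`. It shows only the mechanics; turning a noise multiplier into an ε requires a separate privacy accountant, which is not shown.

```python
import numpy as np


def clip_l2(update: np.ndarray, clip_norm: float) -> np.ndarray:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = np.linalg.norm(update)
    return update * min(1.0, clip_norm / norm) if norm > 0 else update


def dp_sum(updates: list[np.ndarray], clip_norm: float, noise_multiplier: float,
           rng: np.random.Generator) -> np.ndarray:
    """Sum clipped updates, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    total = np.sum([clip_l2(u, clip_norm) for u in updates], axis=0)
    return total + rng.normal(0.0, noise_multiplier * clip_norm, size=total.shape)
```

Because adding or removing one user changes the clipped sum by at most `clip_norm` in L2 norm, the noise-to-clip ratio is what governs ε: a larger `noise_multiplier` yields a smaller ε.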

Recently, we announced a new method of federated training that enforces formal and meaningfully strong DP guarantees in a centralized manner, where a trusted server controls the training process. This protects against external attackers who may attempt to analyze the model. However, this approach still relies on trust in the central server. To provide even greater privacy protections, we have created a system that uses distributed differential privacy (DDP) to enforce DP in a distributed manner, integrated within the SecAgg protocol.

Distributed differential privacy

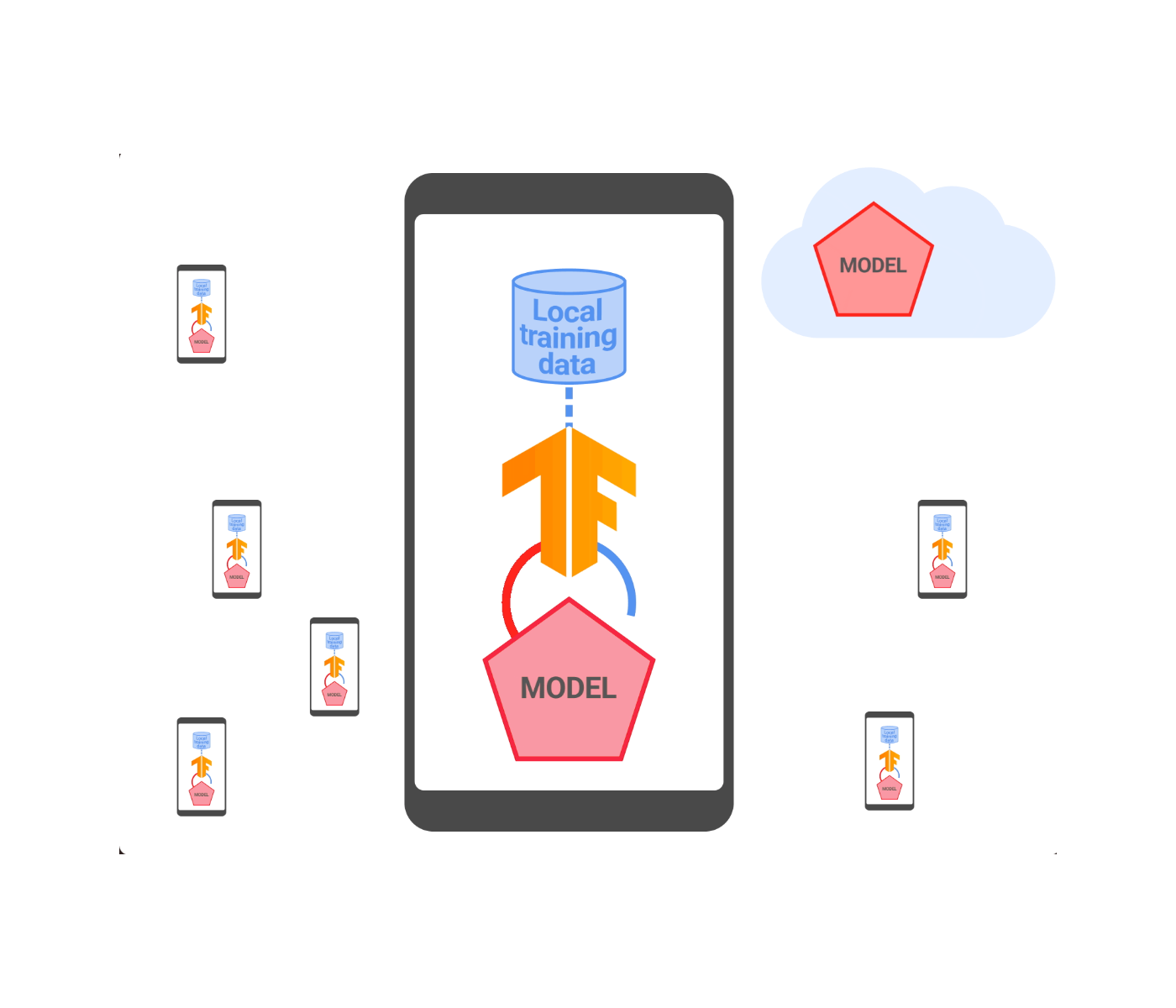

DDP is a technology that offers DP guarantees with respect to an honest-but-curious server coordinating training. It works by having each participating device clip and noise its update locally, and then aggregating these noisy, clipped updates through the new SecAgg protocol described above. As a result, the server only sees the noisy sum of the clipped updates.
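The property that makes DDP work is that independent noise shares add up. The sketch below assumes continuous Gaussian noise for simplicity (the deployed system uses integer noise, as the next section explains) and models SecAgg as a plain sum. If each of `num_clients` clients adds noise with variance σ²/n to its clipped update, the aggregate carries noise with variance σ², as if a trusted server had added it centrally, yet no single party adds or observes the full noise. The function names are illustrative.

```python
import numpy as np


def clip_l2(update: np.ndarray, clip_norm: float) -> np.ndarray:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = np.linalg.norm(update)
    return update * min(1.0, clip_norm / norm) if norm > 0 else update


def client_contribution(update: np.ndarray, clip_norm: float, total_noise_std: float,
                        num_clients: int, rng: np.random.Generator) -> np.ndarray:
    """Clip locally, then add this client's noise share with variance total_std**2 / n."""
    share_std = total_noise_std / np.sqrt(num_clients)
    clipped = clip_l2(update, clip_norm)
    return clipped + rng.normal(0.0, share_std, size=clipped.shape)


def secure_aggregate(contributions: list[np.ndarray]) -> np.ndarray:
    """Stand-in for SecAgg: the server learns only the sum of the contributions."""
    return np.sum(contributions, axis=0)
```

If some clients drop out, fewer shares are summed and the total noise falls short of σ², so a practical design must account for dropouts when sizing each share.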

However, combining local noise addition with SecAgg presents significant challenges in practice:

- An improved discretization method: One challenge is properly representing model parameters as integers in SecAgg's finite group with integer modular arithmetic, which can inflate the norm of the discretized model and require more noise for the same privacy level. For example, randomized rounding to the nearest integers could inflate the user's contribution by a factor equal to the number of model parameters. We addressed this by scaling the model parameters, applying a random rotation, and rounding to the nearest integers. We also developed an approach for auto-tuning the discretization scale during training. This led to an even more efficient and accurate integration between DP and SecAgg.

- Optimized discrete noise addition: Another challenge is devising a scheme for choosing an arbitrary number of bits per model parameter without sacrificing end-to-end privacy guarantees, which depend on how the model updates are clipped and noised. To address this, we added integer noise in the discretized domain and analyzed the DP properties of sums of integer noise vectors using the distributed discrete Gaussian and distributed Skellam mechanisms.

An overview of federated learning with distributed differential privacy.
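The discretization and integer-noise steps described above can be sketched together. This is a minimal sketch under stated assumptions, not the TensorFlow Federated implementation: updates are assumed to be already clipped; the random rotation is taken to be a randomized Hadamard transform (random sign flips followed by a normalized Walsh-Hadamard transform) seeded by a value shared by all clients and the server; the scale is fixed rather than auto-tuned; the integer noise is Skellam noise (the difference of two Poisson draws) split evenly across clients; and SecAgg is simulated as a sum modulo 2**bits. The names `scale`, `noise_var`, and `bits` are illustrative.

```python
import numpy as np


def fwht(x: np.ndarray) -> np.ndarray:
    """Normalized fast Walsh-Hadamard transform (orthogonal, self-inverse); len must be 2^k."""
    x = x.astype(np.float64)  # astype returns a copy, so the input is left untouched
    h = 1
    while h < len(x):
        for i in range(0, len(x), 2 * h):
            a = x[i:i + h].copy()
            b = x[i + h:i + 2 * h].copy()
            x[i:i + h], x[i + h:i + 2 * h] = a + b, a - b
        h *= 2
    return x / np.sqrt(len(x))


def _signs(seed: int, size: int) -> np.ndarray:
    """Random +/-1 diagonal, reproducible from a seed shared by clients and server."""
    return np.random.default_rng(seed).choice([-1.0, 1.0], size=size)


def rotate(x: np.ndarray, seed: int) -> np.ndarray:
    d = 1 << (len(x) - 1).bit_length()  # pad to the next power of 2
    padded = np.zeros(d)
    padded[: len(x)] = x
    return fwht(_signs(seed, d) * padded)


def unrotate(y: np.ndarray, seed: int, orig_len: int) -> np.ndarray:
    return (_signs(seed, len(y)) * fwht(y))[:orig_len]


def client_encode(update, seed, scale, noise_var, num_clients, bits, rng):
    """Rotate, scale, round to nearest integer, add a Skellam noise share, wrap mod 2**bits."""
    z = np.rint(rotate(update, seed) * scale).astype(np.int64)
    # Poisson(lam) - Poisson(lam) has variance 2 * lam; summed over all clients,
    # the shares form Skellam noise with total variance noise_var.
    lam = noise_var / (2 * num_clients)
    noise = rng.poisson(lam, z.shape) - rng.poisson(lam, z.shape)
    return (z + noise) % (2 ** bits)


def secure_sum_mod(encoded, bits):
    """Stand-in for SecAgg: the server only obtains the sum modulo 2**bits."""
    return np.sum(encoded, axis=0) % (2 ** bits)


def server_decode(sum_mod, seed, scale, bits, orig_len):
    """Map back to signed integers, undo the scaling, then undo the rotation."""
    m = 2 ** bits
    signed = np.where(sum_mod >= m // 2, sum_mod - m, sum_mod)
    return unrotate(signed / scale, seed, orig_len)
```

The rotation spreads each update's mass evenly across coordinates, so no single coordinate dominates after rounding. With a small field such as 12 bits, `scale` must keep the noisy integer sum within roughly ±2^11 to avoid wraparound, which is one reason tuning the scale matters.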

We tested our DDP solution on a variety of benchmark datasets and in production, and validated that we can match the accuracy of central DP with a SecAgg finite group of size 12 bits per model parameter. This meant that we were able to achieve added privacy advantages while also reducing memory and communication bandwidth. To demonstrate this, we applied this technology to train and launch Smart Text Selection models, with an amount of noise chosen to maintain model quality. All Smart Text Selection models trained with federated learning now come with DDP guarantees that apply to both the model updates and the metrics seen by the server during training. We have also open sourced the implementation in TensorFlow Federated.
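As rough arithmetic for the bandwidth saving, assume a 32-bit floating-point baseline (the post does not state the baseline) and a hypothetical model with one million parameters. Sending 12 bits per parameter instead of 32 shrinks each update by a factor of 32/12, about 2.67.

```python
def update_size_bytes(num_params: int, bits_per_param: int) -> float:
    """Bytes needed to send one update at a fixed number of bits per parameter."""
    return num_params * bits_per_param / 8


NUM_PARAMS = 1_000_000  # hypothetical model size, not taken from the post
baseline = update_size_bytes(NUM_PARAMS, 32)  # assumed 32-bit float baseline
ddp = update_size_bytes(NUM_PARAMS, 12)       # 12 bits per parameter, as reported
print(f"{baseline / 1e6:.1f} MB -> {ddp / 1e6:.1f} MB ({baseline / ddp:.2f}x smaller)")
```

Under these assumptions this prints `4.0 MB -> 1.5 MB (2.67x smaller)`.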

Empirical privacy testing

While DDP adds formal privacy guarantees to Smart Text Selection, these formal guarantees are relatively weak (a finite but large ε, in the hundreds). However, any finite ε is an improvement over a model with no formal privacy guarantee, for several reasons: 1) a finite ε moves the model into a regime where further privacy improvements can be quantified; and 2) even large ε's can indicate a substantial decrease in the ability to reconstruct training data from the trained model. To get a more concrete understanding of the empirical privacy advantages, we performed thorough analyses by applying the Secret Sharer framework to Smart Text Selection models. Secret Sharer is a model auditing technique that can be used to measure the degree to which models unintentionally memorize their training data.
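Secret Sharer inserts synthetic "canary" sequences into the training data and then checks how highly the trained model ranks each canary among candidate sequences it never trained on. The minimal sketch below computes the two quantities the framework reports, assuming a model score is already available for the canary and for each reference candidate (for example, log-perplexity, where lower means more likely). Exposure follows the Secret Sharer definition, log2(|R|) - log2(rank), where R is the candidate set including the canary; the function names are illustrative.

```python
import math


def canary_rank(canary_score: float, reference_scores: list[float]) -> int:
    """1-based rank of the canary among canary + references (lower score = more likely)."""
    return 1 + sum(1 for s in reference_scores if s < canary_score)


def exposure(canary_score: float, reference_scores: list[float]) -> float:
    """Secret Sharer exposure: log2(|R|) - log2(rank), with R = references plus the canary."""
    total = len(reference_scores) + 1
    return math.log2(total) - math.log2(canary_rank(canary_score, reference_scores))
```

A canary ranked first among 16 candidates has exposure 4 bits (maximally memorized for that set); a larger rank means lower exposure, so a higher rank indicates less memorization.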

To perform Secret Sharer analyses for Smart Text Selection, we set up control experiments that aggregate gradients using SecAgg alone. The treatment experiments use distributed differential privacy aggregators with different amounts of noise.

We found that even low amounts of noise reduce memorization meaningfully, more than doubling the Secret Sharer rank metric for relevant canaries compared to the baseline. This means that even though the DP ε is large, we empirically verified that these amounts of noise already help reduce memorization for this model. However, to further improve on this and to obtain stronger formal guarantees, we aim to use even larger noise multipliers in the future.

Next steps

We developed and deployed the first federated learning and distributed differential privacy system that comes with formal DP guarantees with respect to an honest-but-curious server. While it offers substantial additional protections, a fully malicious server might still be able to get around the DDP guarantees, either by manipulating the public key exchange of SecAgg or by injecting a sufficient number of “fake” malicious clients that do not add the prescribed noise into the aggregation pool. We are excited to address these challenges by continuing to strengthen the DP guarantee and its scope.
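To see why fake clients matter, assume the noise-splitting scheme in which each of n clients adds a 1/n share of the target noise variance. If k of those clients are server-controlled and add no noise, the noise actually protecting honest users has only (n - k)/n of the target variance, because the server knows its own clients' inputs and can subtract them. The helper below is a hypothetical illustration of that arithmetic.

```python
import math


def effective_noise_std(target_std: float, num_clients: int, num_fake: int) -> float:
    """Noise std protecting honest clients when num_fake clients skip their noise share."""
    if not 0 <= num_fake <= num_clients:
        raise ValueError("num_fake must be between 0 and num_clients")
    honest = num_clients - num_fake
    return target_std * math.sqrt(honest / num_clients)
```

For example, if 75 of 100 clients are fake, the effective noise standard deviation halves, and since ε grows as the noise shrinks relative to the clipping bound, the formal guarantee weakens accordingly.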

Acknowledgements

The authors would like to thank Adria Gascon for significant impact on the blog post itself, as well as the people who helped develop these ideas and bring them to practice: Ken Liu, Jakub Konečný, Brendan McMahan, Naman Agarwal, Thomas Steinke, Christopher Choquette, Adria Gascon, James Bell, Zheng Xu, Asela Gunawardana, Kallista Bonawitz, Mariana Raykova, Stanislav Chiknavaryan, Tancrède Lepoint, Shanshan Wu, Yu Xiao, Zachary Charles, Chunxiang Zheng, Daniel Ramage, Galen Andrew, Hugo Song, Chang Li, Sofia Neata, Ananda Theertha Suresh, Timon Van Overveldt, Zachary Garrett, Wennan Zhu, and Lukas Zilka. We would also like to thank Tom Small for creating the animated figure.