
This sponsored article is brought to you by the NYU Tandon School of Engineering.

If you’ve ever learned to cook, you know how daunting even simple tasks can be at first. It’s a delicate dance of ingredients, movement, heat, and techniques that newcomers need endless practice to master.

But imagine if you had someone, or something, to assist you. Say, an AI assistant that could walk you through everything you need to know and do, in real time, to make sure nothing is missed, guiding you to a stress-free, delicious dinner.

Claudio Silva, director of the Visualization Imaging and Data Analytics (VIDA) Center and professor of computer science and engineering and data science at the NYU Tandon School of Engineering and NYU Center for Data Science, is doing just that. He is leading an initiative to develop an artificial intelligence (AI) “virtual assistant” that provides just-in-time visual and audio feedback to help with task execution.

And while cooking serves as part of the project’s proof of concept in a low-stakes environment, the work lays the foundation for the technology to one day be used for everything from guiding mechanics through complex repair jobs to helping combat medics perform life-saving surgery on the battlefield.

“A checklist on steroids”

The project is part of a nationwide effort involving eight other institutional teams, funded by the Defense Advanced Research Projects Agency (DARPA) Perceptually-enabled Task Guidance (PTG) program. With the support of a $5 million DARPA contract, the NYU group aims to develop AI technologies that help people perform complex tasks, making these users more versatile by expanding their skill sets and more proficient by reducing their errors.

Claudio Silva is the co-director of the Visualization Imaging and Data Analytics (VIDA) Center and professor of computer science and engineering at the NYU Tandon School of Engineering and NYU Center for Data Science.

NYU Tandon

The NYU group, including investigators from NYU Tandon’s Department of Computer Science and Engineering, the NYU Center for Data Science (CDS) and the Music and Audio Research Laboratory (MARL), has been performing fundamental research on knowledge transfer, perceptual grounding, perceptual attention and user modeling to create a dynamic intelligent agent that engages with the user, responding not only to circumstances but also to the user’s emotional state, location, surrounding conditions and more.

Dubbing it a “checklist on steroids,” Silva says the project aims to develop the Transparent, Interpretable, and Multimodal Personal Assistant (TIM), a system that can “see” and “hear” what users see and hear, interpret spatiotemporal contexts, and provide feedback through speech, sound and graphics.

While the project’s initial research use cases focus on military applications such as assisting medics and helicopter pilots, countless other scenarios can benefit from this research: effectively any physical task.

“The vision is that when someone is performing a certain operation, this intelligent agent would not only guide them through the procedural steps for the task at hand, but also be able to automatically track the process, and sense both what is happening in the environment, and the cognitive state of the user, while being as unobtrusive as possible,” said Silva.
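To make the idea of automatically tracking procedural steps a little more concrete, here is a toy checklist that advances only when an upstream perception model reports the step it expects next. This is a minimal sketch under loudly stated assumptions, not TIM’s design: the `ChecklistTracker` class, the recipe step names, and the premise that a detector emits one step label at a time are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ChecklistTracker:
    """Hypothetical step tracker (not TIM's actual implementation).

    Walks an ordered list of steps and advances only when an assumed
    upstream detector reports the step that is expected next.
    """
    steps: list[str]
    current: int = 0
    log: list[str] = field(default_factory=list)

    def observe(self, detected_step: str) -> str:
        """Feed one detected step label and return a guidance message."""
        if self.current >= len(self.steps):
            return "All steps complete."
        expected = self.steps[self.current]
        if detected_step == expected:
            # Expected step observed: record it and move to the next one.
            self.log.append(detected_step)
            self.current += 1
            if self.current == len(self.steps):
                return "All steps complete."
            return f"Done: {expected}. Next: {self.steps[self.current]}"
        if detected_step in self.steps[self.current + 1:]:
            # The user jumped ahead, so warn that a step was skipped.
            return f"Wait: '{expected}' has not been done yet."
        # Anything else (unknown or already-finished step): keep waiting.
        return f"Still on: {expected}"


tracker = ChecklistTracker(["measure water", "boil water", "add pasta"])
print(tracker.observe("boil water"))     # warns that "measure water" was skipped
print(tracker.observe("measure water"))  # advances to "boil water"
```

A real assistant would also have to cope with noisy detections, optional or reorderable steps, and the user’s cognitive state; this sketch deliberately ignores all of that and only shows the checklist core.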

The project brings together a team of researchers from across computing, including visualization, human-computer interaction, augmented reality, graphics, computer vision, natural language processing, and machine listening. It includes 14 NYU faculty and students, with co-PIs Juan Bello, professor of computer science and engineering at NYU Tandon; Kyunghyun Cho and He He, associate and assistant professors (respectively) of computer science and data science at NYU Courant and CDS; and Qi Sun, assistant professor of computer science and engineering at NYU Tandon and a member of the Center for Urban Science + Progress.

The project uses the Microsoft Hololens 2 augmented reality system as its hardware platform testbed. Silva said that, thanks to its array of cameras, microphones, lidar scanners, and inertial measurement unit (IMU) sensors, the Hololens 2 headset is an ideal experimental platform for Tandon’s proposed TIM system.

In building the technology, Silva’s team turned to a specific task that requires a great deal of visual analysis and can benefit from a checklist-based system: cooking.

NYU Tandon

“Integrating Hololens will allow us to deliver massive amounts of input data to the intelligent agent we are developing, allowing it to ‘understand’ the static and dynamic environment,” explained Silva, adding that the volume of data generated by the Hololens’ sensor array requires integrating a remote AI system, which in turn needs a very high-speed, ultra-low-latency wireless connection between the headset and remote cloud computing.

To hone TIM’s capabilities, Silva’s team will train it on a process that is at once mundane and highly dependent on the correct, step-by-step performance of discrete tasks: cooking. A critical element in this video-based training process is to “teach” the system to locate, by interpreting video frames, the starting and ending point of each action in the demonstration.
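One simple way to turn frame-by-frame interpretations into start and end points is to group consecutive frames that share the same predicted action label into segments. The Python sketch below illustrates only that grouping step; it assumes some per-frame action classifier already exists, and the function name `segment_actions`, the `"none"` background label, the frame rate, and the minimum-length threshold are all hypothetical choices, not details of the NYU system.

```python
from itertools import groupby


def segment_actions(frame_labels, fps=30.0, min_frames=3, background="none"):
    """Group consecutive per-frame labels into (label, start_s, end_s) segments.

    frame_labels: one predicted action label per video frame, assumed to come
    from some upstream classifier (hypothetical). Background frames and runs
    shorter than min_frames are dropped as noise. Start times are inclusive
    and end times exclusive, both in seconds.
    """
    segments = []
    frame = 0  # index of the first frame in the current run
    for label, run in groupby(frame_labels):
        length = sum(1 for _ in run)
        if label != background and length >= min_frames:
            segments.append((label, frame / fps, (frame + length) / fps))
        frame += length
    return segments


labels = ["none", "none", "crack egg", "crack egg", "crack egg",
          "whisk", "stir", "stir", "stir", "stir"]
print(segment_actions(labels, fps=2.0))
# [('crack egg', 1.0, 2.5), ('stir', 3.0, 5.0)]
```

The single-frame "whisk" run is discarded as flicker. Production temporal action localization models typically learn boundaries directly rather than relying on a fixed threshold like this, so treat the sketch as an intuition pump only.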

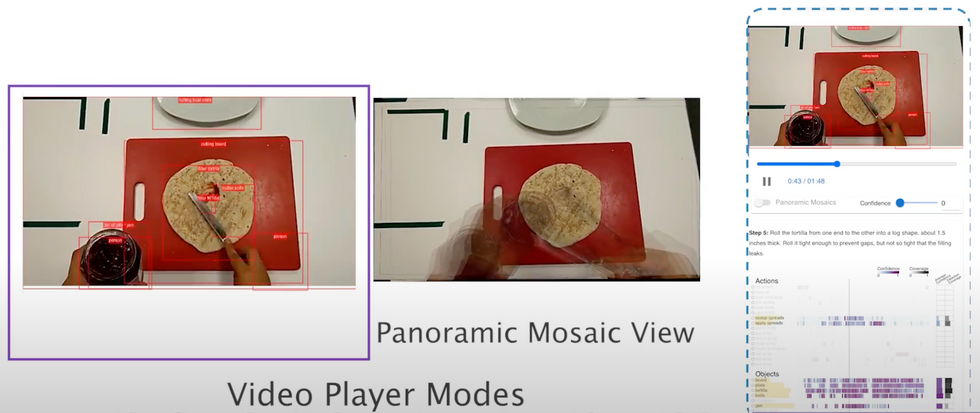

The team is already making significant progress. Their first major paper, “ARGUS: Visualization of AI-Assisted Task Guidance in AR,” won a Best Paper Honorable Mention Award at IEEE VIS 2023. The paper proposes a visual analytics system, called ARGUS, to support the development of intelligent AR assistants.

The system was designed as part of a multiyear collaboration between visualization researchers and ML and AR experts. It supports online visualization of object, action, and step detection as well as offline analysis of previously recorded AR sessions. It visualizes not only the multimodal sensor data streams but also the output of the ML models. This lets developers gain insight into both the performer’s actions and the ML models, helping them troubleshoot, improve, and fine-tune the components of the AR assistant.

“It’s conceivable that in five to ten years these ideas will be integrated into almost everything we do.”

ARGUS, the interactive visual analytics tool, allows for real-time monitoring and debugging while an AR system is in use. It lets developers see what the AR system sees and how it is interpreting the environment and user actions. They can also adjust settings and record data for later analysis.

NYU Tandon

Where all things data science and visualization happen

Silva notes that the DARPA project, focused as it is on human-centered and data-intensive computing, sits right at the center of what VIDA does: using advanced data analysis and visualization techniques to illuminate the underlying factors that influence a host of areas of critical societal importance.

“Most of our current projects have an AI component and we tend to build systems — such as the ARt Image Exploration Space (ARIES) in collaboration with the Frick Collection, the VisTrails data exploration system, or the OpenSpace project for astrographics, which is deployed at planetariums around the world. What we make is really designed for real-world applications, systems for people to use, rather than as theoretical exercises,” said Silva.

“What we make is really designed for real-world applications, systems for people to use, rather than as theoretical exercises.” —Claudio Silva, NYU Tandon

VIDA comprises nine full-time faculty members focused on applying the latest advances in computing and data science to solve varied data-related issues, including quality, efficiency, reproducibility, and legal and ethical implications. The faculty, along with their researchers and students, are helping to provide key insights into myriad challenges where big data can inform better decision-making.

What separates VIDA from other groups of data scientists is that its members work with data along the entire pipeline, from collection, to processing, to analysis, to real-world impact. They use data in different ways (improving public health outcomes, analyzing urban congestion, identifying biases in AI models), but the core of their work lies in this comprehensive view of data science.

The center has dedicated facilities for building sensors, processing massive data sets, and running controlled experiments with prototypes and AI models, among other needs. Other researchers at the university, sometimes blessed with data sets and models too big and complex to handle themselves, come to the center for help dealing with it all.

The VIDA team is growing, continuing to attract exceptional students and publishing data science papers and presentations at a rapid clip. But its members remain focused on their core goal: using data science to effect real-world change, from the most contained problems to the most socially harmful.
