In our previous post, we discussed considerations around choosing a vector database for our hypothetical retrieval augmented generation (RAG) use case. But when building a RAG application we often have to make another important decision: choosing a vector embedding model, a critical component of many generative AI applications.

A vector embedding model is responsible for transforming unstructured data (text, images, audio, video) into a vector of numbers that captures semantic similarity between data objects. Embedding models are widely used beyond RAG applications, including in recommendation systems, search engines, databases, and other data processing systems.

Understanding their purpose, internals, advantages, and disadvantages is crucial, and that’s what we’ll cover today. While we’ll be discussing only text embedding models, models for other types of unstructured data work similarly.

What Is an Embedding Model?

Machine learning models don’t work with text directly; they require numbers as input. Since text is ubiquitous, the ML community has developed many solutions over time that handle the conversion from text to numbers. There are many approaches of varying complexity, but we’ll review just a few of them.

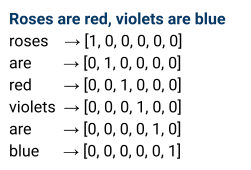

A simple example is one-hot encoding: treat the words of a text as categorical variables and map each word to a vector of 0s and a single 1.

Unfortunately, this embedding approach isn’t very practical: it leads to a large number of unique categories and, in most practical cases, to unmanageable dimensionality of the output vectors. Also, one-hot encoding doesn’t place similar vectors closer to one another in a vector space.
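To make both problems concrete, here is a minimal pure-Python sketch of one-hot encoding over a toy sentence (the sentence and the helper names `one_hot_encode` and `dot` are illustrative, not from any library). The vector length equals the vocabulary size, and every pair of distinct words has a dot product of zero, so “cat” is no closer to “kitten” than to “mat”.

```python
def one_hot_encode(tokens):
    """Map each distinct token to a vector of 0s with a single 1 (toy encoder)."""
    vocab = sorted(set(tokens))                  # one dimension per distinct word
    index = {word: i for i, word in enumerate(vocab)}
    vectors = {}
    for word in vocab:
        vec = [0] * len(vocab)
        vec[index[word]] = 1
        vectors[word] = vec
    return vectors

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

vecs = one_hot_encode("the cat sat on the mat with a kitten".split())
print(len(vecs["cat"]))                  # 8: vector length == vocabulary size
print(dot(vecs["cat"], vecs["kitten"]))  # 0: "cat" and "kitten" look unrelated
print(dot(vecs["cat"], vecs["mat"]))     # 0: and so does every other pair
```

With a realistic vocabulary of tens or hundreds of thousands of words, each vector would have that many dimensions, almost all of them zero.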

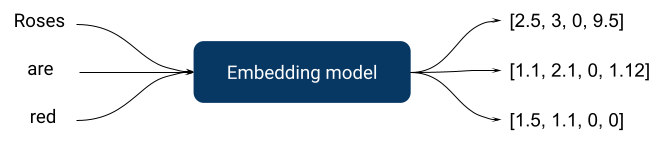

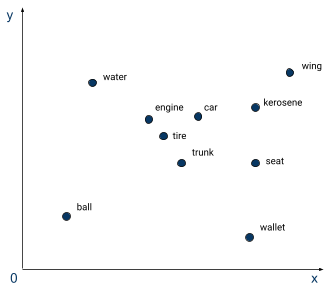

Embedding models were invented to tackle these issues. Just like one-hot encoding, they take text as input and return vectors of numbers as output, but they are more complex because they are trained on supervised tasks, often using a neural network. A supervised task could be, for example, predicting a product review’s sentiment score. In this case, the resulting embedding model would place reviews with similar sentiment closer to each other in a vector space. When building an embedding model, the choice of supervised task is critical to producing relevant embeddings.
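The idea that a supervised task shapes the vector space can be shown with a deliberately tiny sketch. Assumptions: eight made-up reviews, 2-dimensional word vectors, a review embedding defined as the average of its word vectors, and a logistic-regression head trained with plain gradient descent. Real embedding models use far larger networks and datasets; this only illustrates the mechanism.

```python
import math
import random

# Hypothetical toy dataset: (review text, sentiment label: 1 = positive, 0 = negative).
REVIEWS = [
    ("great movie", 1), ("excellent film", 1), ("great film", 1), ("excellent movie", 1),
    ("awful movie", 0), ("terrible film", 0), ("awful film", 0), ("terrible movie", 0),
]

def train_sentiment_embeddings(data, dim=2, epochs=2000, lr=0.1, seed=0):
    """Learn word vectors as a by-product of a supervised sentiment-prediction task."""
    rng = random.Random(seed)
    vocab = sorted({w for text, _ in data for w in text.split()})
    emb = {w: [rng.gauss(0.0, 0.1) for _ in range(dim)] for w in vocab}
    weights = [rng.gauss(0.0, 0.1) for _ in range(dim)]  # logistic-regression head
    bias = 0.0
    for _ in range(epochs):
        for text, label in data:
            words = text.split()
            # Review embedding = average of its word vectors.
            vec = [sum(emb[w][i] for w in words) / len(words) for i in range(dim)]
            z = sum(wi * vi for wi, vi in zip(weights, vec)) + bias
            err = 1.0 / (1.0 + math.exp(-z)) - label  # gradient of log loss w.r.t. z
            old_weights = weights[:]
            # Gradient-descent step on the classifier head...
            weights = [wi - lr * err * vi for wi, vi in zip(weights, vec)]
            bias -= lr * err
            # ...and on every word vector that contributed to this review.
            for w in words:
                emb[w] = [e - lr * err * wi / len(words) for e, wi in zip(emb[w], old_weights)]
    return emb

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

emb = train_sentiment_embeddings(REVIEWS)
print(cosine(emb["great"], emb["excellent"]))  # same sentiment
print(cosine(emb["great"], emb["awful"]))      # opposite sentiment
```

Because the sentiment label is the only training signal, the two positive words should end up pointing in roughly the same direction and the negative words in the opposite one, while neutral words like “movie” and “film” receive no consistent push.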

The diagram above shows only word embeddings, but we often need more than that, since human language is more complex than many words put together. Semantics, word order, and other linguistic parameters should all be taken into account, which means we need to take it to the next level: sentence embedding models.

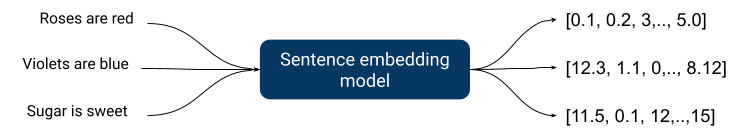

Sentence embeddings associate an input sentence with a vector of numbers and, as expected, are much more complex internally, since they have to capture more complex relationships.
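One reason sentence embeddings need more machinery is that the simplest way to build one, averaging word vectors, throws away word order. The sketch below uses hypothetical hand-written 3-dimensional word vectors and shows that this mean pooling cannot tell “dog bites man” from “man bites dog”.

```python
# Hypothetical 3-dimensional word vectors (values chosen only for illustration).
WORD_VECTORS = {
    "dog":   [1.0, 0.0, 0.5],
    "bites": [0.0, 1.0, 0.25],
    "man":   [0.5, 0.0, 1.0],
}

def mean_pool(sentence):
    """Naive sentence embedding: the element-wise average of its word vectors."""
    vectors = [WORD_VECTORS[word] for word in sentence.split()]
    return [sum(column) / len(vectors) for column in zip(*vectors)]

print(mean_pool("dog bites man") == mean_pool("man bites dog"))  # True: word order is lost
```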

Thanks to progress in deep learning, all state-of-the-art embedding models are built with deep neural nets, since these better capture the complex relationships inherent to human language.

A good embedding model should:

- Be fast, since it is often just a preprocessing step in a larger application

- Return vectors of manageable dimensions

- Return vectors that capture enough information about similarity to be practical

Let’s now take a quick look at how most embedding models are organized internally.

Modern Neural Network Architectures

As we just mentioned, all well-performing state-of-the-art embedding models are deep neural networks.

This is an actively developing field, and most top-performing models are associated with some novel architectural improvement. Let’s briefly cover two very important architectures: BERT and GPT.

BERT (Bidirectional Encoder Representations from Transformers) was published in 2018 by researchers at Google and described the application of bidirectional training of the transformer, a popular attention model, to language modeling. Standard transformers include two separate mechanisms: an encoder that reads the text input and a decoder that makes a prediction.

BERT uses an encoder that reads the entire sequence of words at once, which allows the model to learn the context of a word based on all of its surroundings, both left and right, unlike legacy approaches that read a text sequence from left to right or from right to left. During pre-training, some words are replaced with [MASK] tokens before the sequence is fed into BERT, and the model then attempts to predict the original values of the masked words based on the context provided by the other, non-masked words in the sequence.
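The masking step can be sketched in a few lines. This is a simplified version under stated assumptions: tokens are whitespace-split words, each one is masked independently with probability `mask_prob` (15% is the rate reported in the BERT paper), and the paper’s refinement of sometimes substituting a random token or keeping the original instead of `[MASK]` is omitted. `mask_tokens` is a hypothetical helper, not a library function.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Simplified BERT-style masking.

    Returns the masked sequence and a dict {position: original token}
    that serves as the prediction target during pre-training.
    """
    rng = random.Random(seed)
    masked, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[position] = token
        else:
            masked.append(token)
    return masked, targets

tokens = "the cat sat on the mat".split()
masked, targets = mask_tokens(tokens, seed=42)
print(masked)   # the input with some (possibly zero) positions replaced by [MASK]
print(targets)  # the original words the model must reconstruct at those positions
```

Because the encoder sees the whole sequence, the words on both sides of each `[MASK]` are available as context for the prediction.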

Standard BERT doesn’t perform very well on most benchmarks, and BERT models require task-specific fine-tuning. But BERT is open source, has been around since 2018, and has relatively modest system requirements (it can be fine-tuned on a single mid-range GPU). As a result, it became very popular for many text-related tasks: it is fast, customizable, and small. For example, the very popular all-MiniLM model is a modified version of BERT.

GPT (Generative Pre-trained Transformer) by OpenAI is different. Unlike BERT, it is unidirectional, i.e. text is processed in one direction, and it uses the decoder from the transformer architecture, which is suited to predicting the next word in a sequence. These models are slower and produce very high-dimensional embeddings; on the other hand, they usually have many more parameters, don’t require fine-tuning, and are applicable to more tasks out of the box. OpenAI’s GPT models aren’t open source and are available as a paid API.
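The bidirectional/unidirectional distinction comes down to which positions each token is allowed to attend to. A minimal sketch of the two attention masks, using a plain list-of-lists representation chosen for illustration:

```python
def attention_mask(n, causal):
    """mask[i][j] == 1 if the token at position i may attend to position j."""
    return [[1 if (not causal or j <= i) else 0 for j in range(n)] for i in range(n)]

for row in attention_mask(4, causal=False):  # BERT-style encoder: sees left and right
    print(row)
for row in attention_mask(4, causal=True):   # GPT-style decoder: sees only the left
    print(row)
```

In the bidirectional mask every row is all ones; in the causal mask row i has ones only up to column i, so a token never sees the words that follow it.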

Context Length and Training Data

Another important parameter of an embedding model is context length. Context length is the number of tokens a model can take into account when working with a text. A longer context window allows the model to understand more complex relationships within a wider body of text. As a result, models can produce higher-quality outputs, e.g. capture semantic similarity better.

To leverage a longer context, the training data should include longer pieces of coherent text: books, articles, and so on. However, increasing the context window length increases the complexity of a model and increases the compute and memory requirements for training.

There are methods that help manage resource requirements, e.g. approximate attention, but they do so at a cost to quality. That’s another trade-off between quality and cost: larger context lengths capture more complex relationships in human language, but require more resources.
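A rough way to see why longer contexts are expensive: standard self-attention scores every token against every other token, so the work per head and layer grows with the square of the context length. The sketch below counts those scores and compares them with a sliding-window scheme, which is one example of cheaper, approximate attention chosen here for illustration (the text above does not name a specific method).

```python
def attention_scores(context_length, window=None):
    """Upper bound on query-key scores one attention head computes per layer.

    Full attention compares every token with every token: n * n.
    A sliding window of size `window` compares each token with at most
    `window` positions, so the count grows only linearly with n.
    """
    n = context_length
    if window is None:
        return n * n
    return n * min(window, n)

for n in (512, 2048, 8192):
    print(n, attention_scores(n), attention_scores(n, window=512))
```

Quadrupling the context from 2,048 to 8,192 tokens multiplies full-attention scores by 16, while the windowed variant grows only by 4; the price is that tokens farther apart than the window no longer interact directly within a layer.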

Also, as always, the quality of training data is very important for all models. Embedding models are no exception.

Semantic Search and Information Retrieval

Using embedding models for semantic search is a relatively new approach. For decades, people used other technologies: boolean models, latent semantic indexing (LSI), and various probabilistic models.

Some of these approaches work reasonably well for many existing use cases and are still widely used in the industry.

One of the most popular traditional probabilistic models is BM25 (BM stands for “best matching”), a search relevance ranking function. It estimates the relevance of a document to a search query and ranks documents based on how the query terms appear in each indexed document. Only recently have embedding models started to consistently outperform it, but BM25 is still widely used because it is simpler than embedding models, has lower compute requirements, and produces explainable results.
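For reference, here is a compact pure-Python sketch of Okapi BM25 scoring. It assumes whitespace tokenization and lowercasing, uses the common defaults k1 = 1.5 and b = 0.75, and uses an IDF variant with 1 added inside the logarithm; production search engines differ in tokenization and in these details.

```python
import math
from collections import Counter

def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Score each document against the query with Okapi BM25.

    k1 controls term-frequency saturation and b controls document-length
    normalization; 1.5 and 0.75 are common defaults, not universal constants.
    """
    docs = [doc.lower().split() for doc in documents]
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Rarer terms get a higher inverse document frequency; adding 1
            # inside the log keeps the IDF positive.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            freq = tf[term]
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(d) / avg_len))
        scores.append(score)
    return scores

docs = [
    "embedding models map text to vectors",
    "bm25 ranks documents by query term matches",
    "vector databases store embeddings",
]
print(bm25_scores("bm25 query", docs))  # only the second document gets a non-zero score
```

Every part of the score is a count or a ratio that can be inspected, which is what makes BM25 results easy to explain.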

Benchmarks

Not every model type has a comprehensive evaluation approach that helps with choosing among existing models.

Fortunately, text embedding models have widely used benchmark suites, such as BEIR and MTEB.

The article “BEIR: A Heterogeneous Benchmark for Zero-shot Evaluation of Information Retrieval Models” proposed a reference set of benchmarks and datasets for information retrieval tasks. The original BEIR benchmark consists of 19 datasets together with methods for evaluating search quality, covering tasks such as question answering, fact-checking, and entity retrieval. Now anyone who releases a text embedding model for information retrieval can run the benchmark and see how their model ranks against the competition.
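BEIR reports nDCG@10 (normalized discounted cumulative gain over the top 10 results) as its main metric. A minimal sketch of that metric for a single query, using the linear-gain formulation and hypothetical relevance judgments; a real evaluation averages it over all queries in a dataset.

```python
import math

def ndcg_at_k(ranked_doc_ids, relevance, k=10):
    """nDCG@k for a single query.

    ranked_doc_ids: document ids in the order the system returned them.
    relevance: dict {doc_id: graded relevance}; missing ids count as 0.
    """
    def dcg(gains):
        # The result at 0-based position i is discounted by log2(i + 2).
        return sum(g / math.log2(i + 2) for i, g in enumerate(gains))

    gains = [relevance.get(doc_id, 0) for doc_id in ranked_doc_ids[:k]]
    ideal = sorted(relevance.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0

qrels = {"d1": 1, "d3": 1}                   # hypothetical relevance judgments
print(ndcg_at_k(["d1", "d3", "d2"], qrels))  # 1.0: relevant documents ranked first
print(ndcg_at_k(["d2", "d1", "d3"], qrels))  # below 1.0: relevant documents ranked lower
```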

The Massive Text Embedding Benchmark (MTEB) includes BEIR and other components, covering 58 datasets and 112 languages. The public leaderboard for MTEB results is hosted on Hugging Face.

These benchmarks have been run on many existing models, and their leaderboards are very useful for making an informed choice when selecting a model.

Using Embedding Models in a Production Environment

Benchmark scores on standard tasks are very important, but they represent only one dimension.

When we use an embedding model for search, we run it twice:

- When doing offline indexing of the available data

- When embedding a user query for a search request
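The two runs can be sketched as follows. `toy_embed` is a hypothetical stand-in for a real embedding model (a bag-of-words vector over a small fixed vocabulary, normalized to unit length), and the “index” is just a Python list; a real system would call an actual model and store the vectors in a vector database.

```python
import math

# Hypothetical stand-in for a real embedding model: a bag-of-words vector over a
# fixed vocabulary, L2-normalized so that a dot product equals cosine similarity.
VOCAB = ["api", "enterprise", "key", "password", "plans", "pricing", "reset"]

def toy_embed(text):
    words = text.lower().split()
    vec = [float(words.count(term)) for term in VOCAB]
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]

# 1) Offline: embed every document once and keep the vectors (the "index").
documents = ["how to reset a password", "pricing for enterprise plans", "reset your api key"]
index = [(doc, toy_embed(doc)) for doc in documents]

# 2) Online: embed each incoming query with the SAME model, then rank by similarity.
def search(query, index, top_k=2):
    q = toy_embed(query)
    scored = [(sum(a * b for a, b in zip(q, vec)), doc) for doc, vec in index]
    return [doc for _, doc in sorted(scored, reverse=True)[:top_k]]

print(search("password reset", index))  # ['how to reset a password', 'reset your api key']
```

The important constraint is that documents and queries are embedded by the same model; otherwise their vectors are not comparable.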

This has two important consequences.

The first is that we have to reindex all existing data whenever we change or upgrade the embedding model. All systems built on embedding models should be designed with upgradability in mind, because newer and better models are released all the time and, most of the time, upgrading the model is the easiest way to improve overall system performance. In this sense, the embedding model is one of the less stable components of the system infrastructure.
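One way to build in upgradability is to record which model produced the stored vectors and refuse to mix models. The class below is a hypothetical sketch (names such as `VersionedIndex`, `model-v1` and `model-v2` are made up), and the lambda embedding functions are stand-ins for real models.

```python
class VersionedIndex:
    """Toy vector store that remembers which embedding model produced its vectors."""

    def __init__(self, model_name, embed_fn):
        self.model_name = model_name
        self.embed_fn = embed_fn
        self.vectors = {}

    def build(self, documents):
        """(Re-)embed every document with the current model."""
        self.vectors = {doc_id: self.embed_fn(text) for doc_id, text in documents.items()}

    def check_query_model(self, query_model_name):
        # Vectors from different models live in different spaces and must not be mixed.
        if query_model_name != self.model_name:
            raise ValueError(
                f"index was built with {self.model_name!r}, not {query_model_name!r}; re-index first"
            )

    def upgrade(self, new_model_name, new_embed_fn, documents):
        """Switch models and rebuild all vectors from the original source documents."""
        self.model_name, self.embed_fn = new_model_name, new_embed_fn
        self.build(documents)

docs = {"a": "hello", "b": "embedding models"}
index = VersionedIndex("model-v1", lambda text: [float(len(text))])
index.build(docs)
index.check_query_model("model-v1")  # passes
index.upgrade("model-v2", lambda text: [float(len(text)), 1.0], docs)
index.check_query_model("model-v2")  # passes only because everything was re-embedded
```

Keeping the original source documents around is what makes the full re-embed in `upgrade` possible.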

The second consequence of using an embedding model for user queries is that inference latency becomes very important as the number of users grows. Model inference takes more time for better-performing models, especially if they require a GPU to run: latency above 100 ms for a small query is not unusual for models with more than 1B parameters. It turns out that smaller, leaner models are still very important in higher-load production scenarios.
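Latency is easy to measure before committing to a model. This sketch times an embedding function per query and reports the median and a nearest-rank 95th percentile. `fake_embed`, which just sleeps for about 2 ms, is a hypothetical placeholder for a real model call, and the 100 ms check simply reuses the figure mentioned above as an example budget.

```python
import math
import statistics
import time

def measure_latency_ms(embed_fn, queries, warmup=3):
    """Call embed_fn once per query and return median and p95 latency in ms."""
    for query in queries[:warmup]:  # untimed warm-up calls (lazy loading, caches)
        embed_fn(query)
    timings = []
    for query in queries:
        start = time.perf_counter()
        embed_fn(query)
        timings.append((time.perf_counter() - start) * 1000.0)
    ordered = sorted(timings)
    p95 = ordered[max(0, math.ceil(0.95 * len(ordered)) - 1)]  # nearest-rank percentile
    return {"p50": statistics.median(ordered), "p95": p95}

def fake_embed(text):
    """Hypothetical model stand-in that takes about 2 ms per call."""
    time.sleep(0.002)
    return [0.0] * 8

stats = measure_latency_ms(fake_embed, ["example query"] * 50)
print(stats["p95"] <= 100)  # is the tail latency within a 100 ms budget?
```

Tail percentiles such as p95 matter more than averages here, since they describe what the slowest users experience under load.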

The trade-off between quality and latency is real, and we should always keep it in mind when choosing an embedding model.

As mentioned above, embedding models help manage output vector dimensionality, which affects the performance of many downstream algorithms. Generally, the smaller the model, the shorter the output vector, but even for smaller models the vectors are often still too long. That’s when we need dimensionality reduction algorithms such as PCA (principal component analysis), SNE / t-SNE (stochastic neighbor embedding / t-distributed stochastic neighbor embedding), and UMAP (uniform manifold approximation and projection).
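Of the methods listed, PCA is the simplest to sketch. The toy implementation below (pure Python, power iteration with deflation on the covariance matrix) keeps the top-k directions of variance; it is meant to show the idea, and in practice an optimized linear-algebra library would be used. t-SNE and UMAP are iterative nonlinear methods and do not fit in a short example.

```python
import random

def pca(vectors, k, iterations=200, seed=0):
    """Project vectors onto their top-k principal components (toy implementation).

    Returns (reduced vectors, components, mean). Components are found one at a
    time by power iteration on the covariance matrix, deflating after each one.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        vec = [rng.random() + 0.1 for _ in range(d)]
        norm = sum(x * x for x in vec) ** 0.5
        vec = [x / norm for x in vec]
        for _ in range(iterations):
            nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
            norm = sum(x * x for x in nxt) ** 0.5
            if norm == 0.0:  # no variance left in the remaining directions
                break
            vec = [x / norm for x in nxt]
        eigenvalue = sum(vec[i] * cov[i][j] * vec[j] for i in range(d) for j in range(d))
        components.append(vec)
        # Deflate: remove the found direction so the next pass finds the next one.
        cov = [[cov[i][j] - eigenvalue * vec[i] * vec[j] for j in range(d)] for i in range(d)]
    reduced = [[sum(r[j] * c[j] for j in range(d)) for c in components] for r in centered]
    return reduced, components, mean

# Toy data: large spread along x, small spread along z, none along y.
data = [[3.0 * a, 0.0, float(b)] for a in (-1, 0, 1) for b in (-1, 0, 1)]
reduced, components, mean = pca(data, k=2)
print(len(data[0]), "->", len(reduced[0]))  # 3 -> 2
```

At search time, the same `mean` and `components` must be applied to query vectors so that queries land in the same reduced space as the indexed documents.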

Another place to use dimensionality reduction is before storing embeddings in a database. The resulting vector embeddings will occupy less space and retrieval will be faster, but at a price in downstream quality. Vector databases are often not the primary storage, so embeddings can always be regenerated with better precision from the original source data. Dimensionality reduction helps shorten the output vectors and, as a result, makes the system faster and leaner.
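The space savings are simple arithmetic. The sketch assumes float32 storage (4 bytes per value), ignores index overhead, and uses hypothetical sizes of one million vectors reduced from 768 to 256 dimensions.

```python
def index_size_bytes(num_vectors, dim, bytes_per_value=4):
    """Raw storage for dense float vectors (float32 = 4 bytes), ignoring index overhead."""
    return num_vectors * dim * bytes_per_value

full = index_size_bytes(1_000_000, 768)     # 3,072,000,000 bytes, about 3.1 GB
reduced = index_size_bytes(1_000_000, 256)  # 1,024,000,000 bytes, about 1.0 GB
print(full / reduced)                       # 3.0
```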

Making the Right Choice

There is an abundance of factors and trade-offs to consider when choosing an embedding model for a use case. A candidate model’s score on common benchmarks is important, but we should not forget that it is usually the larger models that score best. Larger models have higher inference times, which can severely limit their use in low-latency scenarios, since an embedding model is often a preprocessing step in a larger pipeline. Also, larger models require GPUs to run.

If you plan to use a model in a low-latency scenario, it’s better to focus on latency first and then see which of the models with acceptable latency perform best. Also, when building a system around an embedding model, you should plan for change, since better models are released all the time and upgrading is often the simplest way to improve your system’s performance.
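The latency-first approach amounts to a filter followed by a max. A minimal sketch; the candidate names, latencies, and scores are placeholders, not real measurements or leaderboard results.

```python
# Placeholder candidates: names, latencies and scores are made up for illustration.
# Replace them with your own latency measurements and benchmark scores.
CANDIDATES = [
    {"name": "model-small", "p95_latency_ms": 15, "benchmark_score": 0.56},
    {"name": "model-medium", "p95_latency_ms": 45, "benchmark_score": 0.61},
    {"name": "model-large", "p95_latency_ms": 180, "benchmark_score": 0.66},
]

def pick_model(candidates, latency_budget_ms):
    """Latency first: drop models over budget, then take the best-scoring survivor."""
    acceptable = [c for c in candidates if c["p95_latency_ms"] <= latency_budget_ms]
    if not acceptable:
        return None  # nothing meets the budget; revisit the budget or the hardware
    return max(acceptable, key=lambda c: c["benchmark_score"])["name"]

print(pick_model(CANDIDATES, latency_budget_ms=100))  # model-medium
print(pick_model(CANDIDATES, latency_budget_ms=500))  # model-large
```

Because the benchmark score is only consulted after the latency filter, a higher-scoring model never wins if it cannot meet the budget.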

About the author

Nick Volynets is a senior data engineer working with the office of the CTO, where he enjoys being at the heart of DataRobot innovation. He is interested in large-scale machine learning and passionate about AI and its impact.