
The past few decades have witnessed the rapid development of Optical Character Recognition (OCR) technology, which has evolved from an academic benchmark task used in early breakthroughs of deep learning research to tangible products available in consumer devices and to third-party developers for daily use. These OCR products digitize and democratize the valuable information that is stored in paper or image-based sources (e.g., books, magazines, newspapers, forms, street signs, restaurant menus) so that it can be indexed, searched, translated, and further processed by state-of-the-art natural language processing techniques.

Research in scene text detection and recognition (or scene text spotting) has been the major driver of this rapid development by adapting OCR to natural images, which have more complex backgrounds than document images. These research efforts, however, focus on the detection and recognition of each individual word in an image, without understanding how those words compose sentences and articles.

Layout analysis is another relevant line of research that takes a document image and extracts its structure, i.e., title, paragraphs, headings, figures, tables and captions. These layout analysis efforts are parallel to OCR and have been largely developed as independent techniques that are typically evaluated only on document images. As such, the synergy between OCR and layout analysis remains largely under-explored. We believe that OCR and layout analysis are mutually complementary tasks that enable machine learning to interpret text in images and, when combined, could improve the accuracy and efficiency of both tasks.

With this in mind, we announce the Competition on Hierarchical Text Detection and Recognition (the HierText Challenge), hosted as part of the 17th annual International Conference on Document Analysis and Recognition (ICDAR 2023). The competition is hosted on the Robust Reading Competition website, and represents the first major effort to unify OCR and layout analysis. In this competition, we invite researchers from around the world to build systems that can produce hierarchical annotations of text in images, using words clustered into lines and paragraphs. We hope this competition will have a significant and long-term impact on image-based text understanding, with the goal of consolidating research efforts across OCR and layout analysis and creating new signals for downstream information processing tasks.

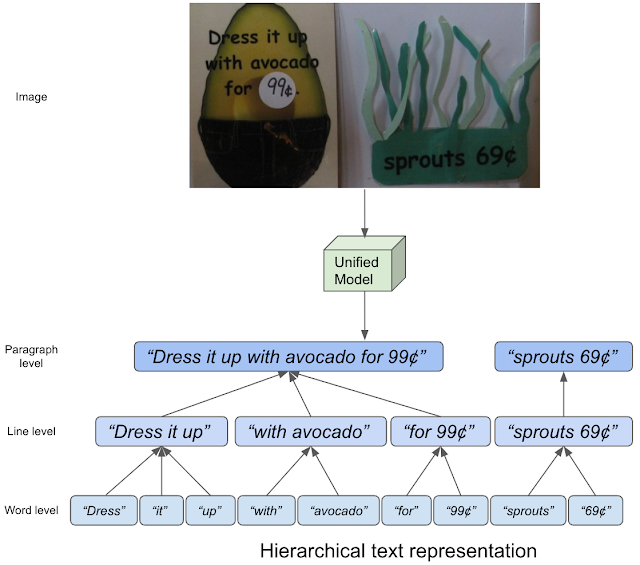

The concept of hierarchical text representation.

Constructing a hierarchical text dataset

In this competition, we use the HierText dataset that we published at CVPR 2022 with our paper “Towards End-to-End Unified Scene Text Detection and Layout Analysis”. It is the first real-image dataset that provides hierarchical annotations of text, containing word-, line-, and paragraph-level annotations. Here, “words” are defined as sequences of textual characters not interrupted by spaces. “Lines” are then interpreted as space-separated clusters of “words” that are logically connected in one direction and aligned in spatial proximity. Finally, “paragraphs” are composed of “lines” that share the same semantic topic and are geometrically coherent.
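To make the three-level hierarchy concrete, here is a minimal Python sketch of how word, line, and paragraph annotations nest. The class names (`Word`, `Line`, `Paragraph`), the `polygon` field, and the helper `count_words` are hypothetical illustrations, not the official HierText annotation schema; the sketch only encodes the definitions above: a word contains no spaces, a line joins its words with spaces, and a paragraph groups lines.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical types for illustration; NOT the official HierText schema.
Point = Tuple[float, float]


@dataclass
class Word:
    text: str
    polygon: List[Point]  # hypothetical boundary of the word

    def __post_init__(self) -> None:
        # By definition, a word is a character sequence not interrupted by spaces.
        if " " in self.text:
            raise ValueError(f"word contains a space: {self.text!r}")


@dataclass
class Line:
    words: List[Word] = field(default_factory=list)

    def text(self) -> str:
        # Words in a line are space-separated and read in a single direction.
        return " ".join(w.text for w in self.words)


@dataclass
class Paragraph:
    lines: List[Line] = field(default_factory=list)

    def text(self) -> str:
        # One output line per annotated line.
        return "\n".join(line.text() for line in self.lines)


def count_words(paragraphs: List[Paragraph]) -> int:
    """Count every word nested under the given paragraphs."""
    return sum(len(line.words) for p in paragraphs for line in p.lines)


# Usage with a made-up restaurant menu snippet.
box = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
menu = Paragraph(lines=[
    Line(words=[Word("Daily", box), Word("Specials", box)]),
    Line(words=[Word("Soup", box), Word("$4.50", box)]),
])
print(menu.text())          # "Daily Specials" and "Soup $4.50" on two lines
print(count_words([menu]))  # 4
```

Because each level only stores its children, counting words or reconstructing text is a simple walk down the tree.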

To build this dataset, we first annotated images from the Open Images dataset using the Google Cloud Platform (GCP) Text Detection API. We filtered these annotated images, keeping only images rich in text content and layout structure. Then, we worked with our third-party partners to manually correct all transcriptions and to label words, lines and paragraph composition. As a result, we obtained 11,639 transcribed images, split into three subsets: (1) a train set with 8,281 images, (2) a validation set with 1,724 images, and (3) a test set with 1,634 images. As detailed in the paper, we also checked the overlap between our dataset, TextOCR, and Intel OCR (both of which also drew annotated images from Open Images), making sure that the test images in the HierText dataset were not also included in the TextOCR or Intel OCR training and validation splits, and vice versa. Below, we visualize examples from the HierText dataset and demonstrate the concept of hierarchical text by shading each text entity with a different color. We can see that HierText has a diversity of image domains and text layouts, as well as high text density.

Samples from the HierText dataset. Left: Illustration of each word entity. Middle: Illustration of line clustering. Right: Illustration of paragraph clustering.
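The split sizes and the train/test overlap check described above are easy to express in code. The sketch below is a minimal illustration, assuming each dataset is given as a mapping from split name to a collection of Open Images image IDs; the function name `find_leaks`, the toy IDs, and this data layout are hypothetical and do not reflect the tooling actually used to build HierText.

```python
from typing import Dict, Iterable, List, Tuple

# Reported HierText split sizes; they add up to the 11,639 transcribed images.
HIERTEXT_SPLITS = {"train": 8_281, "validation": 1_724, "test": 1_634}
assert sum(HIERTEXT_SPLITS.values()) == 11_639

Splits = Dict[str, Iterable[str]]  # split name -> image IDs (hypothetical layout)


def find_leaks(hiertext: Splits, other: Splits) -> Dict[Tuple[str, str], List[str]]:
    """Return image IDs shared between one dataset's test split and the other
    dataset's train/validation splits, checked in both directions."""
    datasets = {"hiertext": hiertext, "other": other}
    pairs = [
        # HierText test images must not appear in the other dataset's train/val...
        (("hiertext", "test"), ("other", "train")),
        (("hiertext", "test"), ("other", "validation")),
        # ...and vice versa.
        (("other", "test"), ("hiertext", "train")),
        (("other", "test"), ("hiertext", "validation")),
    ]
    leaks: Dict[Tuple[str, str], List[str]] = {}
    for (ds_a, split_a), (ds_b, split_b) in pairs:
        # A missing split (e.g. a dataset with no public test set) counts as empty.
        ids_a = set(datasets[ds_a].get(split_a, []))
        ids_b = set(datasets[ds_b].get(split_b, []))
        common = sorted(ids_a & ids_b)
        if common:
            leaks[(f"{ds_a}/{split_a}", f"{ds_b}/{split_b}")] = common
    return leaks


# Usage with toy IDs; "other" has no test split, like Intel OCR in the table.
hier = {"train": ["a", "b"], "validation": ["c"], "test": ["d"]}
other = {"train": ["d", "x"], "validation": ["y"]}
print(find_leaks(hier, other))  # {('hiertext/test', 'other/train'): ['d']}
```

An empty result means the two datasets can be combined for training and evaluation without test images leaking across them.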

Dataset with the highest density of text

In addition to the novel hierarchical representation, HierText represents a new domain of text images. We note that HierText is currently the densest publicly available OCR dataset. Below we summarize the characteristics of HierText in comparison with other OCR datasets. HierText contains 103.8 words per image on average, which is more than 3x the density of TextOCR and roughly 24x that of ICDAR-2015. This high density poses unique challenges for detection and recognition, and as a consequence HierText is used as one of the primary datasets for OCR research at Google.

| Dataset | Training images | Validation images | Test images | Words per image |
|---|---|---|---|---|
| ICDAR-2015 | 1,000 | 0 | 500 | 4.4 |
| TextOCR | 21,778 | 3,124 | 3,232 | 32.1 |
| Intel OCR | 191,059 | 16,731 | 0 | 10.0 |
| HierText | 8,281 | 1,724 | 1,634 | 103.8 |

Comparing several OCR datasets to the HierText dataset.
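The density comparison can be checked directly against the words-per-image column of the table. This small sketch divides HierText's average by each other dataset's average; the values are copied from the table, and the helper name `density_ratios` is illustrative.

```python
from typing import Dict

# Average words per image, copied from the comparison table.
WORDS_PER_IMAGE = {
    "ICDAR-2015": 4.4,
    "TextOCR": 32.1,
    "Intel OCR": 10.0,
    "HierText": 103.8,
}


def density_ratios(table: Dict[str, float], reference: str = "HierText") -> Dict[str, float]:
    """How many times denser the reference dataset is than each other dataset."""
    ref = table[reference]
    return {name: ref / value for name, value in table.items() if name != reference}


for name, ratio in density_ratios(WORDS_PER_IMAGE).items():
    print(f"HierText vs. {name}: {ratio:.1f}x")
# HierText vs. ICDAR-2015: 23.6x
# HierText vs. TextOCR: 3.2x
# HierText vs. Intel OCR: 10.4x
```

The TextOCR ratio of about 3.2x is consistent with the "more than 3x" figure quoted for that dataset.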

Spatial distribution

We also find that text in the HierText dataset has a much more even spatial distribution than in other OCR datasets, including TextOCR, Intel OCR, IC19 MLT, COCO-Text and IC19 LSVT. These previous datasets tend to have well-composed images, where text is placed in the middle of the image and is thus easier to identify. In contrast, text entities in HierText are broadly distributed across the images, which indicates that our images come from more diverse domains. This characteristic makes HierText uniquely challenging among public OCR datasets.

Spatial distribution of text instances in different datasets.
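One simple way to quantify how evenly text is spread over images is to bin the normalized centers of text boxes into a coarse grid and measure the entropy of the resulting histogram. This is an illustrative sketch only, not necessarily how the spatial-distribution figure was produced; the axis-aligned box format, the grid size, and the function names are assumptions. A normalized entropy near 1.0 means text is spread uniformly over the grid, while 0.0 means every text center falls in a single cell.

```python
import math
from typing import Iterable, List, Sequence, Tuple

# Assumed box format: axis-aligned (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]
Image = Tuple[Sequence[Box], float, float]  # (boxes, width, height)


def center_histogram(images: Iterable[Image], grid: int = 4) -> List[List[int]]:
    """Count text-box centers per cell of a grid x grid layout, using
    coordinates normalized by each image's width and height."""
    counts = [[0] * grid for _ in range(grid)]
    for boxes, width, height in images:
        for x0, y0, x1, y1 in boxes:
            cx = (x0 + x1) / 2 / width
            cy = (y0 + y1) / 2 / height
            # Clamp so centers on the right or bottom edge land in the last cell.
            col = min(max(int(cx * grid), 0), grid - 1)
            row = min(max(int(cy * grid), 0), grid - 1)
            counts[row][col] += 1
    return counts


def normalized_entropy(counts: List[List[int]]) -> float:
    """Shannon entropy of the histogram divided by its maximum, log(#cells)."""
    flat = [c for row in counts for c in row]
    if len(flat) < 2:
        raise ValueError("need at least two grid cells")
    total = sum(flat)
    if total == 0:
        return 0.0
    h = sum((c / total) * math.log(total / c) for c in flat if c > 0)
    return h / math.log(len(flat))


# Usage: four boxes stacked in the center vs. one box per quadrant.
centered = [(45, 45, 55, 55)] * 4
spread = [(10, 10, 20, 20), (80, 10, 90, 20), (10, 80, 20, 90), (80, 80, 90, 90)]
print(round(normalized_entropy(center_histogram([(centered, 100, 100)], grid=2)), 3))  # 0.0
print(round(normalized_entropy(center_histogram([(spread, 100, 100)], grid=2)), 3))    # 1.0
```

Accumulating boxes over many images before computing the entropy gives a dataset-level evenness score; a finer grid captures more detail but needs more text instances per cell to be stable.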

The HierText challenge

The HierText Challenge represents a novel task with unique challenges for OCR models. We invite researchers to participate in this challenge and join us at ICDAR 2023 this year in San Jose, CA. We hope this competition will spark the research community's interest in OCR models with rich information representations that are useful for novel downstream tasks.

Acknowledgements

The core contributors to this project are Shangbang Long, Siyang Qin, Dmitry Panteleev, Alessandro Bissacco, Yasuhisa Fujii and Michalis Raptis. Ashok Popat and Jake Walker provided valuable advice. We also thank Dimosthenis Karatzas and Sergi Robles from the Autonomous University of Barcelona for helping us set up the competition website.