
Convolutional neural networks have been the dominant machine learning architecture for computer vision since the introduction of AlexNet in 2012. Recently, inspired by the evolution of Transformers in natural language processing, attention mechanisms have been prominently incorporated into vision models. These attention methods boost some parts of the input data while minimizing other parts so that the network can focus on small but important parts of the data. The Vision Transformer (ViT) has created a new landscape of model designs for computer vision that is completely free of convolution. ViT regards image patches as a sequence of words and applies a Transformer encoder on top. When trained on sufficiently large datasets, ViT demonstrates compelling performance on image recognition.

While convolutions and attention are both sufficient for good performance, neither is necessary. For example, MLP-Mixer adopts a simple multi-layer perceptron (MLP) to mix image patches across all spatial locations, resulting in an all-MLP architecture. It is a competitive alternative to existing state-of-the-art vision models in terms of the trade-off between accuracy and the computation required for training and inference. However, both ViT and the MLP models struggle to scale to higher input resolutions because their computational complexity increases quadratically with respect to the image size.
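To make the quadratic growth concrete, here is a back-of-the-envelope sketch that counts query-key pairs in full self-attention. The patch size of 16 pixels and the helper name `attention_pairs` are illustrative assumptions, not values taken from this post.

```python
def attention_pairs(image_side: int, patch: int = 16) -> int:
    """Count query-key pairs when every patch attends to every patch."""
    tokens = (image_side // patch) ** 2  # patches in a square image
    return tokens * tokens               # full attention compares all pairs


for side in (224, 448, 896):
    print(side, attention_pairs(side))
```

Under these assumptions, doubling the image side quadruples the number of tokens and multiplies the number of attended pairs by 16.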

Today we present a new multi-axis approach that is simple and effective, improves on the original ViT and MLP models, adapts better to high-resolution, dense prediction tasks, and naturally adapts to different input sizes with high flexibility and low complexity. Based on this approach, we have built two backbone models for high-level and low-level vision tasks. We describe the first in “MaxViT: Multi-Axis Vision Transformer”, to be presented at ECCV 2022, and show that it significantly improves the state of the art for high-level tasks such as image classification, object detection, segmentation, quality assessment, and generation. The second, presented in “MAXIM: Multi-Axis MLP for Image Processing” at CVPR 2022, is based on a UNet-like architecture and achieves competitive performance on low-level imaging tasks including denoising, deblurring, dehazing, deraining, and low-light enhancement. To facilitate further research on efficient Transformer and MLP models, we have open-sourced the code and models for both MaxViT and MAXIM.

Figure: A demo of image deblurring using MAXIM, frame by frame.

Overview

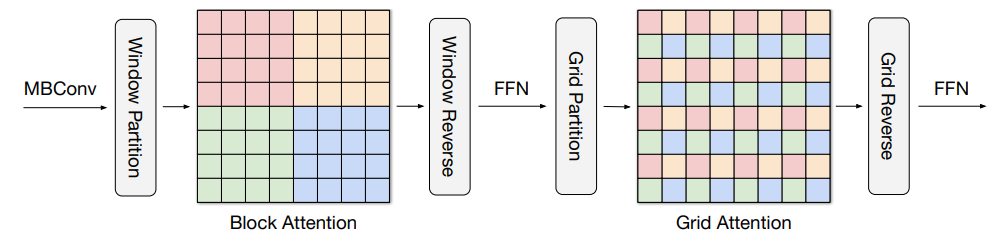

Our new approach is based on multi-axis attention, which decomposes the full-size attention used in ViT (each pixel attends to all other pixels) into two sparse forms: local and (sparse) global. As shown in the figure below, multi-axis attention consists of a sequential stack of block attention and grid attention. Block attention works within non-overlapping windows (small patches in intermediate feature maps) to capture local patterns, while grid attention works on a sparsely sampled uniform grid to capture long-range (global) interactions. The window sizes of the grid and block attention can be fully controlled as hyperparameters, which ensures computational complexity that is linear in the input size.

Figure: The proposed multi-axis attention conducts blocked local attention and dilated global attention sequentially, followed by an FFN, with only linear complexity. Pixels shown in the same color are attended together.
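The two groupings described above can be sketched in a few lines of plain Python. This is a minimal illustration of which pixels attend together, not the released implementation; the function names and the 8x8 toy feature map are assumptions, and the map size is assumed to be divisible by the window size `p` and the grid size `g`.

```python
from collections import defaultdict


def block_groups(h, w, p):
    """Group pixels into non-overlapping p x p windows (local attention)."""
    groups = defaultdict(list)
    for i in range(h):
        for j in range(w):
            groups[(i // p, j // p)].append((i, j))
    return dict(groups)


def grid_groups(h, w, g):
    """Group pixels into g x g sets sampled at a uniform stride (sparse global attention)."""
    stride_h, stride_w = h // g, w // g  # spacing between sampled pixels
    groups = defaultdict(list)
    for i in range(h):
        for j in range(w):
            groups[(i % stride_h, j % stride_w)].append((i, j))
    return dict(groups)


blocks = block_groups(8, 8, p=4)
grids = grid_groups(8, 8, g=4)
print(blocks[(0, 0)][:4])  # pixels that sit next to each other
print(grids[(0, 0)][:4])   # pixels spread across the whole map
```

Both groupings produce sets of fixed size (`p * p` and `g * g` pixels), which is why the attention cost per set does not grow with the image.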

Such low-complexity attention is applicable to a much wider range of vision tasks, especially high-resolution visual prediction, and is more general than the original attention used in ViT. We build two backbone instantiations of this multi-axis attention approach: MaxViT and MAXIM, for high-level and low-level tasks, respectively.
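The linear scaling follows from simple counting: with fixed window size P and grid size G, each pixel attends to P² pixels in its block and G² pixels in its grid, regardless of how large the feature map is. The sketch below checks this numerically; the values P = G = 7 and the 56x56 and 112x112 map sizes are illustrative assumptions, and `multi_axis_pairs` is a hypothetical helper.

```python
def multi_axis_pairs(h, w, p=7, g=7):
    """Count attended pixel pairs for block attention plus grid attention."""
    n = h * w
    block = (n // (p * p)) * (p * p) ** 2  # number of windows x pairs per window
    grid = (n // (g * g)) * (g * g) ** 2   # number of grids x pairs per grid
    return block + grid


small = multi_axis_pairs(56, 56)
large = multi_axis_pairs(112, 112)  # 4x as many pixels
print(small, large, large // small)
```

Under these assumptions, a feature map with four times as many pixels needs four times as many attended pairs.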

MaxViT

In MaxViT, we first build a single MaxViT block (shown below) by concatenating MBConv (as used in EfficientNet and EfficientNetV2) with the multi-axis attention. This single block can encode local and global visual information regardless of input resolution. We then simply stack repeated blocks composed of attention and convolutions in a hierarchical architecture (similar to ResNet and CoAtNet), yielding our homogeneous MaxViT architecture. Notably, MaxViT differs from previous hierarchical approaches in that it can “see” globally throughout the entire network, even in the earlier, high-resolution stages, which gives it stronger model capacity on various tasks.

Figure: The meta-architecture of MaxViT.
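One way to see why a single block can mix information globally is to trace which input pixels can influence one output pixel after block attention followed by grid attention. The sketch below does this on a toy 8x8 map with P = G = 4. It ignores the MBConv step, all names are hypothetical, and the coverage it reports holds for this toy configuration; other sizes depend on how P, G, and the map size relate.

```python
def block_key(i, j, p):
    """Index of the p x p window that contains pixel (i, j)."""
    return (i // p, j // p)


def grid_key(i, j, stride_h, stride_w):
    """Index of the strided grid group that contains pixel (i, j)."""
    return (i % stride_h, j % stride_w)


def receptive_field(h, w, p, g, target):
    """Input pixels that can influence `target` after block, then grid attention."""
    stride_h, stride_w = h // g, w // g
    pixels = [(i, j) for i in range(h) for j in range(w)]
    # Grid attention runs last: the target reads from pixels in its grid group.
    target_group = grid_key(*target, stride_h, stride_w)
    via_grid = [px for px in pixels
                if grid_key(*px, stride_h, stride_w) == target_group]
    # Block attention ran first: each of those pixels already mixed its window.
    windows = {block_key(i, j, p) for i, j in via_grid}
    return {px for px in pixels if block_key(*px, p) in windows}


field = receptive_field(8, 8, p=4, g=4, target=(0, 0))
print(len(field), "of", 8 * 8, "pixels reachable")
```

In this toy setting the grid group of the target touches every window, so all 64 input pixels are reachable after one block-plus-grid pass.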

MAXIM

Our second backbone, MAXIM, is a generic UNet-like architecture tailored for low-level image-to-image prediction tasks. MAXIM explores parallel designs of the local and global approaches using the gated multi-layer perceptron (gMLP) network (a patch-mixing MLP with a gating mechanism). Another contribution of MAXIM is the cross-gating block, which can be used to apply interactions between two different input signals. This block can serve as an efficient alternative to the cross-attention module because it uses only cheap gated MLP operators to interact with various inputs, without relying on computationally heavy cross-attention. Moreover, all of the proposed components in MAXIM, including the gated MLP and cross-gating blocks, have complexity that is linear in the image size, making the model even more efficient when processing high-resolution pictures.
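The gating idea can be sketched on a short token sequence in plain Python. This is a simplified reading under stated assumptions, not the MAXIM implementation: it omits normalization, channel projections, and residual connections, it shares one spatial weight matrix `w` and bias `b` between both directions of the cross-gate, and it mixes over all tokens at once, whereas MAXIM applies the mixing within local blocks and sparse grids to keep the cost linear. All function names are hypothetical.

```python
def spatial_projection(v, w, b):
    """Mix tokens across positions: out[i] = sum_k w[i][k] * v[k] + b[i]."""
    n, d = len(v), len(v[0])
    return [[sum(w[i][k] * v[k][c] for k in range(n)) + b[i] for c in range(d)]
            for i in range(n)]


def gated_mlp(z, w, b):
    """Split channels in half and gate one half with the spatially mixed other half."""
    d = len(z[0]) // 2
    u = [tok[:d] for tok in z]
    v = [tok[d:] for tok in z]
    gate = spatial_projection(v, w, b)
    return [[a * g for a, g in zip(u_tok, g_tok)] for u_tok, g_tok in zip(u, gate)]


def cross_gate(x, y, w, b):
    """Modulate each signal with a gate computed from the other signal."""
    gate_from_x = spatial_projection(x, w, b)
    gate_from_y = spatial_projection(y, w, b)
    x_hat = [[a * g for a, g in zip(xt, gt)] for xt, gt in zip(x, gate_from_y)]
    y_hat = [[a * g for a, g in zip(yt, gt)] for yt, gt in zip(y, gate_from_x)]
    return x_hat, y_hat


# Two tokens with four channels each; zero weights and unit bias make the gate all ones.
z = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
w0 = [[0.0, 0.0], [0.0, 0.0]]
b1 = [1.0, 1.0]
print(gated_mlp(z, w0, b1))
```

With zero weights and a bias of one, the gate passes the first half of the channels through unchanged, which is a convenient sanity check for the gating path.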

Results

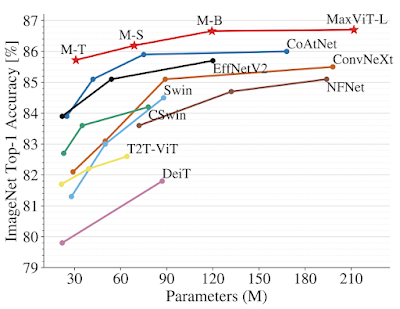

We demonstrate the effectiveness of MaxViT on a broad range of vision tasks. On image classification, MaxViT achieves state-of-the-art results under various settings: with only ImageNet-1K training, MaxViT attains 86.5% top-1 accuracy; with ImageNet-21K (14M images, 21k classes) pre-training, MaxViT achieves 88.7% top-1 accuracy; and with JFT (300M images, 18k classes) pre-training, our largest model, MaxViT-XL, achieves a high accuracy of 89.5% with 475M parameters.

For downstream tasks, MaxViT as a backbone delivers favorable performance across a broad spectrum of tasks. For object detection and segmentation on the COCO dataset, the MaxViT backbone achieves 53.4 AP, outperforming other base-level models while requiring only about 60% of the computational cost. For image aesthetics assessment, the MaxViT model advances the state-of-the-art MUSIQ model by 3.5% in terms of linear correlation with human opinion scores. The standalone MaxViT building block also performs well on image generation, achieving better FID and IS scores on the ImageNet-1K unconditional generation task with a significantly lower number of parameters than the state-of-the-art model, HiT.

The UNet-like MAXIM backbone, customized for image processing tasks, has also demonstrated state-of-the-art results on 15 out of 20 tested datasets, covering denoising, deblurring, deraining, dehazing, and low-light enhancement, while requiring fewer or a comparable number of parameters and FLOPs than competing models. Images restored by MAXIM show more recovered details with fewer visual artifacts.

Figure: Visual results of MAXIM for image deblurring, deraining, and low-light enhancement.

Summary

Recent work over the last two or so years has shown that ConvNets and Vision Transformers can achieve similar performance. Our work presents a unified design that takes advantage of the best of both worlds (efficient convolution and sparse attention) and demonstrates that a model built on top of it, namely MaxViT, can achieve state-of-the-art performance on a variety of vision tasks. More importantly, MaxViT scales well to very large data sizes. We also show that an alternative multi-axis design using MLP operators, MAXIM, achieves state-of-the-art performance on a broad range of low-level vision tasks.

Even though we present our models in the context of vision tasks, the proposed multi-axis approach can easily extend to language modeling to capture both local and global dependencies in linear time. Motivated by the work here, we expect that it is worthwhile to study other forms of sparse attention for higher-dimensional or multimodal signals such as videos, point clouds, and vision-language models.

We have open-sourced the code and models of MAXIM and MaxViT to facilitate future research on efficient attention and MLP models.

Acknowledgments

We would like to thank our co-authors: Hossein Talebi, Han Zhang, Feng Yang, Peyman Milanfar, and Alan Bovik. We would also like to acknowledge the valuable discussion and support from Xianzhi Du, Long Zhao, Wuyang Chen, Hanxiao Liu, Zihang Dai, Anurag Arnab, Sungjoon Choi, Junjie Ke, Mauricio Delbracio, Irene Zhu, Innfarn Yoo, Huiwen Chang, and Ce Liu.