[ad_1]

Most AI groups concentrate on the mistaken issues. Here’s a standard scene from my consulting work:

AI TEAM

Here’s our agent structure—we’ve bought RAG right here, a router there, and we’re utilizing this new framework for…ME

[Holding up my hand to pause the enthusiastic tech lead]

Can you present me the way you’re measuring if any of this truly works?… Room goes quiet

This scene has performed out dozens of occasions during the last two years. Teams make investments weeks constructing complicated AI programs however can’t inform me if their adjustments are serving to or hurting.

This isn’t shocking. With new instruments and frameworks rising weekly, it’s pure to concentrate on tangible issues we will management—which vector database to make use of, which LLM supplier to decide on, which agent framework to undertake. But after serving to 30+ firms construct AI merchandise, I’ve found that the groups who succeed barely discuss instruments in any respect. Instead, they obsess over measurement and iteration.

In this put up, I’ll present you precisely how these profitable groups function. While each scenario is exclusive, you’ll see patterns that apply no matter your area or staff measurement. Let’s begin by analyzing the commonest mistake I see groups make—one which derails AI tasks earlier than they even start.

The Most Common Mistake: Skipping Error Analysis

The “tools first” mindset is the commonest mistake in AI growth. Teams get caught up in structure diagrams, frameworks, and dashboards whereas neglecting the method of really understanding what’s working and what isn’t.

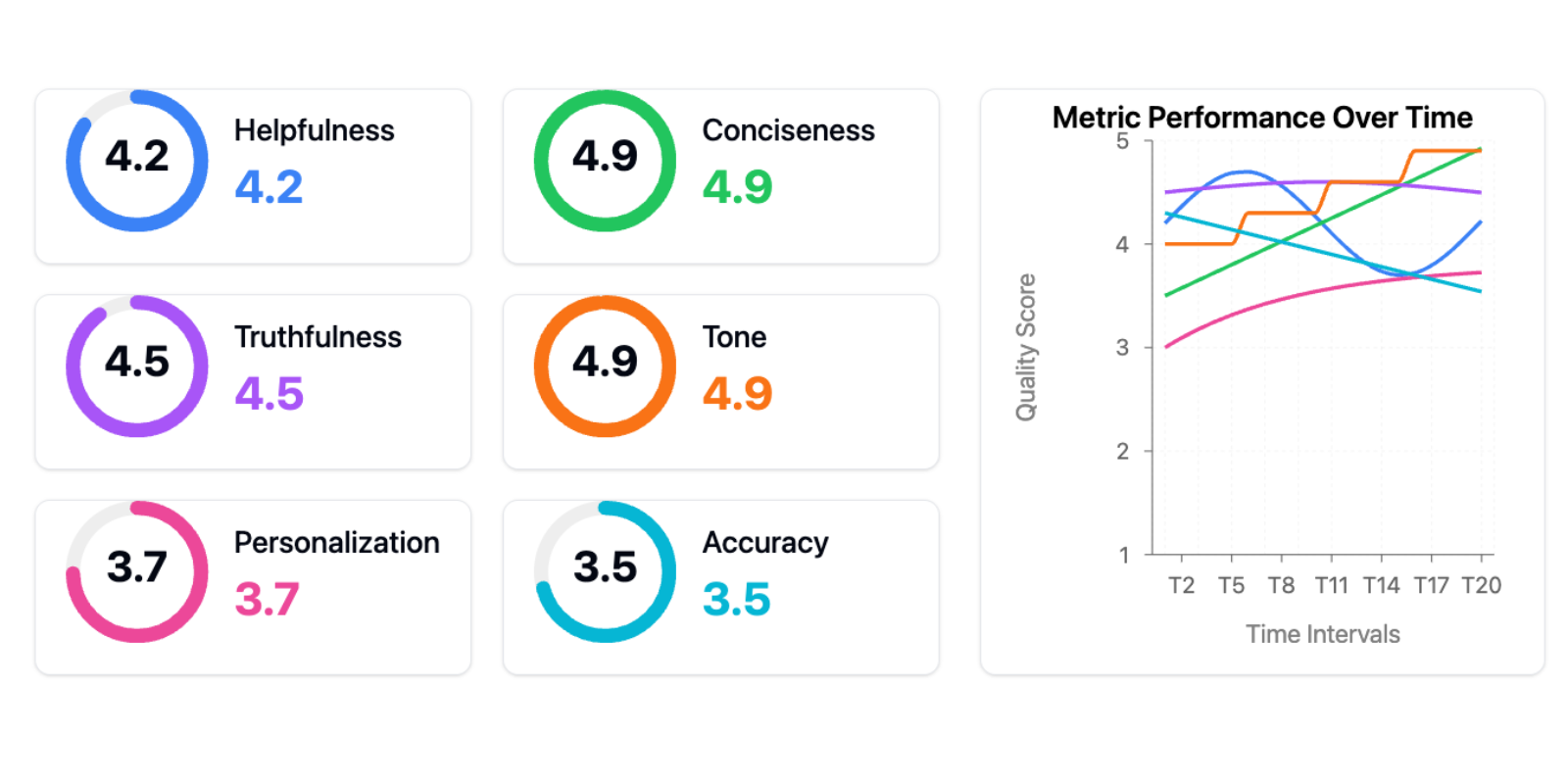

One consumer proudly confirmed me this analysis dashboard:

This is the “tools trap”—the idea that adopting the appropriate instruments or frameworks (on this case, generic metrics) will remedy your AI issues. Generic metrics are worse than ineffective—they actively impede progress in two methods:

First, they create a false sense of measurement and progress. Teams suppose they’re data-driven as a result of they’ve dashboards, however they’re monitoring self-importance metrics that don’t correlate with actual consumer issues. I’ve seen groups have a good time enhancing their “helpfulness score” by 10% whereas their precise customers have been nonetheless fighting primary duties. It’s like optimizing your web site’s load time whereas your checkout course of is damaged—you’re getting higher on the mistaken factor.

Second, too many metrics fragment your consideration. Instead of specializing in the few metrics that matter in your particular use case, you’re attempting to optimize a number of dimensions concurrently. When every little thing is essential, nothing is.

The various? Error evaluation: the one Most worthy exercise in AI growth and constantly the highest-ROI exercise. Let me present you what efficient error evaluation appears like in apply.

The Error Analysis Process

When Jacob, the founding father of Nurture Boss, wanted to enhance the corporate’s apartment-industry AI assistant, his staff constructed a easy viewer to look at conversations between their AI and customers. Next to every dialog was an area for open-ended notes about failure modes.

After annotating dozens of conversations, clear patterns emerged. Their AI was fighting date dealing with—failing 66% of the time when customers mentioned issues like “Let’s schedule a tour two weeks from now.”

Instead of reaching for brand new instruments, they:

- Looked at precise dialog logs

- Categorized the forms of date-handling failures

- Built particular assessments to catch these points

- Measured enchancment on these metrics

The end result? Their date dealing with success price improved from 33% to 95%.

Here’s Jacob explaining this course of himself:

Bottom-Up Versus Top-Down Analysis

When figuring out error varieties, you possibly can take both a “top-down” or “bottom-up” method.

The top-down method begins with frequent metrics like “hallucination” or “toxicity” plus metrics distinctive to your job. While handy, it usually misses domain-specific points.

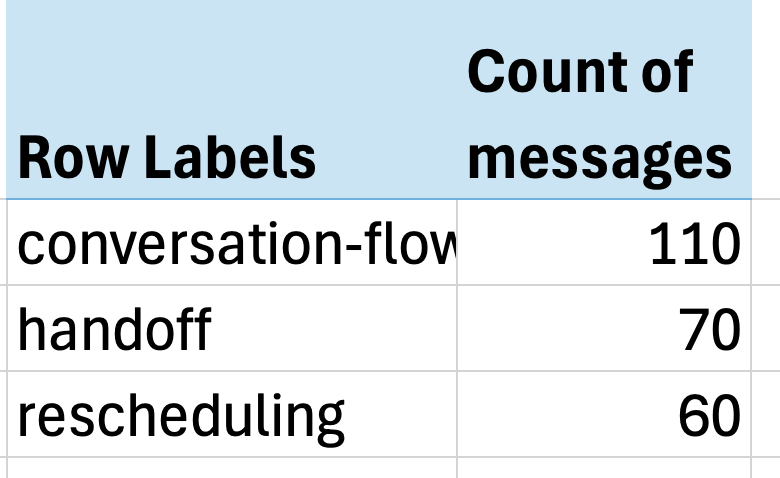

The more practical bottom-up method forces you to take a look at precise information and let metrics naturally emerge. At Nurture Boss, we began with a spreadsheet the place every row represented a dialog. We wrote open-ended notes on any undesired habits. Then we used an LLM to construct a taxonomy of frequent failure modes. Finally, we mapped every row to particular failure mode labels and counted the frequency of every problem.

The outcomes have been placing—simply three points accounted for over 60% of all issues:

- Conversation circulate points (lacking context, awkward responses)

- Handoff failures (not recognizing when to switch to people)

- Rescheduling issues (fighting date dealing with)

The affect was speedy. Jacob’s staff had uncovered so many actionable insights that they wanted a number of weeks simply to implement fixes for the issues we’d already discovered.

If you’d wish to see error evaluation in motion, we recorded a dwell walkthrough right here.

This brings us to an important query: How do you make it simple for groups to take a look at their information? The reply leads us to what I think about an important funding any AI staff could make…

The Most Important AI Investment: A Simple Data Viewer

The single most impactful funding I’ve seen AI groups make isn’t a flowery analysis dashboard—it’s constructing a custom-made interface that lets anybody look at what their AI is definitely doing. I emphasize custom-made as a result of each area has distinctive wants that off-the-shelf instruments not often handle. When reviewing condominium leasing conversations, it’s essential to see the complete chat historical past and scheduling context. For real-estate queries, you want the property particulars and supply paperwork proper there. Even small UX selections—like the place to position metadata or which filters to reveal—could make the distinction between a instrument individuals truly use and one they keep away from.

I’ve watched groups wrestle with generic labeling interfaces, looking by means of a number of programs simply to grasp a single interplay. The friction provides up: clicking by means of to totally different programs to see context, copying error descriptions into separate monitoring sheets, switching between instruments to confirm info. This friction doesn’t simply gradual groups down—it actively discourages the type of systematic evaluation that catches refined points.

Teams with thoughtfully designed information viewers iterate 10x sooner than these with out them. And right here’s the factor: These instruments will be inbuilt hours utilizing AI-assisted growth (like Cursor or Loveable). The funding is minimal in comparison with the returns.

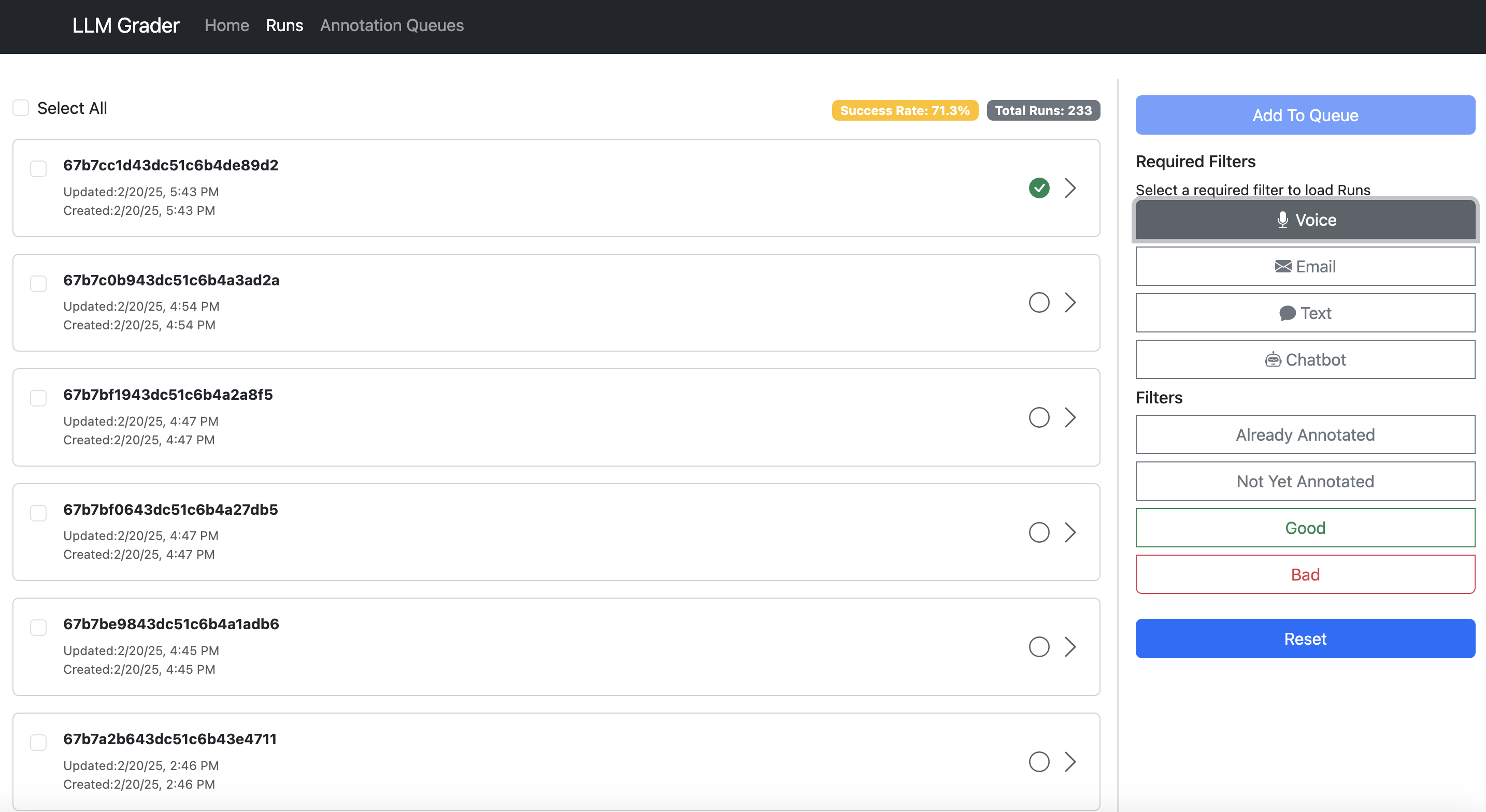

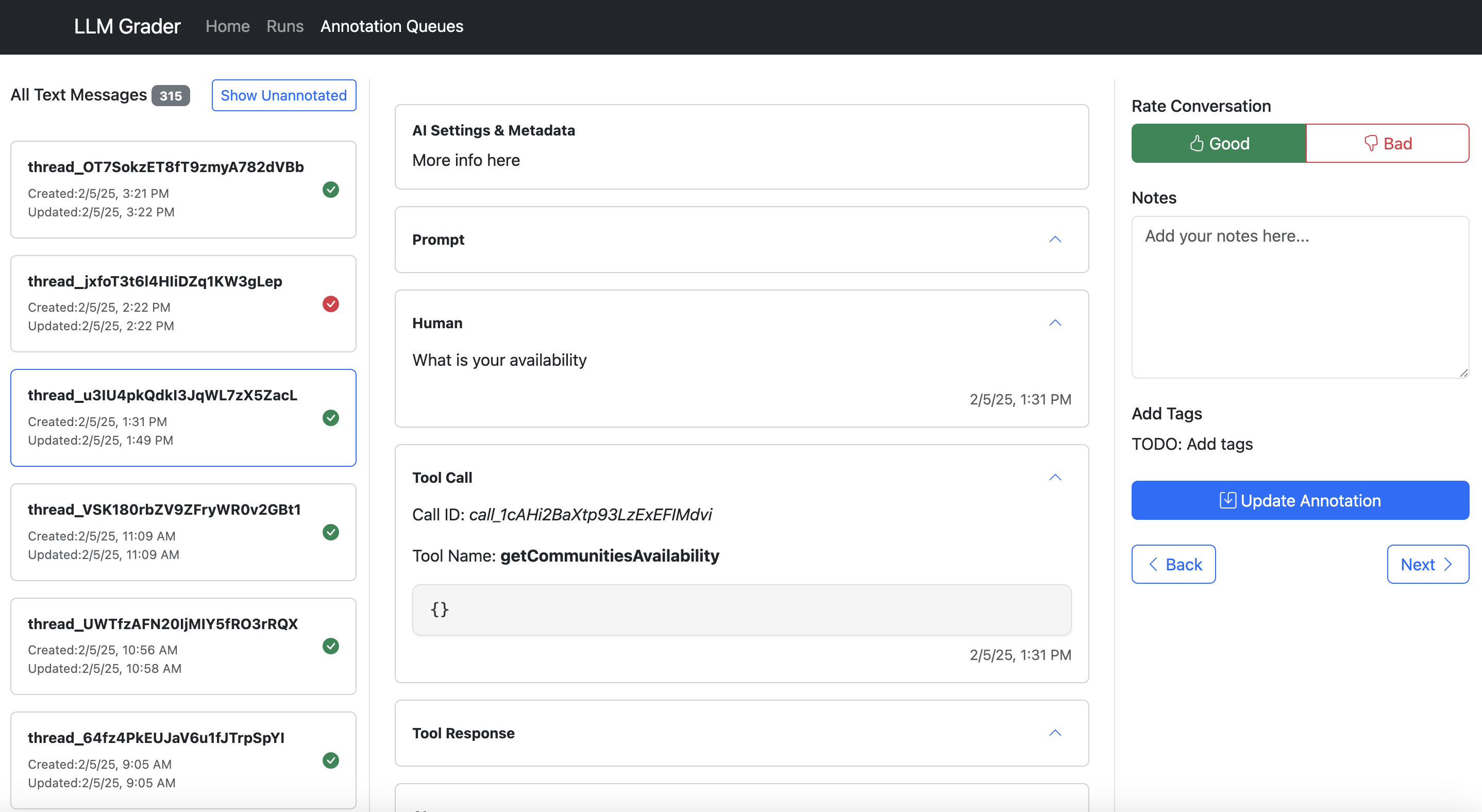

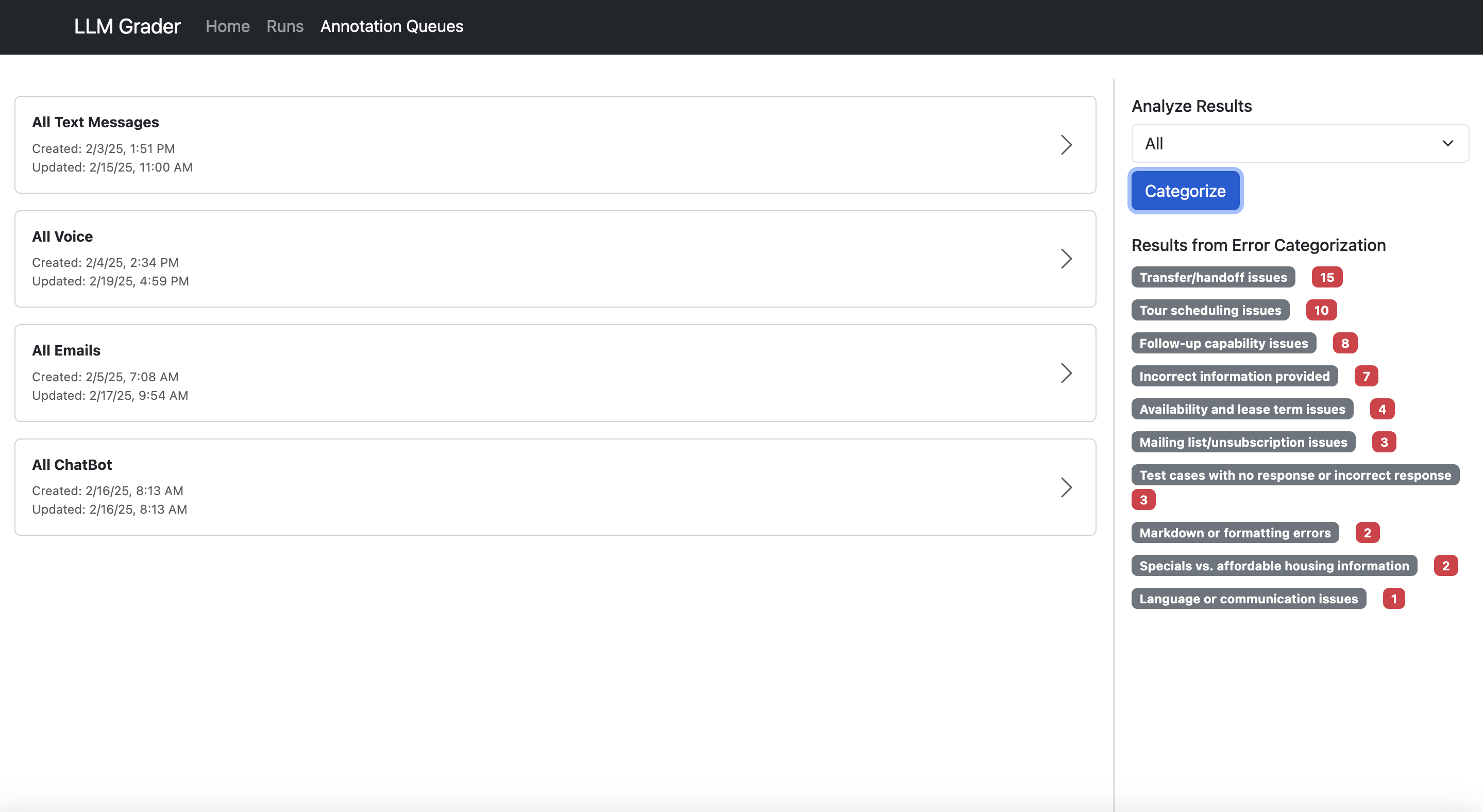

Let me present you what I imply. Here’s the information viewer constructed for Nurture Boss (which I mentioned earlier):

Here’s what makes information annotation instrument:

- Show all context in a single place. Don’t make customers hunt by means of totally different programs to grasp what occurred.

- Make suggestions trivial to seize. One-click right/incorrect buttons beat prolonged varieties.

- Capture open-ended suggestions. This permits you to seize nuanced points that don’t match right into a predefined taxonomy.

- Enable fast filtering and sorting. Teams want to simply dive into particular error varieties. In the instance above, Nurture Boss can shortly filter by the channel (voice, textual content, chat) or the precise property they need to have a look at shortly.

- Have hotkeys that permit customers to navigate between information examples and annotate with out clicking.

It doesn’t matter what net frameworks you employ—use no matter you’re accustomed to. Because I’m a Python developer, my present favourite net framework is FastHTML coupled with MonsterUI as a result of it permits me to outline the backend and frontend code in a single small Python file.

The key’s beginning someplace, even when it’s easy. I’ve discovered customized net apps present the perfect expertise, however in case you’re simply starting, a spreadsheet is healthier than nothing. As your wants develop, you possibly can evolve your instruments accordingly.

This brings us to a different counterintuitive lesson: The individuals finest positioned to enhance your AI system are sometimes those who know the least about AI.

Empower Domain Experts to Write Prompts

I just lately labored with an training startup constructing an interactive studying platform with LLMs. Their product supervisor, a studying design skilled, would create detailed PowerPoint decks explaining pedagogical ideas and instance dialogues. She’d current these to the engineering staff, who would then translate her experience into prompts.

But right here’s the factor: Prompts are simply English. Having a studying skilled talk educating ideas by means of PowerPoint just for engineers to translate that again into English prompts created pointless friction. The most profitable groups flip this mannequin by giving area consultants instruments to put in writing and iterate on prompts straight.

Build Bridges, Not Gatekeepers

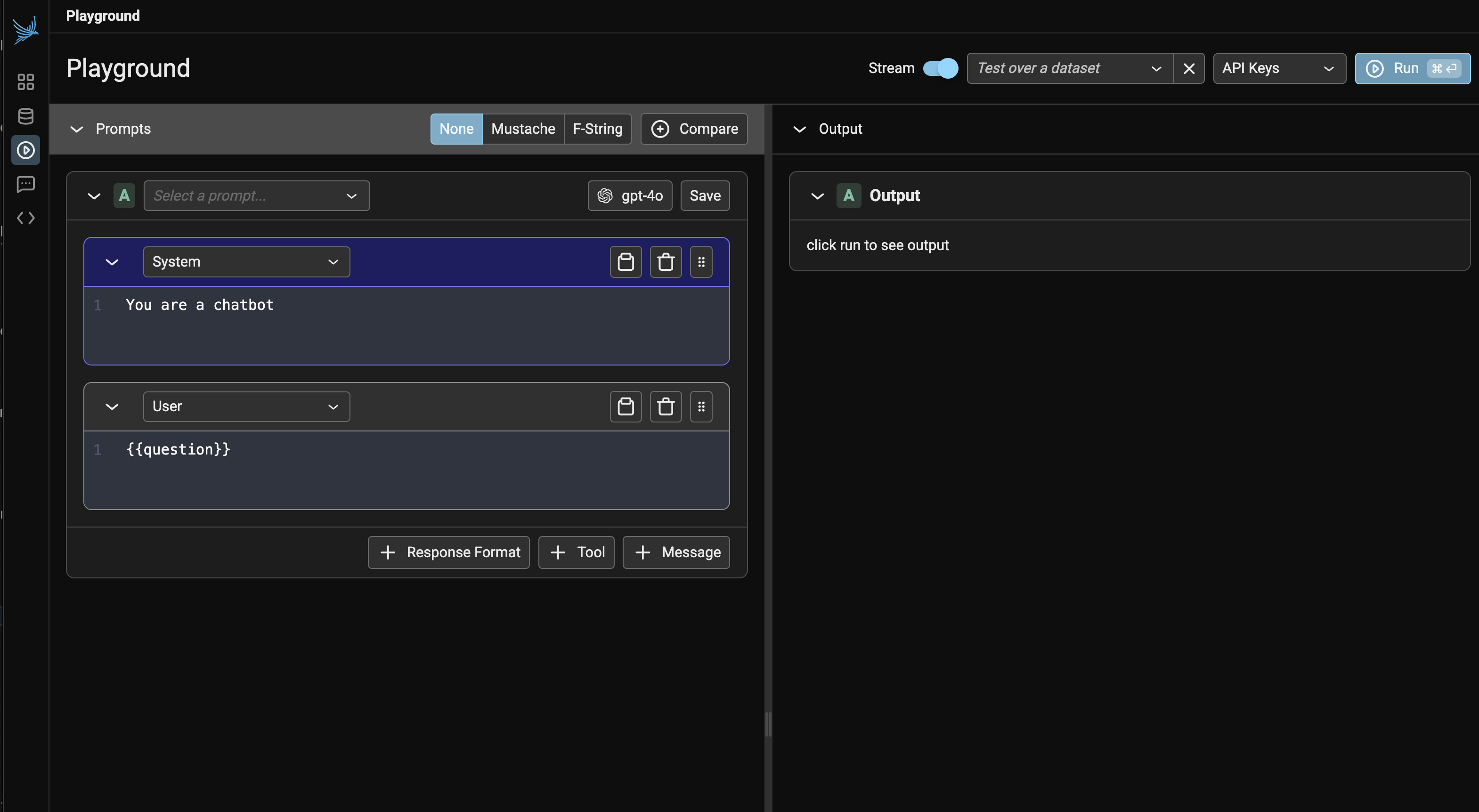

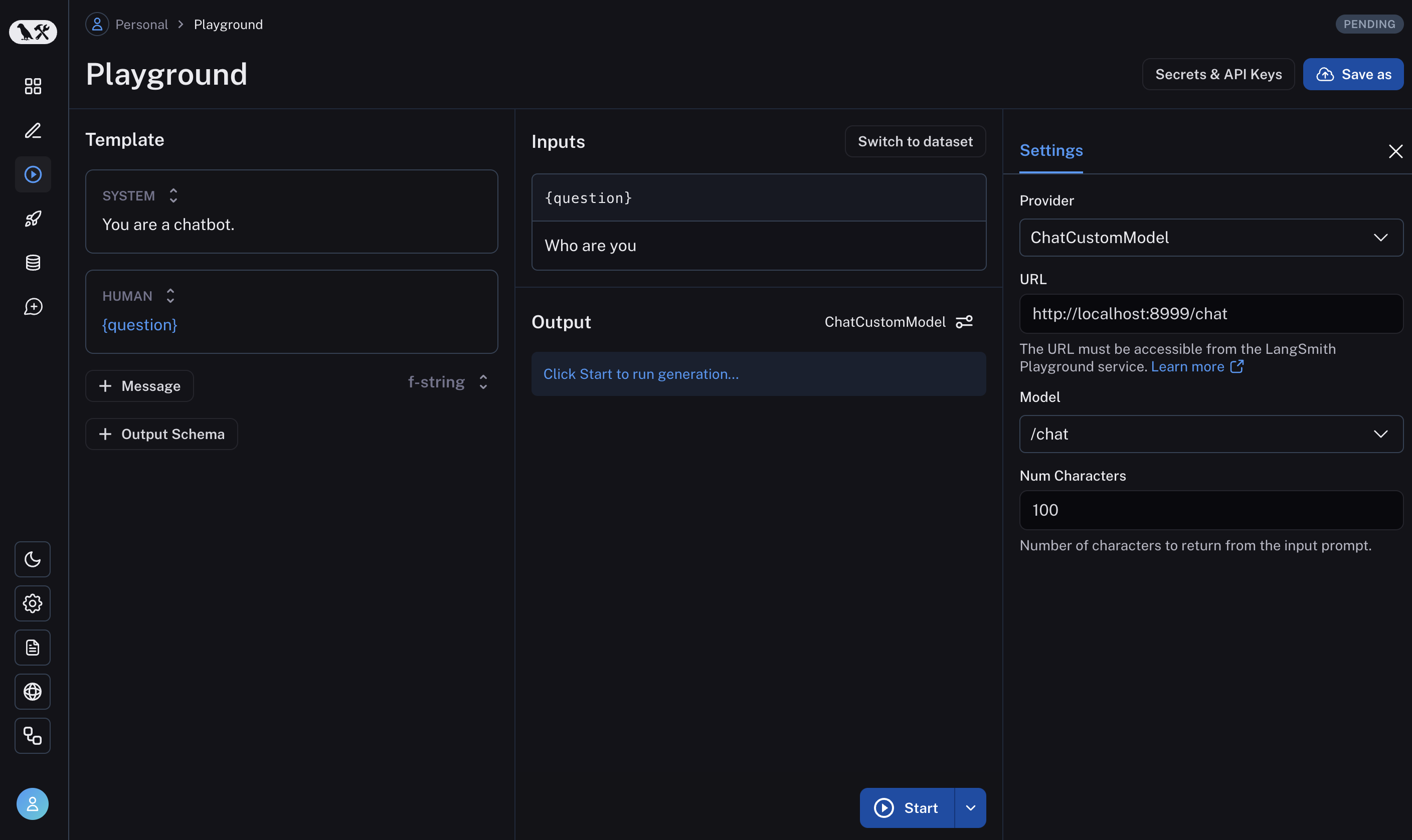

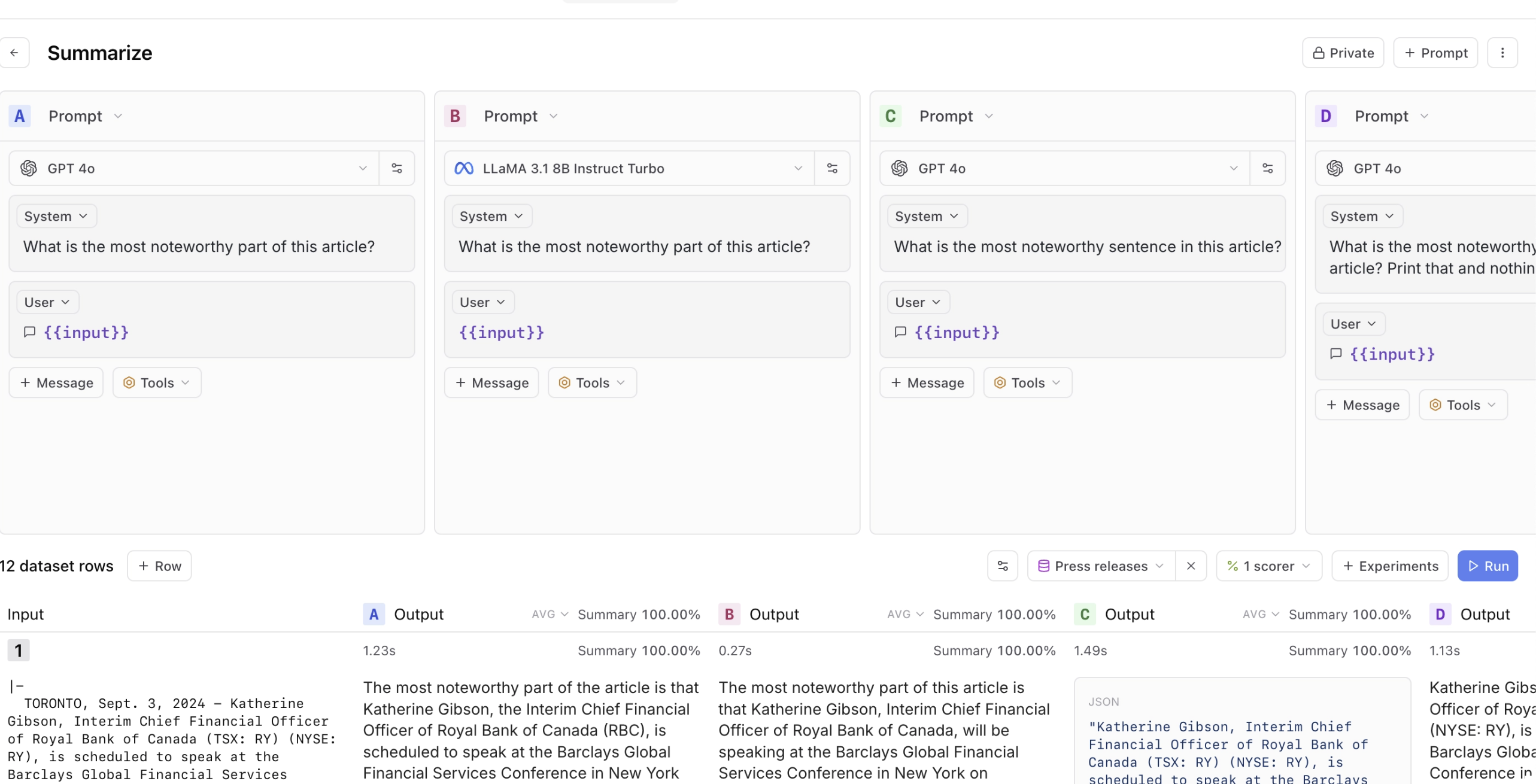

Prompt playgrounds are a fantastic start line for this. Tools like Arize, LangSmith, and Braintrust let groups shortly check totally different prompts, feed in instance datasets, and evaluate outcomes. Here are some screenshots of those instruments:

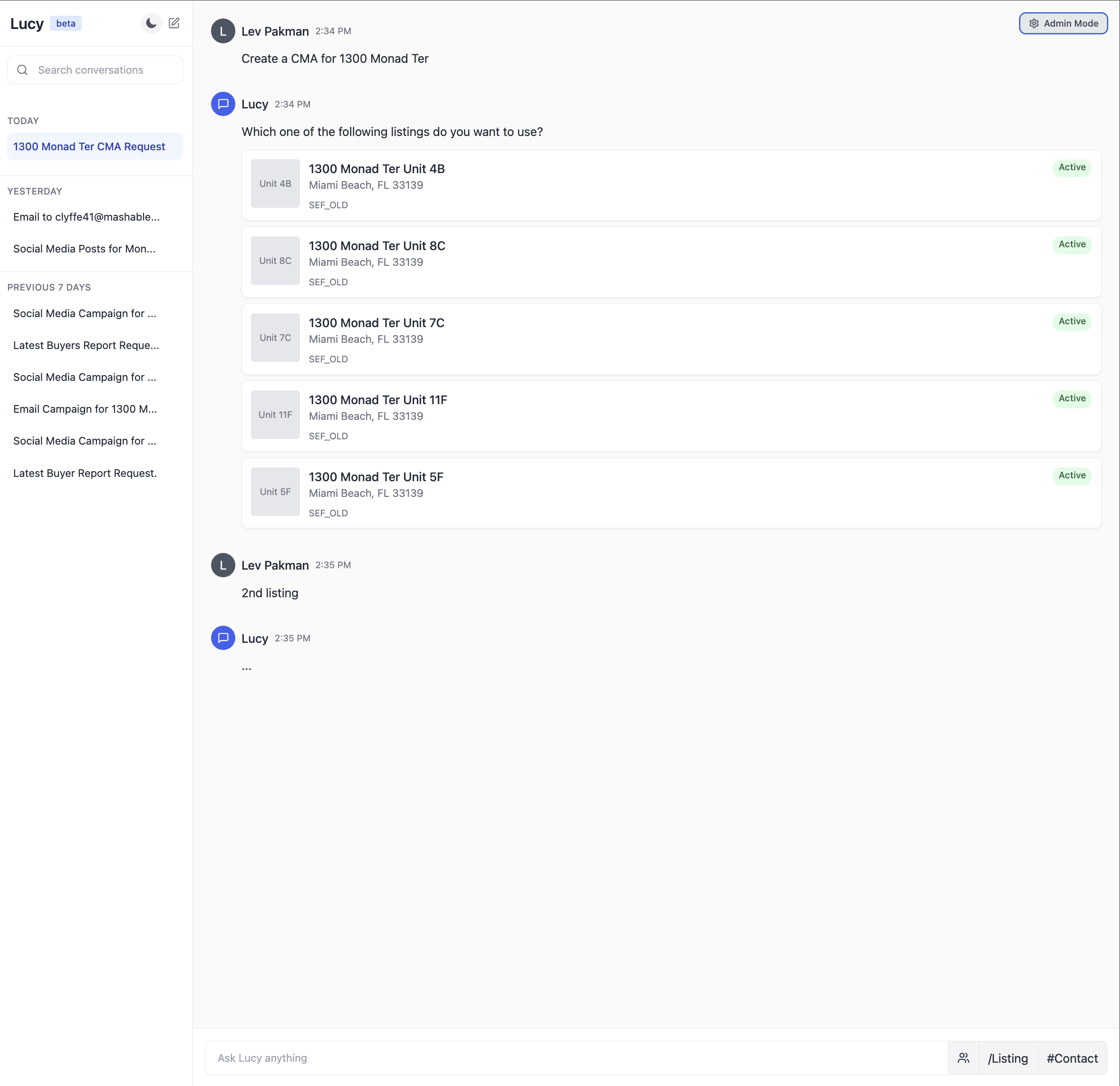

But there’s an important subsequent step that many groups miss: integrating immediate growth into their utility context. Most AI purposes aren’t simply prompts; they generally contain RAG programs pulling out of your data base, agent orchestration coordinating a number of steps, and application-specific enterprise logic. The handiest groups I’ve labored with transcend stand-alone playgrounds. They construct what I name built-in immediate environments—basically admin variations of their precise consumer interface that expose immediate enhancing.

Here’s an illustration of what an built-in immediate setting may appear like for a real-estate AI assistant:

Tips for Communicating With Domain Experts

There’s one other barrier that always prevents area consultants from contributing successfully: pointless jargon. I used to be working with an training startup the place engineers, product managers, and studying specialists have been speaking previous one another in conferences. The engineers saved saying, “We’re going to build an agent that does XYZ,” when actually the job to be carried out was writing a immediate. This created a synthetic barrier—the educational specialists, who have been the precise area consultants, felt like they couldn’t contribute as a result of they didn’t perceive “agents.”

This occurs in every single place. I’ve seen it with legal professionals at authorized tech firms, psychologists at psychological well being startups, and docs at healthcare companies. The magic of LLMs is that they make AI accessible by means of pure language, however we frequently destroy that benefit by wrapping every little thing in technical terminology.

Here’s a easy instance of learn how to translate frequent AI jargon:

| Instead of claiming… | Say… |

| “We’re implementing a RAG approach.” | “We’re making sure the model has the right context to answer questions.” |

| “We need to prevent prompt injection.” | “We need to make sure users can’t trick the AI into ignoring our rules.” |

| “Our model suffers from hallucination issues.” | “Sometimes the AI makes things up, so we need to check its answers.” |

This doesn’t imply dumbing issues down—it means being exact about what you’re truly doing. When you say, “We’re building an agent,” what particular functionality are you including? Is it operate calling? Tool use? Or only a higher immediate? Being particular helps everybody perceive what’s truly occurring.

There’s nuance right here. Technical terminology exists for a motive: it supplies precision when speaking with different technical stakeholders. The key’s adapting your language to your viewers.

The problem many groups elevate at this level is “This all sounds great, but what if we don’t have any data yet? How can we look at examples or iterate on prompts when we’re just starting out?” That’s what we’ll discuss subsequent.

Bootstrapping Your AI With Synthetic Data Is Effective (Even With Zero Users)

One of the commonest roadblocks I hear from groups is “We can’t do proper evaluation because we don’t have enough real user data yet.” This creates a chicken-and-egg downside—you want information to enhance your AI, however you want an honest AI to get customers who generate that information.

Fortunately, there’s an answer that works surprisingly nicely: artificial information. LLMs can generate lifelike check circumstances that cowl the vary of situations your AI will encounter.

As I wrote in my LLM-as-a-Judge weblog put up, artificial information will be remarkably efficient for analysis. Bryan Bischof, the previous head of AI at Hex, put it completely:

LLMs are surprisingly good at producing wonderful – and various – examples of consumer prompts. This will be related for powering utility options, and sneakily, for constructing Evals. If this sounds a bit just like the Large Language Snake is consuming its tail, I used to be simply as stunned as you! All I can say is: it really works, ship it.

A Framework for Generating Realistic Test Data

The key to efficient artificial information is choosing the proper dimensions to check. While these dimensions will fluctuate based mostly in your particular wants, I discover it useful to consider three broad classes:

- Features: What capabilities does your AI must assist?

- Scenarios: What conditions will it encounter?

- User personas: Who will probably be utilizing it and the way?

These aren’t the one dimensions you may care about—you may additionally need to check totally different tones of voice, ranges of technical sophistication, and even totally different locales and languages. The essential factor is figuring out dimensions that matter in your particular use case.

For a real-estate CRM AI assistant I labored on with Rechat, we outlined these dimensions like this:

But having these dimensions outlined is just half the battle. The actual problem is making certain your artificial information truly triggers the situations you need to check. This requires two issues:

- A check database with sufficient selection to assist your situations

- A solution to confirm that generated queries truly set off supposed situations

For Rechat, we maintained a check database of listings that we knew would set off totally different edge circumstances. Some groups want to make use of an anonymized copy of manufacturing information, however both manner, it’s essential to guarantee your check information has sufficient selection to train the situations you care about.

Here’s an instance of how we would use these dimensions with actual information to generate check circumstances for the property search characteristic (that is simply pseudo code, and really illustrative):

def generate_search_query(state of affairs, persona, listing_db):

"""Generate a sensible consumer question about listings"""

# Pull actual itemizing information to floor the era

sample_listings = listing_db.get_sample_listings(

price_range=persona.price_range,

location=persona.preferred_areas

)

# Verify we have now listings that can set off our state of affairs

if state of affairs == "multiple_matches" and len(sample_listings) 0:

elevate WorthError("Found matches when testing no-match state of affairs")

immediate = f"""

You are an skilled actual property agent who's looking for listings. You are given a buyer sort and a state of affairs.

Your job is to generate a pure language question you'd use to look these listings.

Context:

- Customer sort: {persona.description}

- Scenario: {state of affairs}

Use these precise listings as reference:

{format_listings(sample_listings)}

The question ought to replicate the shopper sort and the state of affairs.

Example question: Find houses within the 75019 zip code, 3 bedrooms, 2 bogs, worth vary $750k - $1M for an investor.

"""

return generate_with_llm(immediate)

This produced lifelike queries like:

| Feature | Scenario | Persona | Generated Query |

|---|---|---|---|

| property search | a number of matches | first_time_buyer | “Looking for 3-bedroom homes under $500k in the Riverside area. Would love something close to parks since we have young kids.” |

| market evaluation | no matches | investor | “Need comps for 123 Oak St. Specifically interested in rental yield comparison with similar properties in a 2-mile radius.” |

The key to helpful artificial information is grounding it in actual system constraints. For the real-estate AI assistant, this implies:

- Using actual itemizing IDs and addresses from their database

- Incorporating precise agent schedules and availability home windows

- Respecting enterprise guidelines like exhibiting restrictions and spot intervals

- Including market-specific particulars like HOA necessities or native rules

We then feed these check circumstances by means of Lucy (now a part of Capacity) and log the interactions. This provides us a wealthy dataset to research, exhibiting precisely how the AI handles totally different conditions with actual system constraints. This method helped us repair points earlier than they affected actual customers.

Sometimes you don’t have entry to a manufacturing database, particularly for brand new merchandise. In these circumstances, use LLMs to generate each check queries and the underlying check information. For a real-estate AI assistant, this may imply creating artificial property listings with lifelike attributes—costs that match market ranges, legitimate addresses with actual road names, and facilities applicable for every property sort. The key’s grounding artificial information in real-world constraints to make it helpful for testing. The specifics of producing strong artificial databases are past the scope of this put up.

Guidelines for Using Synthetic Data

When producing artificial information, observe these key ideas to make sure it’s efficient:

- Diversify your dataset: Create examples that cowl a variety of options, situations, and personas. As I wrote in my LLM-as-a-Judge put up, this variety helps you establish edge circumstances and failure modes you may not anticipate in any other case.

- Generate consumer inputs, not outputs: Use LLMs to generate lifelike consumer queries or inputs, not the anticipated AI responses. This prevents your artificial information from inheriting the biases or limitations of the producing mannequin.

- Incorporate actual system constraints: Ground your artificial information in precise system limitations and information. For instance, when testing a scheduling characteristic, use actual availability home windows and reserving guidelines.

- Verify state of affairs protection: Ensure your generated information truly triggers the situations you need to check. A question supposed to check “no matches found” ought to truly return zero outcomes when run towards your system.

- Start easy, then add complexity: Begin with easy check circumstances earlier than including nuance. This helps isolate points and set up a baseline earlier than tackling edge circumstances.

This method isn’t simply theoretical—it’s been confirmed in manufacturing throughout dozens of firms. What usually begins as a stopgap measure turns into a everlasting a part of the analysis infrastructure, even after actual consumer information turns into out there.

Let’s have a look at learn how to preserve belief in your analysis system as you scale.

Maintaining Trust In Evals Is Critical

This is a sample I’ve seen repeatedly: Teams construct analysis programs, then progressively lose religion in them. Sometimes it’s as a result of the metrics don’t align with what they observe in manufacturing. Other occasions, it’s as a result of the evaluations turn out to be too complicated to interpret. Either manner, the end result is identical: The staff reverts to creating selections based mostly on intestine feeling and anecdotal suggestions, undermining all the function of getting evaluations.

Maintaining belief in your analysis system is simply as essential as constructing it within the first place. Here’s how probably the most profitable groups method this problem.

Understanding Criteria Drift

One of probably the most insidious issues in AI analysis is “criteria drift”—a phenomenon the place analysis standards evolve as you observe extra mannequin outputs. In their paper “Who Validates the Validators? Aligning LLM-Assisted Evaluation of LLM Outputs with Human Preferences,” Shankar et al. describe this phenomenon:

To grade outputs, individuals must externalize and outline their analysis standards; nevertheless, the method of grading outputs helps them to outline that very standards.

This creates a paradox: You can’t totally outline your analysis standards till you’ve seen a variety of outputs, however you want standards to guage these outputs within the first place. In different phrases, it’s inconceivable to fully decide analysis standards previous to human judging of LLM outputs.

I’ve noticed this firsthand when working with Phillip Carter at Honeycomb on the corporate’s Query Assistant characteristic. As we evaluated the AI’s capability to generate database queries, Phillip seen one thing attention-grabbing:

Seeing how the LLM breaks down its reasoning made me notice I wasn’t being constant about how I judged sure edge circumstances.

The strategy of reviewing AI outputs helped him articulate his personal analysis requirements extra clearly. This isn’t an indication of poor planning—it’s an inherent attribute of working with AI programs that produce various and typically sudden outputs.

The groups that preserve belief of their analysis programs embrace this actuality somewhat than combating it. They deal with analysis standards as residing paperwork that evolve alongside their understanding of the issue house. They additionally acknowledge that totally different stakeholders may need totally different (typically contradictory) standards, they usually work to reconcile these views somewhat than imposing a single commonplace.

Creating Trustworthy Evaluation Systems

So how do you construct analysis programs that stay reliable regardless of standards drift? Here are the approaches I’ve discovered handiest:

1. Favor Binary Decisions Over Arbitrary Scales

As I wrote in my LLM-as-a-Judge put up, binary selections present readability that extra complicated scales usually obscure. When confronted with a 1–5 scale, evaluators incessantly wrestle with the distinction between a 3 and a 4, introducing inconsistency and subjectivity. What precisely distinguishes “somewhat helpful” from “helpful”? These boundary circumstances devour disproportionate psychological power and create noise in your analysis information. And even when companies use a 1–5 scale, they inevitably ask the place to attract the road for “good enough” or to set off intervention, forcing a binary determination anyway.

In distinction, a binary move/fail forces evaluators to make a transparent judgment: Did this output obtain its function or not? This readability extends to measuring progress—a ten% improve in passing outputs is instantly significant, whereas a 0.5-point enchancment on a 5-point scale requires interpretation.

I’ve discovered that groups who resist binary analysis usually achieve this as a result of they need to seize nuance. But nuance isn’t misplaced—it’s simply moved to the qualitative critique that accompanies the judgment. The critique supplies wealthy context about why one thing handed or failed and what particular elements could possibly be improved, whereas the binary determination creates actionable readability about whether or not enchancment is required in any respect.

2. Enhance Binary Judgments With Detailed Critiques

While binary selections present readability, they work finest when paired with detailed critiques that seize the nuance of why one thing handed or failed. This mixture provides you the perfect of each worlds: clear, actionable metrics and wealthy contextual understanding.

For instance, when evaluating a response that accurately solutions a consumer’s query however comprises pointless info, critique may learn:

The AI efficiently supplied the market evaluation requested (PASS), however included extreme element about neighborhood demographics that wasn’t related to the funding query. This makes the response longer than needed and doubtlessly distracting.

These critiques serve a number of features past simply clarification. They drive area consultants to externalize implicit data—I’ve seen authorized consultants transfer from obscure emotions that one thing “doesn’t sound right” to articulating particular points with quotation codecs or reasoning patterns that may be systematically addressed.

When included as few-shot examples in decide prompts, these critiques enhance the LLM’s capability to motive about complicated edge circumstances. I’ve discovered this method usually yields 15%–20% increased settlement charges between human and LLM evaluations in comparison with prompts with out instance critiques. The critiques additionally present wonderful uncooked materials for producing high-quality artificial information, making a flywheel for enchancment.

3. Measure Alignment Between Automated Evals and Human Judgment

If you’re utilizing LLMs to guage outputs (which is commonly needed at scale), it’s essential to usually verify how nicely these automated evaluations align with human judgment.

This is especially essential given our pure tendency to over-trust AI programs. As Shankar et al. word in “Who Validates the Validators?,” the shortage of instruments to validate evaluator high quality is regarding.

Research exhibits individuals are inclined to over-rely and over-trust AI programs. For occasion, in a single excessive profile incident, researchers from MIT posted a pre-print on arXiv claiming that GPT-4 may ace the MIT EECS examination. Within hours, [the] work [was] debunked. . .citing issues arising from over-reliance on GPT-4 to grade itself.

This overtrust downside extends past self-evaluation. Research has proven that LLMs will be biased by easy components just like the ordering of choices in a set and even seemingly innocuous formatting adjustments in prompts. Without rigorous human validation, these biases can silently undermine your analysis system.

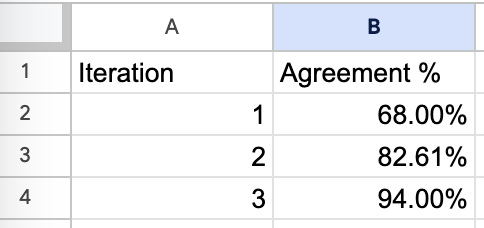

When working with Honeycomb, we tracked settlement charges between our LLM-as-a-judge and Phillip’s evaluations:

It took three iterations to realize >90% settlement, however this funding paid off in a system the staff may belief. Without this validation step, automated evaluations usually drift from human expectations over time, particularly because the distribution of inputs adjustments. You can learn extra about this right here.

Tools like Eugene Yan’s AlignEval exhibit this alignment course of superbly. AlignEval supplies a easy interface the place you add information, label examples with a binary “good” or “bad,” after which consider LLM-based judges towards these human judgments. What makes it efficient is the way it streamlines the workflow—you possibly can shortly see the place automated evaluations diverge out of your preferences, refine your standards based mostly on these insights, and measure enchancment over time. This method reinforces that alignment isn’t a one-time setup however an ongoing dialog between human judgment and automatic analysis.

Scaling Without Losing Trust

As your AI system grows, you’ll inevitably face stress to cut back the human effort concerned in analysis. This is the place many groups go mistaken—they automate an excessive amount of, too shortly, and lose the human connection that retains their evaluations grounded.

The most profitable groups take a extra measured method:

- Start with excessive human involvement: In the early levels, have area consultants consider a big share of outputs.

- Study alignment patterns: Rather than automating analysis, concentrate on understanding the place automated evaluations align with human judgment and the place they diverge. This helps you establish which forms of circumstances want extra cautious human consideration.

- Use strategic sampling: Rather than evaluating each output, use statistical strategies to pattern outputs that present probably the most info, significantly specializing in areas the place alignment is weakest.

- Maintain common calibration: Even as you scale, proceed to match automated evaluations towards human judgment usually, utilizing these comparisons to refine your understanding of when to belief automated evaluations.

Scaling analysis isn’t nearly lowering human effort—it’s about directing that effort the place it provides probably the most worth. By focusing human consideration on probably the most difficult or informative circumstances, you possibly can preserve high quality at the same time as your system grows.

Now that we’ve lined learn how to preserve belief in your evaluations, let’s discuss a basic shift in how it is best to method AI growth roadmaps.

Your AI Roadmap Should Count Experiments, Not Features

If you’ve labored in software program growth, you’re accustomed to conventional roadmaps: an inventory of options with goal supply dates. Teams decide to transport particular performance by particular deadlines, and success is measured by how intently they hit these targets.

This method fails spectacularly with AI.

I’ve watched groups decide to roadmap targets like “Launch sentiment analysis by Q2” or “Deploy agent-based customer support by end of year,” solely to find that the expertise merely isn’t prepared to satisfy their high quality bar. They both ship one thing subpar to hit the deadline or miss the deadline completely. Either manner, belief erodes.

The basic downside is that conventional roadmaps assume we all know what’s doable. With typical software program, that’s usually true—given sufficient time and assets, you possibly can construct most options reliably. With AI, particularly on the leading edge, you’re continually testing the boundaries of what’s possible.

Experiments Versus Features

Bryan Bischof, former head of AI at Hex, launched me to what he calls a “capability funnel” method to AI roadmaps. This technique reframes how we take into consideration AI growth progress. Instead of defining success as transport a characteristic, the potential funnel breaks down AI efficiency into progressive ranges of utility. At the highest of the funnel is probably the most primary performance: Can the system reply in any respect? At the underside is totally fixing the consumer’s job to be carried out. Between these factors are varied levels of accelerating usefulness.

For instance, in a question assistant, the potential funnel may appear like:

- Can generate syntactically legitimate queries (primary performance)

- Can generate queries that execute with out errors

- Can generate queries that return related outcomes

- Can generate queries that match consumer intent

- Can generate optimum queries that remedy the consumer’s downside (full answer)

This method acknowledges that AI progress isn’t binary—it’s about progressively enhancing capabilities throughout a number of dimensions. It additionally supplies a framework for measuring progress even once you haven’t reached the ultimate purpose.

The most profitable groups I’ve labored with construction their roadmaps round experiments somewhat than options. Instead of committing to particular outcomes, they decide to a cadence of experimentation, studying, and iteration.

Eugene Yan, an utilized scientist at Amazon, shared how he approaches ML mission planning with management—a course of that, whereas initially developed for conventional machine studying, applies equally nicely to trendy LLM growth:

Here’s a standard timeline. First, I take two weeks to do a knowledge feasibility evaluation, i.e., “Do I have the right data?”…Then I take an extra month to do a technical feasibility evaluation, i.e., “Can AI solve this?” After that, if it nonetheless works I’ll spend six weeks constructing a prototype we will A/B check.

While LLMs may not require the identical type of characteristic engineering or mannequin coaching as conventional ML, the underlying precept stays the identical: time-box your exploration, set up clear determination factors, and concentrate on proving feasibility earlier than committing to full implementation. This method provides management confidence that assets received’t be wasted on open-ended exploration, whereas giving the staff the liberty to be taught and adapt as they go.

The Foundation: Evaluation Infrastructure

The key to creating an experiment-based roadmap work is having strong analysis infrastructure. Without it, you’re simply guessing whether or not your experiments are working. With it, you possibly can quickly iterate, check hypotheses, and construct on successes.

I noticed this firsthand in the course of the early growth of GitHub Copilot. What most individuals don’t notice is that the staff invested closely in constructing subtle offline analysis infrastructure. They created programs that would check code completions towards a really massive corpus of repositories on GitHub, leveraging unit assessments that already existed in high-quality codebases as an automatic solution to confirm completion correctness. This was an enormous engineering enterprise—they needed to construct programs that would clone repositories at scale, arrange their environments, run their check suites, and analyze the outcomes, all whereas dealing with the unimaginable variety of programming languages, frameworks, and testing approaches.

This wasn’t wasted time—it was the muse that accelerated every little thing. With strong analysis in place, the staff ran 1000’s of experiments, shortly recognized what labored, and will say with confidence “This change improved quality by X%” as a substitute of counting on intestine emotions. While the upfront funding in analysis feels gradual, it prevents infinite debates about whether or not adjustments assist or harm and dramatically accelerates innovation later.

Communicating This to Stakeholders

The problem, in fact, is that executives usually need certainty. They need to know when options will ship and what they’ll do. How do you bridge this hole?

The key’s to shift the dialog from outputs to outcomes. Instead of promising particular options by particular dates, decide to a course of that can maximize the probabilities of attaining the specified enterprise outcomes.

Eugene shared how he handles these conversations:

I attempt to reassure management with timeboxes. At the top of three months, if it really works out, then we transfer it to manufacturing. At any step of the best way, if it doesn’t work out, we pivot.

This method provides stakeholders clear determination factors whereas acknowledging the inherent uncertainty in AI growth. It additionally helps handle expectations about timelines—as a substitute of promising a characteristic in six months, you’re promising a transparent understanding of whether or not that characteristic is possible in three months.

Bryan’s functionality funnel method supplies one other highly effective communication instrument. It permits groups to point out concrete progress by means of the funnel levels, even when the ultimate answer isn’t prepared. It additionally helps executives perceive the place issues are occurring and make knowledgeable selections about the place to speculate assets.

Build a Culture of Experimentation Through Failure Sharing

Perhaps probably the most counterintuitive side of this method is the emphasis on studying from failures. In conventional software program growth, failures are sometimes hidden or downplayed. In AI growth, they’re the first supply of studying.

Eugene operationalizes this at his group by means of what he calls a “fifteen-five”—a weekly replace that takes fifteen minutes to put in writing and 5 minutes to learn:

In my fifteen-fives, I doc my failures and my successes. Within our staff, we even have weekly “no-prep sharing sessions” the place we focus on what we’ve been engaged on and what we’ve discovered. When I do that, I am going out of my solution to share failures.

This apply normalizes failure as a part of the educational course of. It exhibits that even skilled practitioners encounter dead-ends, and it accelerates staff studying by sharing these experiences overtly. And by celebrating the method of experimentation somewhat than simply the outcomes, groups create an setting the place individuals really feel secure taking dangers and studying from failures.

A Better Way Forward

So what does an experiment-based roadmap appear like in apply? Here’s a simplified instance from a content material moderation mission Eugene labored on:

I used to be requested to do content material moderation. I mentioned, “It’s uncertain whether we’ll meet that goal. It’s uncertain even if that goal is feasible with our data, or what machine learning techniques would work. But here’s my experimentation roadmap. Here are the techniques I’m gonna try, and I’m gonna update you at a two-week cadence.”

The roadmap didn’t promise particular options or capabilities. Instead, it dedicated to a scientific exploration of doable approaches, with common check-ins to evaluate progress and pivot if needed.

The outcomes have been telling:

For the primary two to 3 months, nothing labored. . . .And then [a breakthrough] got here out. . . .Within a month, that downside was solved. So you possibly can see that within the first quarter and even 4 months, it was going nowhere. . . .But then you can even see that abruptly, some new expertise…, some new paradigm, some new reframing comes alongside that simply [solves] 80% of [the problem].

This sample—lengthy intervals of obvious failure adopted by breakthroughs—is frequent in AI growth. Traditional feature-based roadmaps would have killed the mission after months of “failure,” lacking the eventual breakthrough.

By specializing in experiments somewhat than options, groups create house for these breakthroughs to emerge. They additionally construct the infrastructure and processes that make breakthroughs extra probably: information pipelines, analysis frameworks, and fast iteration cycles.

The most profitable groups I’ve labored with begin by constructing analysis infrastructure earlier than committing to particular options. They create instruments that make iteration sooner and concentrate on processes that assist fast experimentation. This method might sound slower at first, however it dramatically accelerates growth in the long term by enabling groups to be taught and adapt shortly.

The key metric for AI roadmaps isn’t options shipped—it’s experiments run. The groups that win are these that may run extra experiments, be taught sooner, and iterate extra shortly than their opponents. And the muse for this fast experimentation is at all times the identical: strong, trusted analysis infrastructure that offers everybody confidence within the outcomes.

By reframing your roadmap round experiments somewhat than options, you create the circumstances for comparable breakthroughs in your personal group.

Conclusion

Throughout this put up, I’ve shared patterns I’ve noticed throughout dozens of AI implementations. The most profitable groups aren’t those with probably the most subtle instruments or probably the most superior fashions—they’re those that grasp the basics of measurement, iteration, and studying.

The core ideas are surprisingly easy:

- Look at your information. Nothing replaces the perception gained from analyzing actual examples. Error evaluation constantly reveals the highest-ROI enhancements.

- Build easy instruments that take away friction. Custom information viewers that make it simple to look at AI outputs yield extra insights than complicated dashboards with generic metrics.

- Empower area consultants. The individuals who perceive your area finest are sometimes those who can most successfully enhance your AI, no matter their technical background.

- Use artificial information strategically. You don’t want actual customers to begin testing and enhancing your AI. Thoughtfully generated artificial information can bootstrap your analysis course of.

- Maintain belief in your evaluations. Binary judgments with detailed critiques create readability whereas preserving nuance. Regular alignment checks guarantee automated evaluations stay reliable.

- Structure roadmaps round experiments, not options. Commit to a cadence of experimentation and studying somewhat than particular outcomes by particular dates.

These ideas apply no matter your area, staff measurement, or technical stack. They’ve labored for firms starting from early-stage startups to tech giants, throughout use circumstances from buyer assist to code era.

Resources for Going Deeper

If you’d wish to discover these subjects additional, listed below are some assets which may assist:

- My weblog for extra content material on AI analysis and enchancment. My different posts dive into extra technical element on subjects comparable to establishing efficient LLM judges, implementing analysis programs, and different elements of AI growth.1 Also try the blogs of Shreya Shankar and Eugene Yan, who’re additionally nice sources of data on these subjects.

- A course I’m educating, Rapidly Improve AI Products with Evals, with Shreya Shankar. It supplies hands-on expertise with strategies comparable to error evaluation, artificial information era, and constructing reliable analysis programs, and consists of sensible workout routines and customized instruction by means of workplace hours.

- If you’re searching for hands-on steerage particular to your group’s wants, you possibly can be taught extra about working with me at Parlance Labs.

Footnotes

- I write extra broadly about machine studying, AI, and software program growth. Some posts that develop on these subjects embody “Your AI Product Needs Evals,” “Creating a LLM-as-a-Judge That Drives Business Results,” and “What We’ve Learned from a Year of Building with LLMs.” You can see all my posts at hamel.dev.