
Last Updated on October 29, 2022

Now that we have learned what a Transformer is and how we might train the Transformer model, we notice that it is a great tool for making a computer understand human language. However, the Transformer was originally designed as a model to translate one language to another. If we repurpose it for a different task, we would likely need to retrain the whole model from scratch. Given that the time it takes to train a Transformer model is enormous, we would like a solution that lets us readily reuse the trained Transformer for many different tasks. BERT is such a model. It is an extension of the encoder part of a Transformer.

In this tutorial, you will learn what BERT is and discover what it can do.

After completing this tutorial, you will know:

- What a Bidirectional Encoder Representations from Transformers (BERT) model is

- How a BERT model can be reused for different purposes

- How you can use a pre-trained BERT model

Let’s get started.

A short introduction to BERT

Photo by Samet Erköseoğlu, some rights reserved.

Tutorial Overview

This tutorial is divided into four parts; they are:

- From Transformer Model to BERT

- What Can BERT Do?

- Using Pre-Trained BERT Model for Summarization

- Using Pre-Trained BERT Model for Question-Answering

Prerequisites

For this tutorial, we assume that you’re already conversant in:

From Transformer Model to BERT

In the transformer model, the encoder and decoder are connected to make a seq2seq model so that you can perform a translation, such as from English to German, as you saw before. Recall that the attention equation says:

$$\text{attention}(Q,K,V) = \text{softmax}\Big(\frac{QK^\top}{\sqrt{d_k}}\Big)V$$

But each of $Q$, $K$, and $V$ above is a matrix of embedding vectors transformed by a weight matrix in the transformer model. Training a transformer model means finding these weight matrices. Once the weight matrices are learned, the transformer becomes a language model, which means it represents a way to understand the language that you used to train it.
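To make the role of the weight matrices concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It illustrates the equation above and is not the implementation used by BERT or any library; the random inputs, the sizes, and the names `w_q`, `w_k`, and `w_v` are assumptions, with the three projection matrices standing in for the weights that training would learn.

```python
import math
import random


def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def softmax(row):
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for a single attention head."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, d_model, d_k = 4, 8, 4

# Input embeddings: one d_model-dimensional vector per token.
x = random_matrix(seq_len, d_model, rng)
# Projection matrices W_Q, W_K, W_V: the parameters that training would learn.
w_q = random_matrix(d_model, d_k, rng)
w_k = random_matrix(d_model, d_k, rng)
w_v = random_matrix(d_model, d_k, rng)

q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
output, weights = attention(q, k, v)
print(len(output), len(output[0]))  # 4 4: one d_k-dimensional vector per token
```

Each row of `weights` sums to 1, so every output vector is a weighted average of the value vectors, weighted by how well that token's query matches each key.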

The encoder-decoder structure of the Transformer architecture

Taken from “Attention Is All You Need”

A transformer has encoder and decoder parts. As the name implies, the encoder transforms sentences and paragraphs into an internal format (a numerical matrix) that captures the context, whereas the decoder does the reverse. Combining the encoder and decoder allows a transformer to perform seq2seq tasks, such as translation. If you take out the encoder part of the transformer, it can tell you something about the context, which lets you do something interesting.

The Bidirectional Encoder Representations from Transformers (BERT) model leverages the attention model to get a deeper understanding of the language context. It is called bidirectional because each token attends to the tokens on both its left and its right. BERT is a stack of many encoder blocks. The input text is separated into tokens as in the transformer model, and each token is transformed into a vector at the output of BERT.

What Can BERT Do?

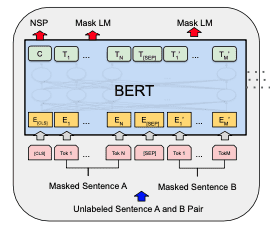

A BERT model is trained using the masked language model (MLM) and next sentence prediction (NSP) objectives simultaneously.

BERT model
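The BERT paper combines the two objectives by adding the masked-LM loss, averaged over the masked positions, to the next-sentence-prediction loss. The sketch below shows that combination using cross-entropy on made-up probability vectors. The function names and the `[not next, is next]` ordering of `nsp_probs` are assumptions for illustration; a real model would compute these probabilities from its output vectors.

```python
import math


def cross_entropy(probs, target):
    """Negative log-likelihood of the target index under a probability vector."""
    return -math.log(probs[target])


def pretraining_loss(mlm_predictions, mlm_targets, nsp_probs, is_next):
    """Mean masked-LM loss plus next-sentence-prediction loss.

    mlm_predictions: one probability vector over the vocabulary per masked position
    mlm_targets: the original token id at each masked position
    nsp_probs: [P(not next), P(is next)], predicted from the [CLS] output
    is_next: 1 if sentence B really follows sentence A, else 0
    """
    mlm_loss = sum(cross_entropy(p, t) for p, t in zip(mlm_predictions, mlm_targets))
    mlm_loss /= len(mlm_targets)
    nsp_loss = cross_entropy(nsp_probs, is_next)
    return mlm_loss + nsp_loss


# Toy example: a 4-token vocabulary and two masked positions.
mlm_predictions = [[0.7, 0.1, 0.1, 0.1], [0.25, 0.25, 0.25, 0.25]]
mlm_targets = [0, 2]
loss = pretraining_loss(mlm_predictions, mlm_targets, [0.2, 0.8], is_next=1)
print(round(loss, 4))  # 1.0946
```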

Each training sample for BERT is a pair of sentences from a document. The two sentences may or may not be consecutive in the document. A [CLS] token is prepended to the first sentence (to represent the class), and a [SEP] token is appended to each sentence (as a separator). Then, the two sentences are concatenated into a sequence of tokens to become a training sample. A small percentage of the tokens in the training sample is masked with a special token [MASK] or replaced with a random token.
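As a rough sketch of how such a pair could become a training sample, the hypothetical function `make_training_sample` below adds [CLS] and [SEP], builds segment ids, and masks tokens. It assumes the 15% selection rate and the 80/10/10 split ([MASK], random token, unchanged) described in the BERT paper, but it selects each token independently, so the masked fraction varies from sample to sample. The tiny vocabulary is made up.

```python
import random

CLS, SEP, MASK = "[CLS]", "[SEP]", "[MASK]"


def make_training_sample(sent_a, sent_b, vocab, rng, mask_rate=0.15):
    """Build one BERT-style pretraining sample from two tokenized sentences.

    Returns the input tokens, the segment ids, and a dict mapping each
    selected position to the original token the model must recover.
    """
    tokens = [CLS] + sent_a + [SEP] + sent_b + [SEP]
    # Segment 0 covers [CLS], sentence A and its [SEP]; segment 1 covers sentence B and its [SEP].
    segments = [0] * (len(sent_a) + 2) + [1] * (len(sent_b) + 1)

    targets = {}
    for i, tok in enumerate(tokens):
        # Special tokens are never selected; other tokens are selected with probability mask_rate.
        if tok in (CLS, SEP) or rng.random() >= mask_rate:
            continue
        targets[i] = tok
        roll = rng.random()
        if roll < 0.8:
            tokens[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            tokens[i] = rng.choice(vocab)  # 10%: replace with a random token
        # Remaining 10%: keep the original token unchanged.
    return tokens, segments, targets


rng = random.Random(42)
vocab = ["the", "cat", "sat", "on", "mat", "it", "was", "warm"]
tokens, segments, targets = make_training_sample(
    ["the", "cat", "sat"], ["it", "was", "warm"], vocab, rng)
print(tokens)
print(segments)
print(targets)
```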

Before they are fed into the BERT model, the tokens in the training sample are transformed into embedding vectors, with positional encodings added. Specific to BERT, segment embeddings are added as well to mark whether each token is from the first or the second sentence.
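The input representation is a sum of three lookups per token. The sketch below uses small random tables in place of learned ones. The table sizes and token ids are assumptions, and a real BERT also applies layer normalization and dropout to the sum, which this sketch omits.

```python
import random


def embedding_table(rows, dim, rng):
    """A toy lookup table: one random dim-sized vector per row id."""
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(rows)]


def bert_input_embeddings(token_ids, segment_ids, tok_table, pos_table, seg_table):
    """Input vector per token = token embedding + position embedding + segment embedding."""
    return [
        [t + p + s for t, p, s in zip(tok_table[tok], pos_table[pos], seg_table[seg])]
        for pos, (tok, seg) in enumerate(zip(token_ids, segment_ids))
    ]


rng = random.Random(0)
vocab_size, max_len, dim = 10, 16, 4
tok_table = embedding_table(vocab_size, dim, rng)
pos_table = embedding_table(max_len, dim, rng)  # one row per position, so max_len caps the input length
seg_table = embedding_table(2, dim, rng)        # segment 0 = first sentence, 1 = second sentence

token_ids = [1, 5, 7, 2, 6, 8, 2]  # hypothetical ids for [CLS] A A [SEP] B B [SEP]
segment_ids = [0, 0, 0, 0, 1, 1, 1]
vectors = bert_input_embeddings(token_ids, segment_ids, tok_table, pos_table, seg_table)
print(len(vectors), len(vectors[0]))  # 7 4
```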

Each input token to the BERT model produces one output vector. In a well-trained BERT model, we expect:

- the output corresponding to a masked token to reveal what the original token was

- the output corresponding to the [CLS] token at the beginning to reveal whether the two sentences are consecutive in the document

Then, the weights trained in the BERT model capture the language context well.

Once you have such a BERT model, you can use it for many downstream tasks. For example, by adding an appropriate classification layer on top of the encoder and feeding only one sentence to the model instead of a pair, you can take the output of the class token [CLS] as input for sentiment classification. This works because the output of the class token is trained to aggregate the attention over the entire input.
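Here is a minimal sketch of such a classification head. It assumes the encoder has already produced one output vector per token, with [CLS] at position 0. A linear layer maps the [CLS] vector to one score per class, and a softmax turns the scores into probabilities. The vectors and weights below are invented for illustration. In practice the head is trained on labeled examples, usually together with the encoder.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_from_cls(encoder_outputs, weights, bias):
    """Apply a linear layer and a softmax to the output vector at the [CLS] position.

    encoder_outputs: one vector per input token; position 0 holds [CLS]
    weights: one row of weights per class; bias: one value per class
    """
    cls_vector = encoder_outputs[0]
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Hypothetical 3-dimensional encoder outputs for "[CLS] great movie [SEP]".
outputs = [[0.9, -0.2, 0.4], [0.1, 0.3, -0.5], [0.0, 0.8, 0.2], [-0.3, 0.1, 0.1]]
# Hypothetical weights for two classes: index 0 = negative, index 1 = positive.
weights = [[-1.0, 0.5, -0.5], [1.0, -0.5, 0.5]]
bias = [0.0, 0.0]
probs = classify_from_cls(outputs, weights, bias)
print(probs)
```

Only the first output vector is used. The other token outputs still influence it indirectly, because the [CLS] output was computed with attention over the whole input.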

Another instance is to take a query as the primary sentence and the textual content (e.g., a paragraph) because the second sentence, then the output token from the second sentence can mark the place the place the reply to the query rested. It works as a result of the output of every token reveals some details about that token within the context of the complete enter.

Using Pre-Trained BERT Model for Summarization

A transformer mannequin takes a very long time to coach from scratch. The BERT mannequin would take even longer. But the aim of BERT is to create one mannequin that may be reused for a lot of completely different duties.

There are pre-trained BERT models that you can use readily. In the following, you will see a few use cases. The text used in the following examples is from:

Theoretically, a BERT model is an encoder that maps each input token to an output vector, which could extend to a token sequence of any length. In practice, limitations in the implementation of other components restrict the input size. For example, the original BERT checkpoints learn positional embeddings for at most 512 tokens. Mostly, a few hundred tokens should work, as not every implementation can take thousands of tokens in one shot. You can save the entire article in article.txt (a copy is available here). If your model needs a smaller text, you can use only a few paragraphs from it.
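If the article is too long for your model, one simple approach is to group whole paragraphs into chunks that stay under a word budget. The helper `chunk_paragraphs` below is hypothetical. It assumes paragraphs are separated by blank lines and counts whitespace-separated words. That count underestimates the number of subword tokens a BERT tokenizer produces, so leave some headroom.

```python
def chunk_paragraphs(text, max_words=300):
    """Group whole paragraphs into chunks of at most max_words words.

    Words are counted by whitespace splitting, which only approximates the
    subword token count a BERT tokenizer would produce (usually higher).
    A single paragraph longer than max_words becomes its own chunk.
    """
    chunks, current, count = [], [], 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        n = len(para.split())
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and count + n > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


sample = "First paragraph here.\n\nSecond one is a bit longer than that.\n\nThird."
for chunk in chunk_paragraphs(sample, max_words=8):
    print(repr(chunk))
```

For the article, you could read article.txt, pass its contents to `chunk_paragraphs`, and feed only the first chunk to the model.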

First, let’s explore the task of summarization. Using BERT, the idea is to extract a few sentences from the original text that represent the entire text. This task is similar to next sentence prediction, in which, given a sentence and the text, you want to classify whether they are related.
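To illustrate the extractive idea without downloading a model, the sketch below scores each sentence by its cosine similarity to the whole document and keeps the top few in their original order. It is a deliberately simplified stand-in. Word counts replace BERT sentence embeddings, and the sentence splitter is naive. The bert-extractive-summarizer documentation describes a related but different approach: it clusters BERT sentence embeddings and chooses the sentences closest to the cluster centroids.

```python
import math
import re
from collections import Counter


def split_sentences(text):
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def embed(sentence):
    # Stand-in for a BERT sentence embedding: lowercase word counts.
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def extractive_summary(text, num_sentences=3):
    sentences = split_sentences(text)
    vectors = [embed(s) for s in sentences]
    centroid = sum(vectors, Counter())  # word counts of the whole document
    ranked = sorted(range(len(sentences)),
                    key=lambda i: cosine(vectors[i], centroid), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore the original sentence order
    return " ".join(sentences[i] for i in keep)


doc = ("The bank raised rates. The bank said rates may rise again. "
       "Cats like naps. Rates matter to the bank.")
print(extractive_summary(doc, num_sentences=3))
```

The off-topic sentence about cats shares few words with the rest of the document, so it is the one left out.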

To do that, you need the Python module bert-extractive-summarizer:

```
pip install bert-extractive-summarizer
```

It is a wrapper around some Hugging Face models that provides a summarization pipeline. Hugging Face is a platform that allows you to publish machine learning models, mainly for NLP tasks.

Once you have installed bert-extractive-summarizer, producing a summary takes just a few lines of code:

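```python
from summarizer import Summarizer

# Read the article to summarize
with open("article.txt") as f:
    text = f.read()

# Load DistilBERT through the bert-extractive-summarizer wrapper
model = Summarizer('distilbert-base-uncased')
# Select the 3 sentences that best represent the whole text
result = model(text, num_sentences=3)
print(result)
```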

This gives the output:

```
Amid the political turmoil of outgoing British Prime Minister Liz Truss’s short-lived government, the Bank of England has found itself in the fiscal-financial crossfire. Whatever government comes next, it is important that the BOE learns the right lessons. According to a press release by the BOE’s Deputy Governor for Financial Stability, Jon Cunliffe, the MPC was merely “informed of the issues in the gilt market and briefed in advance of the operation, including its financial-stability rationale and the temporary and targeted nature of the purchases.”
```

That’s the whole code! Behind the scenes, spaCy was used for some preprocessing, and Hugging Face was used to launch the model. The model used was named distilbert-base-uncased. DistilBERT is a simplified BERT model that runs faster and uses less memory. The model is an “uncased” one, which means the input text is lowercased before tokenization, so uppercase and lowercase letters are treated the same once they are transformed into embedding vectors.

The output from the summarizer model is a string. Because you specified num_sentences=3 when invoking the model, the summary consists of three sentences selected from the text. This approach is called extractive summarization. The alternative is abstractive summarization, in which the summary is generated rather than extracted from the text. That would need a different model than BERT.

Using Pre-Trained BERT Model for Question-Answering

Another example of using BERT is to match questions to answers. You give both the question and the text to the model and look for the output marking the beginning and the end of the answer in the text.

A quick example takes just a few lines of code, reusing the same text as in the previous example:

```python
from transformers import pipeline

# Read the context text and state the question
with open("article.txt") as f:
    text = f.read()
question = "What is BOE doing?"

# DistilBERT fine-tuned for extractive question answering on SQuAD
answering = pipeline("question-answering", model='distilbert-base-uncased-distilled-squad')
result = answering(question=question, context=text)
print(result)
```

Here, Hugging Face is used directly. If you have installed the module used in the previous example, the Hugging Face Python module is a dependency that you have already installed. Otherwise, you may need to install the transformers package with pip.

To actually run a Hugging Face model, you also need a deep learning backend. The command below installs both PyTorch and TensorFlow, although either one is enough for this pipeline:

```
pip install torch tensorflow
```

The output of the code above is a Python dictionary, as follows:

```
{'score': 0.42369240522384644, 'start': 1261, 'end': 1344, 'answer': 'to maintain or restore market liquidity in systemically important\nfinancial markets'}
```

This is where you find the answer (a span of the input text), along with its start and end positions, given as character offsets into the input text. The score can be regarded as the model’s confidence that the answer fits the question.

Behind the scenes, the model generated a probability score for each position being the beginning of the text that answers the question, as well as a score for each position being the ending. The answer is then extracted by finding the locations with the highest probabilities.
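The span-selection step can be sketched as follows. The start and end scores are turned into probabilities with a softmax, and the code searches for the pair (start, end) that maximizes their product, with the start not after the end and the length bounded. This is a simplified illustration with invented tokens and scores. The `max_answer_len` limit of 15 mirrors a default in the Hugging Face question-answering pipeline, which also maps the chosen token positions back to character offsets in the context.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def best_span(start_logits, end_logits, max_answer_len=15):
    """Return (start, end, score) maximizing P(start) * P(end) with start <= end."""
    p_start = softmax(start_logits)
    p_end = softmax(end_logits)
    best = (0, 0, -1.0)
    for s in range(len(p_start)):
        # Only consider ends at or after the start, within max_answer_len tokens.
        for e in range(s, min(s + max_answer_len, len(p_end))):
            score = p_start[s] * p_end[e]
            if score > best[2]:
                best = (s, e, score)
    return best


# Hypothetical context tokens and per-token scores from a QA model.
tokens = ["the", "bank", "bought", "gilts", "to", "restore", "liquidity"]
start_logits = [0.1, 0.2, 0.3, 0.1, 2.5, 0.4, 0.2]
end_logits = [0.0, 0.1, 0.2, 0.3, 0.1, 0.5, 2.8]
s, e, score = best_span(start_logits, end_logits)
print(tokens[s:e + 1], round(score, 3))
```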

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Papers

Summary

In this tutorial, you discovered what BERT is and how to use a pre-trained BERT model.

Specifically, you learned:

- How BERT is created as an extension to Transformer models

- How to use pre-trained BERT models for extractive summarization and question answering