ChatGPT, DALL-E, Stable Diffusion, and other generative AIs have taken the world by storm. They create fabulous poetry and images. They’re seeping into every nook of our world, from marketing to writing legal briefs and drug discovery. They seem like the poster child for a man-machine mind meld success story.

But under the hood, things are looking less peachy. These systems are massive energy hogs, requiring data centers that spit out thousands of tons of carbon emissions, further stressing an already volatile climate, and suck up billions of dollars. As the neural networks become more sophisticated and more widely used, energy consumption is likely to skyrocket even more.

Plenty of ink has been spilled on generative AI’s carbon footprint. Its energy demand could be its downfall, hindering development as it grows further. Generative AI is “expected to stall soon if it continues to rely on standard computing hardware,” said Dr. Hechen Wang at Intel Labs.

It’s high time we build sustainable AI.

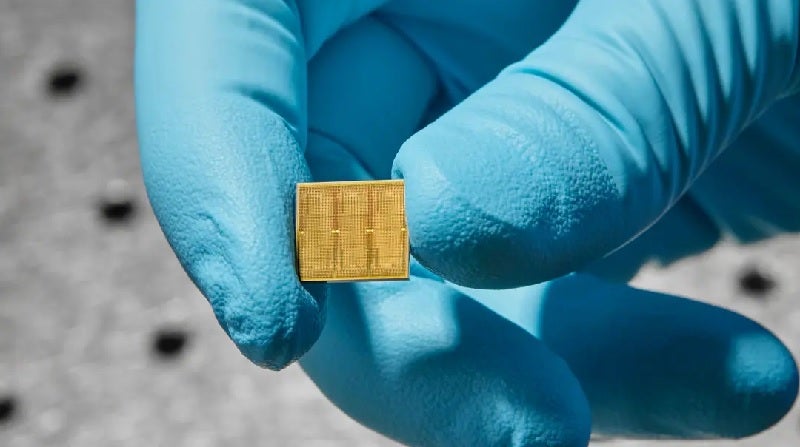

This week, a study from IBM took a practical step in that direction. The team created a 14-nanometer analog chip packed with 35 million memory units. Unlike current chips, computation happens directly within those units, nixing the need to shuttle data back and forth and, in turn, saving energy.

Data shuttling can increase energy consumption anywhere from 3 to 10,000 times above what’s required for the actual computation, said Wang.

The chip was highly efficient when challenged with two speech recognition tasks. One, Google Speech Commands, is small but practical. Here, speed is key. The other, Librispeech, is a mammoth system that helps transcribe speech to text, taxing the chip’s ability to process massive amounts of data.

When pitted against conventional computers, the chip performed just as accurately but finished the job faster and with far less energy, using less than a tenth of what’s normally required for some tasks.

“These are, to our knowledge, the first demonstrations of commercially relevant accuracy levels on a commercially relevant model…with efficiency and massive parallelism” for an analog chip, the team said.

Brainy Bytes

This is hardly the first analog chip. However, it pushes the idea of neuromorphic computing into the realm of practicality: a chip that could one day power your phone, smart home, and other devices with an efficiency near that of the brain.

Um, what? Let’s back up.

Current computers are built on the Von Neumann architecture. Think of it as a house with multiple rooms. One, the central processing unit (CPU), analyzes data. Another stores memory.

For each calculation, the computer needs to shuttle data back and forth between those two rooms, which takes time and energy and reduces efficiency.

The brain, in contrast, combines both computation and memory into a studio apartment. Its mushroom-like junctions, called synapses, both form neural networks and store memories at the same location. Synapses are highly flexible, adjusting how strongly they connect with other neurons based on stored memory and new learnings. The strengths of these connections are called “weights.” Our brains quickly adapt to an ever-changing environment by adjusting these synaptic weights.

IBM has been at the forefront of designing analog chips that mimic brain computation. A breakthrough came in 2016, when the company introduced a chip based on a fascinating material usually found in rewritable CDs. The material changes its physical state and shape-shifts from a goopy soup to crystal-like structures when zapped with electricity, akin to a digital 0 and 1.

Here’s the key: the chip can also exist in a hybrid state. In other words, similar to a biological synapse, the artificial one can encode a myriad of different weights, not just binary ones, allowing it to accumulate multiple calculations without having to move a single bit of data.
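To make the contrast with binary storage concrete, here is a minimal Python sketch. It assumes a hypothetical memory cell that can hold one of `levels` evenly spaced states between 0 and 1. The function name, the level counts, and the sample weights are illustrative only and do not come from the IBM work.

```python
def store_weight(weight: float, levels: int) -> float:
    """Snap a weight in [0, 1] to the nearest of `levels` evenly spaced states."""
    if levels < 2:
        raise ValueError("a memory cell needs at least two states")
    clipped = min(max(weight, 0.0), 1.0)   # keep the weight inside the cell's range
    step = 1.0 / (levels - 1)              # spacing between neighboring states
    return round(clipped / step) * step


# Hypothetical weights a network might want to store.
weights = [0.12, 0.42, 0.77]

# A binary cell (2 states) versus an assumed 16-state analog cell.
binary = [store_weight(w, levels=2) for w in weights]
multi = [store_weight(w, levels=16) for w in weights]

# Worst-case storage error for each kind of cell.
binary_error = max(abs(w - s) for w, s in zip(weights, binary))
multi_error = max(abs(w - s) for w, s in zip(weights, multi))
print(binary)                      # [0.0, 0.0, 1.0]
print(multi_error < binary_error)  # True
```

With only two states, every weight collapses to 0 or 1. With more intermediate states, the stored value stays much closer to the intended weight, which is the property that lets a single device stand in for a synapse.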

Jekyll and Hyde

The new study built on previous work by also using phase-change materials. The basic components are “memory tiles.” Each is jam-packed with thousands of phase-change devices in a grid structure. The tiles readily communicate with each other.

Each tile is controlled by a programmable local controller, allowing the team to tweak the component, which is akin to a neuron, with precision. The chip further stores hundreds of commands in sequence, creating a black box of sorts that allows the team to dig back in and analyze its performance.

Overall, the chip contained 35 million phase-change memory structures. The connections amounted to 45 million synapses: a far cry from the human brain, but very impressive on a 14-nanometer chip.

These mind-numbing numbers present a problem for initializing the AI chip: there are simply too many parameters to search through. The team tackled the problem with what amounts to an AI kindergarten, pre-programming synaptic weights before computations begin. (It’s a bit like seasoning a new cast-iron pan before cooking with it.)

They “tailored their network-training techniques with the benefits and limitations of the hardware in mind,” and then set the weights for the best results, explained Wang, who was not involved in the study.

It worked out. In one initial test, the chip readily churned through 12.4 trillion operations per second for each watt of power. That energy efficiency is “tens or even hundreds of times higher than for the most powerful CPUs and GPUs,” said Wang.
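Because a watt is one joule per second, operations per second per watt is the same quantity as operations per joule. The short Python sketch below converts the 12.4 trillion figure into energy per operation. It uses only that number from the test; the variable names are illustrative.

```python
# Figure reported for the chip's initial test: operations per second per watt.
OPS_PER_SECOND_PER_WATT = 12.4e12

# 1 watt = 1 joule per second, so ops/s/W is numerically equal to ops per joule.
ops_per_joule = OPS_PER_SECOND_PER_WATT

# Invert to get the energy spent on a single operation.
joules_per_op = 1.0 / ops_per_joule
femtojoules_per_op = joules_per_op * 1e15  # 1 joule = 1e15 femtojoules

print(f"{femtojoules_per_op:.1f} fJ per operation")  # 80.6 fJ per operation
```

In other words, each operation costs roughly 80 femtojoules, or about 80 quadrillionths of a joule.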

The chip nailed a core computational process underlying deep neural networks with just a few classical hardware components in the memory tiles. In contrast, traditional computers need hundreds or thousands of transistors (a basic unit that performs calculations).
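The core process in question is the multiply-accumulate behind every matrix-vector product in a neural network. In analog in-memory designs generally, each weight is stored as a conductance, inputs arrive as voltages, and the currents flowing into a shared wire add up on their own (Ohm’s law for each cell, Kirchhoff’s current law for each column). The Python sketch below simulates that idea in software. The function name and all values are hypothetical, and it is not a model of IBM’s actual circuit.

```python
def crossbar_mac(voltages, conductances):
    """Return per-column currents: I[j] = sum over i of V[i] * G[i][j]."""
    if len(voltages) != len(conductances):
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law gives each cell's current; summing down the
            # column mimics the shared wire collecting those currents.
            currents[j] += v * conductances[i][j]
    return currents


# Hypothetical inputs (as voltages) and stored weights (as conductances).
inputs = [1.0, 0.5, 0.0]
weights = [
    [0.2, 0.8],
    [0.4, 0.6],
    [0.9, 0.1],
]

outputs = crossbar_mac(inputs, weights)
print(outputs)  # approximately [0.4, 1.1]
```

In software this takes a nested loop. In the analog array, every cell contributes its current at once, so a whole column sum is ready in a single step without moving the weights anywhere.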

Talk of the Town

The team next challenged the chip with two speech recognition tasks. Each one stressed a different facet of the chip.

The first test was speed when challenged with a relatively small database. Using the Google Speech Commands database, the task required the AI chip to spot 12 keywords in a set of roughly 65,000 clips of thousands of people speaking 30 short words (“small” is relative in the deep learning universe). When using an accepted benchmark, MLPerf, the chip performed seven times faster than in previous work.

The chip also shone when challenged with a large database, Librispeech. The corpus contains over 1,000 hours of read English speech commonly used to train AI for parsing speech and automatic speech-to-text transcription.

Overall, the team used five chips to eventually encode more than 45 million weights using data from 140 million phase-change devices. When pitted against conventional hardware, the chip was roughly 14 times more energy-efficient, processing nearly 550 samples every second per watt, with an error rate a bit over 9 percent.

Although impressive, analog chips are still in their infancy. They show “enormous promise for combating the sustainability problems associated with AI,” said Wang, but the path forward requires clearing a few more hurdles.

One factor is finessing the design of the memory technology itself and its surrounding components, that is, how the chip is laid out. IBM’s new chip does not yet contain all the elements needed. A critical next step is integrating everything onto a single chip while maintaining its efficacy.

On the software side, we’ll also need algorithms specifically tailored to analog chips, and software that readily translates code into language that machines can understand. As these chips become increasingly commercially viable, developing dedicated applications will keep the dream of an analog chip future alive.

“It took decades to shape the computational ecosystems in which CPUs and GPUs operate so successfully,” said Wang. “And it will probably take years to establish the same sort of environment for analog AI.”

Image Credit: Ryan Lavine for IBM