Generative AI was top-of-mind for NVIDIA at the computer graphics conference SIGGRAPH on Tuesday, Aug. 8. A Hugging Face training service powered by NVIDIA DGX Cloud, the newest version of NVIDIA AI Enterprise (4.0) and the AI Workbench toolkit headlined the announcements about enterprise and industrial generative AI deployments.

‘Superchip’ with HBM3e memory supports AI growth

The next installment in the Grace Hopper platform line will be the GH200, built on a Grace Hopper ‘superchip,’ NVIDIA announced on Tuesday. It consists of a single server with 144 Arm Neoverse cores, eight petaflops of AI performance and 282GB of memory based on the HBM3e standard. HBM3e delivers 10TB/sec of bandwidth, NVIDIA said, three times the memory bandwidth of the previous version, which used HBM3.

“To meet surging demand for generative AI, data centers require accelerated computing platforms with specialized needs,” said Jensen Huang, founder and CEO of NVIDIA, in a press release. “The new GH200 Grace Hopper Superchip platform delivers this with exceptional memory technology and bandwidth to improve throughput, the ability to connect GPUs to aggregate performance without compromise, and a server design that can be easily deployed across the entire data center.”

NVIDIA DGX Cloud AI supercomputing comes to Hugging Face

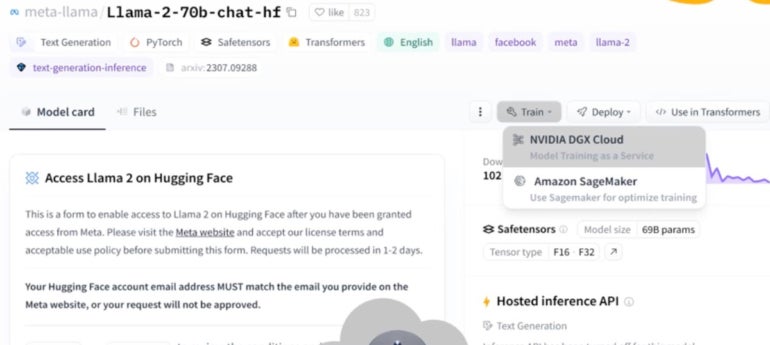

NVIDIA’s DGX Cloud AI supercomputing will now be accessible through Hugging Face (Figure A) for those who want to train and fine-tune the generative AI models they find on the Hugging Face marketplace. Organizations that want to use generative AI for highly specific work often need to train it on their own data, a process that can require a lot of bandwidth.

Figure A

“It’s a very natural relationship between Hugging Face and NVIDIA, where Hugging Face is the best place to find all the starting points, and then NVIDIA DGX Cloud is the best place to do your generative AI work with those models,” said Manuvir Das, NVIDIA vice president of enterprise computing, during a pre-briefing for the conference.

DGX Cloud includes NVIDIA Networking (a high-performance, low-latency fabric) and eight NVIDIA H100 or A100 80GB Tensor Core GPUs, for a total of 640GB of GPU memory per node.
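The 640GB per-node figure follows directly from the GPU count and per-GPU capacity. As a minimal sketch, assuming every GPU in the node is an 80GB part (the spec states 80GB for both the H100 and A100 options), the arithmetic looks like this; the constant and function names are illustrative only and are not part of any NVIDIA API.

```python
# Illustrative sketch: aggregate GPU memory in one DGX Cloud node, using the
# figures quoted in the article (eight GPUs, 80GB each). Names are hypothetical
# and do not correspond to any NVIDIA software interface.
GPUS_PER_NODE = 8
MEMORY_PER_GPU_GB = 80  # assumed: H100 or A100 80GB variant


def node_gpu_memory_gb(gpus: int = GPUS_PER_NODE,
                       per_gpu_gb: int = MEMORY_PER_GPU_GB) -> int:
    """Return the total GPU memory across all GPUs in a node, in gigabytes."""
    return gpus * per_gpu_gb


if __name__ == "__main__":
    print(f"{node_gpu_memory_gb()}GB per node")  # prints "640GB per node"
```

The 640GB is the sum across eight separate GPUs, each with its own memory, rather than a single pool on one device.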

DGX Cloud AI training will incur an additional fee within Hugging Face, although NVIDIA didn’t detail what it will cost. The joint offering will be available starting in the next few months.

“People around the world are making new connections and discoveries with generative AI tools, and we’re still only in the early days of this technology shift,” said Clément Delangue, co-founder and CEO of Hugging Face, in an NVIDIA press release. “Our collaboration will bring NVIDIA’s most advanced AI supercomputing to Hugging Face to enable companies to take their AI destiny into their own hands with open source.”

AI Enterprise 4.0 revealed

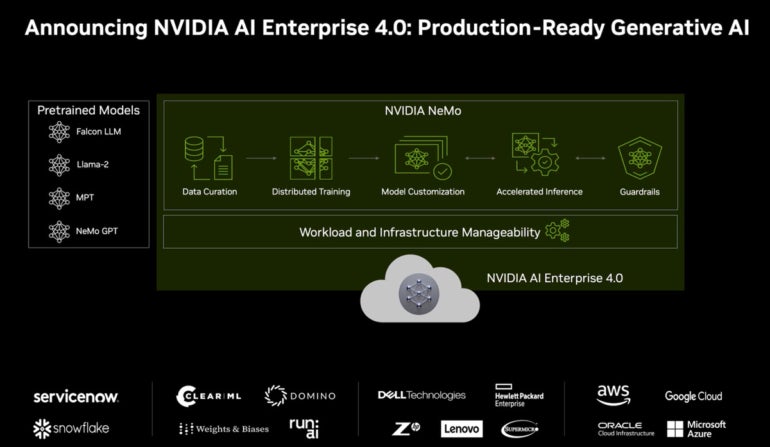

NVIDIA’s AI Enterprise, a suite of AI and data analytics software for building generative AI solutions (Figure B), will soon move to version 4.0. The major change in this version is the addition of NeMo, a platform with custom tooling for generative AI curation, training customization, inference, guardrails and more. NeMo brings a cloud-native framework for building and deploying enterprise applications that use large language models.

Machine learning providers ClearML, Domino Data Lab, Run:AI and Weights & Biases have partnered with NVIDIA to integrate their services with AI Enterprise 4.0.

Figure B

NVIDIA brings the complete gen AI pipeline in-house with AI Workbench

AI Enterprise 4.0 pairs with NVIDIA AI Workbench, a workspace designed to make it easier for organizations to spin up AI applications on a local PC or workstation. With AI Workbench, projects can be moved easily between PCs, data centers, public clouds and NVIDIA’s DGX Cloud.

AI Workbench is “a way for you to uniformly and consistently package up your AI work and move it from one place to another,” said Das.

First, developers can bring all of their models, frameworks, SDKs and libraries from open-source repositories and the NVIDIA AI platform into one workspace. Then, they can create, test and fine-tune the generative AI products they build on an RTX PC or workstation. They can also scale up to data center and cloud computing resources if needed.

“Most enterprises are building the expertise, budget or data center resources to manage the high complexity of AI software and systems,” said Joey Zwicker, vice president of AI strategy at HPE, in a press release from NVIDIA. “We’re excited about NVIDIA AI Workbench’s potential to simplify generative AI project creation and one-click training and deployment.”

AI Workbench will be available in fall 2023. It will be free as part of other product subscriptions, including AI Enterprise.

New RTX workstations and GPUs support generative AI for enterprise

On the hardware side, NVIDIA announced new RTX workstations (Figure C) with RTX 6000 GPUs and built-in enterprise software that supports AI. These are designed for the heavy GPU power requirements of industrial digitalization or enterprise 3D visualization.

The newest members of the Ada workstation GPU family are the RTX 5000, RTX 4500 and RTX 4000. The RTX 5000 is available now, with the RTX 4500 and RTX 4000 available in October and September 2023, respectively.

Along the same lines, NVIDIA announced a new architecture for OVX servers. These servers will run up to eight L40S Ada GPUs each and are also compatible with AI Enterprise software. All of these systems are suited to content creation, such as AI-generated images for graphic design, animation or architecture.

Figure C

“With the performance boost and large frame buffer of RTX 5000 GPUs, our large, complex models look great in virtual reality, which gives our clients a more comfortable and contextual experience,” said Dan Stine, director of design technology at architectural firm Lake|Flato, in an NVIDIA press release.

Omniverse embraces OpenUSD for digital twinning

Finally, NVIDIA detailed updates to Omniverse, a development platform for connecting, building and operating industrial digitalization applications with the 3D visualization standard OpenUSD. Omniverse has applications in 3D animation and game development as well as in automotive manufacturing. Many of the updates were tied to NVIDIA’s new partnership around Universal Scene Description (OpenUSD), an open source format for creating objects and other elements in 3D graphics.

“Industrial enterprises are racing to digitalize their workflows, increasing the demand for connected, interoperable, 3D software ecosystems,” said Rev Lebaredian, vice president of Omniverse and simulation technology at NVIDIA, in a press release.

Several new USD-enabled integrations for Omniverse will be available, including one with Firefly, Adobe’s AI image generation application.

Companies using Omniverse for industrial design include Boston Dynamics, which uses it to simulate robotics and control systems, NVIDIA said.

The newest version of Omniverse is now in beta and will be available to Omniverse Enterprise customers soon.