An important aspect of human vision is our ability to comprehend 3D shape from the 2D images we observe. Achieving this kind of understanding with computer vision systems has been a fundamental challenge in the field. Many successful approaches rely on multi-view data, where two or more images of the same scene are available from different perspectives, which makes it much easier to infer the 3D shape of objects in the images.

There are, however, many situations where it would be useful to know 3D structure from a single image, but this problem is generally difficult or impossible to solve. For example, it isn’t necessarily possible to tell the difference between an image of an actual beach and an image of a flat poster of the same beach. However, it is possible to estimate 3D structure based on what kinds of 3D objects occur commonly and what similar structures look like from different perspectives.

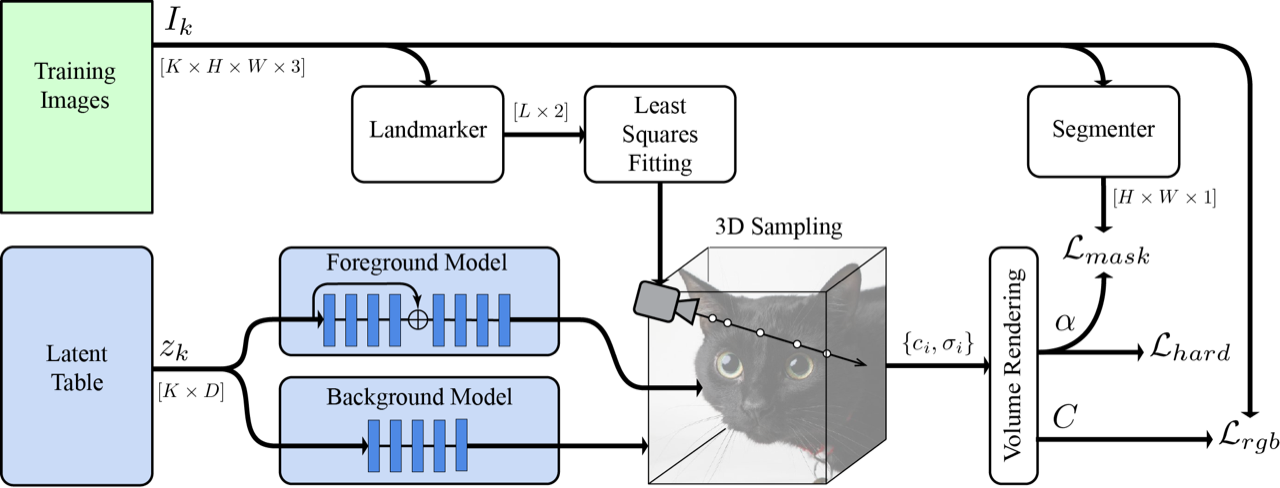

In “LOLNeRF: Learn from One Look”, presented at CVPR 2022, we propose a framework that learns to model 3D structure and appearance from collections of single-view images. LOLNeRF learns the typical 3D structure of a class of objects, such as cars, human faces or cats, but only from single views of any one object, never the same object twice. We build our approach by combining Generative Latent Optimization (GLO) and neural radiance fields (NeRF) to achieve state-of-the-art results for novel view synthesis and competitive results for depth estimation.

Combining GLO and NeRF

GLO is a general method that learns to reconstruct a dataset (such as a set of 2D images) by co-learning a neural network (decoder) and a table of codes (latents) that is also an input to the decoder. Each of these latent codes re-creates a single element (such as an image) from the dataset. Because the latent codes have fewer dimensions than the data elements themselves, the network is forced to generalize, learning common structure in the data (such as the general shape of dog snouts).
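As a rough illustration of the GLO idea (not the LOLNeRF implementation), the toy sketch below jointly optimizes one scalar latent code per data element together with the weights of a linear "decoder" using plain gradient descent. The names `glo_loss` and `train_glo`, the linear decoder, and all hyperparameters are assumptions chosen for brevity.

```python
import random

def glo_loss(latents, weights, data):
    """Mean squared reconstruction error of the toy decoder x_hat = z * w."""
    total = 0.0
    for z, x in zip(latents, data):
        total += sum((z * w - xd) ** 2 for w, xd in zip(weights, x))
    return total / len(data)

def train_glo(data, steps=2000, lr=0.05, seed=0):
    """Co-optimize a table of latent codes and decoder weights (hypothetical toy setup)."""
    rng = random.Random(seed)
    n, dim = len(data), len(data[0])
    latents = [rng.uniform(-0.1, 0.1) for _ in data]  # one learnable code per element
    weights = [0.1] * dim                             # shared decoder parameters
    for _ in range(steps):
        grad_w = [0.0] * dim
        grad_z = [0.0] * n
        for i, (z, x) in enumerate(zip(latents, data)):
            for d in range(dim):
                err = z * weights[d] - x[d]
                grad_w[d] += 2.0 * err * z / n           # gradient w.r.t. decoder weight
                grad_z[i] += 2.0 * err * weights[d] / n  # gradient w.r.t. this element's code
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        latents = [z - lr * g for z, g in zip(latents, grad_z)]
    return latents, weights
```

Because each 3D data element here is squeezed through a single scalar code, the decoder has to capture the direction the elements share, which is the same pressure toward common structure described above.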

NeRF is a technique that is very good at reconstructing a static 3D object from 2D images. It represents an object with a neural network that outputs color and density for each point in 3D space. Color and density values are accumulated along rays, one ray for each pixel in a 2D image. These are then combined using standard computer graphics volume rendering to compute a final pixel color. Importantly, all these operations are differentiable, allowing for end-to-end supervision. By enforcing that each rendered pixel (of the 3D representation) matches the color of ground truth (2D) pixels, the neural network creates a 3D representation that can be rendered from any viewpoint.
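The accumulation along a ray can be sketched as standard front-to-back alpha compositing. This is a minimal, non-differentiable illustration in plain Python; the function name `composite_ray` and its argument layout are assumptions, and a real system would run the same arithmetic in an autodiff framework.

```python
import math

def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray, front to back.

    densities: non-negative density at each sample
    colors:    (r, g, b) at each sample
    deltas:    distance covered by each sample along the ray
    Returns (pixel_rgb, weights), where weights[i] is sample i's contribution.
    """
    transmittance = 1.0  # fraction of light not yet absorbed before this sample
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        w = transmittance * alpha
        weights.append(w)
        for c in range(3):
            pixel[c] += w * rgb[c]
        transmittance *= 1.0 - alpha
    return pixel, weights
```

A sample in empty space (zero density) gets zero weight, and a very dense sample absorbs nearly all remaining light, so samples behind it contribute almost nothing.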

We combine NeRF with GLO by assigning each object a latent code and concatenating it with standard NeRF inputs, giving it the ability to reconstruct multiple objects. Following GLO, we co-optimize these latent codes along with network weights during training to reconstruct the input images. Unlike standard NeRF, which requires multiple views of the same object, we supervise our method with only single views of any one object (but multiple examples of that type of object). Because NeRF is inherently 3D, we can then render the object from arbitrary viewpoints. Combining NeRF with GLO gives it the ability to learn common 3D structure across instances from only single views while still retaining the ability to recreate specific instances of the dataset.
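The concatenation step might look roughly like the sketch below. It assumes a common sin/cos positional encoding of the 3D point as the "standard NeRF input"; the helper names, the number of frequencies, and the latent size are illustrative assumptions, not values taken from the paper.

```python
import math

def positional_encoding(point, num_freqs=4):
    """NeRF-style encoding: raw coordinates plus sin/cos at doubling frequencies."""
    features = list(point)
    for k in range(num_freqs):
        for coord in point:
            features.append(math.sin((2.0 ** k) * coord))
            features.append(math.cos((2.0 ** k) * coord))
    return features

def conditioned_input(point, latent_code, num_freqs=4):
    """Append the per-object latent code to the encoded 3D point.

    The resulting vector is what a latent-conditioned radiance network
    would take as input (hypothetical layout).
    """
    return positional_encoding(point, num_freqs) + list(latent_code)
```

Every sample point for a given image shares the same latent code, so a single network can represent many objects by switching codes.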

Camera Estimation

In order for NeRF to work, it needs to know the exact camera location, relative to the object, for each image. Unless this was measured when the image was taken, it is generally unknown. Instead, we use the MediaPipe Face Mesh to extract five landmark locations from the images. Each of these 2D predictions corresponds to a semantically consistent point on the object (e.g., the tip of the nose or corners of the eyes). We can then derive a set of canonical 3D locations for the semantic points, along with estimates of the camera poses for each image, such that the projection of the canonical points into the images is as consistent as possible with the 2D landmarks.
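The consistency criterion can be read as a reprojection error. The sketch below evaluates that error for one image under a simple pinhole camera; the camera parameterization (rotation matrix, translation, single focal length) and the function names are assumptions, and the optimizer that would minimize this quantity over poses and canonical points is omitted.

```python
def project(point, rotation, translation, focal):
    """Pinhole projection of a 3D point; rotation is a 3x3 list of rows."""
    cam = [
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    return (focal * cam[0] / cam[2], focal * cam[1] / cam[2])

def reprojection_error(canonical_points, landmarks_2d, rotation, translation, focal):
    """Mean squared distance between projected canonical points and 2D landmarks."""
    total = 0.0
    for p, (u, v) in zip(canonical_points, landmarks_2d):
        pu, pv = project(p, rotation, translation, focal)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return total / len(canonical_points)
```

Minimizing this error jointly over the shared canonical points and the per-image camera parameters is one way to obtain the pose estimates described above.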

Hard Surface and Mask Losses

Standard NeRF is effective for accurately reproducing the images, but in our single-view case, it tends to produce images that look blurry when viewed off-axis. To address this, we introduce a novel hard surface loss, which encourages the density to adopt sharp transitions from exterior to interior regions, reducing blurring. This essentially tells the network to create “solid” surfaces, and not semi-transparent ones like clouds.
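One way to express such a preference, sketched here under the assumption that the loss is the negative log of a two-mode Laplacian prior on the per-sample rendering weights (with modes at 0 and 1), is shown below. The exact form and weighting used in practice may differ; the function name is hypothetical.

```python
import math

def hard_surface_loss(weights):
    """Penalize rendering weights that sit between 0 and 1.

    Assumed prior: P(w) proportional to exp(-|w|) + exp(-|1 - w|),
    so the loss is lowest when each weight is near 0 or near 1.
    """
    total = 0.0
    for w in weights:
        total += -math.log(math.exp(-abs(w)) + math.exp(-abs(1.0 - w)))
    return total / len(weights)
```

Under this assumed form, a ray whose weights are all close to 0 or 1 (a solid surface) scores lower than a ray whose weights are spread around 0.5 (a fog-like volume).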

We also obtained better results by splitting the network into separate foreground and background networks. We supervised this separation with a mask from the MediaPipe Selfie Segmenter and a loss to encourage network specialization. This allows the foreground network to specialize only on the object of interest, and not get “distracted” by the background, increasing its quality.
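A simplified picture of the foreground/background split: the foreground's accumulated opacity along a ray decides how much of the background color shows through, and that opacity is compared with the segmentation mask. The compositing rule and the squared-error mask loss below are assumptions made for illustration, as are the function names.

```python
def composite_with_background(fg_rgb, fg_weights, bg_rgb):
    """Blend an accumulated foreground color over a background color.

    fg_rgb:     foreground color already weighted by fg_weights (premultiplied)
    fg_weights: per-sample rendering weights of the foreground along the ray
    bg_rgb:     color predicted for the background along the same ray
    """
    opacity = sum(fg_weights)  # how much of the ray the foreground absorbs
    pixel = [f + (1.0 - opacity) * b for f, b in zip(fg_rgb, bg_rgb)]
    return pixel, opacity

def mask_loss(opacities, mask):
    """Squared error between foreground opacity and a 0/1 segmentation mask."""
    return sum((a - m) ** 2 for a, m in zip(opacities, mask)) / len(mask)
```

Pixels the mask marks as foreground push the foreground opacity toward 1, and background pixels push it toward 0, which is one way to encourage each network to specialize.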

Results

We surprisingly found that fitting only five key points gave accurate enough camera estimates to train a model for cats, dogs, or human faces. This means that given only a single view of your beloved cats Schnitzel, Widget and friends, you can create a new image from any other angle.

Top: example cat images from AFHQ. Bottom: A synthesis of novel 3D views created by LOLNeRF.

Conclusion

We’ve developed a technique that is effective at discovering 3D structure from single 2D images. We see great potential in LOLNeRF for a variety of applications and are currently investigating potential use-cases.

Interpolation of feline identities from linear interpolation of learned latent codes for different examples in AFHQ.
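Linear interpolation between two latent codes is simple to write down. The helper below is an illustrative sketch with a hypothetical name; each returned code would then be fed to the trained model to render one intermediate identity.

```python
def interpolate_latents(code_a, code_b, num_steps):
    """Return num_steps codes evenly spaced on the line from code_a to code_b.

    num_steps must be at least 2 so that both endpoints are included.
    """
    codes = []
    for i in range(num_steps):
        t = i / (num_steps - 1)
        codes.append([(1.0 - t) * a + t * b for a, b in zip(code_a, code_b)])
    return codes
```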

Code Release

We acknowledge the potential for misuse and the importance of acting responsibly. To that end, we will only release the code for reproducibility purposes, but will not release any trained generative models.

Acknowledgements

We would like to thank Andrea Tagliasacchi, Kwang Moo Yi, Viral Carpenter, David Fleet, Danica Matthews, Florian Schroff, Hartwig Adam and Dmitry Lagun for continuous help in building this technology.