
During the first weeks of February, we asked recipients of our Data and AI Newsletters to participate in a survey on AI adoption in the enterprise. We were interested in answering two questions. First, we wanted to understand how the use of AI grew in the past year. We were also interested in the practice of AI: how developers work, what techniques and tools they use, what their concerns are, and what development practices are in place.

The most striking result is the sheer number of respondents. In our 2020 survey, which reached the same audience, we had 1,239 responses. This year, we had a total of 5,154. After eliminating 1,580 respondents who didn’t complete the survey, we’re left with 3,574 responses, almost three times as many as last year. It’s possible that pandemic-induced boredom led more people to respond, but we doubt it. Whether they’re putting products into production or just kicking the tires, more people are using AI than ever before.
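The “almost three times” figure follows directly from the counts quoted above. The short Python sketch below simply redoes that arithmetic; the variable names are ours and purely illustrative.

```python
# Response counts quoted in the text above.
responses_2020 = 1239
total_2021 = 5154
incomplete_2021 = 1580

# Completed responses for this year's survey.
completed_2021 = total_2021 - incomplete_2021

# Year-over-year growth in completed responses.
growth = completed_2021 / responses_2020

print(f"Completed responses: {completed_2021}")
print(f"Growth factor: {growth:.2f}x")
```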

Executive Summary

- We had almost three times as many responses as last year, with similar efforts at promotion. More people are working with AI.

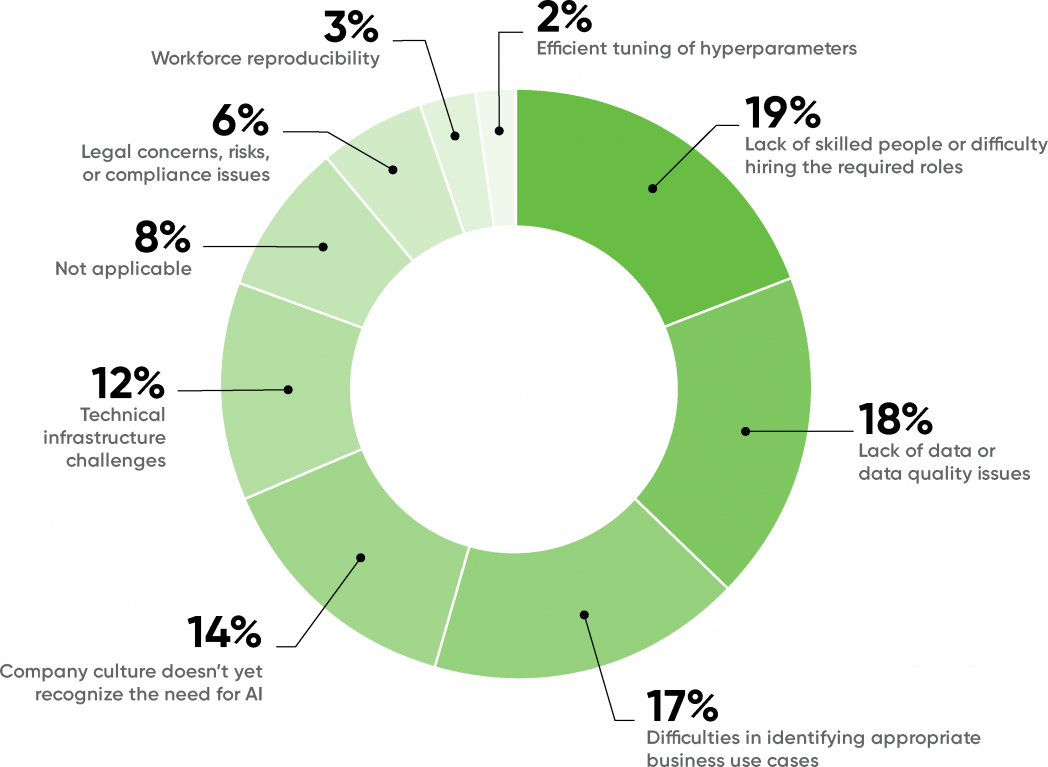

- In the past, company culture has been the most significant barrier to AI adoption. While it’s still an issue, culture has dropped to fourth place.

- This year, the most significant barrier to AI adoption is the lack of skilled people and the difficulty of hiring. That shortage has been predicted for several years; we’re finally seeing it.

- The second-most significant barrier was the availability of quality data. That realization is a sign that the field is growing up.

- The percentage of respondents reporting “mature” practices has been roughly the same for the last few years. That isn’t surprising, given the increase in the number of respondents: we suspect many organizations are just beginning their AI projects.

- The retail industry sector has the highest percentage of mature practices; education has the lowest. But education also had the highest percentage of respondents who were “considering” AI.

- Relatively few respondents are using version control for data and models. Tools for versioning data and models are still immature, but they’re essential for making AI results reproducible and reliable.

Respondents

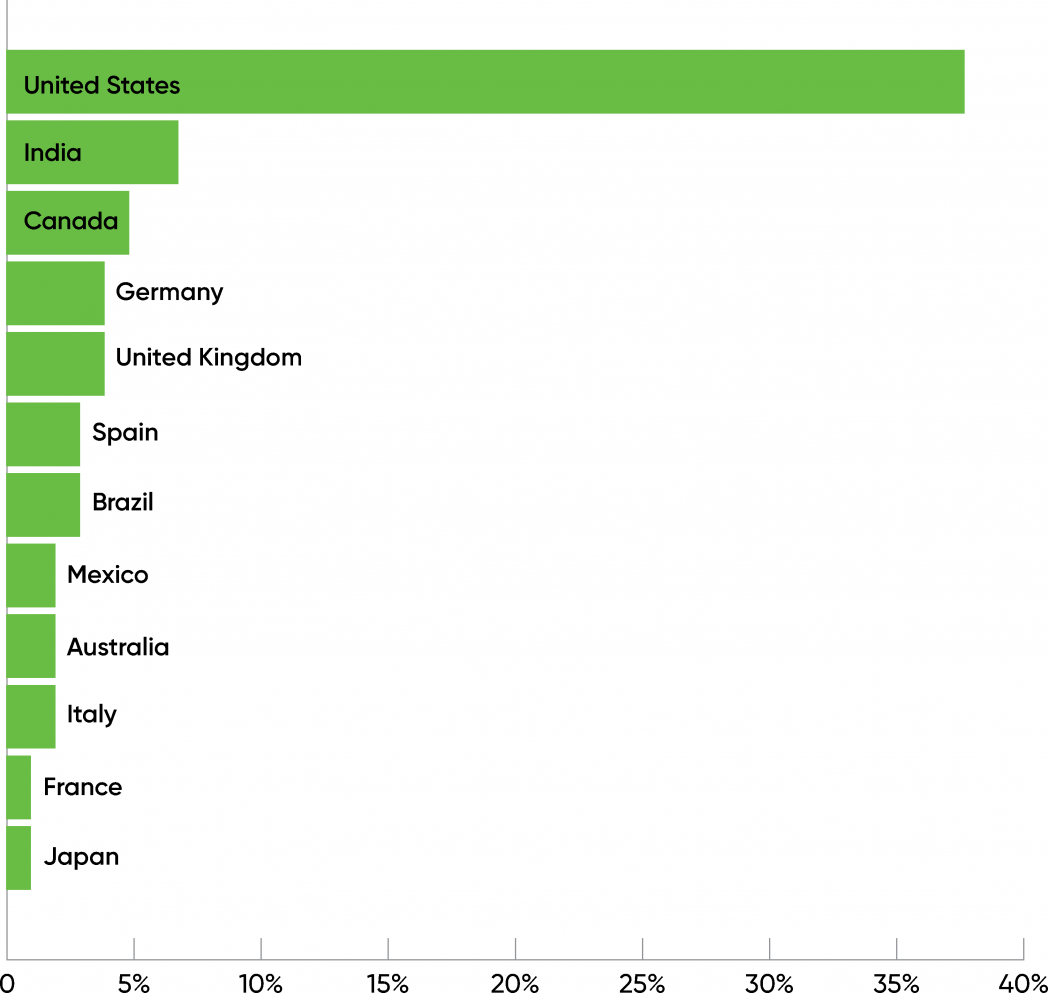

Of the 3,574 respondents who completed this year’s survey, 3,099 were working with AI in some way: considering it, evaluating it, or putting products into production. Of these respondents, it’s not a surprise that the largest number are based in the United States (39%) and that roughly half were from North America (47%). India had the second-most respondents (7%), while Asia (including India) had 16% of the total. Australia and New Zealand accounted for 3% of the total, giving the Asia-Pacific (APAC) region 19%. A little over a quarter (26%) of respondents were from Europe, led by Germany (4%). 7% of the respondents were from South America, and 2% were from Africa. Except for Antarctica, there were no continents with zero respondents, and a total of 111 countries were represented. These results show that interest in and use of AI are worldwide and growing.

This year’s results match last year’s data well. But it’s equally important to notice what the data doesn’t say. Only 0.2% of the respondents said they were from China. That clearly doesn’t reflect reality; China is a leader in AI and probably has more AI developers than any other nation, including the US. Likewise, 1% of the respondents were from Russia. Purely as a guess, we suspect that the number of AI developers in Russia is slightly smaller than the number in the US. These anomalies say much more about who the survey reached (subscribers to O’Reilly’s newsletters) than they say about the actual number of AI developers in Russia and China.

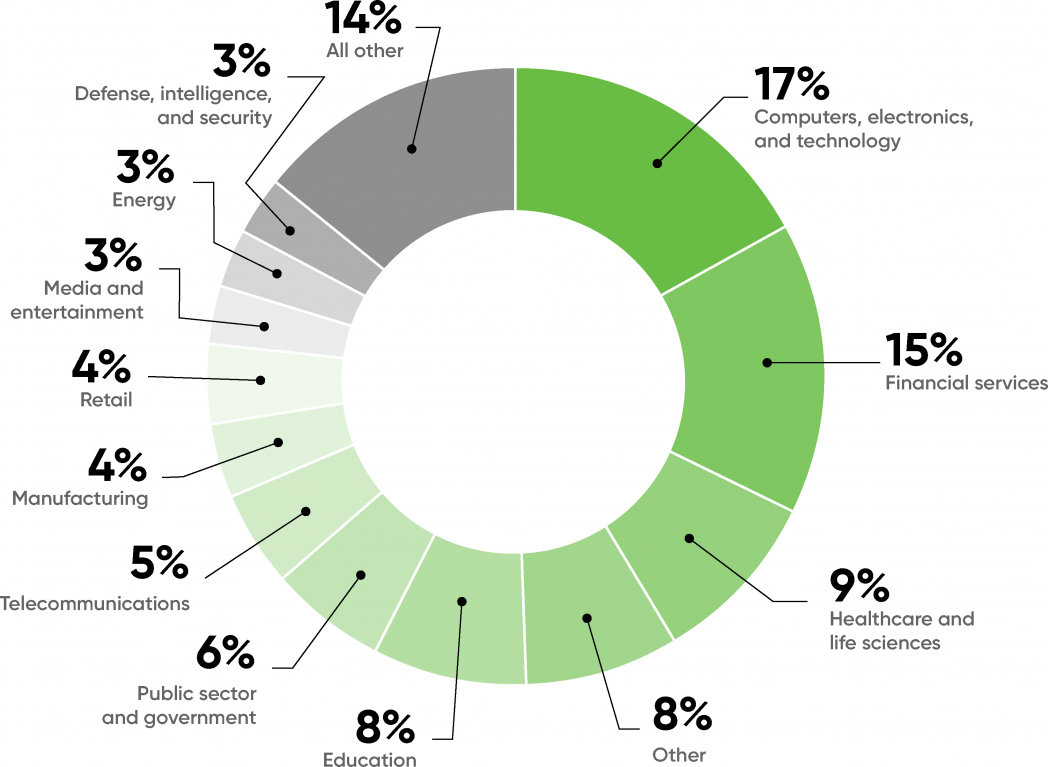

The respondents represented a diverse range of industries. Not surprisingly, computers, electronics, and technology topped the charts, with 17% of the respondents. Financial services (15%), healthcare (9%), and education (8%) are the industries making the next-most significant use of AI. We see relatively little use of AI in the pharmaceutical and chemical industries (2%), though we expect that to change sharply given the role of AI in developing the COVID-19 vaccine. Likewise, we see few respondents from the automotive industry (2%), though we know that AI is key to new products such as autonomous vehicles.

3% of the respondents were from the energy industry, and another 1% from public utilities (which includes part of the energy sector). That’s a respectable number by itself, but we have to ask: Will AI play a role in rebuilding our energy infrastructure, which events of the last few years (not just the Texas freeze or the California fires) have shown to be frail and outdated? We expect that it will, though it’s fair to ask whether AI systems trained on normative data will be robust in the face of “black swan” events. What will an AI system do when faced with a rare situation, one that isn’t well-represented in its training data? That, after all, is the problem facing the developers of autonomous vehicles. Driving a car safely is easy when the other traffic and pedestrians all play by the rules. It’s only difficult when something unexpected happens. The same is true of the electrical grid.

We also expect AI to reshape agriculture (1% of respondents). As with energy, AI-driven changes won’t come quickly. However, we’ve seen a steady stream of AI projects in agriculture, with goals ranging from detecting crop disease to killing moths with small drones.

Finally, 8% of respondents said that their industry was “Other,” and 14% were grouped into “All Others.” “All Others” combines 12 industries that the survey listed as possible responses (including automotive, pharmaceutical and chemical, and agriculture) but that didn’t have enough responses to show in the chart. “Other” is the wild card, comprising industries we didn’t list as options. “Other” appears in fourth place, just behind healthcare. Unfortunately, we don’t know which industries are represented by that category, but it shows that the spread of AI has indeed become broad!

Maturity

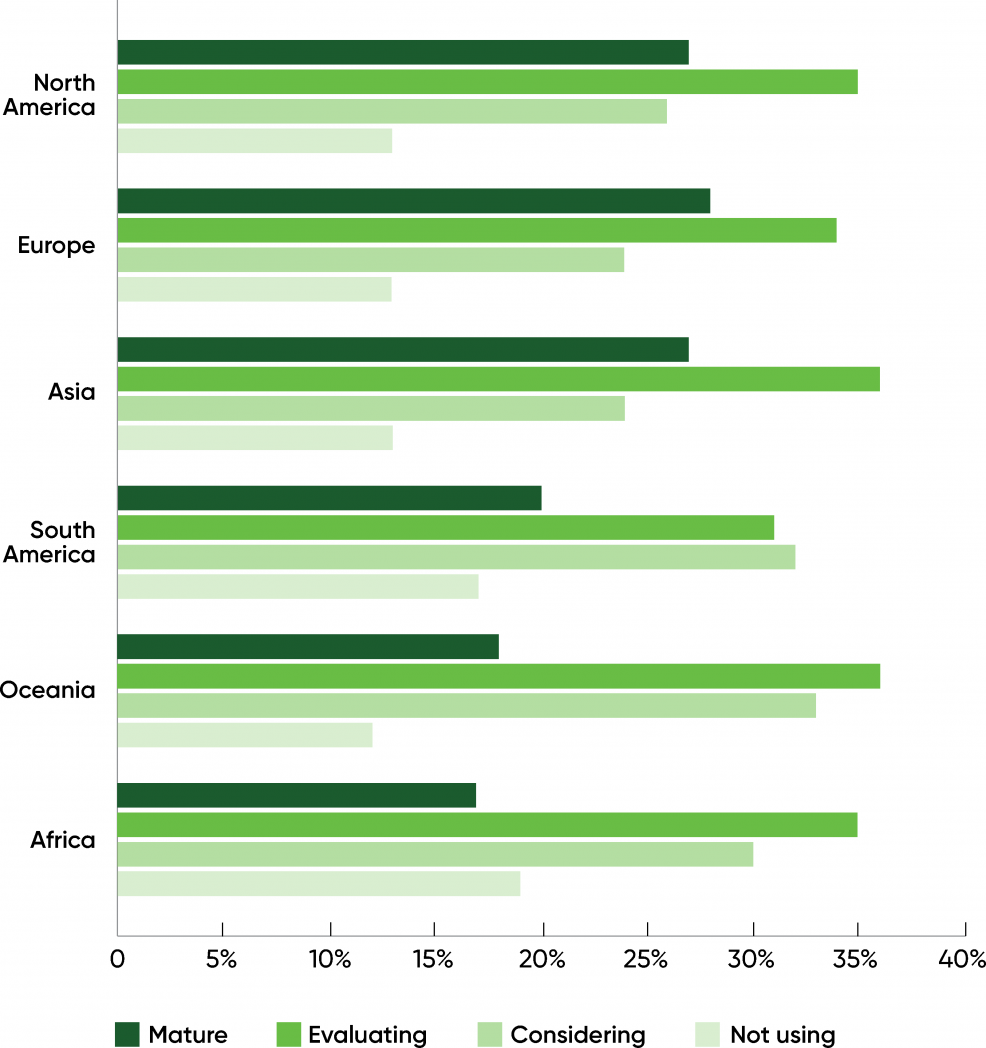

Roughly one quarter of the respondents described their use of AI as “mature” (26%), meaning that they had revenue-bearing AI products in production. This is almost exactly in line with the results from 2020, where 25% of the respondents reported that they had products in production. (“Mature” wasn’t a possible response in the 2020 survey.)

This year, 35% of our respondents were “evaluating” AI (trials and proof-of-concept projects), also roughly the same as last year (33%). 13% of the respondents weren’t making use of AI or considering using it; this is down from last year’s number (15%), but again, it’s not significantly different.

What do we make of the respondents who are “considering” AI but haven’t yet started any projects (26%)? That’s not an option last year’s respondents had. We suspect that last year, respondents who were considering AI said they were either “evaluating” or “not using” it.

Looking at the problems respondents faced in AI adoption provides another way to gauge the overall maturity of AI as a field. Last year, the major bottleneck holding back adoption was company culture (22%), followed by the difficulty of identifying appropriate use cases (20%). This year, cultural problems are in fourth place (14%) and finding appropriate use cases is in third (17%). That’s a very significant change, particularly for corporate culture. Companies have accepted AI to a much greater degree, although finding appropriate problems to solve remains a challenge.

The biggest problems in this year’s survey are lack of skilled people and difficulty in hiring (19%) and data quality (18%). It’s no surprise that the demand for AI expertise has exceeded the supply, but it’s important to realize that it has now become the biggest bar to wider adoption. The biggest skills gaps were ML modelers and data scientists (52%), understanding business use cases (49%), and data engineering (42%). The need for people managing and maintaining computing infrastructure was comparatively low (24%), hinting that companies are solving their infrastructure requirements in the cloud.

It’s gratifying to note that organizations are starting to realize the importance of data quality (18%). We’ve known about “garbage in, garbage out” for a long time; that goes double for AI. Bad data yields bad results at scale.

Hyperparameter tuning (2%) wasn’t considered a problem. It’s at the bottom of the list (where, we hope, it belongs). That may reflect the success of automated tools for building models (AutoML, although as we’ll see later, most respondents aren’t using them). It’s more concerning that workflow reproducibility (3%) is in second-to-last place. This makes sense, given that we don’t see heavy usage of tools for model and data versioning. We’ll look at this later, but being able to reproduce experimental results is critical to any science, and it’s a well-known problem in AI.

Maturity by Continent

When looking at the geographic distribution of respondents with mature practices, we found almost no difference between North America (27%), Asia (27%), and Europe (28%). In contrast, in our 2018 report, Asia was behind in mature practices, though it had a markedly higher number of respondents in the “early adopter” or “exploring” stages. Asia has clearly caught up. There’s no significant difference between these three continents in our 2021 data.

We found a smaller percentage of respondents with mature practices and a higher percentage of respondents who were “considering” AI in South America (20%), Oceania (Australia and New Zealand, 18%), and Africa (17%). Don’t underestimate AI’s future impact on any of these continents.

Finally, the percentage of respondents “evaluating” AI was almost the same on each continent, varying only from 31% (South America) to 36% (Oceania).

Maturity by Industry

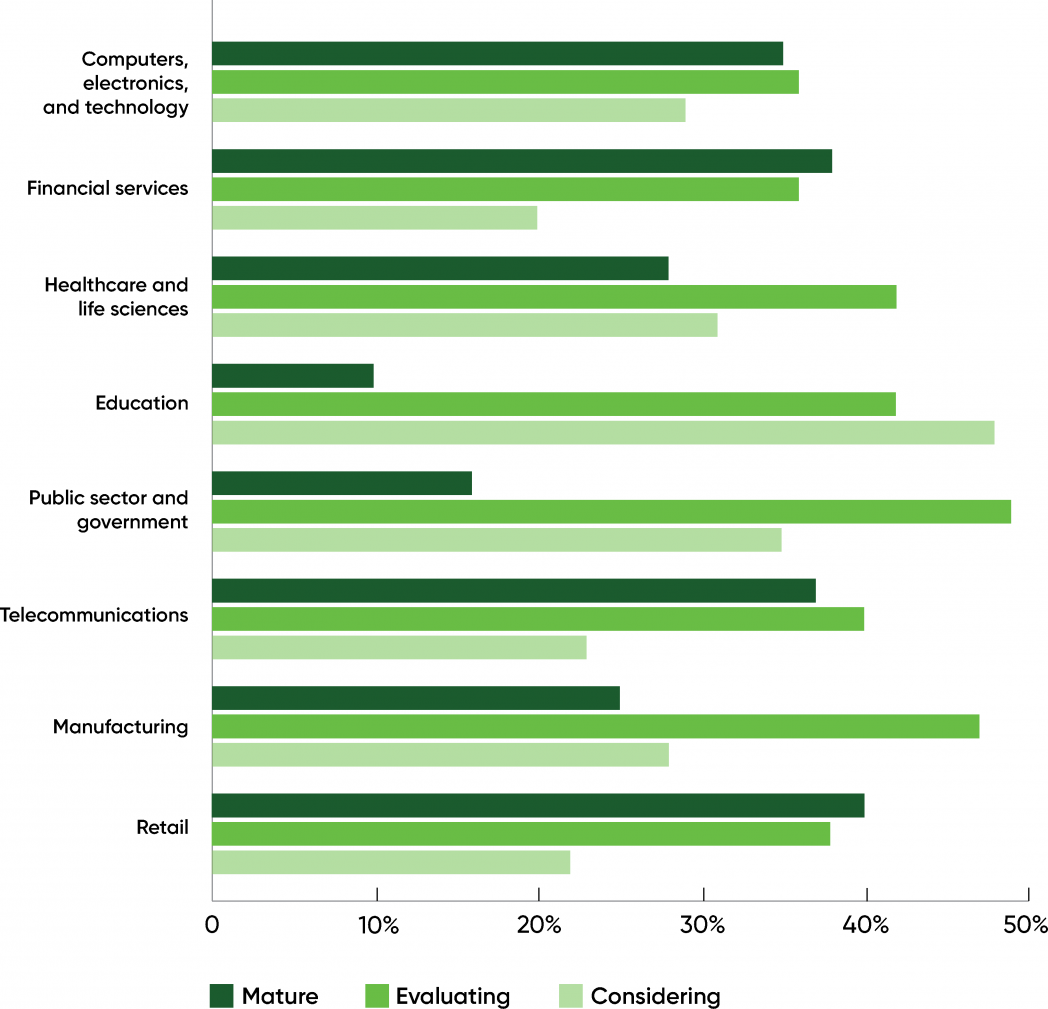

While AI maturity doesn’t depend strongly on geography, we see a different picture if we look at maturity by industry.

Looking at the top eight industries, financial services (38%), telecommunications (37%), and retail (40%) had the greatest percentage of respondents reporting mature practices. And while it had by far the greatest number of respondents, computers, electronics, and technology was in fourth place, with 35% of respondents reporting mature practices. Education (10%) and government (16%) were the laggards. Healthcare and life sciences, at 28%, were in the middle, as were manufacturing (25%), defense (26%), and media (29%).

On the other hand, if we look at industries that are considering AI, we find that education is the leader (48%). Respondents working in government and manufacturing seem to be somewhat further along, with 49% and 47% evaluating AI, meaning that they have pilot or proof-of-concept projects in progress.

This may just be a trick of the numbers: every group adds up to 100%, so if there are fewer “mature” practices in one group, the percentage of “evaluating” and “considering” practices has to be higher. But there’s also a real signal: respondents in these industries may not consider their practices “mature,” but each of these industry sectors had over 100 respondents, and education had almost 250. Manufacturing needs to automate many processes (from assembly to inspection and more); government has been as challenged as any industry by the global pandemic, and has always needed ways to “do more with less”; and education has been experimenting with technology for a number of years now. There is a real desire to do more with AI in these fields. It’s worth pointing out that educational and governmental applications of AI frequently raise ethical questions, and one of the most important issues for the next few years will be seeing how these organizations respond to ethical problems.

The Practice of AI

Now that we’ve discussed where mature practices are found, both geographically and by industry, let’s see what a mature practice looks like. What do these organizations have in common? How are they different from organizations that are evaluating or considering AI?

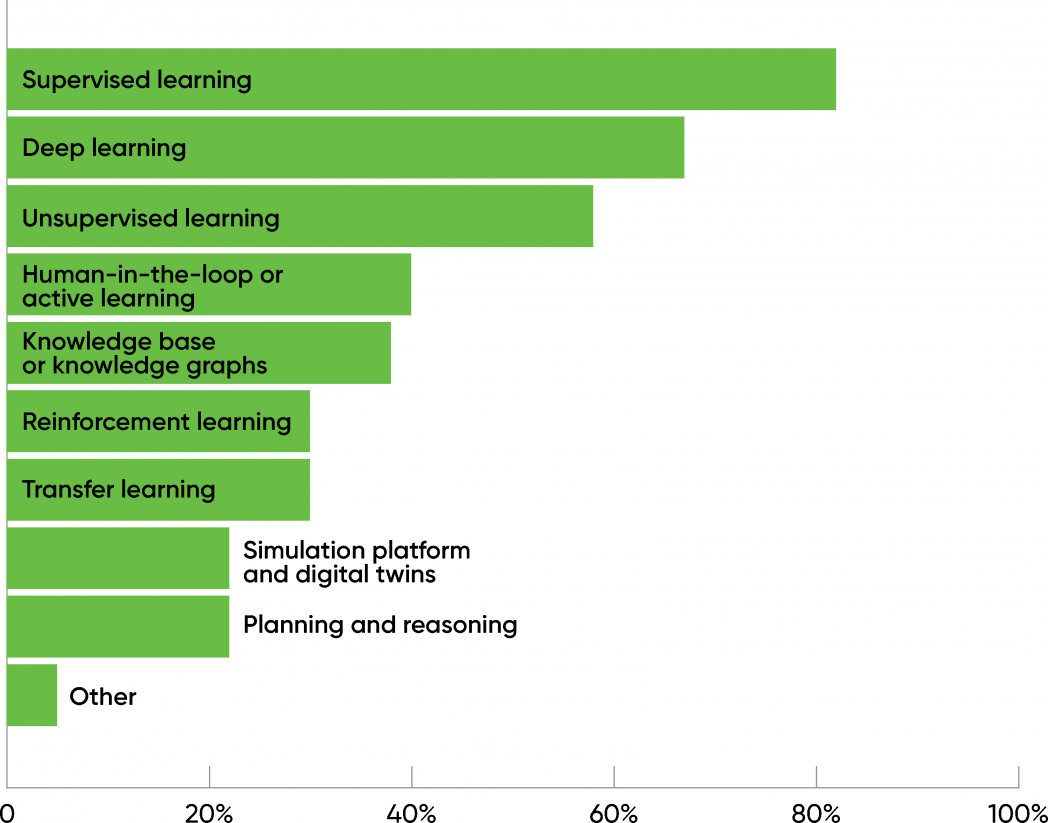

Techniques

First, 82% of the respondents are using supervised learning, and 67% are using deep learning. Deep learning is a set of algorithms that are common to almost all AI approaches, so this overlap isn’t surprising. (Participants could provide multiple answers.) 58% claimed to be using unsupervised learning.

After unsupervised learning, there was a significant drop-off. Human-in-the-loop, knowledge graphs, reinforcement learning, simulation, and planning and reasoning all saw usage below 40%. Surprisingly, natural language processing wasn’t in the picture at all. (A very small number of respondents wrote in “natural language processing” as a response, but they were only a small percentage of the total.) This is significant and definitely worth watching over the next few months. In the last few years, there have been many breakthroughs in NLP and NLU (natural language understanding): everyone in the industry has read about GPT-3, and many vendors are betting heavily on using AI to automate customer service call centers and similar applications. This survey suggests that those applications still haven’t moved into practice.

We asked a similar question of respondents who were considering or evaluating the use of AI (60% of the total). While the percentages were lower, the technologies appeared in the same order, with very few differences. This indicates that respondents who are still evaluating AI are experimenting with fewer technologies than respondents with mature practices. That suggests (reasonably enough) that respondents are choosing to “start simple” and limit the techniques that they experiment with.

Data

We also asked what kinds of data our “mature” respondents are using. Most (83%) are using structured data (logfiles, time series data, geospatial data). 71% are using text data; that isn’t consistent with the number of respondents who reported using NLP, unless “text” is being used generically to include any data that can be represented as text (e.g., form data). 52% of the respondents reported using images and video. That seems low relative to the amount of research we read about AI and computer vision. Perhaps it’s not surprising, though: there’s no reason for business use cases to be in sync with academic research. We’d expect most business applications to involve structured data, form data, or text data of some kind. Relatively few respondents (23%) are working with audio, which remains very challenging.

Again, we asked a similar question of respondents who were evaluating or considering AI, and again, we received similar results, though the percentage of respondents for any given answer was somewhat smaller (4–5%).

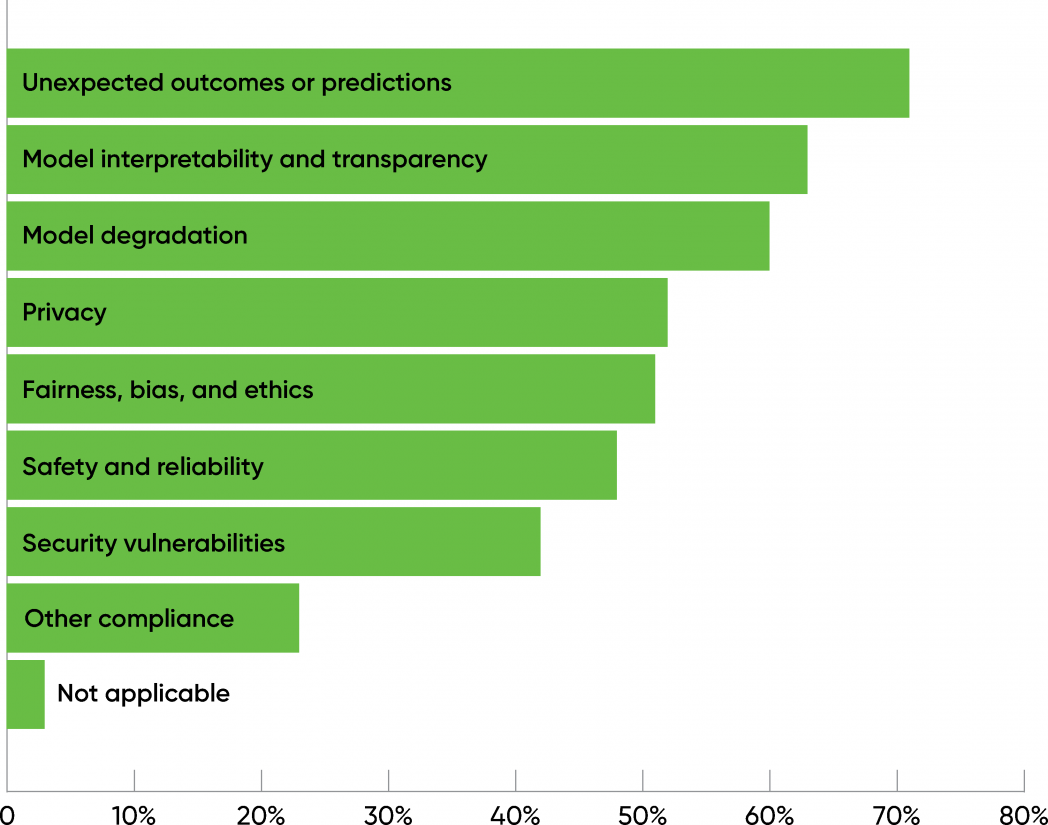

Risk

When we asked respondents with mature practices what risks they checked for, 71% said “unexpected outcomes or predictions.” Interpretability, model degradation over time, privacy, and fairness also ranked high (over 50%), though it’s disappointing that only 52% of the respondents selected this option. Security is also a concern, at 42%. AI raises important new security issues, including the possibility of poisoned data sources and reverse engineering models to extract private information.

It’s hard to interpret these results without knowing exactly what applications are being developed. Privacy, security, fairness, and safety are important concerns for every application of AI, but it’s also important to realize that not all applications are the same. A farming application that detects crop disease doesn’t have the same kind of risks as an application that’s approving or denying loans. Safety is a much bigger concern for autonomous vehicles than for personalized shopping bots. However, do we really believe that these risks don’t need to be addressed for nearly half of all projects?

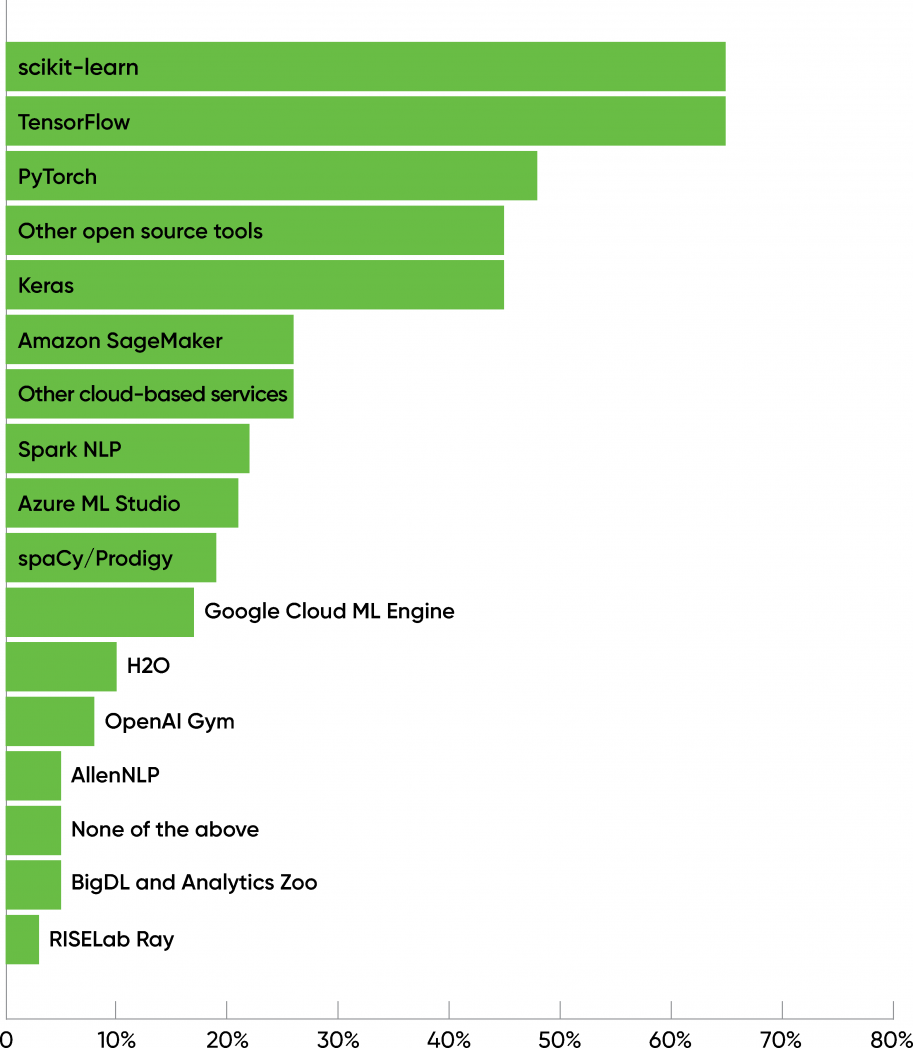

Tools

Respondents with mature practices clearly had their favorite tools: scikit-learn, TensorFlow, PyTorch, and Keras each scored over 45%, with scikit-learn and TensorFlow the leaders (both with 65%). A second group of tools, including Amazon’s SageMaker (25%), Microsoft’s Azure ML Studio (21%), and Google’s Cloud ML Engine (18%), clustered around 20%, along with Spark NLP and spaCy.

When asked which tools they planned to incorporate over the coming 12 months, roughly half of the respondents answered model monitoring (57%) and model visualization (49%). Models become stale for many reasons, not the least of which is changes in human behavior, changes for which the model itself may be responsible. The ability to monitor a model’s performance and detect when it has become “stale” will be increasingly important as businesses grow more reliant on AI and in turn demand that AI projects demonstrate their value.
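One simple way to detect a “stale” model is to compare its accuracy on a recent window of labeled predictions against the accuracy measured when it was deployed. The sketch below is a hypothetical illustration, not a method reported by survey respondents; the class name `StalenessMonitor`, the window size, and the tolerance are all assumptions chosen for the example.

```python
from collections import deque


class StalenessMonitor:
    """Flags a model as stale when rolling accuracy drops below a baseline.

    Hypothetical helper for illustration; the threshold rule is an assumption.
    """

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Keep only the most recent `window` outcomes (True means correct).
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def is_stale(self):
        acc = self.rolling_accuracy()
        # With no feedback yet, we cannot claim the model is stale.
        return acc is not None and acc < self.baseline - self.tolerance


monitor = StalenessMonitor(baseline_accuracy=0.90, window=10)
# Simulated feedback: the model is right 7 times out of 10.
for predicted, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(predicted, actual)

print(monitor.rolling_accuracy(), monitor.is_stale())
```

In practice, ground-truth labels often arrive late or not at all, so real monitoring frequently tracks shifts in input or prediction distributions as well; this sketch covers only the simplest case.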

Responses from those who were evaluating or considering AI were similar, but with some interesting differences: scikit-learn moved from first place to third (48%). The second group was led by products from cloud vendors that incorporate AutoML: Microsoft Azure ML Studio (29%), Google Cloud ML Engine (25%), and Amazon SageMaker (23%). These products were significantly more popular than they were among “mature” users. The difference isn’t huge, but it is striking. At risk of overinterpreting, users who are newer to AI are more inclined to use vendor-specific packages, more inclined to use AutoML in one of its incarnations, and somewhat more inclined to go with Microsoft or Google rather than Amazon. It’s also possible that scikit-learn has less brand recognition among those who are relatively new to AI compared to packages from organizations like Google or Facebook.

When asked specifically about AutoML products, 51% of “mature” respondents said they weren’t using AutoML at all. 22% use Amazon SageMaker; 16% use Microsoft Azure AutoML; 14% use Google Cloud AutoML; and other tools were all under 10%. Among users who are evaluating or considering AI, only 40% said they weren’t using AutoML at all, and the Google, Microsoft, and Amazon packages were all but tied (27–28%). AutoML isn’t yet a big part of the picture, but it appears to be gaining traction among users who are still considering or experimenting with AI. And it’s possible that we’ll see increased use of AutoML tools among mature users, of whom 45% indicated that they would be incorporating tools for automated model search and hyperparameter tuning (in a word, AutoML) in the coming year.

Deployment and Monitoring

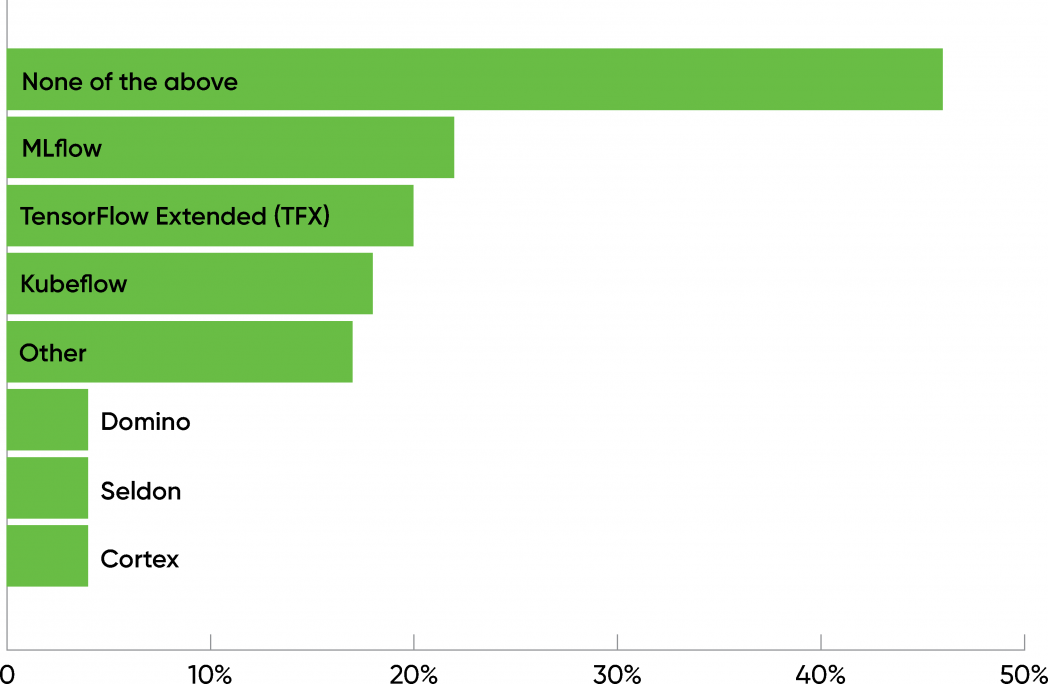

An AI project means nothing if it can’t be deployed; even projects that are only intended for internal use need some kind of deployment. Our survey showed that AI deployment is still largely unknown territory, dominated by homegrown ad hoc processes. The three most significant tools for deploying AI all had roughly 20% adoption: MLflow (22%), TensorFlow Extended, a.k.a. TFX (20%), and Kubeflow (18%). Three products from smaller startups (Domino, Seldon, and Cortex) had roughly 4% adoption. But the most frequent answer to this question was “none of the above” (46%). Since this question was only asked of respondents with “mature” AI practices (i.e., respondents who have AI products in production), we can only assume that they’ve built their own tools and pipelines for deployment and monitoring. Given the many forms that an AI project can take, and that AI deployment is still something of a dark art, it isn’t surprising that AI developers and operations teams are only starting to adopt third-party tools for deployment.


Versioning

Source control has long been a standard practice in software development. There are many well-known tools used to build source code repositories.

We’re confident that AI projects use source code repositories such as Git or GitHub; that’s a standard practice for all software developers. However, AI brings with it a different set of problems. In AI systems, the training data is as important as, if not more important than, the source code. So is the model built from the training data: the model reflects the training data and hyperparameters, in addition to the source code itself, and may be the result of hundreds of experiments.

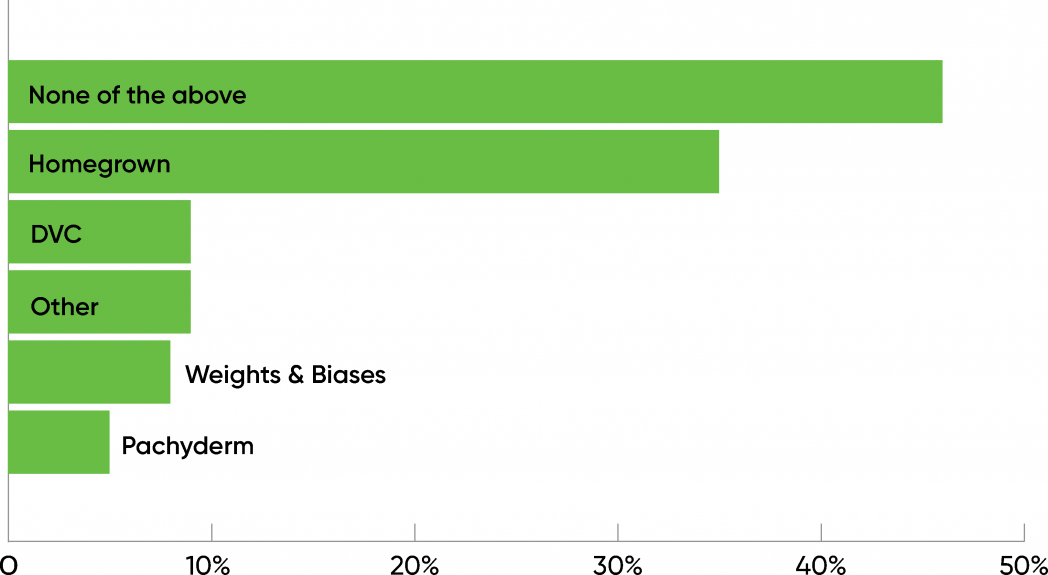

Our survey shows that AI developers are only starting to use tools for data and model versioning. For data versioning, 35% of the respondents are using homegrown tools, while 46% responded “none of the above,” which we take to mean they’re using nothing more than a database. 9% are using DVC, 8% are using tools from Weights & Biases, and 5% are using Pachyderm.

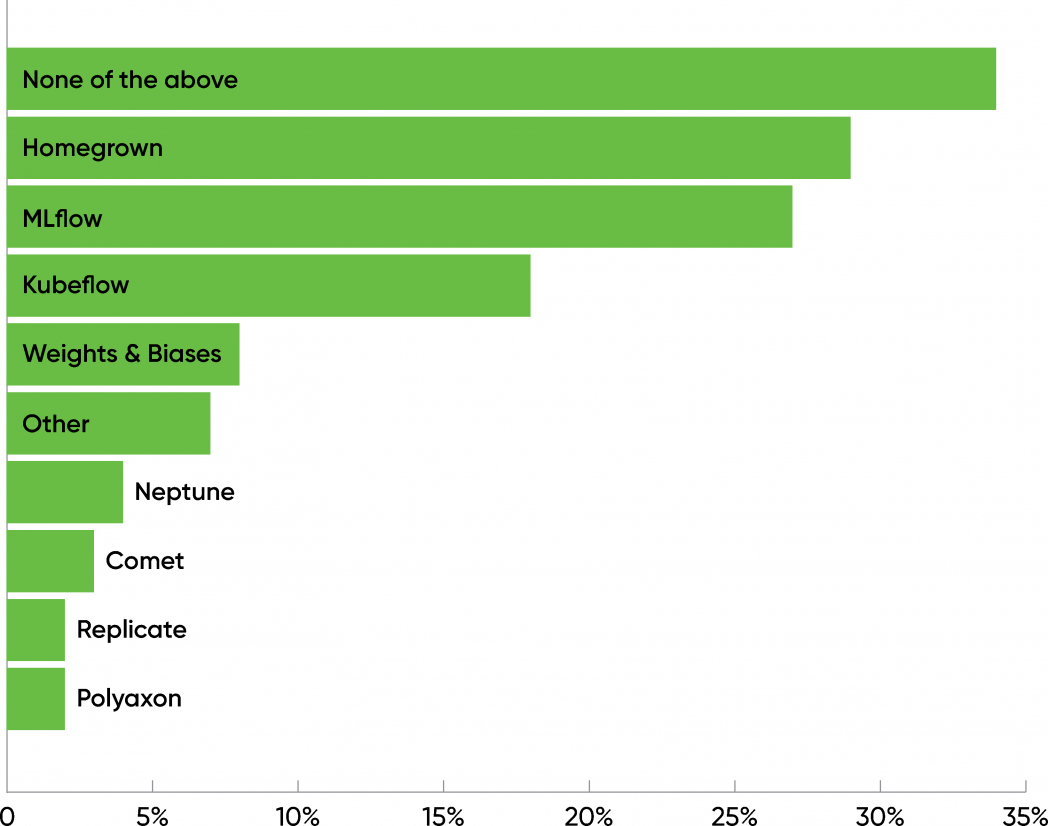

Tools for model and experiment tracking were used more frequently, although the results are fundamentally the same. 29% are using homegrown tools, while 34% said “none of the above.” The leading tools were MLflow (27%) and Kubeflow (18%), with Weights & Biases at 8%.

Respondents who are considering or evaluating AI are even less likely to use data versioning tools: 59% said “none of the above,” while only 26% are using homegrown tools. Weights & Biases was the most popular third-party solution (12%). When asked about model and experiment tracking, 44% said “none of the above,” while 21% are using homegrown tools. It’s interesting, though, that in this group, MLflow (25%) and Kubeflow (21%) ranked above homegrown tools.

Although the tools available for versioning models and data are still rudimentary, it’s disturbing that so many practices, including those that have AI products in production, aren’t using them. You can’t reproduce results if you can’t reproduce the data and the models that generated the results. We’ve said that a quarter of respondents considered their AI practice mature, but it’s unclear what maturity means if it doesn’t include reproducibility.
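To make the link between versioning and reproducibility concrete, here is a minimal sketch of content-based fingerprinting: hashing the training data together with the hyperparameters yields an identifier that changes whenever either one changes. It is an illustration only, not a description of how DVC, MLflow, or any other tool named above works; the function name `fingerprint` and the sample values are hypothetical.

```python
import hashlib
import json


def fingerprint(data_bytes, hyperparams):
    """Return a SHA-256 hex digest covering the data and the hyperparameters."""
    digest = hashlib.sha256()
    digest.update(data_bytes)
    # Serialize with sorted keys so that dict ordering does not change the hash.
    digest.update(json.dumps(hyperparams, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


data_v1 = b"id,label\n1,0\n2,1\n"
data_v2 = b"id,label\n1,0\n2,1\n3,1\n"  # one row added
params = {"learning_rate": 0.01, "epochs": 10}

run_a = fingerprint(data_v1, params)
run_b = fingerprint(data_v1, {"epochs": 10, "learning_rate": 0.01})
run_c = fingerprint(data_v2, params)

print(run_a == run_b)  # same data and settings, so the fingerprints match
print(run_a == run_c)  # the data changed, so the fingerprints differ
```

Recording a fingerprint like this alongside each trained model is one lightweight way to tell later which data and settings produced it.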

The Bottom Line

In the past two years, the audience for AI has grown, but it hasn’t changed much: Roughly the same percentage of respondents consider themselves to be part of a “mature” practice; the same industries are represented, and at roughly the same levels; and the geographical distribution of our respondents has changed little.

We don’t know whether to be gratified or discouraged that only 50% of the respondents listed privacy or ethics as a risk they were concerned about. Without data from prior years, it’s hard to tell whether this is an improvement or a step backward. But it’s difficult to believe that there are so many AI applications for which privacy, ethics, and security aren’t significant risks.

Tool usage didn’t present any big surprises: the field is dominated by scikit-learn, TensorFlow, PyTorch, and Keras, though there’s a healthy ecosystem of open source, commercially licensed, and cloud native tools. AutoML has yet to make big inroads, but respondents representing less mature practices seem to be leaning toward automated tools and are less likely to use scikit-learn.

The number of respondents who aren’t addressing data or model versioning was an unwelcome surprise. These practices should be foundational: central to developing AI products that have verifiable, repeatable results. While we acknowledge that versioning tools appropriate to AI applications are still in their early stages, the number of participants who checked “none of the above” was revealing, particularly since “the above” included homegrown tools. You can’t have reproducible results if you don’t have reproducible data and models. Period.

In the past year, AI in the enterprise has grown; the sheer number of respondents will tell you that. But has it matured? Many new teams are entering the field, while the percentage of respondents who have deployed applications has remained roughly constant. In many respects, this indicates success: 25% of a bigger number is more than 25% of a smaller number. But is application deployment the right metric for maturity? Enterprise AI won’t really have matured until development and operations groups can engage in practices like continuous deployment, until results are repeatable (at least in a statistical sense), and until ethics, safety, privacy, and security are primary rather than secondary concerns. Mature AI? Yes, enterprise AI has been maturing. But it’s time to set the bar for maturity higher.
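As a rough illustration of the “bigger number” point, the sketch below applies the reported maturity percentages to the response totals quoted in this report. It assumes (our assumption, not a figure stated in the survey) that both percentages are taken over the completed-response totals, so the resulting head counts are estimates only.

```python
# Totals and percentages quoted in this report.
responses_2020, mature_share_2020 = 1239, 0.25
responses_2021, mature_share_2021 = 3574, 0.26

# Estimated head counts, assuming each share applies to the full total.
mature_2020 = responses_2020 * mature_share_2020
mature_2021 = responses_2021 * mature_share_2021

print(f"Estimated mature respondents, 2020: {mature_2020:.0f}")
print(f"Estimated mature respondents, 2021: {mature_2021:.0f}")
print(f"Ratio: {mature_2021 / mature_2020:.1f}x")
```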