
Figure 1: Airmass measurements (clouds) over Ukraine from February 18, 2022 to March 01, 2022 from the SEVIRI instrument. Data accessed via the EUMETSAT Viewer.

Satellite imagery is a critical source of information during the current invasion of Ukraine. Military strategists, journalists, and researchers use this imagery to make decisions, unveil violations of international agreements, and inform the public of the stark realities of war. With Ukraine experiencing a large amount of cloud cover and attacks often occurring during night-time, many forms of satellite imagery are hindered from seeing the ground. Synthetic Aperture Radar (SAR) imagery penetrates cloud cover, but requires special training to interpret. Automating this tedious task would enable real-time insights, but current computer vision methods developed on typical RGB imagery do not properly account for the phenomenology of SAR. This leads to suboptimal performance on this critical modality. Improving the access to and availability of SAR-specific methods, codebases, datasets, and pretrained models will benefit intelligence agencies, researchers, and journalists alike during this critical time for Ukraine.

In this post, we present a baseline method and pretrained models that enable the interchangeable use of RGB and SAR for downstream classification, semantic segmentation, and change detection pipelines.

Introduction

We live in a rapidly changing world, one that experiences natural disasters, civic upheaval, war, and all kinds of chaotic events which leave unpredictable, and often permanent, marks on the face of the planet. Understanding this change has historically been difficult. Surveyors were sent out to explore our new reality, and their distributed findings were often noisily integrated into a source of truth. Maintaining a constant state of vigilance has been a goal of mankind since we were able to conceive such a thought, all the way from when Nadar took the first aerial photograph to when Sputnik 1's radio signals were used to analyze the ionosphere.

Vigilance, or to the French, surveillance, has been a part of human history for millennia. As with any tool, it has been a double-edged sword. Historically, surveillance without checks and balances has been detrimental to society. Conversely, proper and responsible surveillance has allowed us to learn deep truths about our world, which have resulted in advances in the scientific and humanitarian domains. With the number of satellites in orbit today, our understanding of the environment is updated almost daily. We have rapidly transitioned from having very little information to now having more data than we can meaningfully extract knowledge from. Storing this information, let alone understanding it, is an engineering challenge of growing urgency.

Machine Learning and Remote Sensing

With hundreds of terabytes of data being downlinked from satellites to data centers every day, gaining knowledge and actionable insights from that data through manual processing has already become an impossible task. The most widely used form of remote sensing data is electro-optical (EO) satellite imagery. EO imagery is commonplace: anyone who has used Google Maps or similar mapping software has interacted with EO satellite imagery.

Machine learning (ML) on EO imagery is used in a wide variety of scientific and commercial applications. From improving precipitation predictions, to analyzing human slavery by identifying brick kilns, to classifying entire cities to improve traffic routing, the outputs of ML on EO imagery have been integrated into almost every facet of human society.

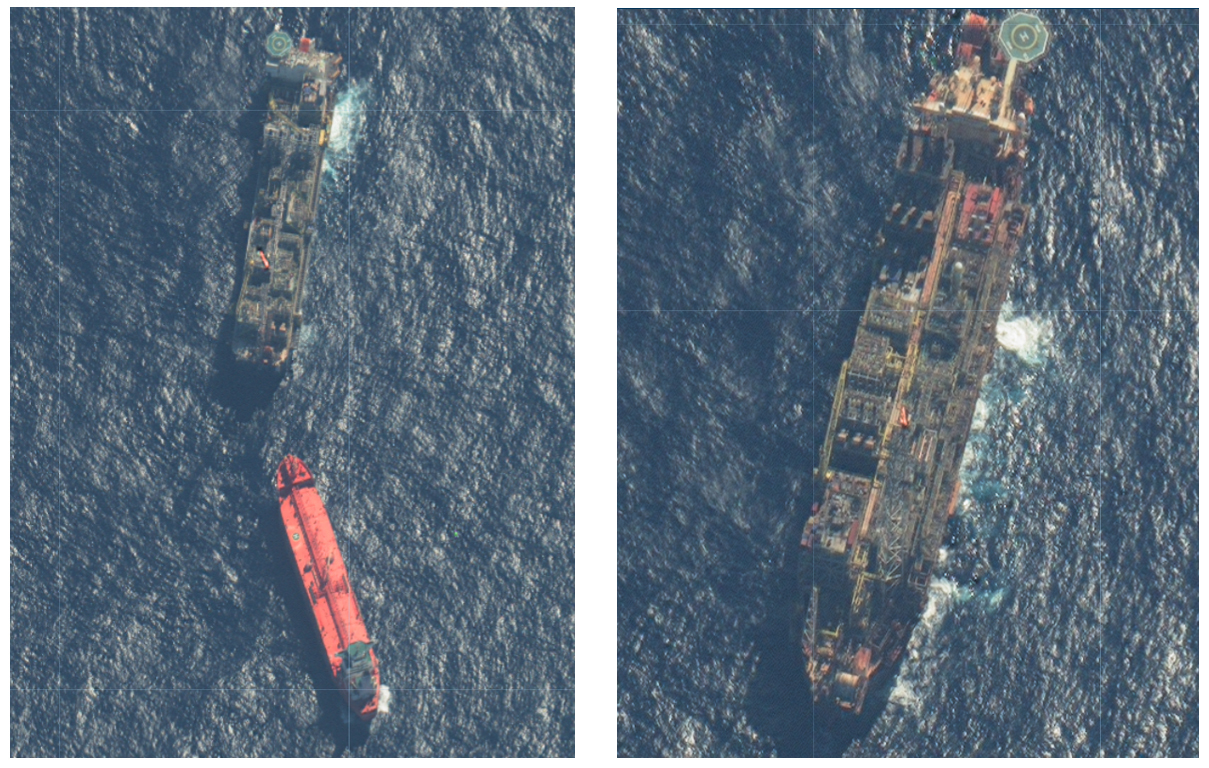

Figure 2: VHR EO imagery over the Kyiv area as acquired by Maxar on February 28, 2022.

Commonly used satellite constellations for EO imagery include the Landsat series of satellites operated by the United States Geological Survey and the Copernicus Sentinel-2 constellation operated by the European Space Agency. These constellations provide imagery at resolutions between 10 and 60 meters, which is good enough for many use cases but precludes the observation of finer details.

The Advent of Very High Resolution, Commercial Electro-Optical Satellite Imagery

Over the past few years, very high resolution (VHR) EO imagery has been made available through a variety of commercial sources. At resolutions ranging from 0.3 to 2.0 meters, companies such as Planet, Maxar, Airbus, and others are providing extremely precise imagery with high revisit rates, imaging the entire planet every day.

The increased resolution provided by VHR imagery enables a litany of downstream use cases. Erosion can be detected at finer scales, and building damage can be classified after natural disasters.

Machine learning methods have had to adapt in response to VHR satellite imagery. With increased acuity, the number of pixels and the number of classes that can be discerned have increased by orders of magnitude. Computer vision research has responded by reducing the computational cost of learning efficient representations of satellite imagery, developing methods to alleviate the increased burden on labelers, and even engineering large software frameworks that allow computer vision practitioners to handle this abundant source of imagery.
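The "orders of magnitude" claim is easy to check with a little arithmetic. The sketch below counts how many pixels are needed to cover one square kilometre at a given ground sample distance (GSD), assuming square pixels; the function name is illustrative.

```python
def pixels_per_km2(gsd_m: float) -> float:
    """Return the pixel count for a 1 km x 1 km tile at gsd_m metres per pixel.

    Assumes square pixels and ignores overlap or edge effects.
    """
    if gsd_m <= 0:
        raise ValueError("gsd_m must be positive")
    side = 1000.0 / gsd_m  # pixels along one 1 km edge
    return side * side


if __name__ == "__main__":
    # Public constellations (10-60 m) versus commercial VHR (0.3-2.0 m).
    for gsd in (60.0, 10.0, 2.0, 0.5, 0.3):
        print(f"{gsd:>5} m/px -> {pixels_per_km2(gsd):>14,.0f} px per km^2")
    ratio = pixels_per_km2(0.3) / pixels_per_km2(10.0)
    print(f"0.3 m vs 10 m: ~{ratio:,.0f}x more pixels")
```

Going from 10 m to 0.3 m multiplies the pixel count per square kilometre by roughly a thousand, i.e. about three orders of magnitude, before accounting for any extra bands or revisits.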

In general, current computer vision methods developed on other, non-aerial RGB imagery transfer very well to satellite imagery. This has allowed commercial VHR imagery to be immediately useful, with highly accurate results.

The Problem with Electro-Optical Imagery

For highly turbulent and dangerous situations such as war and natural disasters, having constant, reliable access to the Earth is paramount. Unfortunately, EO imagery cannot solve all of our surveillance needs. EO can only detect light sources during daytime, and as it turns out, nearly two-thirds of the Earth is covered by clouds at any given time. Unless you care about clouds, this blockage of the planet's surface is problematic when understanding what happens on the ground is of critical importance. Machine learning methods attempt to sidestep this problem by predicting what the world would look like without clouds. However, the lost information is fundamentally irrecoverable.

Synthetic Aperture Radar Imagery

Synthetic aperture radar (SAR) imagery is an active form of remote sensing in which a satellite transmits pulses of microwave radar waves down to the surface of the Earth. These radar waves reflect off the ground and any objects on it and are returned to the satellite. By processing these pulses over time and space, a SAR image is formed in which each pixel is the superposition of many different radar scatterers.
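To make "each pixel is the superposition of many scatterers" concrete, here is a minimal NumPy sketch under a deliberately simplified model: every resolution cell contains `n_scatterers` identical, unit-amplitude scatterers with uniformly random phases, and the pixel value is their complex sum. This is not a SAR image-formation algorithm; it only illustrates why a perfectly uniform surface still yields grainy ("speckled") intensities. Names and parameters are illustrative.

```python
import numpy as np


def simulate_pixel_intensity(n_scatterers, n_pixels, rng):
    """Simulate SAR pixel intensities as coherent sums of random scatterers.

    Each scatterer contributes a unit-amplitude complex return whose phase
    is drawn uniformly from [0, 2*pi); the pixel's complex value is the sum
    of these returns, and its intensity is the squared magnitude.
    """
    phases = rng.uniform(0.0, 2.0 * np.pi, size=(n_pixels, n_scatterers))
    field = np.exp(1j * phases).sum(axis=1)  # complex superposition per pixel
    return np.abs(field) ** 2


rng = np.random.default_rng(0)
intensity = simulate_pixel_intensity(n_scatterers=50, n_pixels=20_000, rng=rng)
print("mean intensity:", intensity.mean())                 # close to n_scatterers
print("std / mean:", intensity.std() / intensity.mean())   # close to 1 (speckle)
```

Under this model the mean intensity equals the number of scatterers, while the standard deviation is almost as large as the mean (the ratio is sqrt(1 - 1/N)), which is the signature of fully developed speckle.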

Radar waves penetrate clouds, and since the satellite actively produces the radar waves, it illuminates the surface of the Earth even at night. Synthetic aperture radar has a wide variety of uses, such as estimating the roughness of the Earth, mapping the extent of flooding over large areas, and detecting the presence of illegal fishing vessels in protected waters.
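Flood mapping is a good example of how SAR's physics can be exploited directly: calm open water acts like a mirror and reflects most of the radar energy away from the sensor, so it appears dark. A common baseline (not the method presented in this post) converts calibrated backscatter to decibels and thresholds it. The -18 dB default below is a hypothetical value chosen for illustration; real workflows tune it per scene and polarization.

```python
import numpy as np


def to_db(linear, eps=1e-10):
    """Convert linear backscatter (sigma0) to decibels, guarding against log(0)."""
    return 10.0 * np.log10(np.maximum(linear, eps))


def water_mask(sigma0_vv_linear, threshold_db=-18.0):
    """Flag pixels whose VV backscatter is below threshold_db as likely open water.

    threshold_db is a hypothetical illustrative default, not a calibrated value.
    """
    return to_db(np.asarray(sigma0_vv_linear, dtype=float)) < threshold_db


sigma0 = np.array([[0.001, 0.05],
                   [0.2, 0.004]])  # toy linear backscatter values
print(to_db(sigma0).round(1))
print(water_mask(sigma0))
```

Working in decibels turns the large multiplicative dynamic range of backscatter into an additive scale, which is why thresholds are usually quoted in dB.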

There are several SAR satellite constellations in operation at the moment. The Copernicus Sentinel-1 constellation provides imagery to the public at large with resolutions ranging from 10 to 80 meters (10 meter imagery being the most common). Most commercial SAR providers, such as ICEYE and Capella Space, provide imagery down to 0.5 meter resolution. In upcoming launches, other commercial vendors aim to produce SAR imagery with sub-0.5 meter resolution and high revisit rates as satellite constellations grow and government regulations evolve.

Figure 4: A VHR SAR image provided by Capella Space over the Ukraine-Belarus border.

The Wacky World of Synthetic Aperture Radar Imagery

While SAR imagery may, at a quick glance, look very similar to EO imagery, the underlying physics is quite different, which leads to many interesting effects in the imagery product that can be counterintuitive and incompatible with modern computer vision. Three common effects are termed polarization, layover, and multi-path effects.

Radar antennas on SAR satellites often transmit polarized radar waves. The direction of polarization is the orientation of the wave's electric field. Objects on the ground exhibit different responses to different polarizations of radar waves. Therefore, SAR satellites often operate in dual- or quad-polarization modes, broadcasting horizontally (H) or vertically (V) polarized waves and reading either polarization back, resulting in HH, HV, VH, and VV bands. You can contrast this with the RGB bands in EO imagery, but the fundamental physics are different.

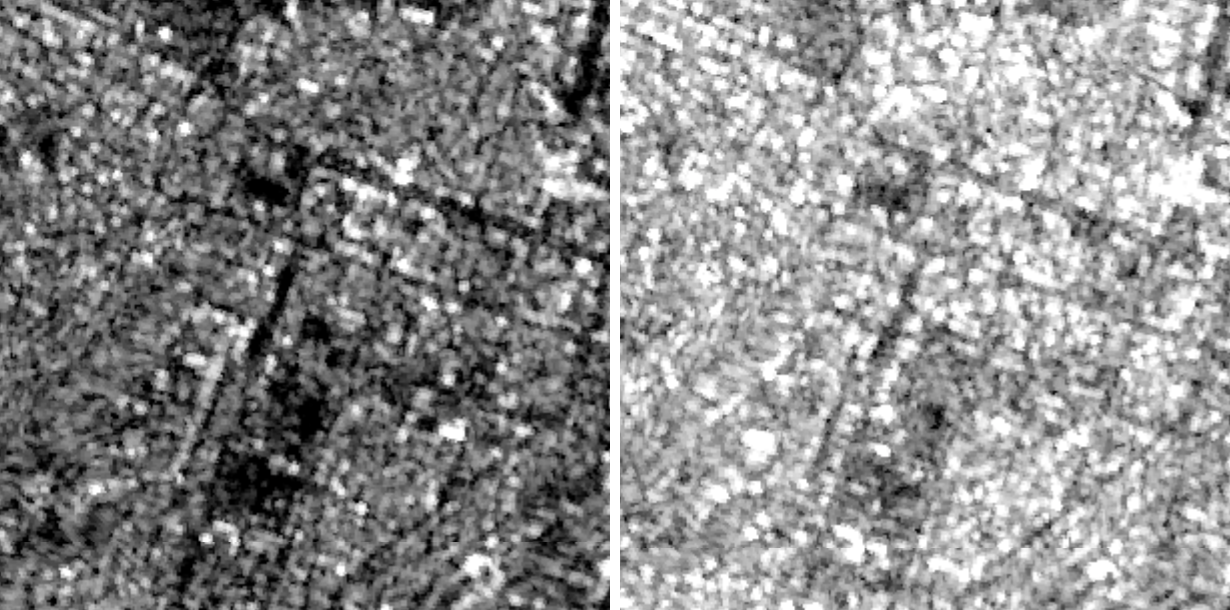

Figure 5: Difference between VH (left) and VV (right) polarizations over the same area in Dnipro, Ukraine, from Sentinel-1 radiometric terrain corrected imagery. As seen here, the radar returns in corresponding local areas can differ.
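Because polarization bands are not colors, they have to be mapped to display channels explicitly. One common convention, and the one used for the SpaceNet 6 example later in this post, places VV, VH, and the VV/VH ratio in the three channels of a false-color image. The sketch below assumes linear-scale, co-registered VV and VH arrays; the 2nd/98th percentile stretch is an illustrative choice, not a standard.

```python
import numpy as np


def rescale(band, lo_pct=2.0, hi_pct=98.0):
    """Linearly stretch a band to [0, 1] between two percentiles, clipping outliers."""
    lo, hi = np.percentile(band, [lo_pct, hi_pct])
    if hi <= lo:  # constant band: nothing to stretch
        return np.zeros_like(band, dtype=float)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)


def dual_pol_false_color(vv_linear, vh_linear, eps=1e-10):
    """Stack VV, VH and VV/VH (all in dB) into an H x W x 3 display array."""
    vv_db = 10.0 * np.log10(np.maximum(vv_linear, eps))
    vh_db = 10.0 * np.log10(np.maximum(vh_linear, eps))
    ratio_db = vv_db - vh_db  # the log of a ratio is a difference in dB
    return np.dstack([rescale(vv_db), rescale(vh_db), rescale(ratio_db)])


rng = np.random.default_rng(0)
vv = rng.uniform(0.01, 1.0, size=(64, 64))   # toy stand-ins for real bands
vh = rng.uniform(0.001, 0.1, size=(64, 64))
composite = dual_pol_false_color(vv, vh)
print(composite.shape, composite.min(), composite.max())
```

Note that the third channel is fully determined by the first two; it is there for visual contrast, which is one example of how SAR channels do not behave like independent RGB bands.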

Layover is an effect in which radar beams reach the top of a structure before they reach the bottom, resulting in the top of the object being presented as overlapping with the bottom. This happens with particularly tall objects, or more precisely whenever a sensor-facing slope is steeper than the radar's incidence angle. Visually, tall buildings appear as if they are lying on their side, while mountains can have their peaks intersecting with their bases.

Figure 6: Example of layover in Capella's VHR SAR imagery. The upper portion of the stadium appears to intersect with the parking lot behind it.
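The size of the layover shift follows from simple geometry. Under a flat-earth, far-field approximation with incidence angle θ measured from vertical, a point at height h has the same slant range as a ground point displaced h / tan θ toward the sensor (a slant-range shift of h cos θ). The sketch below encodes that approximation; it ignores terrain, Earth curvature, and sensor-specific geometry, and the function names are illustrative.

```python
import math


def layover_shift(height_m, incidence_deg):
    """Return (ground_range_shift_m, slant_range_shift_m) toward the sensor.

    Flat-earth, far-field approximation: a point at height h is imaged as if
    it were a ground point displaced h / tan(theta) toward the sensor, where
    theta is the incidence angle measured from vertical.
    """
    theta = math.radians(incidence_deg)
    return height_m / math.tan(theta), height_m * math.cos(theta)


def has_layover(slope_deg, incidence_deg):
    """A sensor-facing slope steeper than the incidence angle is laid over."""
    return slope_deg > incidence_deg


ground, slant = layover_shift(height_m=100.0, incidence_deg=30.0)
print(f"roof shifted ~{ground:.1f} m (ground range), ~{slant:.1f} m (slant range)")
print("vertical wall at 30 deg incidence:", has_layover(90.0, 30.0))
```

For a 100 m tall building imaged at 30° incidence, the roof lands roughly 173 m closer to the sensor than the footprint, which is why tall structures appear to "fall over" toward the radar.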

Multi-path effects occur when radar waves reflect off objects on the ground and undergo multiple bounces before returning to the SAR sensor. As a result, objects can appear in the imagery multiple times and in various transformed forms. This effect can be seen everywhere in SAR imagery, but is particularly noticeable in urban areas, forests, and other dense environments.

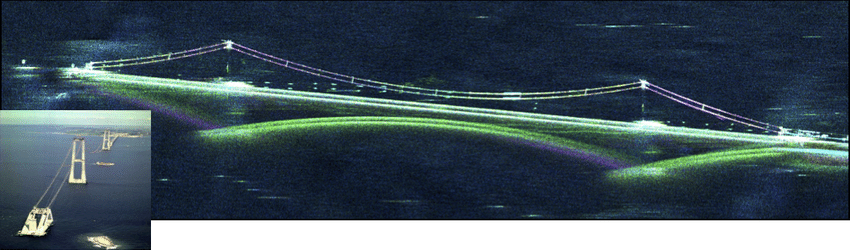

Figure 7: Example of a multi-path effect on a bridge from oblique SAR imagery.
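Why does a bridge show up more than once? A monostatic SAR measures only round-trip time t and places every return at range c·t/2, i.e. at half the total path length, no matter how many bounces happened along the way. Longer multi-bounce paths therefore create "ghost" copies farther away in range. The path lengths below are hypothetical numbers for a bridge over water, chosen only to illustrate the effect.

```python
def apparent_range(path_segments_m):
    """Range at which a monostatic SAR places a return, given its path legs.

    The sensor maps round-trip time t to range c * t / 2, which equals half
    the total path length regardless of the number of bounces.
    """
    if not path_segments_m:
        raise ValueError("need at least one path segment")
    return sum(path_segments_m) / 2.0


# Hypothetical geometry (metres): sensor -> deck -> sensor, and two paths
# that additionally bounce off the water surface below the bridge.
direct = [1000.0, 1000.0]
double_bounce = [1004.0, 8.0, 1000.0]        # sensor -> water -> bridge -> sensor
triple_bounce = [1004.0, 8.0, 8.0, 1004.0]   # sensor -> water -> bridge -> water -> sensor
for name, path in [("direct", direct), ("double", double_bounce), ("triple", triple_bounce)]:
    print(f"{name:>6}: imaged at {apparent_range(path):.1f} m")
```

The same physical bridge is imaged at three different ranges, which is exactly the kind of repeated, displaced object that EO-trained detectors are not built to expect.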

Existing computer vision methods built on traditional RGB imagery were not designed with these effects in mind. Object detectors trained on EO satellite imagery assume that a unique object will appear only once, or that an object will look relatively similar in different contexts, rather than potentially mirrored, scattered, or interwoven with surrounding objects. The very nature of occlusion, and the vision principles underlying assumptions about occlusion in EO imagery, do not transfer to SAR. Taken together, existing computer vision methods can transfer to SAR imagery, but with reduced performance and a set of systematic errors that can be addressed through SAR-specific methodology.

Computer Vision on SAR Imagery for Ukraine

Imagery analysts are currently relying on both EO and SAR imagery, where available, over Ukraine. When EO imagery is available, existing computer vision tooling built for that modality is used to expedite the process of intelligence gathering. However, when only SAR imagery is available, these toolchains cannot be used. Imagery analysts have to resort to manual analysis, which is time consuming and can be prone to errors. This topic is being explored by some other institutions internationally; however, it still remains an understudied area relative to the amount of data available.

At Berkeley AI Research, we have created an initial set of methods and models that have learned robust representations for RGB, SAR, and co-registered RGB + SAR imagery from the publicly released BigEarthNet-MM dataset and the data from Capella's Open Data, which consists of both RGB and SAR imagery. As such, using our models, imagery analysts are able to interchangeably use RGB, SAR, or co-registered RGB+SAR imagery for downstream tasks such as image classification, semantic segmentation, object detection, or change detection.

Given that SAR is a phenomenologically different data source than EO imagery, we have found that the Vision Transformer (ViT) is a particularly effective architecture for representation learning with SAR, as it removes the scale- and shift-invariant inductive biases built into convolutional neural networks. Our top-performing method for representation learning on RGB, SAR, and co-registered RGB + SAR, MAERS, builds upon the Masked Autoencoder (MAE) recently introduced by He et al. In an MAE, the network takes a masked version of the data as input, encodes it, and then learns to decode it in such a way that it reconstructs the unmasked input data.
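The masking step at the heart of an MAE can be sketched in a few lines of NumPy. The sketch splits an image into non-overlapping patches, hides a random 75% of them (the default ratio reported by He et al.), and computes the reconstruction loss only on the hidden patches. It is an illustration of the general MAE recipe, not the MAERS code; the 2-channel (VV, VH) input, 224-pixel size, and 16-pixel patches are assumptions for the example.

```python
import numpy as np


def patchify(image, patch):
    """Split an H x W x C image into (N, patch*patch*C) flattened patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)


def random_masking(patches, mask_ratio, rng):
    """Keep a random subset of patches; return visible patches, their indices, and the mask."""
    n = patches.shape[0]
    n_keep = int(round(n * (1.0 - mask_ratio)))
    keep_idx = np.sort(rng.permutation(n)[:n_keep])
    mask = np.ones(n, dtype=bool)  # True = hidden, must be reconstructed
    mask[keep_idx] = False
    return patches[keep_idx], keep_idx, mask


def masked_mse(pred, target, mask):
    """Mean squared error averaged over the masked (hidden) patches only."""
    per_patch = ((pred - target) ** 2).mean(axis=1)
    return per_patch[mask].mean()


rng = np.random.default_rng(0)
sar = rng.normal(size=(224, 224, 2))      # toy dual-pol (VV, VH) tile
patches = patchify(sar, patch=16)          # 196 patches of 16*16*2 values
visible, keep_idx, mask = random_masking(patches, mask_ratio=0.75, rng=rng)
print(patches.shape, visible.shape, int(mask.sum()))
```

Only the visible patches are fed to the encoder, which is what makes MAE pretraining relatively cheap; the decoder is then asked to fill in the rest.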

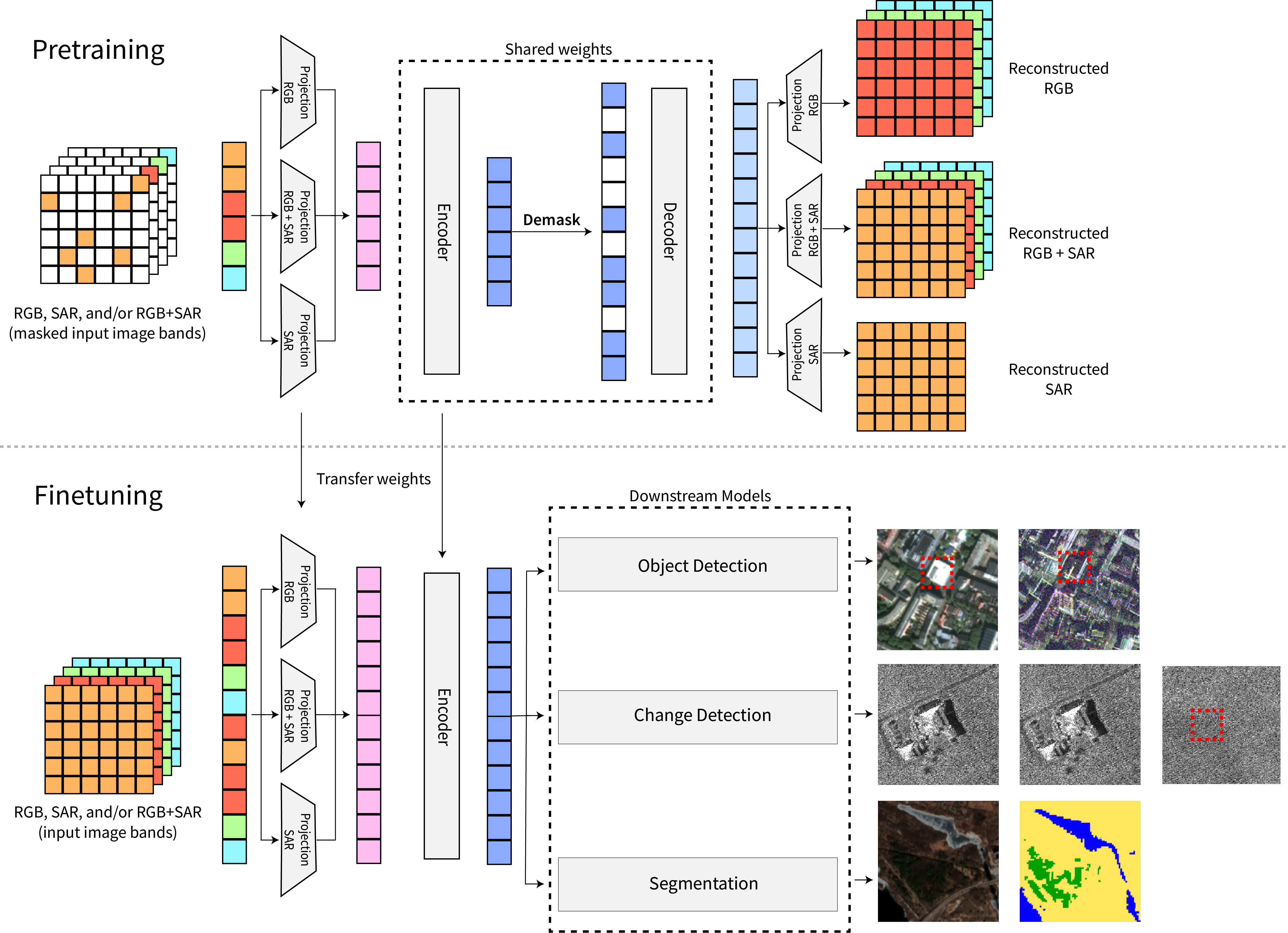

Unlike popular classes of contrastive learning methods, the MAE does not presuppose certain augmentation invariances in the data that may be incorrect for SAR features. Instead, it relies only on reconstructing the original input, which is agnostic to RGB, SAR, or co-registered modalities. As shown in Figure 8, MAERS further extends MAE by learning independent input projection layers for RGB, SAR, and RGB+SAR channels, encoding the output of these projection layers with a shared ViT, and then decoding to the RGB, SAR, or RGB+SAR channels using independent output projection layers. The input projection layers and shared ViT can then be transferred to downstream tasks, such as object detection or change detection, where the encoder can take RGB, SAR, or RGB+SAR as input.

Figure 8: (top) A visualization of MAERS learning a joint representation and encoder that can be used for a (bottom) downstream task, such as object detection on either, or both, modalities.
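The routing described for MAERS (independent input projections per modality, one shared encoder, independent output projections) can be sketched structurally. Everything concrete here is a hypothetical stand-in, not the authors' implementation: the dictionary names, the 16-pixel patches, the 64-dimensional width, the assumption that SAR means two bands (VV, VH), and especially the "shared encoder", which is a single dense layer with `tanh` standing in for a ViT.

```python
import numpy as np

rng = np.random.default_rng(0)
PATCH, DIM = 16, 64                              # hypothetical patch size / width
CHANNELS = {"rgb": 3, "sar": 2, "rgb+sar": 5}    # assumed: SAR = VV + VH


def linear(in_dim, out_dim):
    """A randomly initialised dense layer, returned as (weight, bias)."""
    return rng.normal(0.0, in_dim ** -0.5, (in_dim, out_dim)), np.zeros(out_dim)


# Independent input and output projections for each modality.
in_proj = {m: linear(PATCH * PATCH * c, DIM) for m, c in CHANNELS.items()}
out_proj = {m: linear(DIM, PATCH * PATCH * c) for m, c in CHANNELS.items()}
# Placeholder for the shared ViT: one dense layer + tanh (NOT a transformer).
shared = linear(DIM, DIM)


def encode(patches, modality):
    """Project modality-specific patches into the shared space, then encode."""
    w, b = in_proj[modality]
    ws, bs = shared
    return np.tanh((patches @ w + b) @ ws + bs)


def decode(latent, modality):
    """Map shared latents back to the pixel space of the requested modality."""
    w, b = out_proj[modality]
    return latent @ w + b


sar_patches = rng.normal(size=(49, PATCH * PATCH * 2))
z = encode(sar_patches, "sar")
print(z.shape, decode(z, "sar").shape)
```

The design point this illustrates is that only the thin projection layers are modality-specific; every modality passes through the same shared encoder weights, which is what lets a downstream head trained on the shared representation accept RGB, SAR, or both.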

Learning representations for RGB, SAR, and co-registered modalities can benefit a range of downstream tasks, such as content-based image retrieval, classification, segmentation, and detection. To demonstrate the effectiveness of our learned representations, we perform experiments on two well-established benchmarks: 1) multi-label classification of co-registered EO and SAR scenes from the BigEarthNet-MM dataset, and 2) semantic segmentation on the VHR EO and SAR SpaceNet 6 dataset.

Multi-Label Classification on BigEarthNet-MM

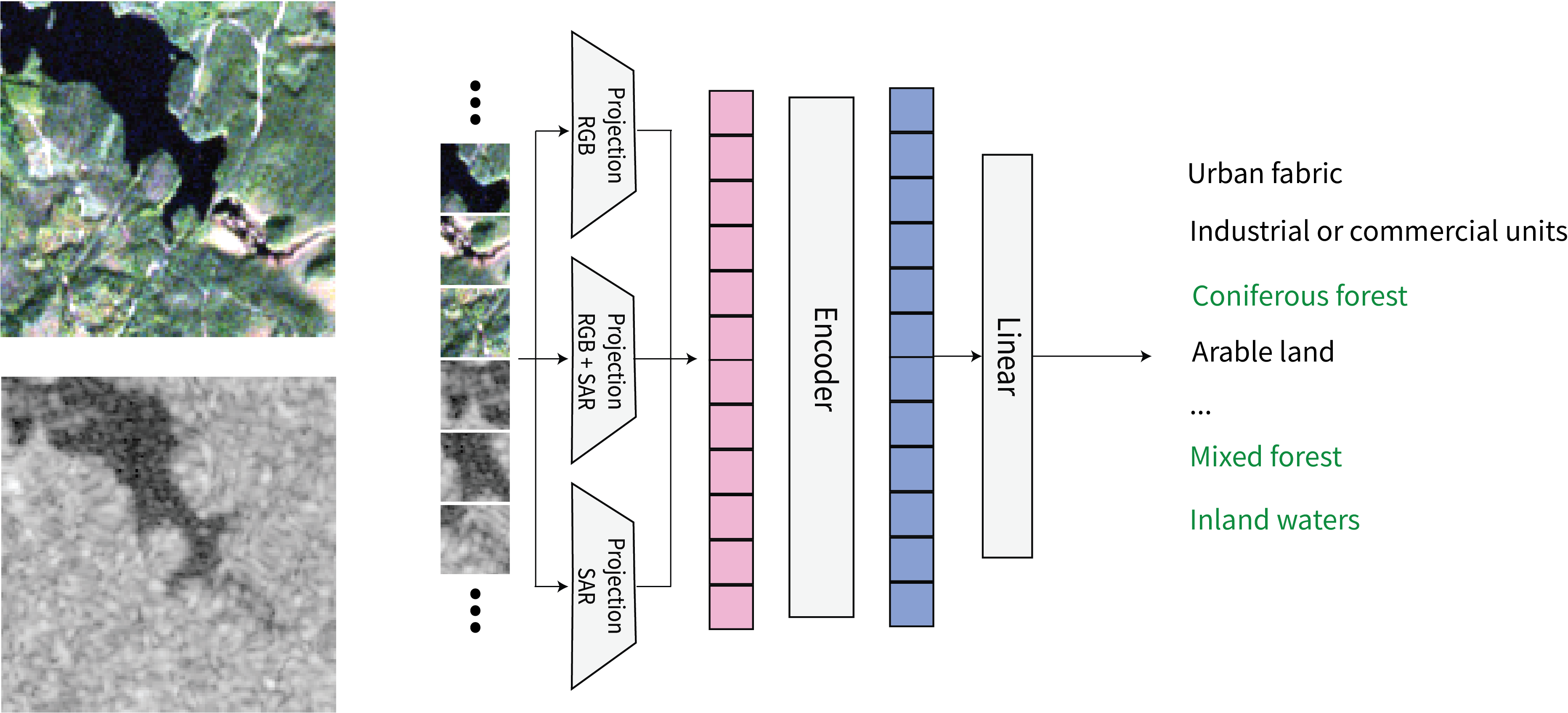

Figure 9: (left) Co-registered Sentinel-2 EO and Sentinel-1 SAR imagery are patchified and used to perform a multi-label classification task as specified by the BigEarthNet-MM challenge. A linear layer is added to our multi-modal encoder, which is then fine-tuned end-to-end.

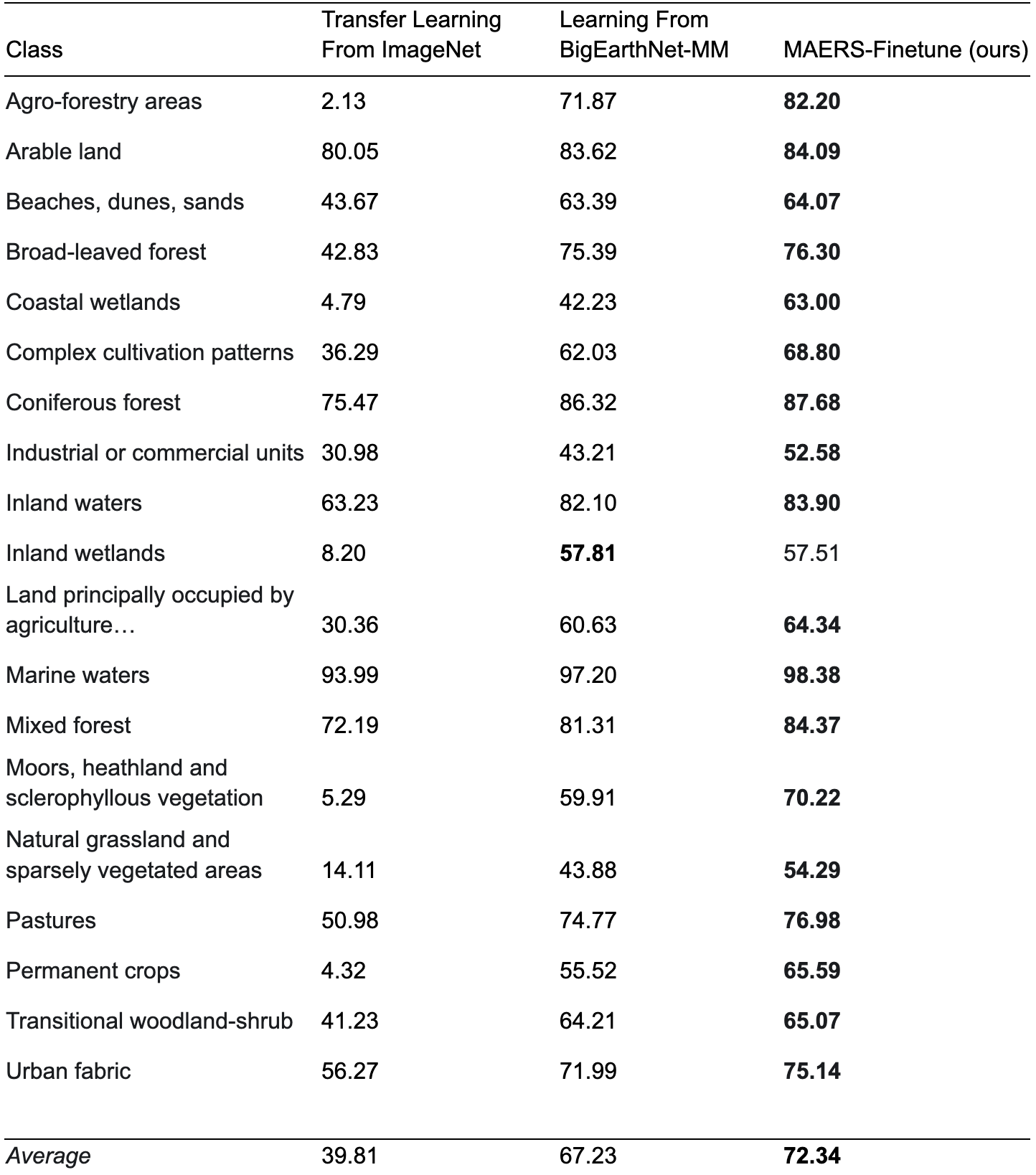

MAERS is initialized with a set of ImageNet weights for a ViT-Base encoder, followed by pretraining on the BigEarthNet-MM dataset for 20 epochs with RGB, SAR, and RGB+SAR imagery. We append a single linear layer to the MAERS encoder and learn the multi-label classification task by fine-tuning the entire model for 20 epochs (linear probing experiments obtain similar results, as we will show in our upcoming paper). Our results are shown in Table 1. MAERS with fine-tuning outperforms the best RGB+SAR results presented in the BigEarthNet-MM paper, showing that adapting the state-of-the-art MAE architecture for representation learning on RGB, SAR, and RGB+SAR input modalities leads to state-of-the-art results.

Table 1: Reported per-class F2 scores on the test set of BigEarthNet-MM.
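For readers less familiar with the metric in Table 1, the sketch below shows a multi-label linear head (an independent sigmoid per class) and the per-class F2 score, F_β = (1 + β²)·P·R / (β²·P + R) with β = 2, which weights recall more heavily than precision. It is an illustrative helper, not the BigEarthNet-MM evaluation code; the 0.5 decision threshold and the zero score for classes with no positives and no predictions are assumptions.

```python
import numpy as np


def multilabel_predict(features, weight, bias, threshold=0.5):
    """Linear head + per-class sigmoid; each label is decided independently."""
    logits = features @ weight + bias
    probs = 1.0 / (1.0 + np.exp(-logits))
    return probs >= threshold


def per_class_fbeta(y_true, y_pred, beta=2.0):
    """Per-class F-beta from boolean (n_samples, n_classes) arrays.

    Uses the count form (1 + b^2) TP / ((1 + b^2) TP + b^2 FN + FP).
    Classes with no positives and no predictions score 0 (an assumption).
    """
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = (y_true & y_pred).sum(axis=0).astype(float)
    fp = (~y_true & y_pred).sum(axis=0).astype(float)
    fn = (y_true & ~y_pred).sum(axis=0).astype(float)
    b2 = beta ** 2
    denom = (1 + b2) * tp + b2 * fn + fp
    return np.divide((1 + b2) * tp, denom, out=np.zeros_like(tp), where=denom > 0)


y_true = [[1, 0], [1, 1], [0, 1]]
y_pred = [[1, 0], [0, 1], [1, 1]]
print(per_class_fbeta(y_true, y_pred))
```

In the toy example, the first class has one true positive, one false positive, and one false negative, giving F2 = 5 / (5 + 4 + 1) = 0.5, while the second class is predicted perfectly.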

Semantic Segmentation on VHR EO and SAR SpaceNet 6

We further experimented with transfer learning for a timely task that can help imagery analysts trying to understand the destruction in Ukraine: semantic segmentation of building footprints, which is a precursor to building damage assessment. Building damage assessment is of direct interest to government officials, journalists, and human rights organizations seeking to understand the scope and severity of Russia's attacks against infrastructure and civilian populations.

Figure 10: Example of SAR-based MAERS building segmentation taken from SpaceNet 6, where the image on the left shows the RGB image, and the image on the right shows the SAR image with overlaid segmentation results. The SAR image is displayed in false color with VV, VH, and VV/VH bands.

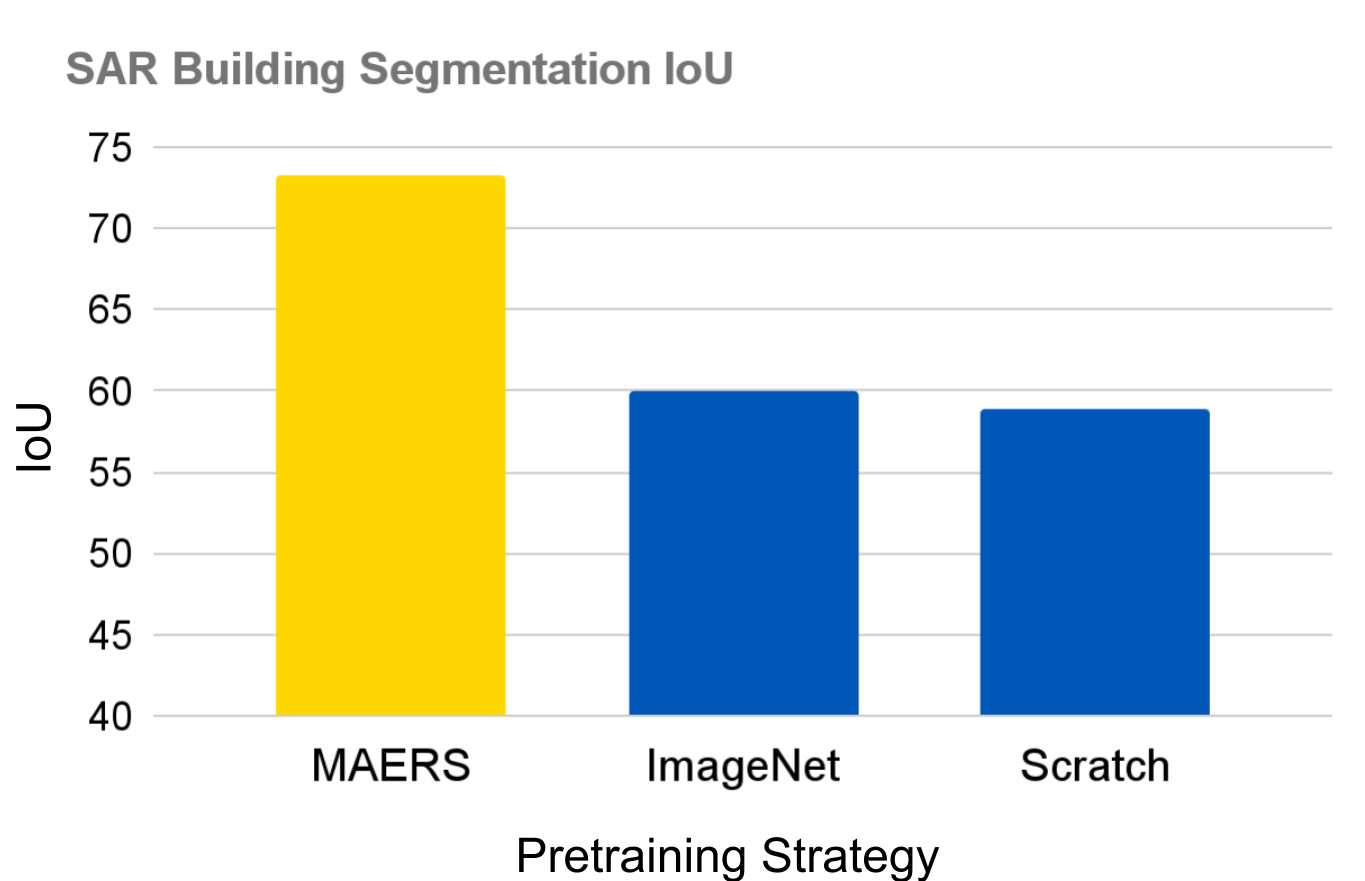

For this experiment, we used the SpaceNet 6 dataset as an open and public benchmark to illustrate the effectiveness of our learned representations for building footprint detection with VHR SAR from Capella Space. We used the MAERS encoder in tandem with the UperNet architecture for semantic segmentation. Figure 11 shows the IoU performance of segmenting building footprints in a held-out validation portion of SpaceNet 6 with only SAR input imagery, using a segmentation model that was trained to use either SAR or RGB imagery. The MAERS pretrained model leads to a ~13 point improvement compared to training the RGB+SAR model from scratch or adapting ImageNet weights with the exact same architecture.

This demonstrates that MAERS can learn robust RGB+SAR representations that allow a practitioner to use EO or SAR imagery interchangeably to accomplish downstream tasks. It is important to note that the phenomenology of SAR imagery is not fully conducive to building segmentation, and that using EO imagery for this task leads to IoU scores > 90. This leaves a substantial gap yet to be closed by SAR methods, something we hope to address in our follow-up paper. However, getting this performance out of SAR is essential when environmental conditions are not conducive to EO imagery capture.

Figure 11: Building segmentation IoU on the SpaceNet 6 Challenge, using an UperNet segmentation model with a ViT backbone. MAERS pretraining leads to a ~13 point gain in IoU compared to training from scratch or adapting ImageNet pretrained weights.
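The post does not spell out which IoU variant underlies Figure 11, so the sketch below simply shows plain pixel-level intersection-over-union for a binary building mask, reported on a 0 to 100 scale to match the "points" used above. Treating two empty masks as perfect agreement is an assumption of this helper, not a SpaceNet 6 convention.

```python
import numpy as np


def binary_iou(pred_mask, true_mask):
    """Pixel-level intersection over union for boolean building masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumption)
    return float(np.logical_and(pred, true).sum() / union)


pred = [[1, 1, 0],
        [0, 1, 0]]
true = [[1, 0, 0],
        [0, 1, 1]]
print(f"IoU = {100 * binary_iou(pred, true):.1f}")
```

Here two pixels overlap out of four pixels covered by either mask, so the IoU is 0.5, or 50 points.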

These results are preliminary, but compelling. We will follow up this effort with a publication containing a detailed set of experiments and benchmarks. Furthermore, we will aid in transitioning our models to our humanitarian partners so that they can perform change detection over residential and other civilian areas, enabling better tracking of war crimes being committed in Ukraine.
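For context on what "change detection" means with SAR, the sketch below shows a classic pixel-wise baseline: the log-ratio of backscatter between two co-registered acquisitions, thresholded in decibels. This is a swapped-in textbook technique named plainly for illustration; it is not the MAERS-based change detection described above, and the 3 dB default threshold is hypothetical.

```python
import numpy as np


def log_ratio_change(before_linear, after_linear, threshold_db=3.0, eps=1e-10):
    """Flag pixels whose backscatter changed by more than threshold_db.

    The log-ratio turns multiplicative speckle into an additive term, which
    is why it is a common SAR change-detection baseline. threshold_db is a
    hypothetical default; real pipelines usually despeckle and tune it first.
    """
    before = np.maximum(np.asarray(before_linear, dtype=float), eps)
    after = np.maximum(np.asarray(after_linear, dtype=float), eps)
    ratio_db = 10.0 * np.log10(after / before)
    return np.abs(ratio_db) > threshold_db, ratio_db


before = np.array([0.1, 0.1, 0.1, 0.1])   # toy co-registered acquisitions
after = np.array([0.1, 0.5, 0.02, 0.12])
changed, ratio_db = log_ratio_change(before, after)
print(ratio_db.round(2), changed)
```

A five-fold increase or decrease in backscatter (about +/-7 dB) is flagged, while a 20% fluctuation (under 1 dB) is not; learned representations aim to go beyond such per-pixel rules by capturing structure that raw intensity ratios miss.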

These models were created with the goal of increasing the efficacy of organizations involved in humanitarian missions that are keeping a watchful eye on the war in Ukraine. However, as with any technology, it is our responsibility to understand how this technology could be misused. Therefore, we have designed these models with input from partners who perform intelligence and imagery analysis in humanitarian settings. By taking into account their thoughts, comments, and critiques, we are releasing a capability we are confident will be used for the good of humanity, with processes that dictate its safe and responsible use.

Call to Action

As citizens of free democracies who develop technologies that help us make sense of the complicated, chaotic, and counterintuitive world we live in, we have a responsibility to act when injustice occurs. Our colleagues and friends in Ukraine are facing extreme uncertainty and danger. We possess skills in the cyber domain that can aid in the fight against Russian forces. By focusing our time and efforts, whether through targeted research or by volunteering our time to help keep track of processing times at border crossings, we can make a small dent in an otherwise difficult situation.

We urge our fellow computer scientists to partner with government and humanitarian organizations and listen to their needs as difficult times persist. Simple things can make large differences.

Model and Weights

The models are not being made publicly available at this time. We are releasing our models to qualified researchers and partners through this form. Full distribution will follow once we have completed a thorough evaluation of our models.

Acknowledgements

Thank you to Gen. Steve Butow and Dr. Nirav Patel of the Department of Defense's Defense Innovation Unit for reviewing this post and providing their expertise on the future of commercial SAR constellations.