
Anomaly detection (AD), the task of distinguishing anomalies from normal data, plays a vital role in many real-world applications, such as detecting faulty products from vision sensors in manufacturing, fraudulent behaviors in financial transactions, or network security threats. Depending on the availability of the type of data, negative (normal) vs. positive (anomalous), and the availability of their labels, the task of AD involves different challenges.


| (a) Fully supervised anomaly detection, (b) normal-only anomaly detection, (c, d, e) semi-supervised anomaly detection, (f) unsupervised anomaly detection. |

While most previous works were shown to be effective for cases with fully-labeled data (either (a) or (b) in the above figure), such settings are less common in practice because labels are particularly tedious to obtain. In most scenarios users have a limited labeling budget, and sometimes there aren't even any labeled samples during training. Furthermore, even when labeled data are available, there could be biases in the way samples are labeled, causing distribution differences. Such real-world data challenges limit the achievable accuracy of prior methods in detecting anomalies.

This post covers two of our recent papers on AD, published in Transactions on Machine Learning Research (TMLR), that address the above challenges in unsupervised and semi-supervised settings. Using data-centric approaches, we show state-of-the-art results in both. In “Self-supervised, Refine, Repeat: Improving Unsupervised Anomaly Detection”, we propose a novel unsupervised AD framework that relies on the principles of self-supervised learning without labels and iterative data refinement based on the agreement of one-class classifier (OCC) outputs. In “SPADE: Semi-supervised Anomaly Detection under Distribution Mismatch”, we propose a novel semi-supervised AD framework that yields robust performance even under distribution mismatch with limited labeled samples.

Unsupervised anomaly detection with SRR: Self-supervised, Refine, Repeat

Discovering a decision boundary for a one-class (normal) distribution (i.e., OCC training) is challenging in fully unsupervised settings, as unlabeled training data include two classes (normal and abnormal). The challenge is further exacerbated as the anomaly ratio of the unlabeled data gets higher. To construct a robust OCC with unlabeled data, it is critical to exclude likely-positive (anomalous) samples from the unlabeled data, a process referred to as data refinement. The refined data, with a lower anomaly ratio, are shown to yield superior anomaly detection models.

SRR first refines data from an unlabeled dataset, then iteratively trains deep representations using refined data while improving the refinement of unlabeled data by excluding likely-positive samples. For data refinement, an ensemble of OCCs is employed, each of which is trained on a disjoint subset of unlabeled training data. If there is consensus among all the OCCs in the ensemble, the data that are predicted to be negative (normal) are included in the refined data. Finally, the refined training data are used to train the final OCC to generate the anomaly predictions.
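The consensus-based refinement step can be illustrated with a minimal sketch. This is not the implementation from the paper: `CentroidOCC` is a hypothetical toy one-class classifier (distance to the training centroid, thresholded at a quantile), and `refine`, `n_models`, and `quantile` are names assumed here for illustration. It shows only the idea of training each OCC on a disjoint subset and keeping the samples that every OCC predicts to be normal.

```python
import math
import random


class CentroidOCC:
    """Toy one-class classifier (an assumption, not the OCC used in the paper)."""

    def __init__(self, quantile=0.8):
        self.quantile = quantile

    def fit(self, points):
        dim = len(points[0])
        self.center = [sum(p[d] for p in points) / len(points) for d in range(dim)]
        dists = sorted(math.dist(p, self.center) for p in points)
        # Samples farther than this quantile of training distances count as anomalous.
        self.threshold = dists[min(len(dists) - 1, int(self.quantile * len(dists)))]
        return self

    def predict_normal(self, point):
        return math.dist(point, self.center) <= self.threshold


def refine(points, n_models=3, quantile=0.8, seed=0):
    """Return indices of points that every OCC in the ensemble predicts as normal."""
    order = list(range(len(points)))
    random.Random(seed).shuffle(order)
    # Disjoint subsets: model k trains on every n_models-th shuffled index.
    subsets = [order[k::n_models] for k in range(n_models)]
    ensemble = [CentroidOCC(quantile).fit([points[i] for i in s]) for s in subsets]
    return [i for i, p in enumerate(points) if all(m.predict_normal(p) for m in ensemble)]


# Synthetic unlabeled data: 190 normal points near the origin, 10 anomalies far away.
rng = random.Random(42)
normal = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(190)]
anomalous = [(rng.uniform(5, 6), rng.uniform(5, 6)) for _ in range(10)]
points = normal + anomalous
is_anomaly = [False] * len(normal) + [True] * len(anomalous)

kept = refine(points)
refined_ratio = sum(is_anomaly[i] for i in kept) / len(kept)
print(f"anomaly ratio: {sum(is_anomaly) / len(points):.3f} -> {refined_ratio:.3f}")
```

In the full framework this step would be repeated as the learned representations improve, and the final OCC would be trained on the refined set; the sketch covers a single refinement pass on fixed features.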


| Training SRR with a data refinement module (OCCs ensemble), representation learner, and final OCC. (Green/red dots represent normal/abnormal samples, respectively). |

SRR results

We conduct extensive experiments across various datasets from different domains, including semantic AD (CIFAR-10, Dog-vs-Cat), real-world manufacturing visual AD (MVTec), and real-world tabular AD benchmarks such as detecting medical (Thyroid) or network security (KDD 1999) anomalies. We consider methods with both shallow (e.g., OC-SVM) and deep (e.g., GOAD, CutPaste) models. Since the anomaly ratio of real-world data can vary, we evaluate models at different anomaly ratios of unlabeled training data and show that SRR significantly boosts AD performance. For example, SRR improves more than 15.0 average precision (AP) with a 10% anomaly ratio compared to a state-of-the-art one-class deep model on CIFAR-10. Similarly, on MVTec, SRR retains solid performance, dropping less than 1.0 AUC with a 10% anomaly ratio, while the best existing OCC drops more than 6.0 AUC. Lastly, on Thyroid (tabular data), SRR outperforms a state-of-the-art one-class classifier by 22.9 F1 score with a 2.5% anomaly ratio.


| Across various domains, SRR (blue line) significantly boosts AD performance with various anomaly ratios in fully unsupervised settings. |

SPADE: Semi-supervised Pseudo-labeler Anomaly Detection with Ensembling

Most semi-supervised learning methods (e.g., FixMatch, VIME) assume that the labeled and unlabeled data come from the same distributions. However, in practice, distribution mismatch commonly occurs, with labeled and unlabeled data coming from different distributions. One such case is positive and unlabeled (PU) or negative and unlabeled (NU) settings, where the distributions between labeled (either positive or negative) and unlabeled (both positive and negative) samples are different. Another cause of distribution shift is additional unlabeled data being gathered after labeling. For example, manufacturing processes may keep evolving, causing the corresponding defects to change and the defect types at labeling to differ from the defect types in unlabeled data. In addition, for applications like financial fraud detection and anti-money laundering, new anomalies can appear after the data labeling process, as criminal behavior may adapt. Lastly, labelers are more confident on easy samples when they label them; thus, easy/difficult samples are more likely to be included in the labeled/unlabeled data. For example, with some crowd-sourcing-based labeling, only the samples with some consensus on the labels (as a measure of confidence) are included in the labeled set.

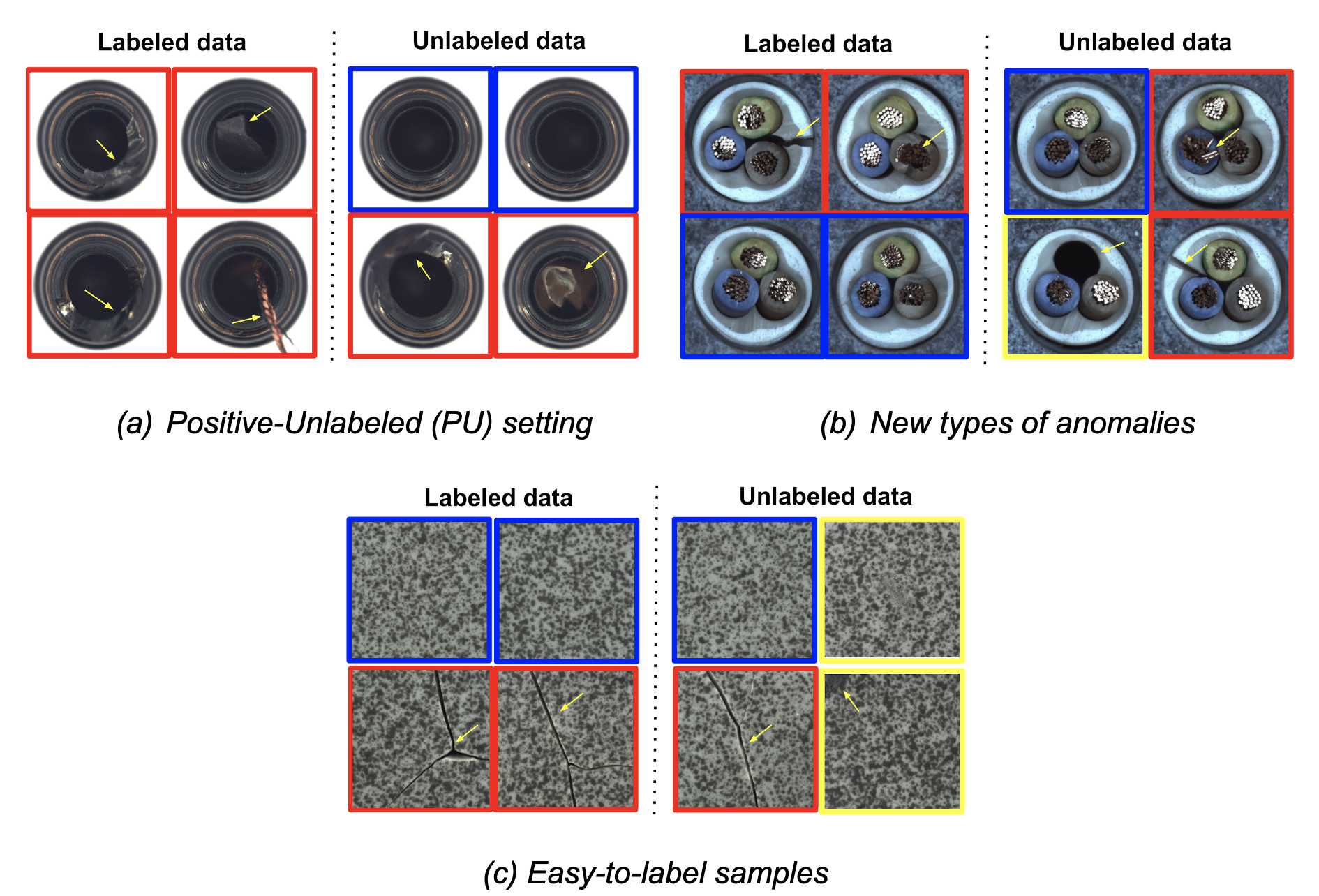


| Three common real-world scenarios with distribution mismatches (blue box: normal samples, red box: known/easy anomaly samples, yellow box: new/difficult anomaly samples). |

Standard semi-supervised learning methods assume that labeled and unlabeled data come from the same distribution, so they are sub-optimal for semi-supervised AD under distribution mismatch. SPADE uses an ensemble of OCCs to estimate the pseudo-labels of the unlabeled data; it does this independently of the given positive labeled data, thus reducing the dependency on the labels. This is especially beneficial when there is a distribution mismatch. In addition, SPADE employs partial matching to automatically select the critical hyper-parameters for pseudo-labeling without relying on labeled validation data, a crucial capability given limited labeled data.
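As a rough illustration of ensemble-based pseudo-labeling, the sketch below assigns a pseudo-label only when every OCC in the ensemble agrees, and leaves the sample unlabeled otherwise. The unanimity rule, the function name `pseudo_label`, and the hand-set thresholds `lower` and `upper` are assumptions made for this example; in SPADE the thresholds are selected by partial matching, which is not reproduced here.

```python
from typing import List, Optional


def pseudo_label(scores: List[float], lower: float, upper: float) -> Optional[int]:
    """Assign a pseudo-label to one sample from per-OCC anomaly scores.

    scores: one anomaly score per OCC in the ensemble (higher = more anomalous).
    lower, upper: hypothetical hand-set thresholds (assumed, not from the paper).
    """
    if all(s >= upper for s in scores):
        return 1  # every OCC finds the sample anomalous: pseudo-positive
    if all(s <= lower for s in scores):
        return 0  # every OCC finds the sample normal: pseudo-negative
    return None  # disagreement or ambiguous scores: sample stays unlabeled


# Three unlabeled samples scored by an ensemble of three OCCs.
labels = [
    pseudo_label([0.90, 0.95, 0.80], lower=0.2, upper=0.7),
    pseudo_label([0.10, 0.05, 0.15], lower=0.2, upper=0.7),
    pseudo_label([0.10, 0.90, 0.50], lower=0.2, upper=0.7),
]
print(labels)
```

Because the labeled positives are never consulted, a rule of this kind does not inherit their bias, which is the property the paragraph above highlights.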


| Block diagram of SPADE, with a zoomed-in view of the detailed block diagram of the proposed pseudo-labelers. |

SPADE results

We conduct extensive experiments to showcase the benefits of SPADE in various real-world settings of semi-supervised learning with distribution mismatch. We consider multiple AD datasets for image (including MVTec) and tabular (including Covertype, Thyroid) data.

SPADE shows state-of-the-art semi-supervised anomaly detection performance across a wide range of scenarios: (i) new types of anomalies, (ii) easy-to-label samples, and (iii) positive-unlabeled examples. As shown below, with new types of anomalies, SPADE outperforms the state-of-the-art alternatives by 5% AUC on average.


| AD performances with three different scenarios across various datasets (Covertype, MVTec, Thyroid) in terms of AUC. Some baselines are only applicable to some scenarios. More results with other baselines and datasets can be found in the paper. |

We also evaluate SPADE on real-world financial fraud detection datasets: Kaggle credit card fraud and Xente fraud detection. For these, anomalies evolve (i.e., their distributions change over time), and to identify evolving anomalies, we need to keep labeling new anomalies and retrain the AD model. However, labeling can be costly and time consuming. Even without additional labeling, SPADE can improve the AD performance using both labeled data and newly-gathered unlabeled data.


| AD performances with time-varying distributions using two real-world fraud detection datasets with 10% labeling ratio. More baselines can be found in the paper. |

As shown above, SPADE consistently outperforms alternatives on both datasets, taking advantage of the unlabeled data and showing robustness to evolving distributions.

Conclusions

AD has a wide range of use cases with significant importance in real-world applications, from detecting security threats in financial systems to identifying faulty behaviors of manufacturing machines.

One challenging and costly aspect of building an AD system is that anomalies are rare and not easily detectable by people. To this end, we have proposed SRR, a canonical AD framework to enable high performance AD without the need for manual labels for training. SRR can be flexibly integrated with any OCC, and applied on raw data or on trainable representations.

Semi-supervised AD is another highly important challenge: in many scenarios, the distributions of labeled and unlabeled samples don't match. SPADE introduces a robust pseudo-labeling mechanism using an ensemble of OCCs and a judicious way of combining supervised and self-supervised learning. In addition, SPADE introduces an efficient approach to pick critical hyperparameters without a validation set, a crucial component for data-efficient AD.

Overall, we demonstrate that SRR and SPADE consistently outperform the alternatives in various scenarios across multiple types of datasets.

Acknowledgements

We gratefully acknowledge the contributions of Kihyuk Sohn, Chun-Liang Li, Chen-Yu Lee, Kyle Ziegler, Nate Yoder, and Tomas Pfister.