
Before a machine-learning model can complete a task, such as identifying cancer in medical images, the model must be trained. Training image classification models typically involves showing the model millions of example images gathered into a massive dataset.

However, using real image data can raise practical and ethical concerns: The images could run afoul of copyright laws, violate people’s privacy, or be biased against a certain racial or ethnic group. To avoid these pitfalls, researchers can use image generation programs to create synthetic data for model training. But these techniques are limited because expert knowledge is often needed to hand-design an image generation program that can create effective training data.

Researchers from MIT, the MIT-IBM Watson AI Lab, and elsewhere took a different approach. Instead of designing customized image generation programs for a particular training task, they gathered a dataset of 21,000 publicly available programs from the internet. Then they used this large collection of basic image generation programs to train a computer vision model.

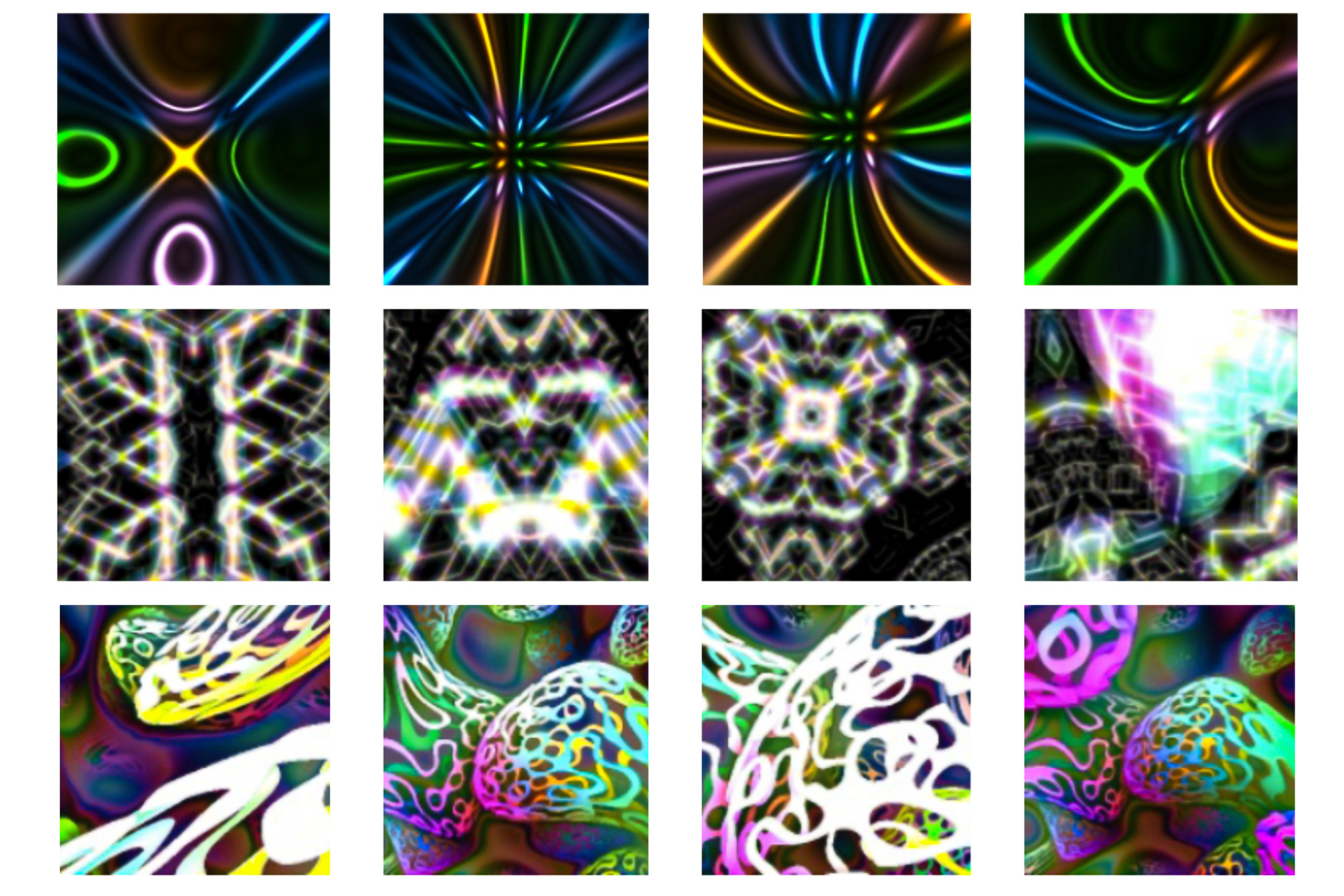

These programs produce diverse images that display simple colors and textures. The researchers didn’t curate or alter the programs, which each comprised just a few lines of code.

The models they trained with this large dataset of programs classified images more accurately than other synthetically trained models. And, while their models underperformed those trained with real data, the researchers showed that increasing the number of image programs in the dataset also increased model performance, revealing a path to attaining higher accuracy.

“It turns out that using lots of programs that are uncurated is actually better than using a small set of programs that people need to manipulate. Data are important, but we have shown that you can go pretty far without real data,” says Manel Baradad, an electrical engineering and computer science (EECS) graduate student working in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the paper describing this technique.

Co-authors include Tongzhou Wang, an EECS grad student in CSAIL; Rogerio Feris, principal scientist and manager at the MIT-IBM Watson AI Lab; Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Computer Science and a member of CSAIL; and senior author Phillip Isola, an associate professor in EECS and CSAIL; along with others at JPMorgan Chase Bank and Xyla, Inc. The research will be presented at the Conference on Neural Information Processing Systems.

Rethinking pretraining

Machine-learning models are typically pretrained, which means they are trained on one dataset first to help them build parameters that can be used to tackle a different task. A model for classifying X-rays might be pretrained using a huge dataset of synthetically generated images before it is trained for its actual task using a much smaller dataset of real X-rays.

These researchers previously showed that they could use a handful of image generation programs to create synthetic data for model pretraining, but the programs needed to be carefully designed so the synthetic images matched up with certain properties of real images. This made the technique difficult to scale up.

In the new work, they used an enormous dataset of uncurated image generation programs instead.

They began by gathering a collection of 21,000 image generation programs from the internet. All the programs are written in a simple programming language and comprise just a few snippets of code, so they generate images rapidly.

“These programs have been designed by developers all over the world to produce images that have some of the properties we are interested in. They produce images that look kind of like abstract art,” Baradad explains.

These simple programs can run so quickly that the researchers didn’t need to produce images in advance to train the model. The researchers found they could generate images and train the model simultaneously, which streamlines the process.

They used their massive dataset of image generation programs to pretrain computer vision models for both supervised and unsupervised image classification tasks. In supervised learning, the image data are labeled, while in unsupervised learning the model learns to categorize images without labels.
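As a rough illustration of generating images while training rather than ahead of time, the sketch below streams batches from a pool of tiny procedural programs. Everything in it is an assumption made for illustration: the two toy programs, the names `stripes`, `radial`, and `stream_batches`, and the use of the program index as a label are hypothetical and are not the researchers' programs or pipeline.

```python
import math
import random
from typing import Callable, Iterator, List, Tuple

Image = List[List[float]]  # grayscale pixels in [0, 1], indexed [row][col]
Program = Callable[[random.Random, int], Image]


def stripes(rng: random.Random, size: int) -> Image:
    """Toy program (hypothetical): vertical sinusoidal stripes."""
    freq = rng.uniform(1.0, 6.0)
    phase = rng.uniform(0.0, 2.0 * math.pi)
    return [
        [0.5 + 0.5 * math.sin(2.0 * math.pi * freq * x / size + phase)
         for x in range(size)]
        for _ in range(size)
    ]


def radial(rng: random.Random, size: int) -> Image:
    """Toy program (hypothetical): radial gradient around a random center."""
    cx, cy = rng.uniform(0, size), rng.uniform(0, size)
    max_dist = math.hypot(size, size)
    return [
        [1.0 - math.hypot(x - cx, y - cy) / max_dist for x in range(size)]
        for y in range(size)
    ]


def stream_batches(
    programs: List[Program], batch_size: int, size: int, seed: int = 0
) -> Iterator[List[Tuple[Image, int]]]:
    """Yield endless batches of (image, program_index), rendered on demand."""
    rng = random.Random(seed)
    while True:
        batch = []
        for _ in range(batch_size):
            idx = rng.randrange(len(programs))
            batch.append((programs[idx](rng, size), idx))
        yield batch


if __name__ == "__main__":
    batches = stream_batches([stripes, radial], batch_size=4, size=8)
    for step in range(3):
        batch = next(batches)
        # A real training loop would run an optimizer step on `batch` here.
        print(step, [label for _, label in batch])
```

Because the generator renders each image only when a batch is requested, nothing is stored on disk in this sketch; that is only practical when the programs are cheap to run, which is the property the article describes.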

Improving accuracy

When they compared their pretrained models to state-of-the-art computer vision models that had been pretrained using synthetic data, their models were more accurate, meaning they put images into the correct categories more often. While the accuracy levels were still lower than those of models trained on real data, their technique narrowed the performance gap between models trained on real data and those trained on synthetic data by 38 percent.
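One common way to read a figure like this is as the fraction of the old gap, between the previous synthetic baseline and the real-data model, that the new method recovers. The sketch below assumes that definition; the function name `gap_reduction` is hypothetical and the accuracy values are made-up placeholders, not results from the paper.

```python
def gap_reduction(real_acc: float, old_synth_acc: float, new_synth_acc: float) -> float:
    """Fraction by which the real-vs-synthetic accuracy gap shrinks."""
    old_gap = real_acc - old_synth_acc
    new_gap = real_acc - new_synth_acc
    if old_gap <= 0:
        raise ValueError("the baseline must trail the real-data model")
    return (old_gap - new_gap) / old_gap


# Placeholder accuracies (percent), chosen only to make the arithmetic easy to follow.
reduction = gap_reduction(real_acc=80.0, old_synth_acc=30.0, new_synth_acc=49.0)
print(f"gap narrowed by {reduction:.0%}")
```

With these placeholder numbers the old gap is 50 points and the new gap is 31 points, so 19 of the 50 points are recovered.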

“Importantly, we show that for the number of programs you collect, performance scales logarithmically. We do not saturate performance, so if we collect more programs, the model would perform even better. So, there is a way to extend our approach,” Baradad says.
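Logarithmic scaling means accuracy grows roughly like a + b * ln(N) in the number of programs N, so each doubling of N adds about the same increment, b * ln(2). The sketch below fits that form by ordinary least squares. The function name `fit_log_curve` is hypothetical, and the data points are generated from an assumed curve rather than taken from the paper.

```python
import math
from typing import List, Tuple


def fit_log_curve(counts: List[int], accs: List[float]) -> Tuple[float, float]:
    """Least-squares fit of acc = a + b * ln(count); returns (a, b)."""
    xs = [math.log(n) for n in counts]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(accs) / len(accs)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accs))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Synthetic placeholder points generated from acc = 10 + 4 * ln(N); not measured results.
counts = [100, 1_000, 10_000, 21_000]
accs = [10.0 + 4.0 * math.log(n) for n in counts]

a, b = fit_log_curve(counts, accs)
gain_per_doubling = b * math.log(2)
print(f"a={a:.2f}, b={b:.2f}, gain per doubling={gain_per_doubling:.2f}")
```

Under such a curve the gains never stop, but they shrink relative to the effort: going from 21,000 to 42,000 programs buys the same increment as going from 100 to 200.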

The researchers also used each individual image generation program for pretraining, in an effort to uncover factors that contribute to model accuracy. They found that when a program generates a more diverse set of images, the model performs better. They also found that colorful images with scenes that fill the entire canvas tend to improve model performance the most.

Now that they have demonstrated the success of this pretraining approach, the researchers want to extend their technique to other types of data, such as multimodal data that include text and images. They also want to continue exploring ways to improve image classification performance.

“There is still a gap to close with models trained on real data. This gives our research a direction that we hope others will follow,” he says.
